Brando Miranda @ ICML 2025

@BrandoHablando

Followers

1K

Following

2K

Media

87

Statuses

1K

CS Ph.D. @Stanford, researching data quality, foundation models, and ML for Theorem Proving. Prev: @MIT, @MIT_CBMM, @IllinoisCS, @IBM. Opinions are mine. 🇲🇽

Stanford, CA

Joined March 2013

RT @allenainie: Come to EXAIT today for our best paper talk at 9:05 and poster session 11:45-2-15! The workshop also has a great set of tal….

0

5

0

@arimorcos this is the workshop paper were we show your takeaways from ICML that target distribution is "all you need" (sorry can't remember how you actually said it! Didn't mean to be cheesy). #ICML2025.

0

0

2

Come to my second poster session about Data centric Machine Learning (DMLR)! . At 209-2010!. #ICML2025

Come to 208-209 ICML data workshop and chat with me about how to use data optimally! Scale isn't everything! . Ask me how to use it beyond post-training ;). - Scale isn’t enough: LLM performance rises with training‑task alignment more than with data volume. - Robust Alignment

1

1

8

RT @_akhaliq: Beyond Scale: the Diversity Coefficient as a Data Quality Metric Demonstrates LLMs are Pre-trained on Formally Diverse Data….

0

12

0

@_akhaliq @_alycialee Joint work with @ObbadElyas Mario Krrish Aryan @sanmikoyejo Me Sudarsan at @stai_research !. Thank you!. 🧵3/3.

1

2

3

We first demonstrated scale isn't enough in our Beyond Scale paper using the diversity coefficient! . thanks for featuring us @_akhaliq !. Work led by @_alycialee et al!. 🧵 2/3.

Beyond Scale: the Diversity Coefficient as a Data Quality Metric Demonstrates LLMs are Pre-trained on Formally Diverse Data. paper page: Current trends to pre-train capable Large Language Models (LLMs) mostly focus on scaling of model and dataset size.

2

3

15

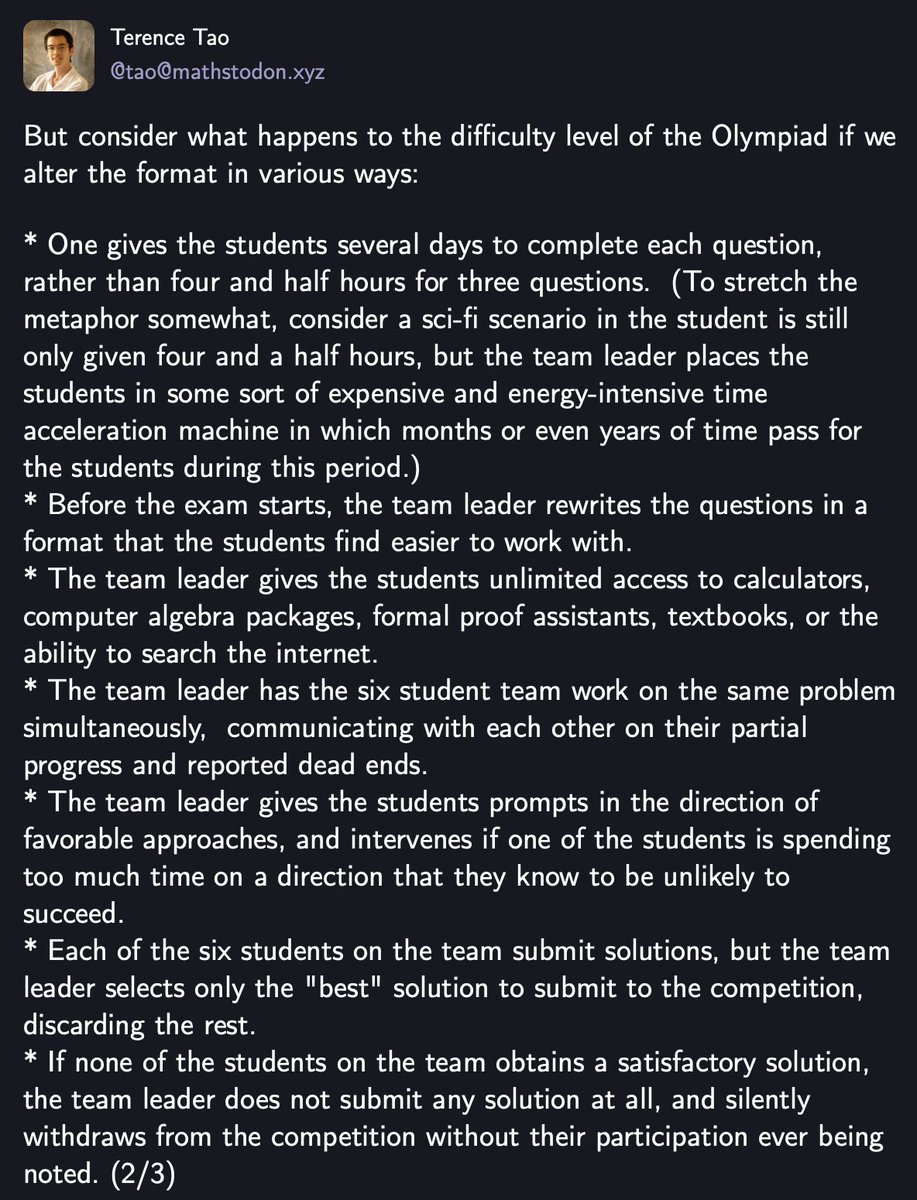

Come to Convention Center West room 208-209 2nd floor to learn about optimal data selection using compression like gzip! . tldr; you can learn much faster if you use gzip compression distances to select data given a task!. DM if you are interested or what to use the code!.

🚨 What’s the best way to select data for fine-tuning LLMs effectively?. 📢Introducing ZIP-FIT—a compression-based data selection framework that outperforms leading baselines, achieving up to 85% faster convergence in cross-entropy loss, and selects data up to 65% faster. 🧵1/8

0

4

7

RT @allenainie: If you missed @wanqiao_xu’s presentation, here are some of our slides! (The workshop will post full slides later on their w….

0

18

0

RT @heeney_luke: Academia must be the only industry where extremely high-skilled PhD students spend much of their time doing low value work….

0

123

0

RT @ai4mathworkshop: It's happening today!.📍Location: West Ballroom C, Vancouver Convention Center.⌚️Time: 8:30 am - 6:00 pm.🎥 Livestream:….

0

11

0

@HenryJamesBosch Picture with Leni! Coauthor of Veribench and long time collaborator!. Main mind behind Pantograph! #TACAS2025

0

1

2

🚶♀️come talk to me live!. Thanks to @HenryJamesBosch for helping set and for the advertisment! :)

1

1

3