RWKV

@RWKV_AI

Followers: 3K · Following: 63 · Media: 18 · Statuses: 70

AI model built by the community, for everyone in this world. Part of the Linux Foundation, Apache 2 licensed. An RNN scaled to 14B params with GPT-level performance.

World

Joined November 2023

#RWKV is One Dev's Journey to Dethrone Transformers. The largest RNN ever (up to 14B). Parallelizable. Fast inference & training. Quantizable. Low VRAM usage. 3+ years of hard work: https://t.co/ebRmfDrQbD Created by @BlinkDL_AI. Computation sponsored by @StabilityAI @AiEleuther

Wrapping up: #RWKV was created by @BlinkDL_AI as a project at @AIEleuther and is now hosted by @LFAIDataFdn. The RWKV wiki can be found at: https://t.co/ebRmfDso1b Our discord can be found at: https://t.co/veH8lO4Kf6 Give the models a try, drop by our discord

discord.com

RWKV Language Model & ChatRWKV | 9668 members

Overall, we are excited by the progress, and we look forward to the step jump in RWKV7-based attention and the upcoming converted 70B models. Once properly tuned and trained, it will serve as a full drop-in replacement for the vast majority of AI workloads today 🪿

This is in addition to our latest candidate RWKV-7 "Goose" 🪿 architecture, which we are excited about, as it shows early signs of a step jump from our v6 Finch 📷 models. A future QRWKV model for v7 is to be expected as well: https://t.co/K1zp2jO76L

RWKV-7 0.1B/0.4B/1.5B trained on the Pile, showing best performance among all models trained on the exact same dataset & tokenizer: https://t.co/6A83VjNVUw All RWKV-7 results are fully replicable and spike-free (I find some architectures unstable)🙂

Or the latest RWKV-6 Finch 7B World v3 model, which provides the strongest multilingual performance at the 7B scale among all our RWKV models: https://t.co/K6F9OhzrDy

blog.rwkv.com

Moar training, moar capable!

While the Q-RWKV-32B Preview takes the crown as our new Frontier RWKV model, it is unfortunately not trained on RWKV's usual 100+ language dataset. For that, refer to the other models, such as the RWKV MoE 37B-A11B v0.1: https://t.co/JWmkgT9K7v

substack.recursal.ai

The largest RWKV MoE model yet!

This RWKV Frontier model was trained by the Recursal AI team by converting a Qwen 32B model with the following process:
- freeze feedforward
- replace QKV attention layers with RWKV6 layers
- train RWKV layers
- unfreeze feedforward & train all layers
https://t.co/YIThic1rXR

substack.recursal.ai

The strongest, and largest RWKV model variant to date: QRWKV6 32B Instruct Preview
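The four conversion steps listed in the post can be sketched as a staged freeze/unfreeze schedule. This is a toy illustration only, not the Recursal AI training code: the `Block` class, the `"mixer"`/`"feedforward"` labels, and both function names are hypothetical, and no real weights or training are involved.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical stand-in for one layer of the source model."""
    mixer: str = "qkv_attention"
    # Which parts of the block would receive gradient updates.
    trainable: dict = field(
        default_factory=lambda: {"mixer": True, "feedforward": True}
    )


def convert_stage_one(blocks):
    """Steps 1-3: freeze feedforward, swap attention for RWKV6, train the new layers."""
    for block in blocks:
        block.trainable["feedforward"] = False  # freeze feedforward
        block.mixer = "rwkv6"                   # replace QKV attention with RWKV6
        block.trainable["mixer"] = True         # only the RWKV layers train
    return blocks


def convert_stage_two(blocks):
    """Step 4: unfreeze feedforward so all layers train together."""
    for block in blocks:
        block.trainable["feedforward"] = True
    return blocks


def trainable_parts(blocks):
    """Sorted names of the parts that are trainable in at least one block."""
    return sorted({name for b in blocks for name, on in b.trainable.items() if on})
```

Calling `convert_stage_one` on a list of blocks leaves only the `"mixer"` part trainable; `convert_stage_two` then restores `"feedforward"` for the final joint training pass.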

Announcing a flock of RWKV models, all arriving with Apache 2 licensing and available today. First up, the strongest and largest linear model to date: QRWKV6-32B-Instruct-Preview. Surpassing previous state space & RWKV models, matching transformer performance with much lower inference

🚀 The RWKV.cpp AI system is being deployed to 0.5 billion installs globally, making it one of the world's most deployed, truly open-source (Apache 2) AI solutions out there, as it ships with every Windows 11 system today!! Our group's code install count went from ~100k -> 0.5B

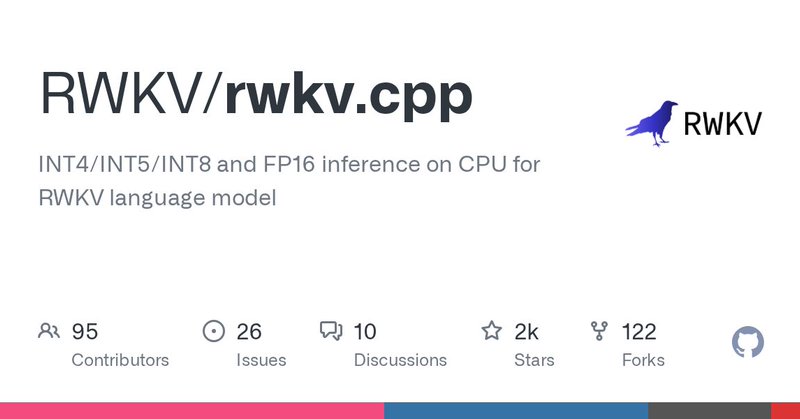

RWKV.cpp project can be found here: https://t.co/XI1dXqlSzd Our RWKV wiki can be found at: https://t.co/ebRmfDso1b Our discord can be found at: https://t.co/veH8lO4Kf6 Give the models a try, drop by our discord, and have fun!

Energy cost is critical for a device that aims for long battery life! Variety of language support is critical for shipping a product to the whole world (beyond English or Chinese). We will eagerly keep watch on this development: https://t.co/ZLAepum3OU

blog.rwkv.com

We went from ~50k installations to 1.5 billion. On every Windows 10 and 11 computer near you (even the ones in the IT store)

What could Microsoft be using this for? Our main guess would be the beta features being tested:
- local copilot
- local memory recall
And it makes sense, especially for our smaller models:
- we support 100+ languages
- we are extremely low in energy cost (vs transformer)

These are real binaries, which you can decompile and inspect. They are a variation of the RWKV.cpp project, which has been built by the community to run RWKV on nearly anything (even Raspberry Pis): https://t.co/XI1dXqlSzd

github.com

INT4/INT5/INT8 and FP16 inference on CPU for RWKV language model - RWKV/rwkv.cpp

You can verify this yourself by updating to the latest Windows 11 and going to C:\Program Files\Microsoft Office\root\vfs\ProgramFilesCommonX64\Microsoft Shared\OFFICE16 Alternatively, you could just search the system files for rwkv on any "copilot Windows 11" machine in an IT store
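For anyone who prefers a script to a manual search, here is a minimal sketch that walks a folder and lists files whose names contain "rwkv". The function name `find_rwkv_files` is hypothetical, and the sketch assumes nothing about which file names are actually present on a given system.

```python
import os


def find_rwkv_files(root):
    """Return sorted paths under `root` whose file name contains 'rwkv' (case-insensitive)."""
    matches = []
    # os.walk silently skips directories it cannot read, which suits system folders.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if "rwkv" in name.lower():
                matches.append(os.path.join(dirpath, name))
    return sorted(matches)
```

On Windows, this could be pointed at the folder named in the post, for example `find_rwkv_files(r"C:\Program Files\Microsoft Office\root\vfs\ProgramFilesCommonX64\Microsoft Shared\OFFICE16")`, and the returned paths printed one per line.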

RWKV.cpp is now being deployed to half a billion systems worldwide, making it one of the world's most widely deployed, truly open-source (Apache 2) AI solutions out there, as it now ships with every Windows 11 system

Wrapping up: #RWKV was created by @BlinkDL_AI as a project at @AIEleuther and is now hosted by @LFAIDataFdn. The RWKV wiki can be found at: https://t.co/ebRmfDrQbD Our discord can be found at: https://t.co/veH8lO4cpy Give the model a try, drop by our discord

As previously covered, this model is upcycled from our v5 model, with an additional 1.4T tokens trained for the conversion process. The RWKV v6 Finch paper covering the architecture can be found here:

arxiv.org

We present Eagle (RWKV-5) and Finch (RWKV-6), sequence models improving upon the RWKV (RWKV-4) architecture. Our architectural design advancements include multi-headed matrix-valued states and a...

In general, this can be viewed as a jump up from the Eagle v5 7B model to the Finch v6 7B or 14B model, potentially serving as a full drop-in replacement for existing RWKV Eagle models across existing tasks like:
- translation
- text summarization
- text/code generation

The RWKV v6 Finch line of models is here, scaling from 1.6B all the way to 14B. Pushing the boundary for an attention-free transformer and multilingual models. Cleanly licensed under Apache 2, under @linuxfoundation. Find out more from the writeup here:

blog.rwkv.com

From 14B, 7B, 3B, 1.6B here are the various RWKV v6 models

Is AI's insatiable appetite for compute holding back innovation? I recently talked with Eugene Cheah about @RWKV_AI. Eugene and the open-source RWKV community are working to democratize AI by addressing some of the key limitations of transformers.

The RWKV community wiki can be found at: https://t.co/ebRmfDso1b Our discord can be found at: https://t.co/veH8lO4Kf6 Give the model a try, drop by our discord, and provide us feedback on how we can improve the model for the community.
