Puyuan Peng

@PuyuanPeng

Followers

2K

Following

819

Media

19

Statuses

307

Research Scientist @Meta Superintelligence Lab. Speech & Audio. Previously @utaustin @uchicago @bnu_1902

New York, USA

Joined December 2019

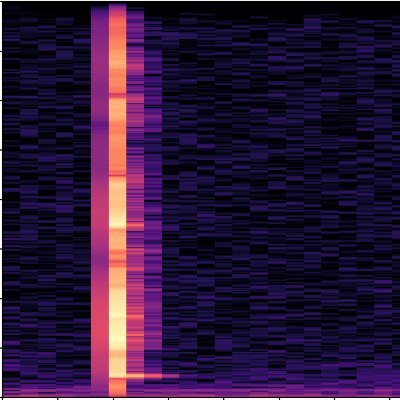

Announcing the new SotA voice-cloning TTS model: 𝗩𝗼𝗶𝗰𝗲𝗦𝘁𝗮𝗿 ⭐️ VoiceStar is - autoregressive, - voice-cloning, - robust, - duration controllable, - *test-time extrapolation*, generates speech longer than training duration! Code&Model: https://t.co/7vxDpnayks

10

61

389

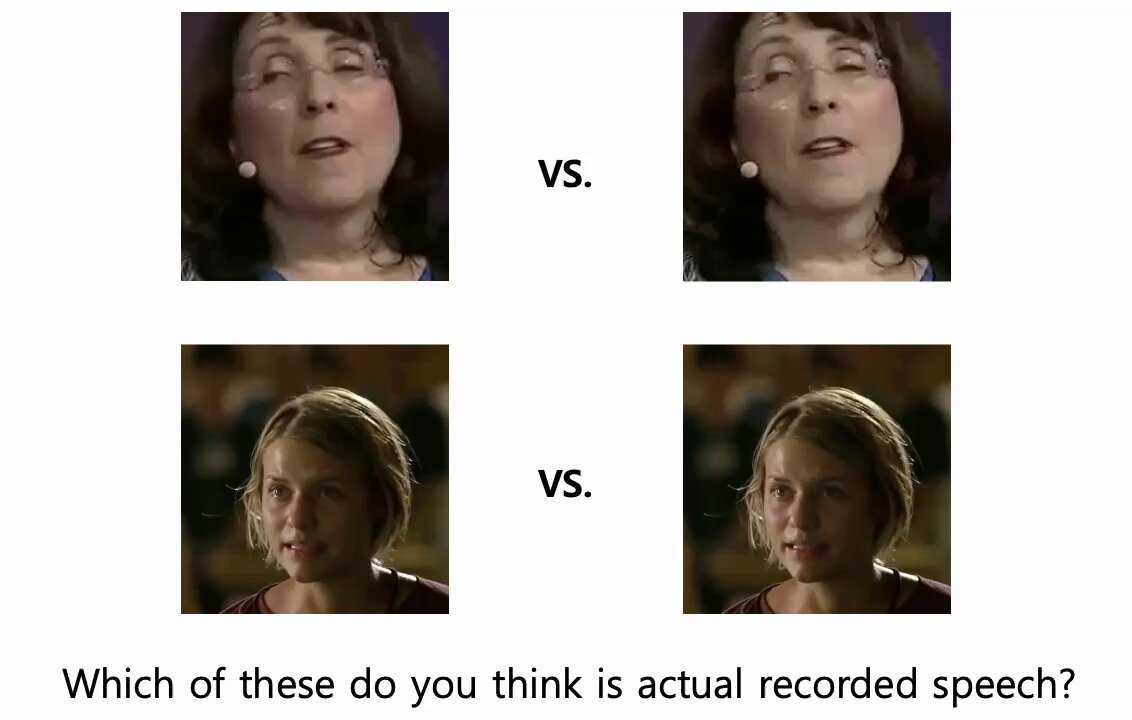

A collaboration work with my student Sungbin Kim and Univ. Texas Austin team will be presented in ICCV 2025.

The work is led by the amazing Sungbin Kim https://t.co/IMN9ZyYhPs, and collaborated with Jeongsoo Choi, Joon Son Chung, @Tae_Hyun_Oh, David Harwath Checkout https://t.co/LEBteX4JSz for more samples, and the forthcoming code and model!

0

1

8

The work is led by the amazing Sungbin Kim https://t.co/IMN9ZyYhPs, and collaborated with Jeongsoo Choi, Joon Son Chung, @Tae_Hyun_Oh, David Harwath Checkout https://t.co/LEBteX4JSz for more samples, and the forthcoming code and model!

sites.google.com

Kim Sung-Bin

0

1

5

Announcing 𝐕𝐨𝐢𝐜𝐞𝐂𝐫𝐚𝐟𝐭-𝐃𝐮𝐛🌟 SotA Voice-Cloning Dubbing Model! Given video of any speaker, and seconds of any reference speech, VoiceCraft-Dub produces lip-synchronized expressive speech for the video, using the reference voice. ICCV 2025: https://t.co/mrWySonzfw

1

1

12

Thanks for featuring VoiceStar, our latest, most powerful TTS (and an upgrade from VoiceCraft last year). Fully open, permissively licensed at

github.com

VoiceStar: Robust, Duration-controllable TTS that can Extrapolate - jasonppy/VoiceStar

The AI landscape is evolving fast, and staying on top of the latest open-source projects is crucial for every developer. 🚀 Swipe to see our list of the top new open-source AI projects on GitHub, from multi-agent systems to composable tools and cutting-edge speech synthesis.

0

0

11

There will be DeepSeek R1 0528 Qwen 3 8B too matching Qwen 3 235B Thinking in performance too 🤯 Whale COOKED!

19

66

691

The paper is out! https://t.co/GikR01dy5S

Announcing the new SotA voice-cloning TTS model: 𝗩𝗼𝗶𝗰𝗲𝗦𝘁𝗮𝗿 ⭐️ VoiceStar is - autoregressive, - voice-cloning, - robust, - duration controllable, - *test-time extrapolation*, generates speech longer than training duration! Code&Model: https://t.co/7vxDpnayks

0

11

60

Introducing ChartMuseum🖼️, testing visual reasoning with diverse real-world charts! ✍🏻Entirely human-written questions by 13 CS researchers 👀Emphasis on visual reasoning – hard to be verbalized via text CoTs 📉Humans reach 93% but 63% from Gemini-2.5-Pro & 38% from Qwen2.5-72B

2

30

76

Extremely excited to announce that I will be joining @UTAustin @UTCompSci in August 2025 as an Assistant Professor! 🎉 I’m looking forward to continuing to develop AI agents that interact/communicate with people, each other, and the multimodal world. I’ll be recruiting PhD

92

65

453

i’m at #chi2025 and i’ll be on the industry job market later this year! i work in human-ai interaction. my prev projects focused on design tools. i love design. i love user interfaces. i trained myself to become an ai engineer to push our tools further. i believe ai is on

0

7

83

You can try yourself using this HuggingFace space, which applies the VoiceCraft codec trained by @PuyuanPeng et al. (5/8) https://t.co/jX7M9Eh0YJ

huggingface.co

1

1

3

As I near the end of my PhD journey, I am excited to share that I will be joining the research efforts @OpenAI, working with @hadisalmanX @aleks_madry and the great team to unlock new capabilities with frontier models. Austin has been one of the best places I have lived in and I

28

6

364

Our incredible team built many models announced here, including image, voice, music and video generation! And: I'm moving to London this summer, and I'm hiring for research scientist and engineering roles! Our focus is on speech & music in Zurich, Paris & London. DM/email me.

Day 1 of #GoogleCloudNext ✅ Here’s a taste of all the things that we announced today across infrastructure, research and models, Vertex AI, and agents → https://t.co/p6EHb0t7D8 Hint: Ironwood TPUs, Gemini on Google Distributed Cloud, Gemini 2.5 Flash, Lyria, and more.

5

6

111

I received a review like this five years ago. It’s probably the right time now to share it with everyone who wrote or got random discouraging reviews from ICML/ACL.

5

38

418

🚨 New paper alert 🚨 Ever struggled with quick saturation or unreliability in benchmark datasets? Introducing SMART Filtering to select high-quality, reducing dataset size by 48% on avg (up to 68% for ARC!) and improving correlation with scores from ChatBot Arena! 📈✨ (1/N)

3

16

95

This project is well on time! Check it out if you are interested in replicating OpenAI’s audio agent

If you'd like an open-source text-to-speech model that follows your style instructions, consider using our ParaSpeechCaps-based model! Model: https://t.co/HCm71MW0aR Paper:

0

0

10

Exciting News!😊INTERSPEECH 2028 will take place at the River Walk in San Antonio, Texas! ✨ I’m honored to serve as one of the General Chairs alongside John Hansen and Carlos Busso @BussoCarlos - We hope you’ll love this city as much as we do! https://t.co/k2dVo7nqdc

0

10

40

Introducing ParaSpeechCaps, our large-scale style captions dataset that enables rich, expressive control for text-to-speech models! Beyond basic pitch or speed controls, our models can generate speech that sounds "guttural", "scared", "whispered" and more; 59 style tags in total.

3

17

75

``Scaling Rich Style-Prompted Text-to-Speech Datasets,'' Anuj Diwan, Zhisheng Zheng, David Harwath, Eunsol Choi,

arxiv.org

We introduce Paralinguistic Speech Captions (ParaSpeechCaps), a large-scale dataset that annotates speech utterances with rich style captions. While rich abstract tags (e.g. guttural, nasal,...

0

3

16