Yonathan Arbel

@ProfArbel

Followers: 2K · Following: 17K · Media: 773 · Statuses: 4K

Let's build safe AI! Law prof @ Alabama. Contracts, Defamation, Legal NLP, & AI Safety

Tuscaloosa, AL

Joined July 2014

Why do workers have to wait 2-4 weeks to be paid in an economy where online transactions clear quickly and securely? A new draft, 𝑃𝑎𝑦𝑑𝑎𝑦 (forthcoming, @WashULaw), proposes that they shouldn't. Daily, or at least weekly, pay can be a reality.

Thinking about LLM psychosis: this is a good reminder that the problem is much older.

RT @petersalib: Enjoying the new @80000Hours interview w/ @fish_kyle3 about AI welfare. One important research question he and Luisa discus….

RT @capricelroberts: Calling all law faculty candidates hoping to teach law starting next fall: more ways to get your application seen by a….

RT @IThinkIAgree: Taking 10% of Intel has four facets worth considering separately because “stock” is really a bundle of rights. First, t….

Can AI create new math? If so, where are all the new discoveries? I can hardly adjudicate the merits of the claims in the thread, but even under this more minimalist view, there appears to be a zone of (a) new math discoveries (b) that aren't that hard to solve once the…

This is really exciting and impressive, and this stuff is in my area of mathematics research (convex optimization). I have a nuanced take. 🧵 (1/9).

It's just the first study. Much more work is needed to confirm these results and to evaluate their consistency and reliability. It's not clear which aspects of model training contribute most (RLHF?).

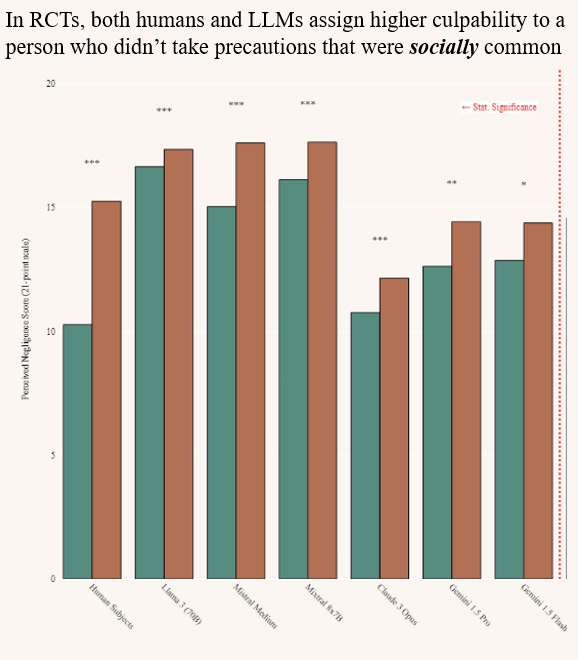

This, alongside other results, is consistent with LLMs having picked up something deep about reasonableness. If true, that would enable building tools for legal and agent applications, with important AI safety implications. But…

Findings: humans judge people harshly when they didn't take the socially common level of precautions, even though the textbook account says that shouldn't matter. The textbook does say people should care about the cost of precautions, but humans don't care much. LLMs repeat this exact pattern in RCTs (though not all).

To test whether LLMs may have picked up the deeper structure of reasonableness judgment (not just parroting), I offer a new methodology: Silicon RCTs (S-RCTs). Randomize a relevant fact, run session-isolated “participants,” & compare LLM deltas to human deltas.
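A minimal Python sketch of the S-RCT comparison described above. It assumes a hypothetical `ask(vignette, participant_id)` function that opens a fresh, session-isolated model conversation per call and returns a numeric rating; `toy_ask`, the driver vignettes, the 1-7 scale, and the human delta of 1.5 are illustrative placeholders, not materials or data from the draft.

```python
import random
import statistics
from typing import Callable, Dict

# Hypothetical model interface: ask(vignette, participant_id) -> numeric rating.
# In a real S-RCT, each call would open a fresh, session-isolated conversation.
AskFn = Callable[[str, int], float]


def silicon_rct(ask: AskFn, control: str, treatment: str,
                n_per_arm: int, seed: int = 0) -> float:
    """Randomly assign participants to two vignettes that differ in one fact.

    Returns the treatment-minus-control difference in mean ratings (the "delta").
    """
    rng = random.Random(seed)
    ids = list(range(2 * n_per_arm))
    rng.shuffle(ids)  # random assignment of participant IDs to the two arms
    control_ratings = [ask(control, pid) for pid in ids[:n_per_arm]]
    treatment_ratings = [ask(treatment, pid) for pid in ids[n_per_arm:]]
    return statistics.mean(treatment_ratings) - statistics.mean(control_ratings)


def compare_deltas(llm_delta: float, human_delta: float) -> Dict[str, object]:
    """Check whether the manipulated fact moves LLM ratings the way it moves human ones."""
    return {
        "same_direction": llm_delta * human_delta > 0,
        "gap": llm_delta - human_delta,
    }


def toy_ask(vignette: str, participant_id: int) -> float:
    """Deterministic stand-in for a model call (hypothetical, not a real model).

    Rates blame on a 1-7 scale, higher when the usual precautions were skipped.
    """
    return 5.0 if "did not take the usual precautions" in vignette else 3.0


if __name__ == "__main__":
    delta = silicon_rct(
        toy_ask,
        control="The driver took the usual precautions. How blameworthy is the driver (1-7)?",
        treatment="The driver did not take the usual precautions. How blameworthy is the driver (1-7)?",
        n_per_arm=20,
    )
    print(delta)  # 2.0
    # human_delta is a placeholder, not a result from the draft
    print(compare_deltas(delta, human_delta=1.5))  # {'same_direction': True, 'gap': 0.5}
```

Comparing deltas rather than raw ratings is one way to ask whether the randomized fact shifts LLM judgments in the same direction, and by a similar amount, as it shifts human judgments, instead of asking whether the absolute ratings match.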

A note for CS friends: a large share of law isn't if-then rules; it's open-textured standards like “reasonable,” “ordinary meaning,” and “undue burden.” But our instruments are thin. Expert intuitions (judges') are criticized as elite, out of touch, and crypto-political; juries…

Two hard problems meet here:
(a) courts must infer what ordinary people will reasonably think (e.g., would a teenager read the Pepsi jet as an offer?);
(b) we want AI agents to follow open-textured norms (“keep a reasonable distance”).

Reasonableness quietly structures daily life: following distance in traffic, how loud a house party can be, what “up to 50% longer” implies, and whether a Pepsi ad offering a jet is a joke. These judgments are fast, intuitive, and hard to explain. In other words: they are…

🚨🚨🚨 Can judges really know what “reasonable people” think? Can AI help bridge that gap, and can AI agents themselves behave “reasonably” in the wild? A new draft, The Silicon Reasonable Person, asks these questions. tl;dr: early signs point to yes, within careful limits.

Some thoughts on how I update on GPT-5. tl;dr: it suggests longer timelines. I don't think there is anything magical about "5" per se; they could have gotten away with calling o3 "5". So I don't inherently base my view of the landscape on what "5" does or does not do. But…

A terrific workshop, and an opportunity to present work on The Silicon Reasonable Person and AGI governance.

Did we all just quietly decide ten years ago that email had reached its final form? Surely someone could come up with a better attachment system, calendar integration, thread organization, working sound notifications for Gmail, a less hellish version of Outlook, and sane search.

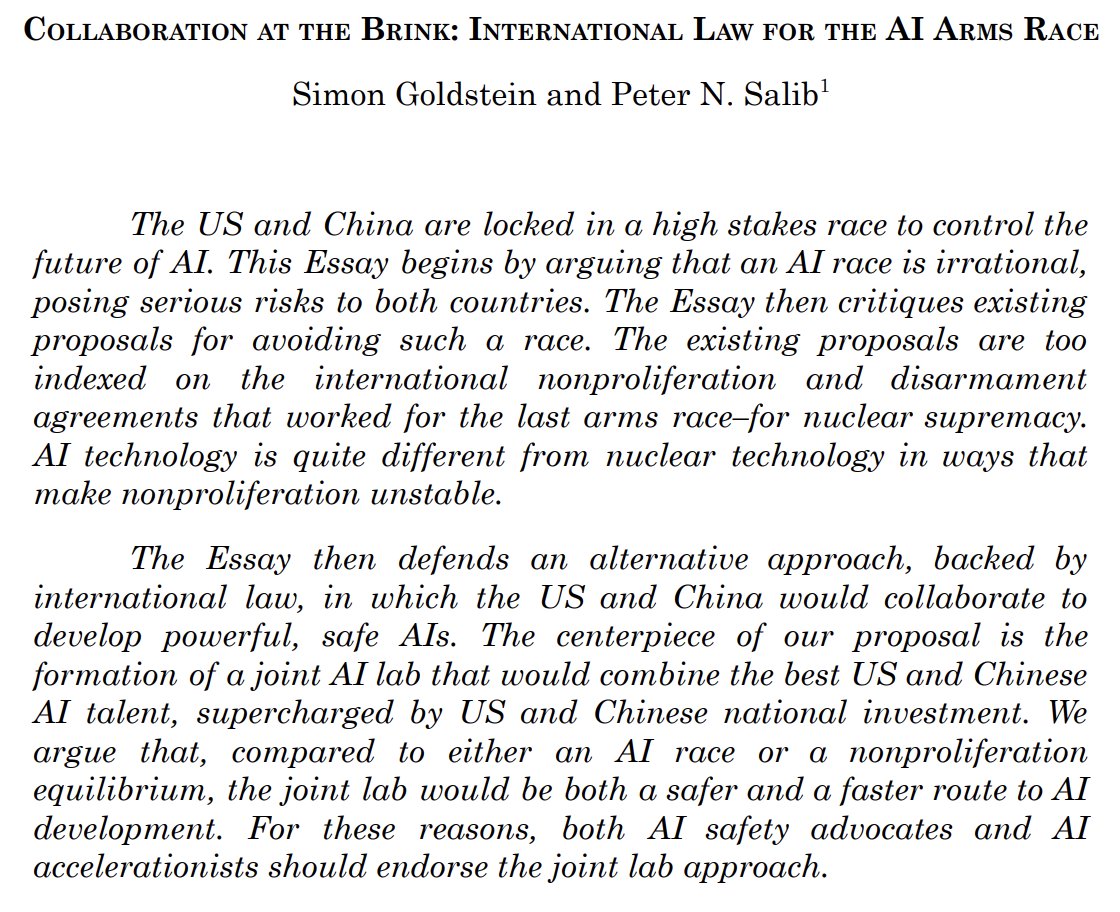

I remember thinking at first that this was a non-starter, pie-in-the-sky proposal. But I now think it is not only feasible but quite likely in certain future branches of AI development.

The White House's AI Action Plan has some good stuff. But it begins, "The US is in a race to achieve global dominance in AI." Like many, @simondgoldstein and I think an AI arms race with China is a mistake. Our new paper lays out a novel game-theoretic approach to avoiding the race.
