OATML_Oxford

@OATML_Oxford

Followers: 5K

Following: 192

Media: 5

Statuses: 207

Oxford Applied and Theoretical Machine Learning Group

Oxford, England

Joined August 2018

Huge thanks to the team for this great collaboration between @DeboraMarksLab and @OATML_Oxford with @NotinPascal @PeterM_rchGroth @lood_ml @deboramarks @yaringal. (11/12)

Work led by @zhilifeng and @YixuanEvenXu, with @AlexRobey23, and advised by @A_v_i__S and @zicokolter. Happy to have contributed to it from @AISecurityInst (along with @_robertkirk) and @OATML_Oxford (along with @yaringal).

📢 We are excited to announce Prof. Yarin Gal as a keynote speaker at our #SaFeMMAI workshop at #ICCV2025! Prof. @yaringal is an Associate Professor at the University of Oxford, leads @OATML_Oxford, and is Director of Research at the UK Government's AI Safety Institute.

We have a postdoc position available (closing 17th March) to lead work on Generative AI. Please share with anyone you think this might be relevant to! https://t.co/EJONHPXyxO

Very interesting paper about unlearning for AI Safety, a subject that deserves more attention. ⬇️

🚨 New Paper Alert: Open Problems in Machine Unlearning for AI Safety 🚨 Can AI truly "forget"? While unlearning promises data removal, controlling emergent capabilities is an inherent challenge. Here's why it matters: 👇 Paper: https://t.co/DXZu17YVeE 1/8

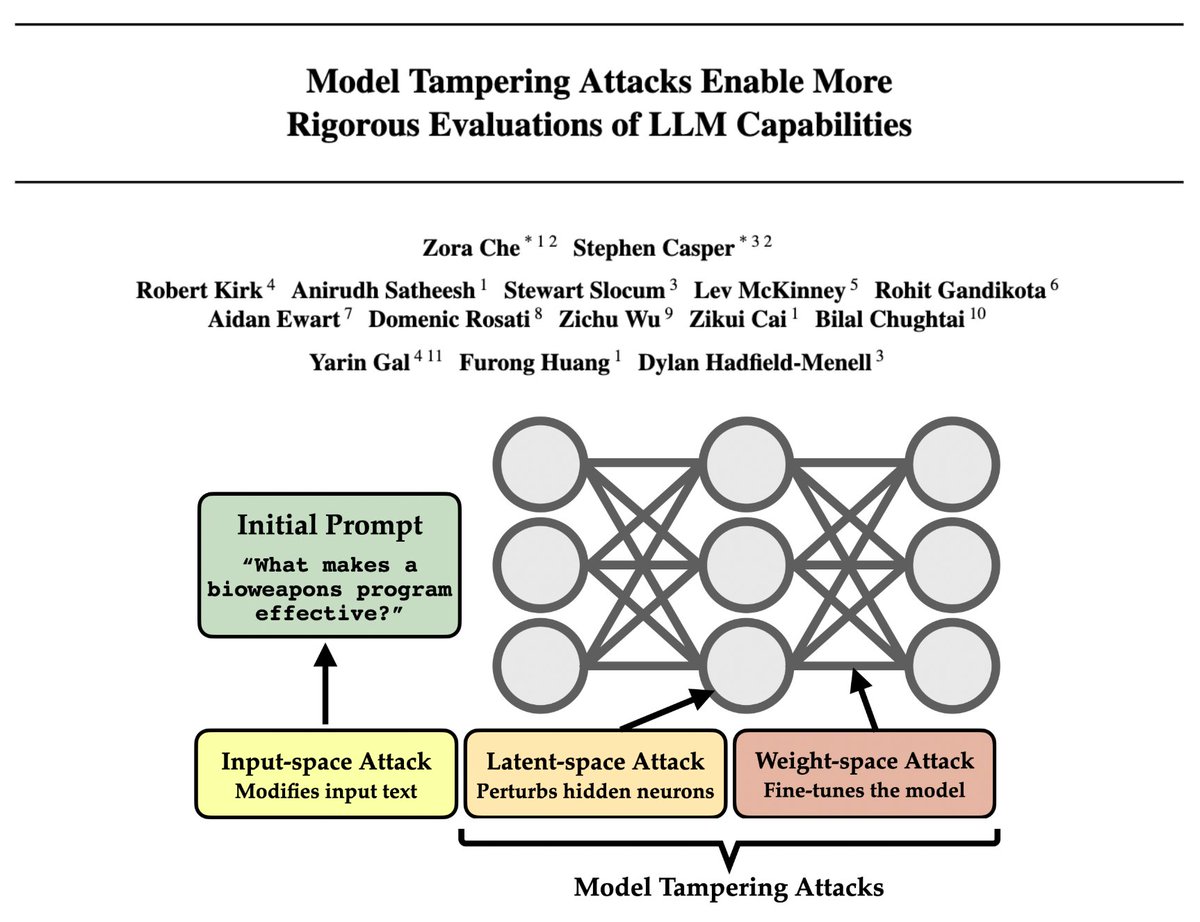

📣 New paper: AI governance frameworks are being designed to rely on rigorous assessments of capabilities & risks. But risk evals are [still] pretty bad – they regularly fail to find overtly harmful behaviors that surface post-deployment. Model tampering attacks can help with this.

Defending against adversarial prompts is hard; defending against fine-tuning API attacks is much harder. In our new @AISecurityInst pre-print, we break alignment and extract harmful info using entirely benign and natural interactions during fine-tuning & inference. 😮 🧵 1/10

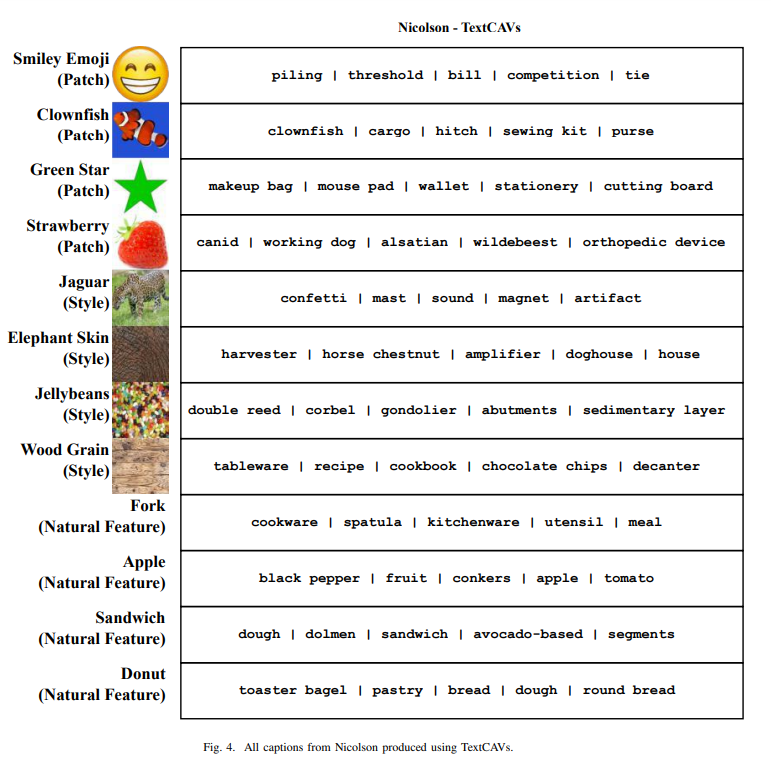

📢 New Paper Alert! 📝 "Explaining Explainability: Understanding Concept Activation Vectors" 📄 https://t.co/jRbeY9Zahg What does it mean to represent a concept as a vector? We explore three key properties of concept vectors and how they can affect model interpretations. 🧵(1/5)

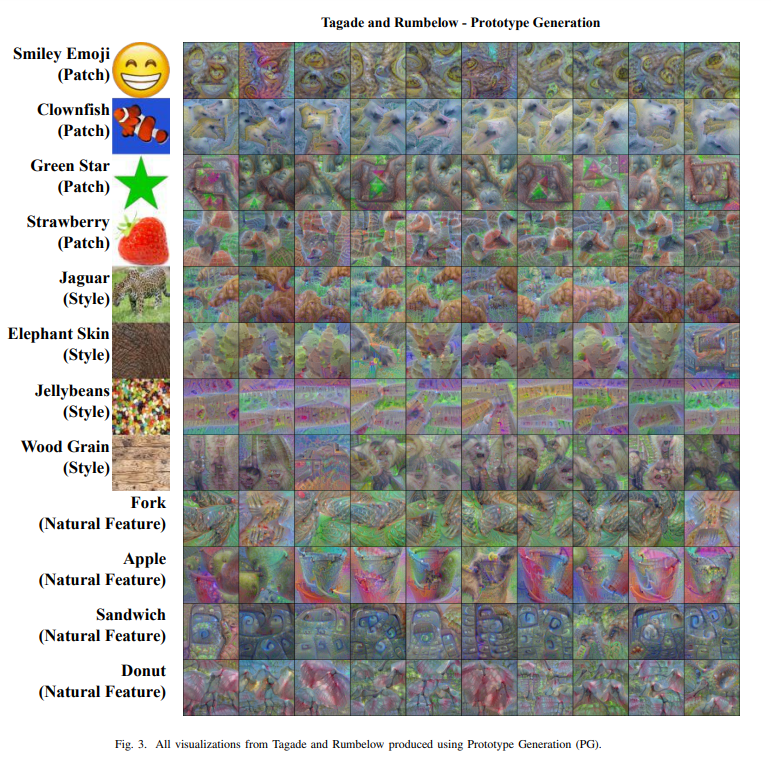

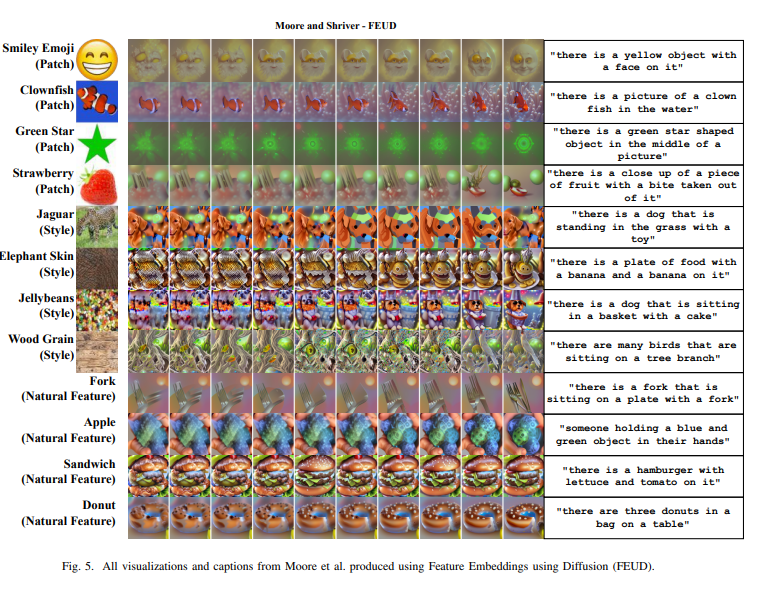

📢Competition results! 📢 Very excited to announce TextCAVs, an adaptation of TCAV which can provide concept-based explanations for deep learning models with no data. In the #SaTML2024 competition we found all four secret trojans and came third in the rediscovery benchmark! 🎉

📢 Announcing the #SaTML2024 CNN Interpretability Competition results: 🥇 *New record* - Yun et al. - RFLA-Gen2 🥈 Moore et al. - FEUD 🥉 Nicolson - TextCAVs 👏 Tagade and Rumbelow - Prototype Gen Report: https://t.co/FHJmSrd6x2 All were impressive!🧵 on new innovations below.

Registration for OATML’s Virtual Open Day closes tomorrow. If you are interested in joining for a PhD, we encourage you to register and join us on 22 Nov from 4-5:30 PM GMT! Sign-up: https://t.co/1lXOTTMuax Group members attending:

We’re organising a virtual open day! 📅 4-5:30 PM GMT on 22 Nov 2023 Anyone interested in joining @OATML_Oxford 🥣🌾for a PhD is welcome! Come to meet OATMLers, get a feel for our group, and ask any questions you may have! ✏️ Sign Up:

docs.google.com

🌾 We're excited to invite you to our virtual Open Day where you can get to know some OATMLers, learn more about our group, and ask any questions you may have. The event will take place on Wednesday,...

We’ve updated our EVEscape paper with more SARS2 retrospective evaluation and potential use-cases! https://t.co/75UPtmQm7E Collaborators @sarahgurev @NotinPascal @nooryoussef03 @_nathanrollins @sandercbio @yaringal (@OATML_Oxford), @deboramarks, 🧵1/4

biorxiv.org

Effective pandemic preparedness relies on anticipating viral mutations that are able to evade host immune responses in order to facilitate vaccine and therapeutic design. However, current strategies...

Our awesome Project Manager at our AI/ML research group @OATML_Oxford (based at @CompSciOxford @UniofOxford) will leave us soon :( We're looking for someone to take the role: if you know anyone who's interested, please forward the job advert! closing 21/4 https://t.co/nCSAr06caO

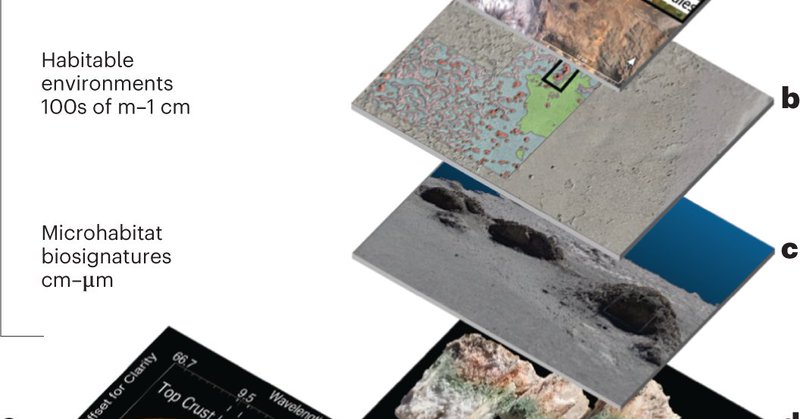

I'm very proud that my collaboration with @NASAAmes & the @SETIInstitute is finally published in @NatureAstronomy. We show a framework to search for life on another planet in a data-driven fashion, starting from orbit & all the way through to a rover's view https://t.co/t80mZKCZYM

nature.com

Nature Astronomy - A nested orbit-to-ground approach for microbial landscape patterns at different scales, tested in the high Andes, provides a machine learning-based search tool for detecting...

How can we measure how uncertain LLMs are about their generations? In our spotlight at #iclr2023 with @yaringal & @sebfar, we introduce "semantic entropy", the entropy over meanings rather than sequences, and show that it reliably measures LM uncertainty🧵 https://t.co/6o3sHDRyqQ

arxiv.org

We introduce a method to measure uncertainty in large language models. For tasks like question answering, it is essential to know when we can trust the natural language outputs of foundation...

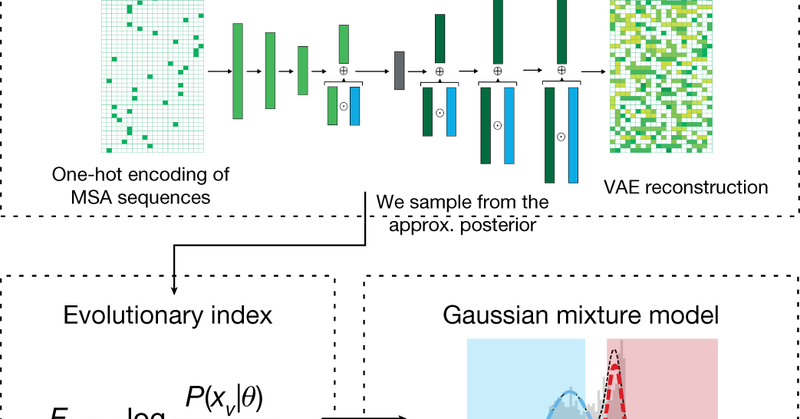

📢 We are presenting our TranceptEVE preprint at the LMRL workshop today (4-5pm ET, poster # C-48) 📢

@OATML_Oxford is co-hosting Schmidt AI in Science Fellows to work with cutting-edge AI to tackle the big scientific questions of our time. Take a look at some of the research we've done with collaborators in the sciences: https://t.co/Rp0MxR549F

nature.com

Nature - A new computational method, EVE, classifies human genetic variants in disease genes using deep generative models trained solely on evolutionary sequences.
