Normal Computing 🧠🌡️

@NormalComputing

Followers: 4K

Following: 2K

Media: 79

Statuses: 425

We build AI systems that natively reason, so they can partner with us on our most important problems. Join us https://t.co/BcjWCoI5b8.

New York, NY

Joined June 2022

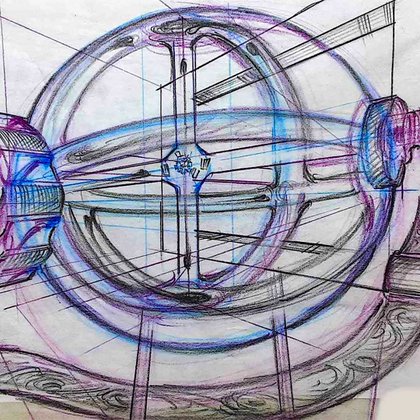

In June, we taped out CN101, the world’s first thermodynamic computing chip. We’re now sharing early bring-up results from the first thermodynamic ASIC, showing how a physics-based approach can enable stochastic, stateful, and asynchronous computation directly in silicon.

Proud to be included in @ARIA_research 2025 lookback as part of the Scaling Compute programme. This year, we taped out the world’s first thermodynamic computing chip, realizing this computing approach in silicon for the first time. ARIA’s support made it possible to pursue a

aria.org.uk

From gaining momentum in our first seven programmes and hosting our first Summit, to growing our portfolio of opportunity spaces with our new Programme Directors, we’re reflecting on ARIA’s 2025.

@amazon @studiokoto @NormalComputing by Company Policy. All AI brands look the same? Not this one. This is B2B with swagger. The name. The brash red. The sophisticated design system. The just-the-right-side-of-arrogant key line: “when the future of AI arrives, it will be Normal.”

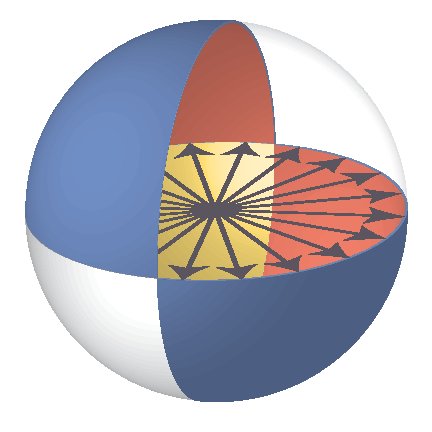

@gavincrooks from @NormalComputing taking the #FPI stage at #Neurips2025. ⚛️💻 Why spend massive energy simulating probabilistic sampling on deterministic GPUs when we can build hardware that is natively probabilistic?

We are pleased to announce that our CEO, @FarisSbahi, will be presenting on behalf of @NormalComputing at the @ARIA_research stand at #NeurIPS2025. He will be speaking today at 19:00 at booth 1343. Faris will share our latest R&D work with ARIA, including how our CN101

Inbound to NeurIPS @ San Diego. @FarisSbahi will be speaking about @NormalComputing at the ARIA/UK DBT stand at the expo, Tuesday at 19:00. (We're hiring!) I'm speaking on Sunday at 13:15, Frontiers in Probabilistic Inference workshop. Let's do this thing.

Found a bug with this tool just recently, can recommend. The ability to drill down into your LLM traces easily is quite useful. When you treat traces like training data, you may need to view them in a different way.

AI for hardware goes *much* deeper than AI for software.

OK super narrow hiring post for a high-prio role (actually, there may not be anyone in the world who fits this) but if you: - have some familiarity with both RL+agents, and have gone deep on at least one - have experience with hardware engineering, device verification in

Everyone knows RLHF and RLVR, but do you know the 17 other RLXX methods published in papers and blog posts? Here's a condensed list:

Diffusion for everything! We share a recipe to start from a pretrained autoregressive VLM and, with very little training compute and some nice annealing tricks, turn it into a SOTA diffusion VLM. Research in diffusion for language is progressing very quickly and in my mind,

Today we're sharing our first research work exploring diffusion for language models: Autoregressive-to-Diffusion Vision Language Models We develop a state-of-the-art diffusion vision language model, Autoregressive-to-Diffusion (A2D), by adapting an existing autoregressive vision

We’re pleased to announce that @NormalComputing, alongside the John Templeton Foundation, the State of Maryland’s Capital of Quantum Initiative, University of Maryland partners, and @Fidelity Investments, has pledged hands-on support to the Maryland Quantum-Thermodynamics Hub

More than $5 million in new funding from several private companies—including @Fidelity and @NormalComputing—will expand Maryland’s Quantum-Thermodynamics Hub, co-led by Nicole Yunger Halpern (@nicoleyh11), and support it for three more years. Read more: https://t.co/wuptEMTDLP

Live from #AIInfraSummit: Maxim Khomiakov (@maximkhv) is on the Demo Stage presenting “Normal EDA: AI-Native Verification Without the Rework.” Mission-critical design verification is fragmented and manual. Humans (and LLMs) are struggling to tame the mathematical complexity of

It’s been an incredible couple of days at #AIInfraSummit so far. If you haven’t yet, swing by before the end of the summit and chat with our team on how AI is transforming chip design and verification. See the demo today at 1:15–1:30pm PDT on the Demo Stage.

LRW helps to enable the workloads Normal ASICs target: diffusion models, probabilistic AI, and scientific simulations. It’s a core algorithmic step toward more AI per watt, rack, and dollar, starting with the on-chip LRW implementation in Normal Computing’s CN101, taped out in June

arxiv.org

We introduce a lattice random walk discretisation scheme for stochastic differential equations (SDEs) that samples binary or ternary increments at each step, suppressing complex drift and...
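
For readers who want a feel for what "binary increments" can mean, here is a minimal sketch of a generic, textbook-style biased random walk on a lattice. It is not taken from the paper and may differ from the scheme the authors propose. The function name `lrw_simulate`, the lattice spacing `sigma * sqrt(dt)`, the probability clipping, and the Ornstein-Uhlenbeck test drift are all assumptions made for illustration.

```python
import math
import random


def lrw_simulate(drift, sigma, x0, dt, n_steps, rng):
    """Simulate dX = drift(X) dt + sigma dW using only +/- lattice steps.

    Illustrative sketch only: a generic biased random walk, not the
    paper's scheme. No Gaussian samples are drawn.
    """
    dx = sigma * math.sqrt(dt)  # lattice spacing, so a step has variance ~ sigma^2 * dt
    x = x0
    for _ in range(n_steps):
        # Bias the coin so the expected increment equals drift(x) * dt.
        p_up = 0.5 * (1.0 + drift(x) * dt / dx)
        # Clip to a valid probability (an assumption of this sketch).
        p_up = min(1.0, max(0.0, p_up))
        x += dx if rng.random() < p_up else -dx
    return x


def ou_drift(x):
    """Hypothetical test drift: Ornstein-Uhlenbeck, dX = -X dt + sigma dW."""
    return -x


rng = random.Random(0)
samples = [
    lrw_simulate(ou_drift, sigma=1.0, x0=0.0, dt=0.01, n_steps=500, rng=rng)
    for _ in range(2000)
]
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
```

With these assumed parameters the sample variance should land near the Ornstein-Uhlenbeck stationary value of sigma^2 / 2, while every sample stays on a lattice of spacing `dx`.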

To summarize, in this paper we - develop a new lattice random walk discretisation for SDEs, - eliminate Gaussian sampling + reduce precision overhead, - demonstrate robustness to quantisation and stability for non-Lipschitz drifts, and - validate scalability on state-of-the-art

Simulating stochastic differential equations (SDEs) is at the heart of modern machine learning, from diffusion models that power state-of-the-art generative AI to finance and physics. But standard methods like Euler–Maruyama assume infinite precision, require Gaussian sampling,

We are excited to announce our new paper on arXiv: Lattice Random Walk (LRW) discretisations of stochastic differential equations (SDEs) by Samuel Duffield (@Sam_Duffield), Maxwell Aifer (@MaxAifer), Denis Melanson, Zach Belateche (@blip_tm), and Patrick J. Coles
