Marco Mascorro

@Mascobot

Followers: 16K · Following: 13K · Media: 748 · Statuses: 6K

Partner @a16z (investor in @cursor_ai, @thinkymachines, @bfl_ml, @WaveFormsAI & more) | Roboticist | Cofounder @Fellow_AI | @MIT 35 under 35 | Opinions my own.

San Francisco, CA

Joined October 2009

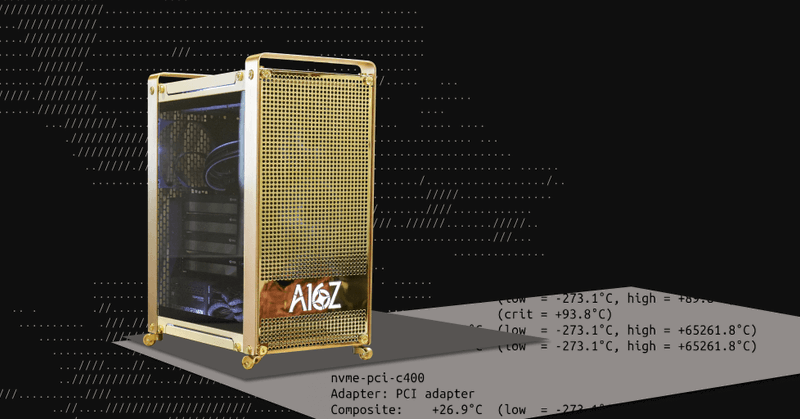

🚨 New: We built @a16z's personal GPU AI Workstation Founders Edition.

- 4x NVIDIA RTX 6000 PRO Blackwell Max-Q (384GB total VRAM).
- 8TB of NVMe PCIe 5.0 storage.
- AMD Threadripper PRO 7975WX (32 cores, 64 threads).
- 256GB ECC DDR5 RAM.
- 1650 watts at peak (runs on a standard

For details on the components and a short build guide, check out:

a16z.com

In the era of foundation models, multimodal AI, LLMs, and ever-larger datasets, access to raw compute is still one of the biggest bottlenecks for researchers, founders, developers, and engineers....

.@jparkerholder's first test of the viral wall-painting clip, including its first-person perspective, and how long the spatial memory now lasts: from a few seconds in Genie 2 to 1+ min in Genie 3:

Its interactivity, spatial memory and consistency are some of the features that stand out. @jparkerholder explains how this was a big improvement and an effort to combine the capabilities of Genie 2, Veo 2 and the DOOM game engine paper:

🚨 New: Interviewed Genie 3 co-leads @jparkerholder and @shlomifruchter from @GoogleDeepMind to talk about their new interactive real-time world model, a next evolution from Veo 3, Genie 2 and the DOOM game engine paper. Genie 3 showed the world what's possible with real-time

GPT-5 scores 65.7% on ARC-AGI-1, at only $0.51 per task:

GPT-5 on ARC-AGI Semi Private Eval.

GPT-5
* ARC-AGI-1: 65.7%, $0.51/task
* ARC-AGI-2: 9.9%, $0.73/task

GPT-5 Mini
* ARC-AGI-1: 54.3%, $0.12/task
* ARC-AGI-2: 4.4%, $0.20/task

GPT-5 Nano
* ARC-AGI-1: 16.5%, $0.03/task
* ARC-AGI-2: 2.5%, $0.03/task
