Ishaan

@ishaan_jaff

Followers: 3K · Following: 5K · Media: 529 · Statuses: 2K

Co-Founder LiteLLM (YC W23) - Python SDK & LLM Gateway to Call 100+ LLMs in 1 format, set Budgets https://t.co/nXsBde05K7

san francisco

Joined November 2017

🚅 LiteLLM v1.2.0 🚅 Use 100+ LLMs (Azure, OpenAI, Cohere, Anyscale, Anthropic, Hugging Face) as a drop-in replacement for OpenAI. ⚡️ Use LiteLLM to 20x your rate limits using load balancing + queuing.

github.com

Python SDK, Proxy Server (LLM Gateway) to call 100+ LLM APIs in OpenAI format - [Bedrock, Azure, OpenAI, VertexAI, Cohere, Anthropic, Sagemaker, HuggingFace, Replicate, Groq] - BerriAI/litellm
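The load-balancing idea in the post above can be illustrated with a minimal sketch. It assumes a plain round-robin policy over several deployments of the same model, so that the aggregate rate limit is roughly the sum of the per-deployment limits. The deployment names and the `make_round_robin` helper are hypothetical, and this is not LiteLLM's actual router implementation.

```python
from itertools import cycle

# Hypothetical deployment names, used for illustration only.
DEPLOYMENTS = [
    "azure/deployment-a",
    "azure/deployment-b",
    "openai/deployment-c",
]


def make_round_robin(deployments):
    """Return a function that hands out the next deployment on each call."""
    pool = cycle(deployments)
    return lambda: next(pool)


pick = make_round_robin(DEPLOYMENTS)

# Six requests are spread evenly: each deployment receives two of them.
assignments = [pick() for _ in range(6)]
```

A real gateway would likely also track per-deployment usage and queue requests when every deployment is at its limit; the sketch only shows the distribution step.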

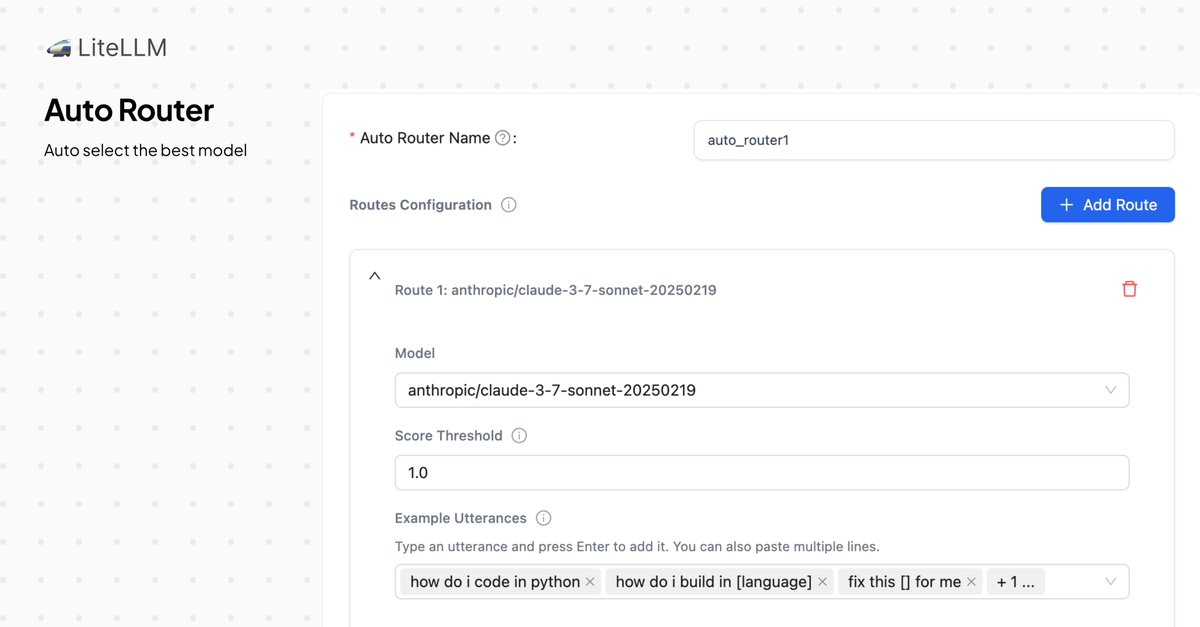

Get started with Auto Router here: Other improvements in this release 👇

docs.litellm.ai

LiteLLM can auto select the best model for a request based on rules you define.

Today we're launching @LiteLLM Auto Router - LiteLLM can now auto-select the best model for a given request. On LiteLLM you can now define a set of keywords that route to a specific model, and LiteLLM will select the best model for each request (Powered by @AurelioAI_ )
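As a rough illustration of keyword-based routing, here is a minimal sketch. It assumes simple case-insensitive substring matching with a fallback model; the real Auto Router may match requests differently. The `ROUTES` table, the model names, and `select_model` are all hypothetical and are not part of LiteLLM's API.

```python
# Hypothetical routing rules: keyword -> model name (illustrative only).
ROUTES = {
    "code": "coding-model",
    "translate": "translation-model",
}
DEFAULT_MODEL = "general-model"


def select_model(prompt: str) -> str:
    """Return the model for the first keyword found in the prompt."""
    text = prompt.lower()
    for keyword, model in ROUTES.items():
        if keyword in text:
            return model
    # No rule matched, so fall back to the default model.
    return DEFAULT_MODEL


chosen = select_model("Please review this code for bugs")
fallback = select_model("Hello there")
```

Rules are checked in insertion order, so when a prompt matches several keywords the first rule wins.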

[QA] Allow viewing redacted standard callback dynamic params. [Docs] - litellm load test benchmarks from latest release

docs.litellm.ai

Benchmarks for LiteLLM Gateway (Proxy Server) tested against a fake OpenAI endpoint.

✨ New Image Generation API on @LiteLLM - today we're launching support for @recraftai. This API is great for designers + developers looking to use image generation. You can get started with Recraft Image Generation here: Other improvements 👇

Docs to start here: Other improvements in this release: [Refactor] Vector Stores - Use class VectorStorePreCallHook for all Vector Store Integrations. [Feat] Proxy - New LLM API Routes /v1/vector_stores and /v1/vector_stores/vs_abc123/search.

docs.litellm.ai
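To make the new search route concrete, here is a minimal sketch that builds (but does not send) a request to `/v1/vector_stores/vs_abc123/search`. The base URL, the API key, and the `{"query": ...}` body shape are assumptions modeled on the OpenAI-style vector store search API, not details confirmed by the post. `build_search_request` is a hypothetical helper.

```python
import json
import urllib.request

BASE_URL = "http://localhost:4000"  # assumption: address of a locally running proxy
API_KEY = "sk-placeholder"          # placeholder, not a real key


def build_search_request(vector_store_id: str, query: str) -> urllib.request.Request:
    """Build a POST request for the vector store search route."""
    url = f"{BASE_URL}/v1/vector_stores/{vector_store_id}/search"
    # Assumed body shape: a single "query" field.
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
    )


req = build_search_request("vs_abc123", "refund policy")
```

Passing `req` to `urllib.request.urlopen` would send it; that step is left out because it needs a running server and valid credentials.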