Lisa Alazraki

@LisaAlazraki

Followers

1K

Following

2K

Media

10

Statuses

94

PhD student @ImperialCollege. Research Scientist Intern @AIatMeta prev. @Cohere, @GoogleAI. Interested in generalisable learning and reasoning. She/her

London, UK

Joined January 2016

Just arrived at #EMNLP2025 in Suzhou. Looking forward to meeting with everyone! Will be giving an oral presentation of our paper No Need For Explanations: LLMs Can Implicitly Learn from Mistakes In Context this Friday 7th November at 11.30 am in Hall A108 🎤

1

8

84

We’re looking to hire four Assistant/Associate Professors to our Department. Our priority areas are: 🔵 Programming Languages 🔵 Systems 🔵 Security 🔵 Software Engineering 🔵 Computer Architecture 🔵 Theoretical Computer Science Deadline: 15 Dec 2025 https://t.co/VwBxJpJQpe

imperial.ac.uk

Please note that job descriptions are not exhaustive, and you may be asked to take on additional duties that align with the key responsibilities ment...

0

1

6

At Vmax, we are automating the construction of RL environments and the post-training of agents. We are hiring members of technical staff and research fellows. Come join us in SF! (link to apply in comments).

5

30

69

Cohere Labs x EMNLP 2025: "No Need for Explanations: LLMs can implicitly learn from mistakes in-context" This work demonstrates that eliminating explicit corrective rationales from incorrect answers improves Large Language Models' performance in math reasoning tasks,

0

3

12

Our @imperial_nlp community is ready for #EMNLP2025 ✈️ Check out the papers we’ll be presenting next week in Suzhou and come say hi!

0

6

25

Introducing Global PIQA, a new multilingual benchmark for 100+ languages. This benchmark is the outcome of this year’s MRL shared task, in collaboration with 300+ researchers from 65 countries. This dataset evaluates physical commonsense reasoning in culturally relevant contexts.

2

55

106

🚨 New Paper! 🚨 We want ~helpful~ LLMs, and RLHF-ing them with user preferences and reward models will get us there, right? WRONG! 🙅❌⛔️ Our #EMNLP2025 paper finds a major helpfulness-preferences gap: user/LLM judgments + agent simulations can totally miss what helps users

7

20

69

🚨 New Paper: The Art of Scaling Reinforcement Learning Compute for LLMs 🚨 We burnt a lot of GPU-hours to provide the community with the first open, large-scale systematic study on RL scaling for LLMs. https://t.co/49REQZ4R6G

Wish to build scaling laws for RL but not sure how to scale? Or what scales? Or would RL even scale predictably? We introduce: The Art of Scaling Reinforcement Learning Compute for LLMs

2

17

76

Thanks for having me! Fantastic to see such innovative research happening at @imperialcollege and inspiring to meet so many brilliant students shaping the future of AI research!

0

3

25

Post-training methods like RLHF improve LLM quality but often collapse diversity. Check out DQO, a training objective using DPPs that directly optimizes for semantic diversity and quality.

🚀Excited to introduce 𝐃𝐐𝐎 (𝐃𝐢𝐯𝐞𝐫𝐬𝐢𝐭𝐲 𝐐𝐮𝐚𝐥𝐢𝐭𝐲 𝐎𝐩𝐭𝐢𝐦𝐢𝐳𝐚𝐭𝐢𝐨𝐧), a principled method for post-training Large Language Models to generate diverse high-quality responses 📖 https://t.co/xJsvsVz6i1

0

1

6

Arjun and Vagrant will be presenting our work at Session 3 (11AM 🕚 poster session) at @COLM_conf. If you're interested in LLM evaluation practices, probability vs. generation-based evals, harms and misgendering, please go say hi 👋! Link to paper 📜:

arxiv.org

Numerous methods have been proposed to measure LLM misgendering, including probability-based evaluations (e.g., automatically with templatic sentences) and generation-based evaluations (e.g., with...

0

1

8

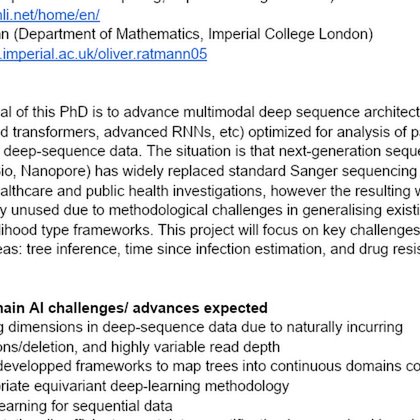

Available by Dec 2025: Oliver Ratmann and I are looking for 1 PhD student on AI for pathogen deep-sequence analytics. Scholarship includes UK home fee + stipend. Contact my work email if interested. RT 🙏 https://t.co/TGg6a1NL52

docs.google.com

AI for pathogen deep-sequence analytics An AI4Health CDT PhD project (student to be recruited by Dec 2025) Scholarship: Home fee + stipend Supervisors (50:50 split) Yingzhen Li (Department of...

0

8

17

Delighted to announce that our work, ShiQ: Bringing back Bellman to LLMs has been accepted to NeurIPS 2025 🥳🥳🎉🎉🎊🎊 Details of the paper in the thread below 👇👇

I'm excited to share our new pre-print ShiQ: Bringing back Bellman to LLMs! https://t.co/yWMT6M0nuT In this work, we propose a new, Q-learning inspired RL algorithm for finetuning LLMs 🎉 (1/n)

3

3

41

🚨New Meta Superintelligence Labs Paper🚨 What do we do when we don’t have reference answers for RL? What if annotations are too expensive or unknown? Compute as Teacher (CaT🐈) turns inference compute into a post-training supervision signal. CaT improves up to 30% even on

13

85

542

VUD’s gonna be at #NeurIPS2025 🎉🥳 Special thanks to my labmates that made this collaboration especially enjoyable!

Wanna understand the sources of uncertainty in LLMs when performing in-context learning 🤔? 🚀 We introduce a variational uncertainty decomposition framework for in-context learning without explicitly sampling from the latent parameter posterior. 📄 Paper:

0

5

32

✨ Accepted as a Spotlight at #NeurIPS2025! Huge thanks to my coauthors and everyone who supported us. Check out the details below 👇

Thrilled to share our new preprint on Reinforcement Learning for Reverse Engineering (RLRE) 🚀 We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

12

34

273

This will be an oral! 🎤 See you at #EMNLP25

1

4

28

I was recently asked during an interview why LLMs were not deterministic even when temperature is 0. This very carefully answers it! My interviewer’s answer was different though, that there likely is non determinism in MOE routing for load balancing

Today Thinking Machines Lab is launching our research blog, Connectionism. Our first blog post is “Defeating Nondeterminism in LLM Inference” We believe that science is better when shared. Connectionism will cover topics as varied as our research is: from kernel numerics to

1

1

26

ChatGPT doesn't understand why I keep beating it at rock paper scissors😅

1

1

9

Wanna understand the sources of uncertainty in LLMs when performing in-context learning 🤔? 🚀 We introduce a variational uncertainty decomposition framework for in-context learning without explicitly sampling from the latent parameter posterior. 📄 Paper:

We show how to make LLM in-context learning approximately Bayesian & decompose uncertainty IMO this is proper approximate inference 🥰 applied to LLMs Led by awesome students @shavindra_j @jacobyhsi88 Filippo & Wenlong 👍 Example👇by prompting, bandits & NLP examples in paper

2

9

24