yingzhen

@liyzhen2

Followers

4K

Following

800

Media

42

Statuses

567

teaching machines🤖 to learn🔍 and fantasize🪄 now 🇬🇧@ImperialCollege @ICComputing ex @MSFTResearch @CambridgeMLG helping @aistats_conf 24-26

Joined April 2012

Computing @ Imperial are hiring four Asst. / Assoc. Profs! Priority areas: PL, Systems, Security, Software Eng., Computer Architecture, Theoretical Computer Science. Applications from individuals from underrepresented groups especially welcome! https://t.co/6LFgJXvMUw

imperial.ac.uk

Please note that job descriptions are not exhaustive, and you may be asked to take on additional duties that align with the key responsibilities ment...

0

9

14

TMLR is looking for additional reviewers and action editors! Please sign up to keep the initiative strong and vibrant.

As Transactions on Machine Learning Research (TMLR) grows in number of submissions, we are looking for more reviewers and action editors. Please sign up! Only one paper to review at a time and <= 6 per year, reviewers report greater satisfaction than reviewing for conferences!

2

15

59

Available by Dec 2025: Oliver Ratmann and I are looking for 1 PhD student on AI for pathogen deep-sequence analytics. Scholarship includes UK home fee + stipend. Contact my work email if interested. RT 🙏 https://t.co/TGg6a1NL52

docs.google.com

AI for pathogen deep-sequence analytics An AI4Health CDT PhD project (student to be recruited by Dec 2025) Scholarship: Home fee + stipend Supervisors (50:50 split) Yingzhen Li (Department of...

0

8

15

We are delighted to announce the #EurIPS 2025 Workshops 🎉: https://t.co/IJtQVm7azJ We received 52 proposals, which were single-blind reviewed by more than 35 expert reviewers, leading to 18 accepted workshops (acceptance rate 34.6%).

1

4

24

Wanna understand the sources of uncertainty in LLMs when performing in-context learning 🤔? 🚀 We introduce a variational uncertainty decomposition framework for in-context learning without explicitly sampling from the latent parameter posterior. 📄 Paper:

2

7

23

We show how to make LLM in-context learning approximately Bayesian & decompose uncertainty IMO this is proper approximate inference 🥰 applied to LLMs Led by awesome students @shavindra_j @jacobyhsi88 Filippo & Wenlong 👍 Example👇by prompting, bandits & NLP examples in paper

7

18

166

Huge thanks to Laura Manduchi, Clara Meister & Kushagra Pandey, who led the 2-year effort of writing “On the Challenges and Opportunities in Generative AI” involving 27 authors. Coming out of a 2023 Dagstuhl Seminar I co-organized with @vincefort, @liyzhen2 & @sirbayes.

Exciting news! Our paper "On the Challenges and Opportunities in Generative AI" has been accepted to TMLR 2025. 📄

0

2

14

#AISTATS2026 call for papers is out! We welcome solid Stats and AI/ML work from you 🤗 (2026 conference will have further exciting initiatives, watch this space 😇)

📣 Please share: We invite submissions to the 29th International Conference on Artificial Intelligence and Statistics (#AISTATS2026) and welcome paper submissions at the intersection of AI, machine learning, statistics, and related areas. @aistats_conf [1/3]

0

1

15

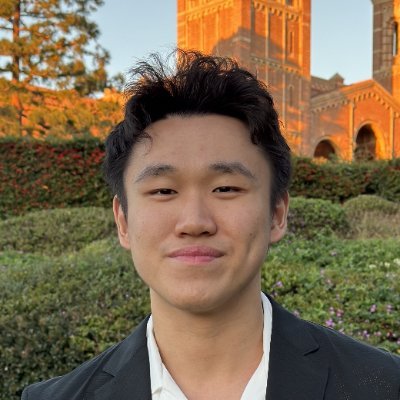

long overdue, but we finally have a team photo! 😊 (some of them also on this site: @JzinOu @jacobyhsi88 @BalsellsRodas)

0

3

31

I read the first 4 books extensively during my PhD, highly recommended 👍 I'd also highlight the 5th book as my first read re deep learning. Mind-blowing for a young math undergrad (me) at the time, made me decide to go for ML

14

153

1K

My craziest ever conference + holiday trip is now done 😆 Lots of flying, research discussions, and Mt Fuji climbing, all within 1 month 🗓️ Now back to support my team re winter party conf rebuttals 💪

0

0

86

learning many intriguing sampling ideas at a Bayesian computation workshop now, some ideas really blew my mind 🤯 I guess the recent LLM + sampling research is just using the 101 versions of them 😅

5

0

25

Statistical machine learning is powering groundbreaking advances, yet uncertainty quantification remains one of its biggest challenges. Join this lunchtime panel! 🎙️ Live panel 🗓️ 24 June 11.30 📍 White City 🫰 £6 (incl refreshments) ✏️ To book visit👇 https://t.co/SxFYK1YGdq

0

4

9

Update: now we can deal with multi-dim inputs!😆 The trick is just like how you build token sequences for SOTA RNNs applied to e.g., vision problems. If I were to shamelessly brag to deep learning people 😉: this could potentially become a Bayesian/Kernel version of S4/Mamba

RNN memory (HiPPO 🦛 style, predecessor to S4/Mamba 🐍) for posterior over functions When my awesome students told me you can build a memory for random functions that you don’t even observe I was like 🤯 Preliminary but exciting, feedback welcome 🤗

1

1

13

The next seminar is this Friday (May 23rd) and starts at 12pm midday UK time! Professor @liyzhen2 from Imperial College London is going to talk about “On Modernizing Sparse Gaussian Processes”! https://t.co/WIZ32HifSe This seminar is hybrid. More info

0

4

8

#AISTATS2025 day 3 keynote by Akshay Krishnamurthy about how to do theory research on inference time compute 👍 @aistats_conf

1

10

139

Congrats to @_Daniel_Marks_ and @DarioPaccagnan! 👍 Daniel was an MEng student at @ICComputing and this paper came from his MEng thesis.

And last but not least... the Best Student Paper Award at #AISTATS 2025 goes to Daniel Marks and Dario Paccagnan for "Pick-to-Learn and Self-Certified Gaussian Process Approximations". Congratulations!

0

1

17

#AISTATS2025 is off to a strong start! First keynote: Chris Holmes rethinks Bayesian inference through the lens of predictive distributions—introducing tools like martingale posteriors. 🌴🌴🤖🎓

0

7

54

This paper took @BalsellsRodas ~5 years in the making:

- 2020/21: MSc proj, toy exp 🐣
- 2022: added more exp, rejected due to weak theory 😥
- 2023/24: invented a new proof tech in another proj 🤔
- 2024/25: revisit, apply new proof tech, resubmit -> accepted 🥳

Persistence pays off indeed 👍

Excited to share that our paper "Causal discovery from Conditionally Stationary Time Series" has been accepted to ICML 2025!🥳 Pre-print: https://t.co/tPlw7p5Ja4 Thank you very much to all my collaborators, persistence pays off! #icml #icml2025

1

1

42