Matthieu Meeus

@matthieu_meeus

Followers

229

Following

455

Media

36

Statuses

127

PhD student @ImperialCollege Privacy/Safety + AI https://t.co/UBo5kgRqbU

London, England

Joined September 2022

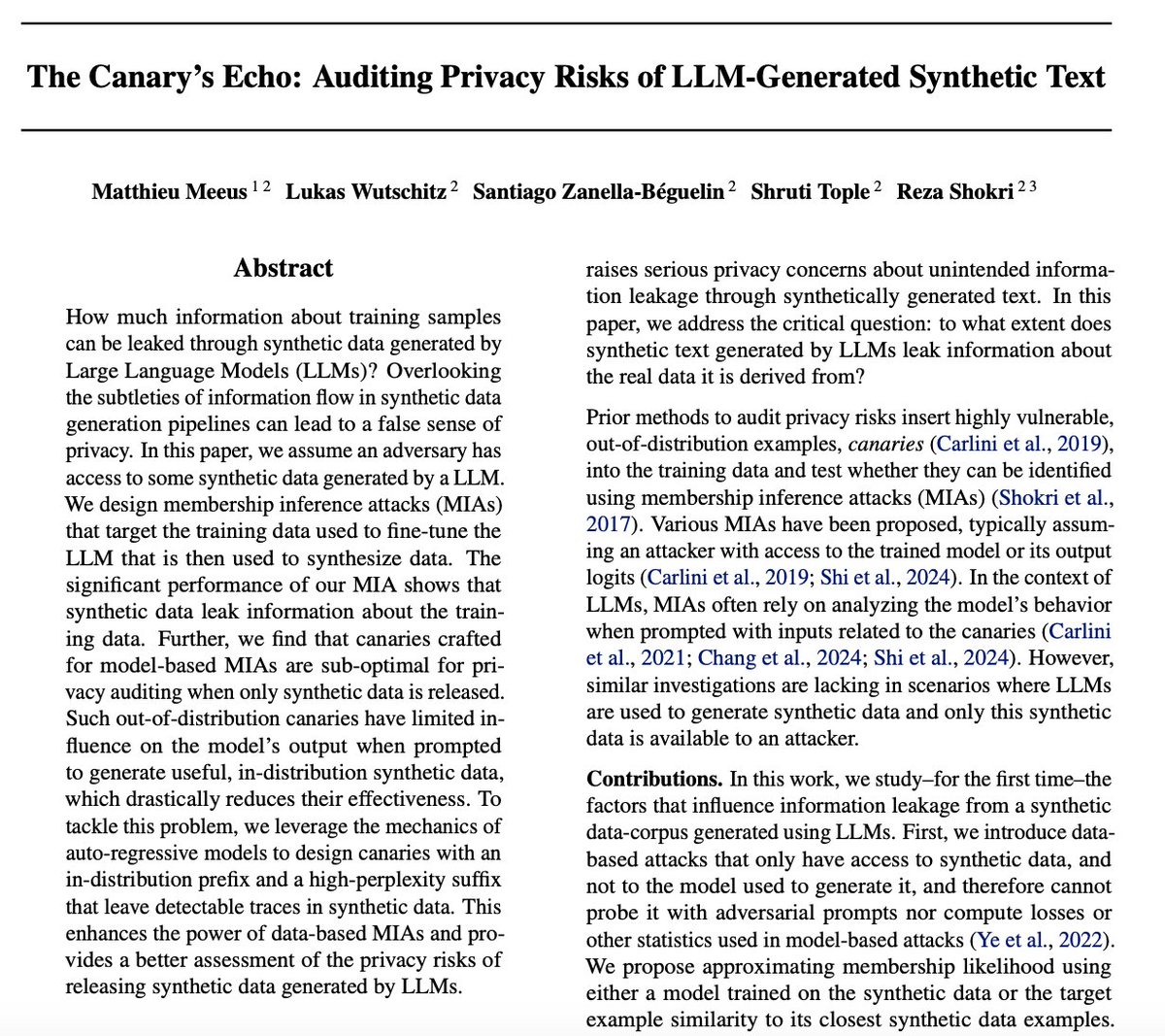

(1/9) LLMs can regurgitate memorized training data when prompted adversarially. But what if you *only* have access to synthetic data generated by an LLM?. In our @icmlconf paper, we audit how much information synthetic data leaks about its private training data 🐦🌬️

3

5

25

RT @_igorshilov: If you are at USENIX, come to our presentation and poster!. 9am PDT in Ballroom 6A.

0

2

0

RT @l2m2_workshop: L2M2 is happening this Friday in Vienna at @aclmeeting #ACL2025NLP! We look forward to the gathering of memorization re….

sites.google.com

Program

0

12

0

Presenting two papers at the MemFM workshop at ICML! . Both touch upon how near duplicates (and beyond) in LLM training data contribute to memorization. - - @_igorshilov @yvesalexandre

4

10

56

Lovely place for a conference! . Come see my poster on privacy auditing of synthetic text data at 11am today! . East Exhibition Hall A-B #E-2709

(1/9) LLMs can regurgitate memorized training data when prompted adversarially. But what if you *only* have access to synthetic data generated by an LLM?. In our @icmlconf paper, we audit how much information synthetic data leaks about its private training data 🐦🌬️

0

1

17

Will be at @icmlconf in Vancouver next week! 🇨🇦. Will be presenting our poster on privacy auditing of synthetic text. Presentation here And will also be presenting two papers at the MemFM workshop! Hit me up if you want to chat!.

(1/9) LLMs can regurgitate memorized training data when prompted adversarially. But what if you *only* have access to synthetic data generated by an LLM?. In our @icmlconf paper, we audit how much information synthetic data leaks about its private training data 🐦🌬️

1

5

34

RT @_igorshilov: New paper accepted @ USENIX Security 2025!. We show how to identify training samples most vulnerable to membership inferen….

computationalprivacy.github.io

Estimate training sample vulnerability by analyzing loss traces - achieving 92% precision without shadow models

0

1

0

Check out our recent work on prompt injection attacks! . Tl;DR: aligned LLMs show to defend against prompt injection; yet with a strong attacker (GCG on steroids), we find that successful attacks (almost) always exist, but are just harder to find.

Have you ever uploaded a PDF 📄 to ChatGPT 🤖 and asked for a summary? There is a chance the model followed hidden instructions inside the file instead of your prompt 😈. A thread 🧵

2

5

18

(9/9) This was work done during my internship @Microsoft Research last Summer, now accepted at @icmlconf 🇨🇦 . Was a great pleasure to collaborate with and learn from @LukasWutschitz, @xEFFFFFFF, @shrutitople and @rzshokri.

0

0

1

How good can privacy attacks against LLM pretraining get if you assume a very strong attacker? Check it out in our preprint ⬇️.

Are modern large language models (LLMs) vulnerable to privacy attacks that can determine if given data was used for training? Models and dataset are quite large, what should we even expect? Our new paper looks into this exact question. 🧵 (1/10)

1

5

20

RT @_akhaliq: Google presents Strong Membership Inference Attacks on Massive Datasets and (Moderately) Large Language Models .

0

19

0