Guy Bar-Shalom

@GuyBarSh

Followers

73

Following

14

Media

6

Statuses

21

ML PhD student @TechnionLive - Learning under symmetries | Ex Research Intern @Verily | Working with @HaggaiMaron

Israel

Joined February 2023

📢 Introducing: Learning on LLM Output Signatures for Gray-box LLM Behavior Analysis. A joint work with @ffabffrasca (co-first author) and our amazing collaborators: @dereklim_lzh @yoav_gelberg @YftahZ @el_yaniv @GalChechik @HaggaiMaron. 🧵 Thread.

1

6

28

RT @ffabffrasca: “Balancing Efficiency and Expressiveness: Subgraph GNNs with Walk-Based Centrality” is at #ICML2025! Drop by our poster o…

0

13

0

We believe LOS-Net is a step toward transparent and scalable (gray-box) LLM analysis. Inspired by works by @WeijiaShi2, @anirudhajith42, @LukeZettlemoyer, @OrgadHadas, @boknilev, and more. 🔗 Paper: [. 💻 Code: [. #AI #LLMs

0

0

5

Proud to see our work honored with the @neur_reps best paper award! This was a joint work with @ytn_ym, and a collaboration with @ffabffrasca and @HaggaiMaron.

The best paper award for the Topology and Graphs track goes to "Efficient Subgraph GNNs via Graph Products and Coarsening," presented by @GuyBarSh.

1

1

11

RT @neur_reps: Our last contributed talk is from @GuyBarSh on "Efficient Subgraph GNNs via Graph Products and Coarsening".

0

1

0

Inspired by the works of: @chrsmrrs @muhanzhang93 @mmbronstein @PanLi90769257 @bohang_zhang @beabevi_ @brunofmr. [8/8]

0

0

7

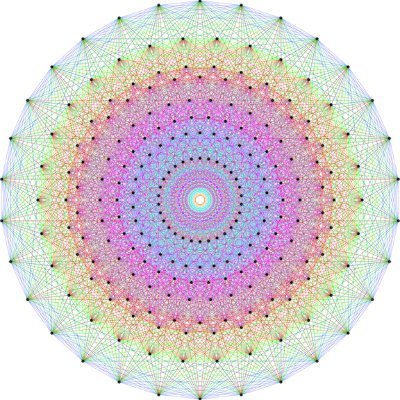

🎉 "A Flexible, Equivariant Framework for Subgraph GNNs via Graph Products and Graph Coarsening" is accepted to #NeurIPS2024! 🎉 ➡️ This is a joint effort with @ytn_ym and was made possible by amazing collaborators: @ffabffrasca @HaggaiMaron. [1/8]

4

15

72