Bruno Ribeiro

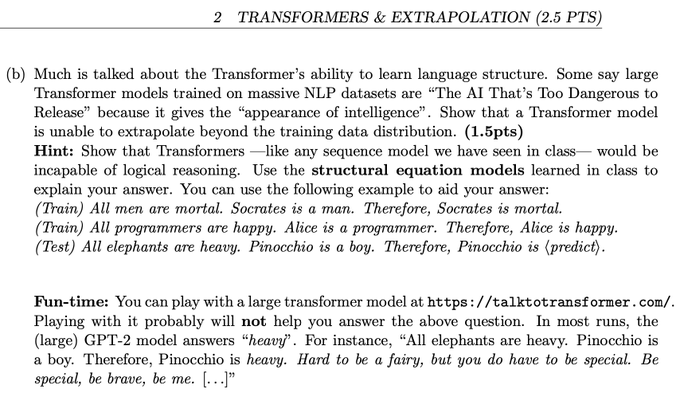

@brunofmr

Followers: 2,040 · Following: 273 · Media: 32 · Statuses: 770

(Currently on sabbatical @ Stanford) Associate Professor of Computer Science, Purdue University; Causal & Invariant Representation Learning

West Lafayette, IN

Joined December 2009


🚀 What are knowledge graphs? Are they just attributed graphs? Or are KGs more? (i.e., new equivariances)

Gao, @YangzeZhou, & I postulate KGs have a different equivariance

The consequences are astounding🤯, including 100% test accuracy in KGC...

1/🧵


Excited to be spending my post-tenure sabbatical year (until July 2024) in the Bay Area (at SNAP, hosted by @jure). Spending time with old friends in the area has been a blast. If you're in the Bay Area and interested in graph, OOD, and causal representation learning, reach out!


😅

The view that GNNs are cool but not super-useful seems somewhat prevalent in the Bay Area 🙃

Parts of the graph ML community now see invariances as unnecessary (note: without them the inputs are sequences not graphs) 🙃

The truth is, GeomDL needs to make a stronger case...🧵


Thanks to the efforts of my students! Looking forward to what comes next.

Congratulations to @LifeAtPurdue Purdue Professor Bruno Ribeiro (@brunofmr) on receiving your CAREER Award.


What @nvidia is not quite seeing yet is that their prices to academia are putting us under intense pressure to move away from their hardware.

And we will start seeing this move in the acknowledgment sections at #ICLR2025 …


Really awesome tutorial on set representation learning! Has everything one needs to know 🚀! Great job 👏🏼


Entering #ICLR2020 reviews. Witnessing some harsh words by other reviewers, #DontBeMean. Why not assume authors make mistakes, miss prior work, or overstate their claims in good faith? The goal is to reward good papers; we are not the Spanish Inquisition.


What is the OOD generalization capability of (structural) message-passing GNNs for link prediction?

"OOD Link Prediction Generalization Capabilities of Message-Passing GNNs in Larger Test Graphs"

@YangzeZhou w/ @GittaKutyniok @brunofmr

A 🧵 1/n


@docmilanfar I am sorry, but industry really needs to rethink this. If Stanford had sold its 8,000 acres to Baldwin Locomotive Works in the 1800s in order to get their best locomotives for study, we would look back on that with regret today.

Universities plan for centuries, not the next quarter.


"Structural representations (GNNs,...) are to positional node embeddings (matrix factorization,...) as distributions are to samples."

@balasrini32 new preprint:

Implications? They can do the same tasks. A thread: 1/6


Research news: Want to boost the expressiveness of your favorite GNN architecture? Collective learning can help: a hybrid GNN representation with Hang & @ProfJenNeville. GCNs (@thomaskipf, @wellingmax) benefit the most (up to +15% in accuracy)!


Accepted at #NeurIPS2022. Congrats @YangzeZhou!


Excited to give a plenary talk at the *GNNs for the Sciences Workshop* this Thursday and Friday 🚀.

Algorithmic alignment is *key* for OOD robustness in AI4Science applications.

Our #ICLR2024 spotlight will be one of the topics.


Thanks @AmazonScience for the support! Very excited about this. Counterfactual inference with direct (measurable) impact at the scale of millions of subjects. Joint work with the amazing Sanjay Rao.

Is it possible to improve video streaming without using paying customers as test subjects?

➡️ @LifeAtPurdue's Prof. Bruno Ribeiro shares a novel approach of using #ML tools to answer counterfactual questions for streaming services.

#PurdueCS #Purdue


Slides of my invited talk at #SIAMCSC20 on the equivalence between Matrix Factorization and GNNs


To anyone receiving #ICML2023 scores: my AC batch has pretty low scores all around.

I am not sure whether ACs having to aggressively chase reviewers this year (all papers were assigned only 3 reviewers) correlates with the low scores. Or maybe it is an influence of the tightening job market.


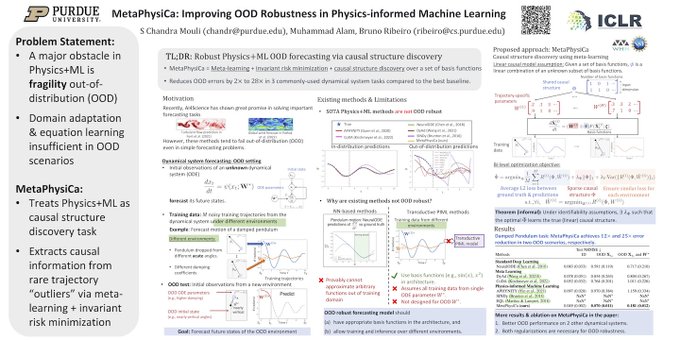

How can Physics+ML become OOD robust? *Causal structure discovery offers a promising direction*

✨Check out @mouli_sekar's #ICLR2024 Spotlight✨

Today, poster #24 at 4:30pm


Same here, @LogConference review quality/timeliness is much superior to ICML, NeurIPS, and ICLR.

Now it seems inevitable that these top 3 ML conferences will eventually become federated conferences like ACM's FCRC, where specialized conference papers (e.g., LOG papers) are presented.

Amazed by both the review timeliness and quality on my AC stack for this year's @LogConference!

All papers have 3 reviews, on time, with lots of detail, and no pushes from my side.

@NeurIPSConf @icmlconf @iclr_conf take note: offering monetary reviewer prizes can mean a lot!


Looking forward!

Excited to announce our next speaker @brunofmr from @PurdueCS at the 2nd GCLR workshop at AAAI 2022 @RealAAAI.

Join us to hear about Bruno's work.

For more details:

@ravi_iitm @PhilChodrow @kerstingAIML @Sriraam_UTD @gin_bianconi @rbc_dsai_iitm


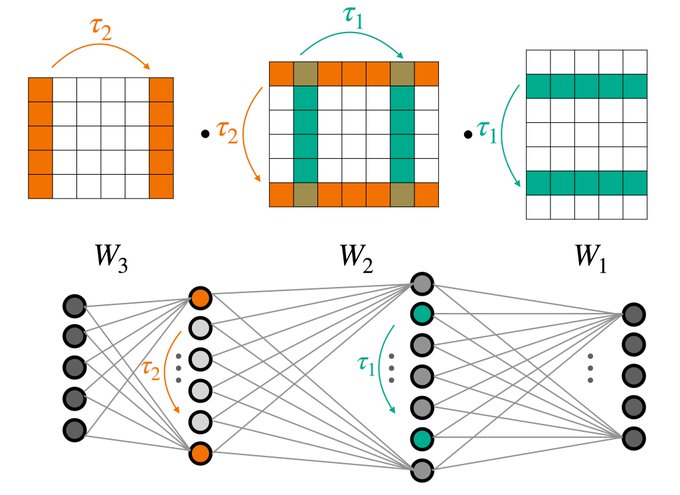

Fun easter egg in Appendix G.1:

Q: Do OGBG graph classification tasks really need more expressive GNN representations than WL? 🤔

A: No, WL power is enough

Reconstruct to empower! 🚀

Our new work (w/ @chrsmrrs and @brunofmr) accepted at #NeurIPS2021 shows how graph reconstruction, an exciting field of (algebraic) combinatorics, can build expressive graph representations and empower existing ones (GNNs)! 👉 1/3

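For readers new to graph reconstruction: a graph's *deck* is the multiset of its vertex-deleted subgraphs ("cards"), and reconstruction-based representations are built from the deck instead of from the graph directly. The sketch below is a minimal illustration under simplifying assumptions, not the method from the paper: graphs are plain adjacency dicts, and a trivial per-card invariant (the number of connected components) stands in for the learned encoder that would process each card. The helper names `deck`, `num_components`, and `deck_signature` are hypothetical. It separates two disjoint triangles from a 6-cycle, a pair that 1-WL cannot tell apart because both graphs are 2-regular on six vertices.

```python
def deck(adj):
    """All vertex-deleted subgraphs ("cards") of a graph given as {node: [neighbors]}."""
    cards = []
    for removed in adj:
        card = {v: [u for u in nbrs if u != removed]
                for v, nbrs in adj.items() if v != removed}
        cards.append(card)
    return cards


def num_components(adj):
    """Count connected components with an iterative depth-first search."""
    seen, count = set(), 0
    for start in adj:
        if start in seen:
            continue
        count += 1
        seen.add(start)
        stack = [start]
        while stack:
            v = stack.pop()
            for u in adj[v]:
                if u not in seen:
                    seen.add(u)
                    stack.append(u)
    return count


def deck_signature(adj):
    """Sorted multiset of a per-card invariant (stand-in for encoding each card with a GNN)."""
    return sorted(num_components(card) for card in deck(adj))


two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}

print(deck_signature(two_triangles))  # [2, 2, 2, 2, 2, 2]: each card is a triangle plus an edge
print(deck_signature(hexagon))        # [1, 1, 1, 1, 1, 1]: each card is a 5-vertex path
```

A real reconstruction-based model would replace `num_components` with a trainable graph encoder and pool the card encodings with a set function.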

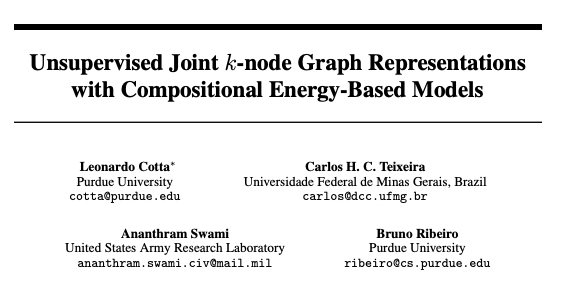

This is an energy-based model (EBM) using GNNs

Our new work accepted at NeurIPS (w/ @carloshct, Ananthram, @brunofmr) introduces unsupervised joint k-node graph representations!

1/3


Congrats @PetarV_93, @beabevi_ and co-authors! Neural algorithmic reasoning drastically improves with causal graph ML!

Two papers accepted #ICML2023 🎉

We use causality to drastically improve neural algorithmic reasoning (as foretold by @beabevi_ @YangzeZhou @brunofmr two ICMLs ago) 🔢 and upsample graphs to slow down message passing and stop over-smoothing in its tracks 🐌

See you in Honolulu 🏝️


Just in case anyone is wondering 🤔, out-of-distribution tasks are *far* from solved in ML‼️

On graphs there is a lot to do. We can't even reliably generalize across graph sizes yet (and mostly on graphons)!


Really interesting! Reinforces our observations on graph tasks (such as graph classification and link prediction), where OOD tests on larger graphs will obliterate methods that learn spurious associations.


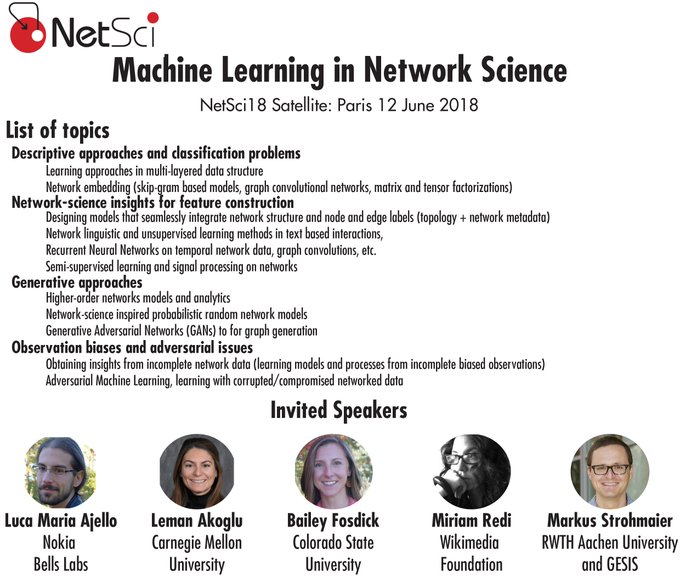

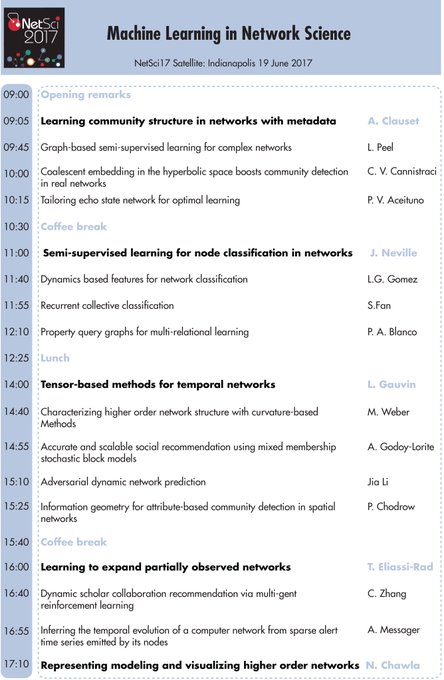

(updated) Machine Learning in Network Science, @netsci2018 satellite. 5 amazing keynote speakers! Deadline for abstracts: 30/04. Info and links:

@net_science @MartonKarsai @ciro


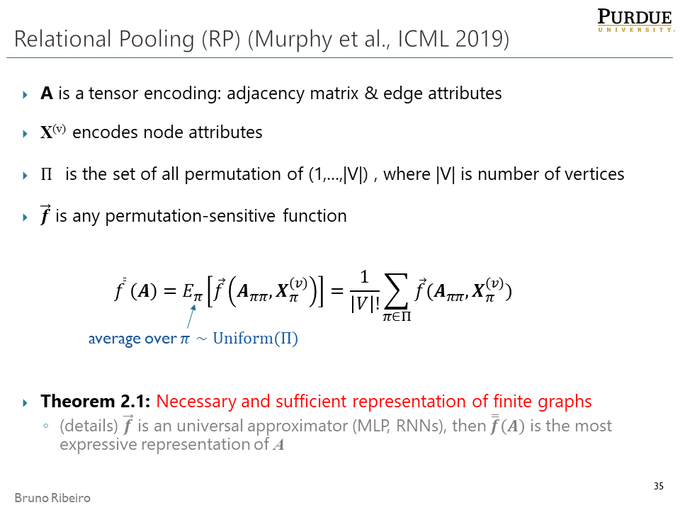

Can optimization alone make GNNs more powerful than the Weisfeiler-Lehman test? The answer is YES!

(w/ @RyanLMurphy1 @balasrini32 & Rao)

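As context for the comparison with the Weisfeiler-Lehman (1-WL) test: 1-WL is color refinement, where every node repeatedly replaces its color with a new color determined by its own color and the multiset of its neighbors' colors, and two graphs are declared "possibly isomorphic" when their final color histograms match. A minimal sketch, not the method from the paper, assuming plain adjacency dicts and a shared signature-to-color table (`palette`) so that colors are comparable across graphs; `wl_histogram` is a hypothetical helper name. It shows the classic failure case: two disjoint triangles and a 6-cycle get identical histograms.

```python
from collections import Counter


def wl_histogram(adj, rounds, palette):
    """1-WL color refinement on a graph given as {node: [neighbors]}.

    Returns the histogram of final colors. `palette` maps each signature
    (own color, sorted neighbor colors) to an integer color and must be shared
    by all graphs being compared so that their colors are comparable.
    """
    colors = {v: 0 for v in adj}  # every node starts with the same color
    for _ in range(rounds):
        colors = {
            v: palette.setdefault(
                (colors[v], tuple(sorted(colors[u] for u in adj[v]))),
                len(palette) + 1,  # fresh color id for an unseen signature
            )
            for v in adj
        }
    return Counter(colors.values())


two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
path6 = {i: [j for j in (i - 1, i + 1) if 0 <= j < 6] for i in range(6)}

palette = {}
print(wl_histogram(two_triangles, 3, palette) == wl_histogram(hexagon, 3, palette))  # True
print(wl_histogram(path6, 3, palette) == wl_histogram(hexagon, 3, palette))  # False
```

Both graphs are 2-regular, so every node sees the same signature in every round; any GNN whose power is bounded by 1-WL inherits this blind spot.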

Yet another example why controls are so important. Very interesting read for folks working on graphs and misinformation.


It was a privilege to have worked with @cottascience. Follow his next steps: he has really deep insights and bold projects.

Last Tuesday I defended and ended my PhD journey @PurdueCS! I'll join @VectorInst as a postdoc fellow in the Fall, working w/ @cjmaddison and others on more aspects of ML ∩ Combinatorics ∩ Invariant theory. +


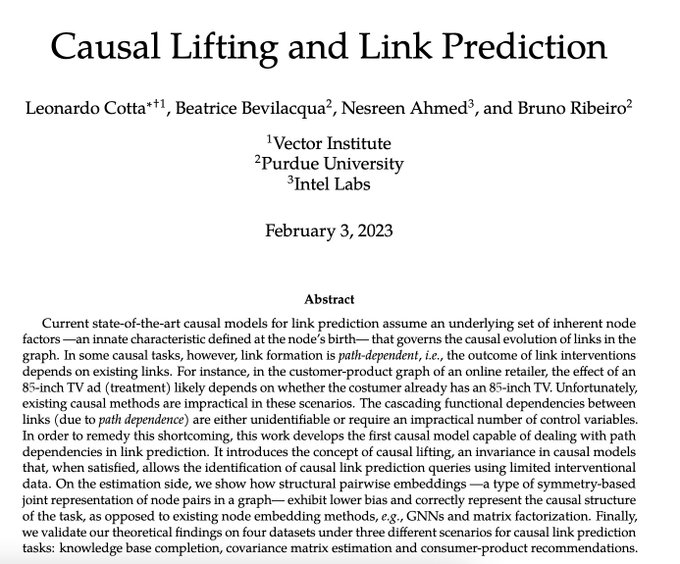

Excellent work on causal link prediction by @cottascience and @beabevi_

🗞️ Exciting news! This is now published in the proceedings of @royalsociety A (w/ open access).

Make sure to check the original thread and drop me a line if you have any questions or feedback 😊


This is going to be a fun course!

@brunofmr & @jure will teach the #SINSA2020 course in Machine Learning in Networks:

@NUnetsi @IUNetSci


Excellent post on how we can overcome the limitations of GNNs

Physics-inspired continuous learning models on graphs can overcome the limitations of traditional GNNs, @mmbronstein explains.


Inspiration for #ICML2024 discussion week

Reviewer 2: Percolation is just a fancy name for diffusion! Reject

Frisch & Hammersley (1963): We thank the reviewer for their insightful comment. We will add a paragraph explaining the difference...

Be kind to each other out there


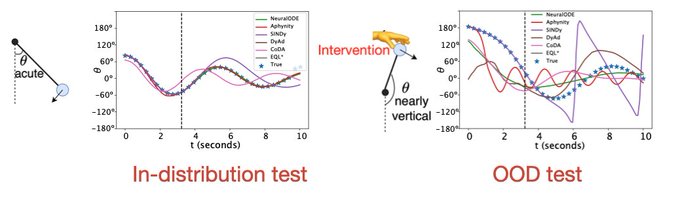

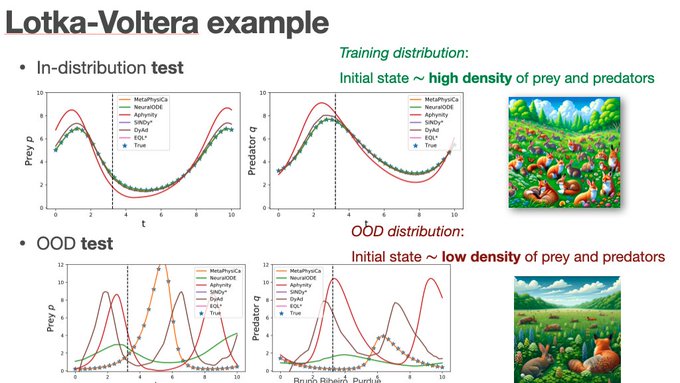

👇 OOD is a fundamental challenge in AI4Science, but meta-learning + causality may help: @mouli_sekar's #ICLR24 spotlight shows scenarios where Physics-ML performs poorly OOD, and how a causal-equivalent method (MetaPhysiCA) can help. 1/n


Slides of my keynote at GrAPL (GABB + GraML) @IPDPS #ipdps2019 in Rio. Thanks @ananth_k, Manoj Kumar, Antonino Tumeo, & Tim Mattson for the invitation! I had a great time.


After buying a keyboard, matrix-factorization recommenders think you were born to buy keyboards, and will recommend more.

There are mitigation strategies to “update the factors,” but the factorization approach fundamentally gets the causal task wrong.


@PetarV_93 It's surprising that folks still don't know the transformer architecture is (permutation) equivariant.

Also surprising: how many people still think symmetries are useful only for reducing sample complexity (in-distribution). That is an OK use case, but it is not what makes symmetries exciting in ML.

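To make the equivariance claim concrete: a self-attention layer without positional encodings is permutation equivariant, so permuting the input tokens permutes the output rows in exactly the same way (absolute positional encodings are what break this). A minimal single-head sketch in pure Python; the weight values and the helper names `matmul`, `softmax`, and `self_attention` are illustrative assumptions, not any particular library's API.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention, no positional encodings."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wq[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    return matmul([softmax(row) for row in scores], v)


x = [[1.0, 0.0], [0.5, -1.0], [0.0, 2.0]]  # three tokens, d = 2
wq = [[1.0, 0.2], [-0.3, 0.8]]
wk = [[0.5, -0.1], [0.4, 1.0]]
wv = [[1.0, 0.0], [0.3, -0.7]]
perm = [2, 0, 1]

out = self_attention(x, wq, wk, wv)
out_perm = self_attention([x[p] for p in perm], wq, wk, wv)
# Equivariance: row i of out_perm equals row perm[i] of out (up to float rounding).
for i, p in enumerate(perm):
    assert all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(out_perm[i], out[p]))
```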

Can we learn counterfactually-invariant representations with counterfactual data augmentation?🧐

‼️ Maybe not if the augmentation was done by human annotators (a context-guessing machine)

Relevant to NLP efforts

by @mouli_sekar & @YangzeZhou @crl_uai


Awesome post by @mmbronstein explaining how subgraph representations can help improve GNNs!

Subgraph-based representations are extremely interesting (with applications even in counterfactual invariance, @beabevi_ @YangzeZhou)

PS: @mmbronstein's viz game is just another level

New blog post coauthored with @cottascience @ffabffrasca @HaggaiMaron @chrsmrrs Lingxiao Zhao on a new class of "Subgraph GNN" architectures that are more expressive than the WL test


A friendly reminder from your neighborhood #NeurIPS2022 AC. Please add a clear causal model (with a DAG encompassing all variables) if talking about causal (graph) representation learning 💕


@docmilanfar It is not a criticism of industry at all. Or envy. It is just a statement of facts.

It happens the same way at Purdue or Stanford. We (myself, Fei-Fei) go to the university admin and ask for resources, and they say we can't have them.


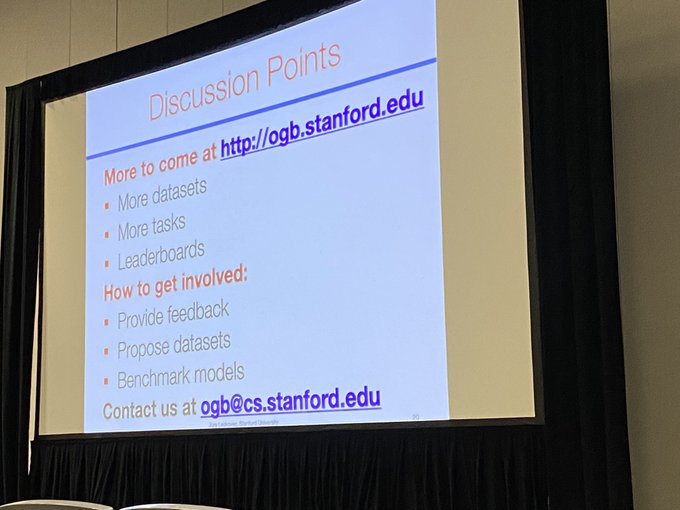

Great to see many good benchmarks!

1. It is disheartening to see GNNs requiring 48GB GPUs for medium-sized graphs.

2. The node classification tasks could be harder: GNNs achieve only up to 35% accuracy on this Friendster dataset


@deaneckles Maybe this counts: Herman Rubin once simplified one of Chernoff's proofs in a manuscript with an inequality. Chernoff thought it was "so trivial that I did not trouble to cite his contribution"... this is now known as Chernoff's inequality.


I am really excited about this work! 🚀 So far the focus has been on learning symmetries ❄️. But in a way, the asymmetries are where the (causal) information is for OOD robustness (Wed noon eastern) #ICLR2022

How can we build OOD robust classifiers when test inputs are transformed differently from training inputs?

Our paper at #ICLR2022 introduces *asymmetry learning* to solve such OOD tasks (need counterfactual reasoning). Come attend our oral presentation:


Excellent resource for Brazilian students applying for PhDs in CS. Highly recommended!


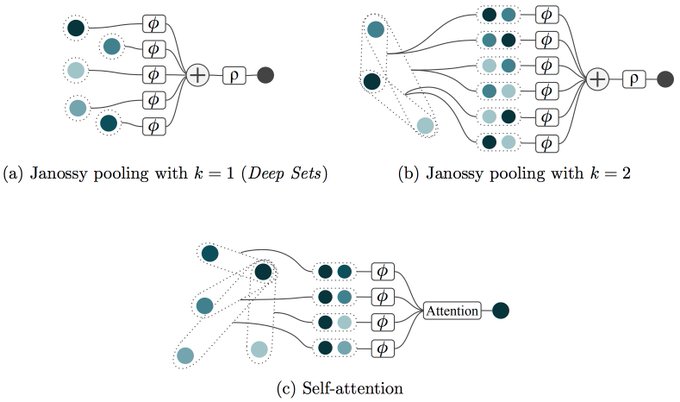

Janossy Pooling: Learnable pooling layers for deep neural networks, w/ @RyanLMurphy1, Srinivasan, and Rao. Generalizes Deep Sets (Zaheer et al. @rsalakhu) and others. Using LSTMs as Graph Neural Net aggregators is theoretically sound!

@ProfJenNeville @jure

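The core idea of Janossy pooling fits in a few lines: apply a permutation-sensitive function (in the tweet's example, an LSTM) to every ordering of the input set and average the results, which makes the pooled output permutation invariant. A minimal sketch, assuming a tiny hand-written tanh recurrence `seq_fn` as a stand-in for an LSTM; both helper names are hypothetical. Exact averaging costs |S|! evaluations, which is why the paper also considers tractable approximations such as sampling permutations.

```python
import itertools
import math


def seq_fn(seq, a=0.5, b=1.0):
    """Permutation-sensitive function: a one-unit tanh recurrence (stand-in for an LSTM)."""
    h = 0.0
    for x in seq:
        h = math.tanh(a * h + b * x)
    return h


def janossy_pool(elements, f=seq_fn):
    """Exact Janossy pooling: average f over all orderings of the input multiset."""
    orderings = list(itertools.permutations(elements))
    return sum(f(o) for o in orderings) / len(orderings)


print(seq_fn([1.0, 2.0, 3.0]) == seq_fn([3.0, 1.0, 2.0]))  # False: order matters
print(math.isclose(janossy_pool([1.0, 2.0, 3.0]), janossy_pool([3.0, 1.0, 2.0])))  # True
```

Swapping `seq_fn` for any sequence model keeps the invariance guarantee, because the average is taken over the full permutation group.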

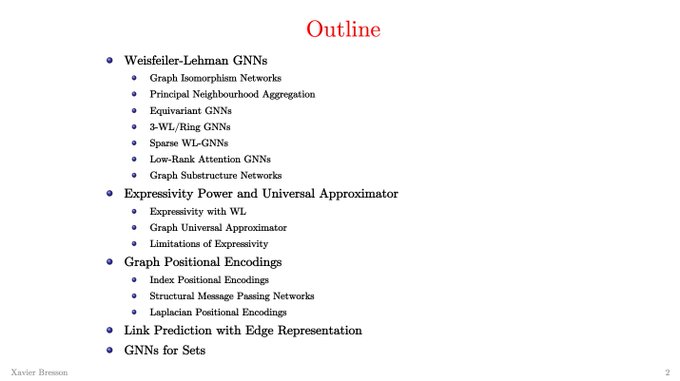

Great resource for students trying to understand a bit more about GNNs

Sharing my lecture slides on "Recent Developments of Graph Network Architectures" from my deep learning course. It is a review of some exciting works on GNNs published in 2019-2020.

#feelthelearn


This is really cool work! Highly recommend


@docmilanfar At Purdue, the answer I get is student affordability. We have frozen tuition for 13 years now. All endowment interest goes to cover the gap.

I am sure Fei-Fei also gets some answer. Her plea is probably also partially directed at Stanford brass.


This is an awesome resource on temporal graph learning! Highly recommended

Check out our new blog post summarising key advances in Temporal Graph Learning over the last 12 months!

Written with amazing co-authors @shenyangHuang @michael_galkin


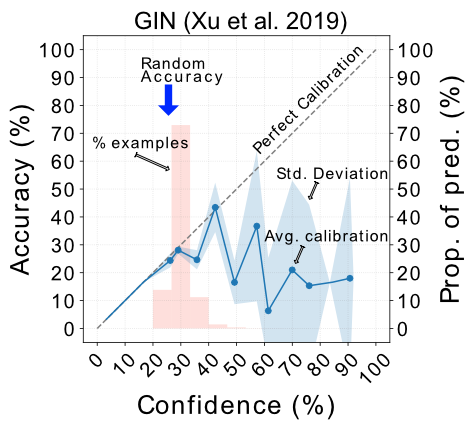

In need of a harder classification task for your GNN? It is also useful for checking the calibration of your GNN. #calibrationalsomatters

(w/ @leolvt @BJalaian) #NeurIPS2019

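Since the tweet points at checking GNN calibration: one widely used calibration metric is the expected calibration error (ECE), which bins predictions by confidence and averages the gap between accuracy and mean confidence in each bin, weighted by bin size. A minimal sketch assuming equal-width bins; the function name and the 10-bin default are illustrative choices, not taken from the paper.

```python
def expected_calibration_error(confidences, predictions, labels, n_bins=10):
    """ECE = sum over bins of (bin size / n) * |accuracy(bin) - mean confidence(bin)|."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, pred, label in zip(confidences, predictions, labels):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 falls in the last bin
        bins[idx].append((conf, pred == label))
    ece = 0.0
    for members in bins:
        if members:
            accuracy = sum(correct for _, correct in members) / len(members)
            mean_conf = sum(conf for conf, _ in members) / len(members)
            ece += len(members) / n * abs(accuracy - mean_conf)
    return ece


# Ten predictions at 90% confidence: 9 correct is calibrated, 5 correct is overconfident.
labels = [0] * 10
calibrated = expected_calibration_error([0.9] * 10, [0] * 9 + [1], labels)
overconfident = expected_calibration_error([0.9] * 10, [0] * 5 + [1] * 5, labels)
```

Here `calibrated` is (up to float rounding) 0, while `overconfident` is 0.4, the gap between 90% confidence and 50% accuracy.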

@docmilanfar Just for scale, since many students are curious:

Purdue's expenses are 2.2B. Endowment interest is ~0.13B/yr. If we spend on GPUs, we need to make up the gap, i.e., raise tuition.

Purdue gets ~390M/yr from the state, which is equivalent to an endowment of 14.4B. Not that far from 36B.

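The "equivalent endowment" figure implicitly assumes an annual payout rate. Using only the numbers in the tweet, a back-of-the-envelope check (not an official figure) gives an implied rate of roughly 2.7% per year:

$$
r \approx \frac{0.39\ \text{B/yr}}{14.4\ \text{B}} \approx 0.027 \quad (\approx 2.7\%\ \text{per year})
$$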

Great commentary by O'Bray, Horn, @Pseudomanifold, @kmborgwardt on the evaluation of Graph Generative Models (unlike images, we understand graph topology a lot better)

There should be a workshop with ERGM folks like Krista Gile, Handcock, and Snijders


@jure is proposing a promising direction with relbench, but there are also many other unexplored directions...


Same here for Graph representation learning: 5.5 is top 10%

To those disappointed with low neurips scores in their submissions, it may be reassuring for the rebuttal period to know that, in my batch as SAC, papers with an average score of 5.5 make it to the top 10%!

#neurips2022


This is definitely one of the most interesting works I have ever been involved in 👇

In 1927, Spearman (inventor of factorization) warned us not to use matrix factorization for (causal) recommendations… how should we do it then? Via 👉Causal Lifting👈

See @cottascience's thread


For folks writing follow-up work, some updates:

1. New task to showcase the fully inductive nature of the double equivariance link prediction approach

2. Theorem 4.10 was (obviously) showing the reverse relation (thx Jincheng Zhou!). This is now fixed


@zhu_zhaocheng @iclr_conf Just leave a public comment when it opens. Make sure to mention the AC in the comment.


Thanks @michael_galkin for the invitation. This is indeed an exciting new direction in KG research!

There is still much to do in double equivariant architectures.

In our new Medium blog post with @XinyuYuan402 @zhu_zhaocheng and special guest @brunofmr we explore

- the theory of inductive reasoning

- foundation models for KGs

- and explain our recent ULTRA in more detail!


Excited to be spending the next two days at the workshop on Foundations of Fairness, Privacy and Causality in Graphs! 🚀

An exciting lineup of speakers at the workshop on Foundations of Fairness, Privacy and Causality in Graphs. Looking forward to hearing from @kaltenburger @bksalimi @berkustun @luchengSRAI @gfarnadi @brunofmr @KrishnaPillutla @yangl1u and others!


@dereklim_lzh @PetarV_93 For me at least,

1. How symmetries allow us to transfer zero-shot from training data to OOD test data.

E.g., adding an extra equivariance to knowledge graph models allows zero-shot domain transfer (to be presented @ GLFrontiers #NeurIPS2023)


If you're at @netsci2017 this Monday, come check out our workshop, Machine Learning in Network Science.

The final program for the workshop on Machine Learning in Network Science @netsci2017 is now available. Great lineup of speakers!


Congrats to my students @RyanLMurphy1 (co-advised with Vinayak Rao) and @balasrini32 for their #ICLR2019 paper on learnable pooling


@eliasbareinboim @tdietterich 1. 100% agree with @eliasbareinboim. Planck's principle cannot be the only way forward.

2. Real progress: tasks grounded in real scientific progress, not only immediate industry applications

3. Education: Deep Learning courses must teach causality, so students understand the limitations:


Great step by Xia et al. 🚀 formalizing the connection between neural nets that follow an SCM and whether they work for different causal tasks

The key challenge is creating representations that don’t feel like feature engineering… for GNNs we have clues on what it looks like


👇must-read

My experience performing proper experiments in ML papers: Reviewers will reject the paper, since it looks strange to them and doesn’t match prior results or how everybody does it.


My favorite Herb Simon quote. And it has deep implications for how FB and Twitter must use AI... to keep a potential attention cycle going. Can we design a better attention market for social media? Any interesting recent papers?


PSA:

It is surprising how many junior researchers don't know this. Your (New) Definitions & Theory statements *should* be self-contained.

It is not "useless repetition" to reintroduce all relevant variables in every statement. It is how it is supposed to be.


In an overly simplistic way, think of ChatGPT as a "conditional GAN" (Generative Adversarial Network).

You are the classifier it is trying to fool.

I imagine it would have the same failure modes as conditional GANs (mode collapse, etc.).


Cool work by @xbresson et al.! Hybrid (positional + structural) methods to get GNNs to be more expressive. Awesome to see #eigenvectors mixed with GNNs.

Making hybrids inductive is a challenge. Why is it hard to make positional embeddings inductive? There is too much we don’t know.


Thanks @bksalimi for hosting me at this incredible workshop! Really amazing work that you and your group are doing. I had a lot of fun!

Had the pleasure of hosting Bruno @brunofmr, Harsh @parikh_harsh_, and Benjie @benjiewang_cs at @HDSIUCSD for a mini workshop on causal inference from relational data. Special thanks to Bruno for his insightful talk and excellent tutorial on invariant theory and graph learning.


Consider submitting your work. Its previous versions have been a great forum to exchange ideas.

3rd edition of "Machine Learning in Network Science" @2019NetSci! Submit your abstracts! Information about the invited speakers will come over the next few days

@ciro @MartonKarsai @brunofmr @chanmageddon


What if we unlearn G-invariances?

1. NN starts w/ multiple G-invariances as priors

2. Given data, learning makes NN sensitive to G-invariances inconsistent with data

Occam's razor: "As G-invariant as possible but not more"

@mouli_sekar poster today 12pm ET, 4pm GMT #ICLR2021

1/ 🧵


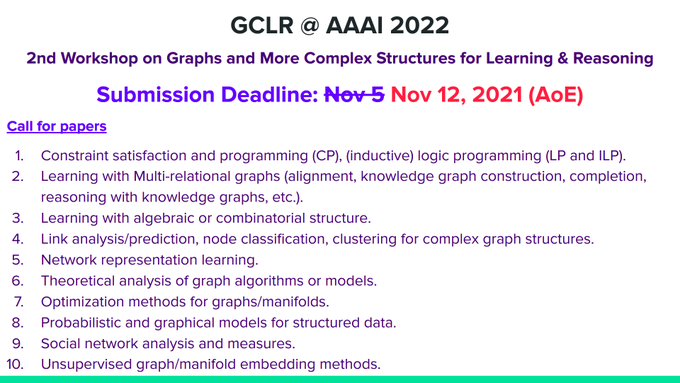

👇 Deadline extended

Call for papers is open for the workshop on Graphs & more Complex structures for Learning and Reasoning at AAAI-22 @RealAAAI

Extended submission deadline: Nov 12, 2021

More details at

@ravi_iitm @kerstingAIML @Sriraam_UTD @PhilChodrow @gin_bianconi


@ZakJost This means equivariant/invariant representations for eigenvectors must come from set representations. There are a few papers on procedures to get set representations from eigenvectors without having to resample:

E.g.:

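Context for the eigenvector point: a Laplacian eigenvector is only determined up to sign, so an eigensolver may return either v or -v, and a representation that should not depend on that arbitrary choice has to treat {v, -v} as a two-element set. A minimal sketch of one such set-style encoding, phi(v) + phi(-v) applied per node (in the spirit of sign-invariant architectures such as SignNet); `phi` is a hypothetical fixed feature map standing in for a learned network, and eigenspaces with multiplicity greater than 1, which need basis invariance rather than just sign invariance, are not handled.

```python
import math


def laplacian_matvec(adj, x):
    """Compute L x for the combinatorial Laplacian L = D - A, adj given as neighbor lists."""
    return [len(adj[i]) * x[i] - sum(x[j] for j in adj[i]) for i in range(len(adj))]


def phi(t):
    # Hypothetical stand-in for a learned network; it must not be an odd function,
    # otherwise phi(t) + phi(-t) would be identically zero.
    return math.tanh(1.5 * t + 0.3)


def sign_invariant_encoding(v):
    """Per-node encoding phi(v_i) + phi(-v_i), unchanged when v is replaced by -v."""
    return [phi(t) + phi(-t) for t in v]


path3 = [[1], [0, 2], [1]]  # path graph 0 - 1 - 2
s = 1 / math.sqrt(2)
v = [s, 0.0, -s]            # unit eigenvector of L with eigenvalue 1
neg_v = [-t for t in v]

print(laplacian_matvec(path3, v))      # equals 1 * v
print(laplacian_matvec(path3, neg_v))  # equals 1 * neg_v: -v is an equally valid eigenvector
print(sign_invariant_encoding(v) == sign_invariant_encoding(neg_v))  # True
```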

Today’s tutorial w/ @mouli_sekar (text 📜 + videos 📺) is a step-by-step guide to G-invariant neural networks, #eigenvectors + invariant subspaces + transformation #groups + Reynolds operator

We welcome any feedback

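For readers meeting the Reynolds operator for the first time: for a finite group G acting on inputs, it maps any function f to its group average, f̄(x) = (1/|G|) Σ_{g ∈ G} f(g·x), which is G-invariant by construction. A minimal sketch, assuming the cyclic group of rotations acting on a list; the function `f` and the helper names are hypothetical, and the sketch does not show how the tutorial builds G-invariant layers from invariant subspaces.

```python
def cyclic_shift(x, k):
    """Action of rotation g_k of the cyclic group C_n on a length-n list."""
    k %= len(x)
    return x[k:] + x[:k]


def reynolds(f):
    """Reynolds operator for C_n: average f over all n rotations of the input."""
    def f_bar(x):
        n = len(x)
        return sum(f(cyclic_shift(x, k)) for k in range(n)) / n
    return f_bar


def f(x):
    # An arbitrary function that is NOT rotation invariant.
    return 3 * x[0] + x[1] * x[2] - x[-1]


f_bar = reynolds(f)
x = [1, 2, 3, 4]
print(f(x), f(cyclic_shift(x, 1)))          # 5 17: f changes under rotation
print(f_bar(x), f_bar(cyclic_shift(x, 1)))  # 11.0 11.0: f_bar is invariant
```

Applying `reynolds` to an already invariant function leaves it unchanged, so the operator is a projection onto the space of G-invariant functions.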

I will be at the GLFrontiers workshop #NeurIPS2023 if anyone wants to chat about this.

I can imagine a graph ML paper being rejected in the future because a reviewer says "equivariances are irrelevant for graphs since there are no symmetries on real-world graphs, reject".
