Aakash Kumar Nain

@A_K_Nain

Followers

9,152

Following

676

Media

170

Statuses

11,808

Sr. ML Engineer | Keras 3 Collaborator | @GoogleDevExpert in Machine Learning | @TensorFlow addons maintainer | ML is all I do | Views are my own!

New Delhi, India

Joined October 2016


Pinned Tweet

After contributing and maintaining TF-addons for a while, here is the next big thing I always wanted to work on. I have been a Keras power user since 2016. Always wanted to build it along with the core devs. I finally got the chance to do it, and that too for all three major…

2

5

71

As promised, here is the first super clean notebook showcasing

@TensorFlow

2.0. An example of end-to-end DL with interpretability.

Cc:

@fchollet

@random_forests

@DynamicWebPaige

PS: Wait for more!

11

284

1K

How quickly can we build a captcha reader using deep learning? Check out for yourself the Captcha cracker built with

@TensorFlow

2.0 and

#Keras

6

120

464

I don’t like to brag about my code but I think I did a good job on this one. Apart from code quality, the mental model that an API provides plays a very important role, and Keras does that for me.

PS:

@fchollet

Thanks for the support and guidance 🙏🏼🙏🏼

4

19

284

A recent paper *Augmix* from Google and DeepMind people showed huge improvements in accuracy as well as robustness. The original code is in PyTorch, and I ported it to TF2.0. Check it out:

Cc:

@DanHendrycks

@balajiln

@barret_zoph

et al.

2

57

255

The diffusion models series is now on a proper blog, making it easier for people to access the material because GitHub still has issues rendering notebooks. Enjoy reading! More DDPMs posts coming soon! 🍻🍻

cc:

@rishabh16_

@RisingSayak

2

30

194

I am happy to announce that I have successfully completed the

#PoweredByTF

challenge by building a skin cancer detection app using

#TensorFlow

2.0 and

#tflite

Check it out:

3

28

179

AugMix in TF2.0

1. Fully modular code

2. Custom train/eval loops with tf.function

3. **Custom EarlyStopping for the custom train loop.**

4. Checkpoint manager

5. Parallelized data generators

@fchollet

@random_forests

2

30

151
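The "custom EarlyStopping for the custom train loop" item can be pictured with a small sketch. This is not the code from the AugMix port: the class name, the `patience` and `min_delta` parameters, and the stand-in `train` loop over precomputed validation losses are all assumptions made for illustration, written in plain Python so that it runs without TensorFlow.

```python
class EarlyStopping:
    """Hypothetical helper: stop when a monitored loss stops improving."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait after the last improvement
        self.min_delta = min_delta    # minimum decrease that counts as improvement
        self.best = float("inf")
        self.wait = 0

    def should_stop(self, current):
        """Record the latest loss and report whether training should stop."""
        if current < self.best - self.min_delta:
            self.best = current
            self.wait = 0
            return False
        self.wait += 1
        return self.wait >= self.patience


def train(val_losses, patience=2):
    """Stand-in for a custom loop: each entry plays the role of one epoch's
    validation loss. Returns (epochs actually run, best loss seen)."""
    stopper = EarlyStopping(patience=patience)
    epochs_run = 0
    for loss in val_losses:
        epochs_run += 1
        if stopper.should_stop(loss):
            break
    return epochs_run, stopper.best


if __name__ == "__main__":
    print(train([1.0, 0.8, 0.9, 0.85, 0.7], patience=2))
```

In a real custom loop, the per-epoch loss would come from the evaluation step rather than a list, and the point where `should_stop` returns `True` is also a natural place to restore the best checkpoint.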

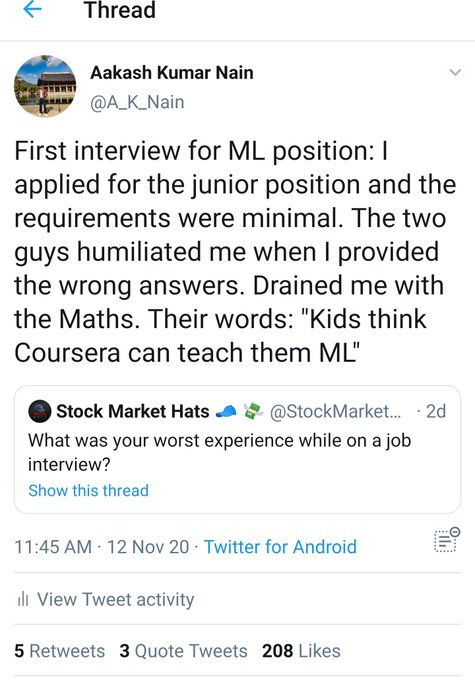

This arrived today! I absolutely love Pytest and always try to incorporate it in my workflow. Wanna read it right away but I have a blogpost to finish first. Thanks

@brianokken

for such a wonderful resource

4

7

144

With no MS/PhD and having not published any paper yet, I can totally relate to these points. Don't know how it will turn out in the end but the stress, the anxiety is real. To prove that you are good enough, one has to invest a lot of time in it apart from the day job.

Not good IMHO.

5

6

145

🔥 Tutorial alert! 🔥

As promised, here is the latest tutorial on parallelization and distributed training in JAX powered by Keras core!

💪😍

cc:

@SingularMattrix

@fchollet

2

21

129

Implementing Deeplab_v3 in TF2.0 was fun. As I said earlier, the functional API in tf.keras is all we need to do amazing things. Check it out:

cc:

@random_forests

@DynamicWebPaige

@fchollet

1

31

126

1/n: I think I never got a chance to properly thank the people who helped me in my ML journey. Given that the world is going through a difficult time, I think I should do it now. Thanks

1.

@AndrewYNg

for that ML course on coursera. (2015-16)

2.

@karpathy

for the exceptional CS231n

2

11

121

One of the most important concepts for which I have never found an intuitive explanation is the “Lipschitz continuity”. It would be great if

@3blue1brown

can make a video on that, but I doubt he is going to notice this tweet. So RT! 😅😅

6

33

122
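For reference, the standard definition behind the term (stated here as background, not taken from the tweet): a function $f$ is Lipschitz continuous if there is a constant $K \ge 0$ that bounds how fast its output can change relative to its input.

```latex
% f : X \to Y is K-Lipschitz if, for all x_1, x_2 in X,
\[
  \lVert f(x_1) - f(x_2) \rVert \;\le\; K \,\lVert x_1 - x_2 \rVert .
\]
% For a differentiable f : \mathbb{R} \to \mathbb{R} this is equivalent to
\[
  \lvert f'(x) \rvert \;\le\; K \quad \text{for all } x .
\]
```

Intuitively, the slope of any line drawn between two points on the graph of $f$ never exceeds $K$ in absolute value. For example, $\sin x$ is 1-Lipschitz, while $x^2$ is not Lipschitz on all of $\mathbb{R}$ because its slope grows without bound.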

No matter what people say, IMO research papers from

@GoogleAI

and

@facebookai

are among the best ones. Why?

1. Simple ideas

2. Explain most of the things clearly

3. Relatively short

4. Good ablation studies

The one I am reading now is 🔥

5

5

121

As promised, here is the first annotated paper. This would give you an overview of what all goes in my mind when I am reading a paper (may or may not be useful for you). cc:

@alisher_ai

@suzatweet

@bhutanisanyam1

PS: My handwriting isn't bad, I swear!😂

13

13

117

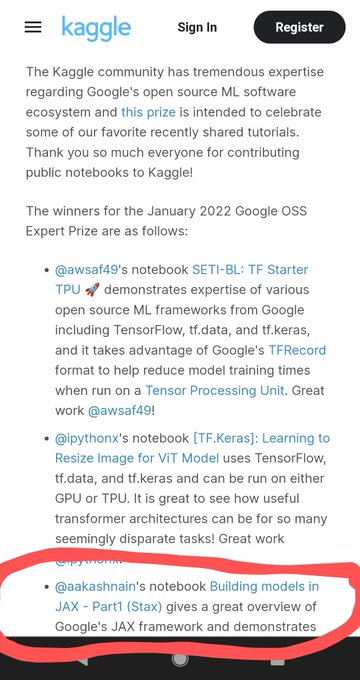

If you really want to learn about DS/ML then instead of following frauds like

@sirajraval

, do

@kaggle

You will learn so much within a few months.

Best part: People there really care about community and share knowledge selflessly

2

14

116

My maths isn't that bad but had there been someone like

@3blue1brown

to teach us during my school time, I bet I would have chosen Mathematician as my career.

PS: I have always loved Maths deeply. And ML/DL is something that I deeply care about, especially DL for vision

4

4

114

1/

Last month

@rishabh16_

and I decided to provide educational material for Diffusion Models.

Today we are happy to share the first notebook with you. This notebook is an optional read, and its purpose is to serve as a refresher on random variables.

Repo:

1

20

115

Back to

@kaggle

and here is another notebook where you can find how to override the `train` and `test` steps in a

@TensorFlow

(Keras) model to avoid writing functionality like custom callbacks, etc., for a custom training loop.

3

20

111

Keras 3 is another reminder of why the mental model matters. Developing an API is one thing, developing an API with a good mental model is a whole other level of engineering.

PS: It's always amazing to collaborate with

@fchollet

on OSS. Glad I have contributed to this! Go Keras! 💥

1

7

110

Keras was the first API where I placed my big bets (you can read that blogpost from 2017 on medium) when I started my ML journey. Keras is ❤️

Keras: The best high-level

JAX: The best low-level

Thanks

@fchollet

🍻🍻

0

7

98

This paper was one of the best papers I read a while ago. I annotated it as well. You can find the annotation here:

0

9

97

Nice work

@TensorFlow

team for cleaning up all the mess in the documentation and putting Keras and Eager first. Thanks

@fchollet

for such a great API for DL. Love for Keras will never end.

1

9

94

After trying out many, many things for many years, I realized that spending time on data pipelines (collection, cleaning, etc.) in a real-world scenario is far more important than replacing ResNet50 with something like EfficientNet and doing more and more hparam tuning.

2

4

90

I love doing ML research but I also wish to be as good as

@fchollet

@SingularMattrix

someday to be able to develop amazing libraries. Deep dive into Keras and JAX has been a wonderful ride so far 🥳🥳

4

1

87

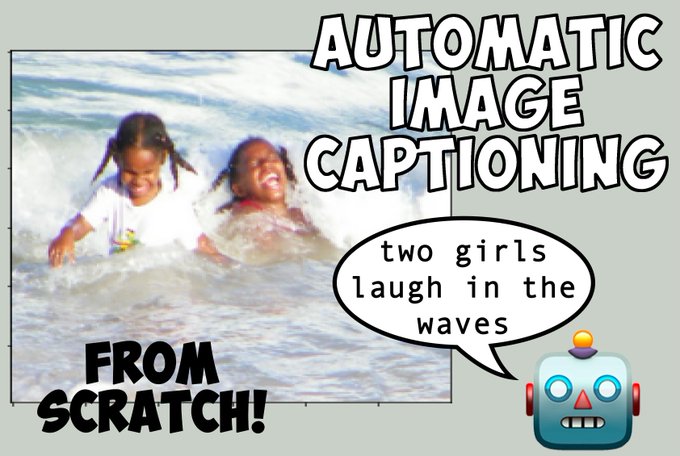

This is one of the most fun exercises I did last week. The best thing about a good API with a great mental model is that it makes the implementation of even complex tasks very easy. Keras is ❤️

7

12

85

Implemented **Integrated Gradients** in

@TensorFlow

. It was a fun exercise with lots of learnings involved from the original implementation. Also, submitted a PR to for the same. Check it out:

cc:

@fchollet

@GokuMohandas

0

13

84
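As a rough picture of what Integrated Gradients computes, here is a minimal sketch in plain Python. It is not the TensorFlow implementation mentioned above: the toy function `f`, its hand-written gradient `grad_f`, and the helper name `integrated_gradients` are assumptions chosen so the example runs without any ML framework. The attribution for feature $i$ is $(x_i - b_i)$ times the gradient averaged along the straight path from the baseline $b$ to the input $x$, approximated here with a midpoint Riemann sum.

```python
def integrated_gradients(grad_fn, x, baseline, steps=50):
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    grad_fn  : callable returning the gradient of the model output w.r.t. its input
    x        : input point (list of floats)
    baseline : reference point of the same length (for example, all zeros)
    """
    diff = [xi - bi for xi, bi in zip(x, baseline)]
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [bi + alpha * di for bi, di in zip(baseline, diff)]
        grads = grad_fn(point)
        avg_grad = [a + g / steps for a, g in zip(avg_grad, grads)]
    # Scale the path-averaged gradient by the input difference, per feature.
    return [d * g for d, g in zip(diff, avg_grad)]


def f(x):
    """Toy 'model' (hypothetical): sum of squares."""
    return sum(v * v for v in x)


def grad_f(x):
    """Analytic gradient of the toy model."""
    return [2.0 * v for v in x]


if __name__ == "__main__":
    x, baseline = [1.0, -2.0, 3.0], [0.0, 0.0, 0.0]
    attributions = integrated_gradients(grad_f, x, baseline)
    # Completeness: attributions should sum to f(x) - f(baseline).
    print(attributions, sum(attributions), f(x) - f(baseline))
```

With a real network, `grad_fn` would be replaced by automatic differentiation (for example, a gradient tape), and the number of steps would be tuned until the completeness gap is acceptably small.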

100+ GitHub stars on the diffusion models repo. Thank you everyone for the support. I hope you liked the content! 🙏🙏🥳🥳🍻

cc:

@RisingSayak

@rishabh16_

1

6

83

I have updated the TF-JAX tutorials repo. We have covered all the fundamental blocks that you need to learn to understand the two frameworks better. Also, this is the first repo which you can treat as a one-stop resource to learn about

@TensorFlow

and JAX.

2

12

75

As promised, here is a

@kaggle

notebook showcasing the use of an **Endpoint** layer for CTC loss function used for building a **Captcha Reader** in

@TensorFlow

cc:

@fchollet

@random_forests

@DynamicWebPaige

PS: PR for kerasio on the way!

0

11

76