Shuaicheng Zhang

@zshuai8_az

Followers: 54

Following: 118

Media: 2

Statuses: 53

PhD candidate at VT #GNN #LLMonGRAPHS

Blacksburg, VA

Joined March 2015

This is just absurd. How can this happen at NeurIPS?

Mitigating racial bias from LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference @NeurIPSConf. We have ethical reviews for authors, but missed it for invited speakers? 😡

0

0

0

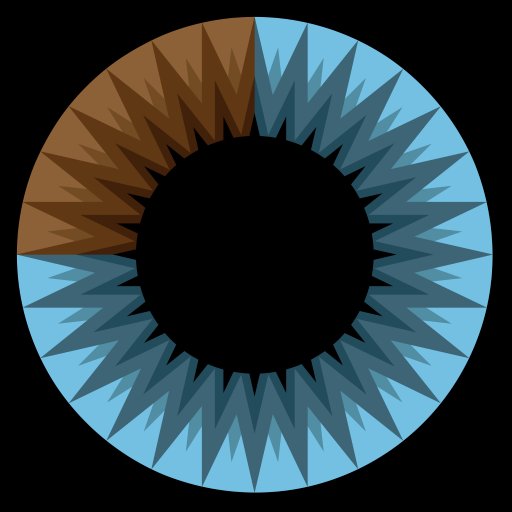

Best attention visualization ever

The next chapter about transformers is up on YouTube, digging into the attention mechanism: https://t.co/TWNXiWM2az The model works with vectors representing tokens (think words), and this is the mechanism that allows those vectors to take in meaning from context.

0

0

0

Vision-Flan: Scaling Human-Labeled Tasks in Visual Instruction Tuning

Despite vision-language models' (VLMs) remarkable capabilities as versatile visual assistants, two substantial challenges persist within the existing VLM frameworks: (1) lacking task diversity in pretraining

2

41

138

Have you ever done a dense grid search over neural network hyperparameters? Like a *really dense* grid search? It looks like this (!!). Bluish colors correspond to hyperparameters for which training converges, reddish colors to hyperparameters for which training diverges.

272

2K

10K

Introducing Sora, our text-to-video model. Sora can create videos of up to 60 seconds featuring highly detailed scenes, complex camera motion, and multiple characters with vibrant emotions. https://t.co/7j2JN27M3W Prompt: “Beautiful, snowy

9K

30K

132K

Announcing Sora — our model which creates minute-long videos from a text prompt: https://t.co/SZ3OxPnxwz

1K

3K

20K

❗❗❗Workshop Call for Papers❗❗❗ Interested in trustworthy learning on graphs? We are inviting contributions to the 2nd Workshop on Trustworthy Learning on Graphs (TrustLOG), co-located with WWW2024. Workshop website: https://t.co/uYElfQIBtH Deadline: February 13, 2024

1

9

23

New 𝐬𝐮𝐫𝐯𝐞𝐲 paper about "𝐋𝐋𝐌𝐬 𝐨𝐧 𝐆𝐫𝐚𝐩𝐡𝐬"! We provide a comprehensive overview of LLMs on graphs. We systematically summarize scenarios where LLMs are utilized on graphs and discuss specific techniques. paper:

3

25

109

🚀 Introducing MMMU, a Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI. https://t.co/vPw4beOeha 🧐 Highlights of the MMMU benchmark: > 11.5K meticulously collected multimodal questions from college exams, quizzes, and textbooks >

29

179

739

We published the list of papers accepted to #NeurIPS2023 here: https://t.co/TWLudQGKJE

5

89

366

Our new work ✨The Art of SOCRATIC QUESTIONING: Recursive Thinking with Large Language Models✨ is accepted to #EMNLP2023. Inspired by the human cognitive process, we propose SOCRATIC QUESTIONING, a divide-and-conquer style algorithm that mimics the 🤔recursive thinking process.

2

36

162

Nice work by Zhiyang, please give it a try if you want to benchmark multimodal instruction tuning!

Today we officially release ✨Vision-Flan✨, the largest human-annotated visual-instruction tuning dataset with 💥200+💥 diverse tasks. 🚩Our dataset is available on Huggingface https://t.co/XqFrpudysl 🚀 For more details, please refer to our blog

0

0

1

Today we officially release ✨Vision-Flan✨, the largest human-annotated visual-instruction tuning dataset with 💥200+💥 diverse tasks. 🚩Our dataset is available on Huggingface https://t.co/XqFrpudysl 🚀 For more details, please refer to our blog

2

16

48

Dive into ICML 2023 papers effortlessly! 🧐 Introducing a handy toy tool for paper collection and filtering. Whether you want the full list or targeted topics, this GitHub repo has got you covered:

github.com

List of papers on ICML2023. Contribute to XiaoxinHe/icml2023_learning_on_graphs development by creating an account on GitHub.

1

12

62

🤔 How can a Large Language Model (LLM) agent use diverse tools via tree search 🔍? In AVIS, we enable an LLM agent to dynamically traverse a transition graph with self-critique (when one path is not informative, it backtracks to the previous state). This achieves SOTA VQA results.

Today on the blog, read all about AVIS — Autonomous Visual Information Seeking with Large Language Models — a novel method that iteratively employs a planner and reasoner to achieve state-of-the-art results on visual information seeking tasks → https://t.co/LJuewikzJG

2

24

125