Cameron Witkowski

@witkowski_cam

Followers: 1K

Following: 2K

Media: 99

Statuses: 1K

Co-Founder @ai_bread 🍞

San Francisco, CA

Joined April 2023

It’s public! Grateful to be with a team attempting and performing the impossible day in, day out.

Announcing Bread Technologies. We’re building machines that learn like humans. We raised a $5 million seed round led by Menlo Ventures and have been building in stealth for 10 months. Today, we rise 🍞

We’re finally reaching the era of everyone training their own models based on open-source (versus relying on black box generalist APIs) and it is glorious!

Very interesting read, lots of fruit in on-policy distillation. My 2c: working on-policy teaches the student what *not* to do as much as what *to* do, thus correcting common & easy-to-make errors. I've found these ideas fascinating since I read the original GKD paper by Agarwal

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other

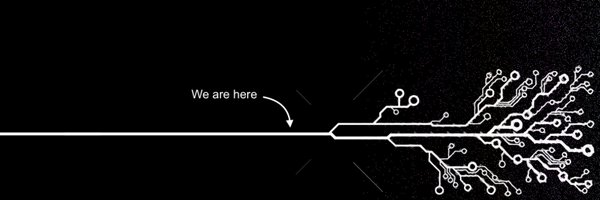

People think “machines that learn like humans” is as bold and ambitious as AGI. It’s actually way less. You don’t need a single model that knows everything and aces every benchmark. You just need a stepwise improvement with each little bit of practice. Remember, humans start

As part of our recent work on memory layer architectures, I wrote up some of my thoughts on the continual learning problem broadly: Blog post: https://t.co/HNLqfNsQfN Some of the exposition goes beyond mem layers, so I thought it'd be useful to highlight separately:

Had not heard of context distillation when we wrote the paper back in 2024 but this is great stuff & way ahead of its time! Our initial paper showed us that prompts could in principle be converted into weight updates — and surprisingly fast with new advances like LoRA, and

Just read their paper. Looks like they re-invented an existing method known as context distillation (or merely re-branded it for their startup). No mention of prior work, sadly. Links to papers in thread.

AGI is a mirage. No single, static model can handle the full demands of the real world, which is messy and always changing. A paradigm shift is necessary. As the AI world wakes up to this reality, it’s worth looking back at Sutton’s *original* words from the Bitter Lesson,

I love Andrej’s clarification that the final AGI recipe includes an RL stage, but we still need new layers of breakthroughs to get there. AGI is still a research problem, not an engineering problem. Scaling compute 100× won’t magically make it happen. The lab that invents the

Why Prompt Baking is the only known method for sample-efficient, on-the-job learning. Our new blog post explores why no other method can achieve the same sample efficiency and composability as baking in prompts, and why we’re placing our long-term bets on this approach for

This baking technology from @ai_bread is worth checking out. Models should be able to update their weights based on prompting alone, and baking does that. The implications for ongoing change of internal representation based on experience are particularly interesting.

an incredibly cracked team dedicated to solving continual learning for artificial intelligence with some of the coolest research demos i've seen! super honored to be building with them to fix a problem i've long been thinking about - the best ideas don't get spoken about the

We stumbled upon the idea of Prompt Baking as a direct consequence of thinking about LLMs through the lens of LLM control theory. Baking has since emerged as a practically useful tool for actualizing control over inherently probabilistic LLM systems. Baking puts YOU in control.

@ai_bread Congratulations on the strong work. This sounds like it will make the LLM trustworthy to use and the operator accountable as well. The last time we heard from the two of you was the podcast https://t.co/vPKdvoHEBH You are delivering on your research; my eyes are on you, team Bread.

Excited to be working with this team and revolutionizing what it means to make AI smarter and better. The days of one-size-fits-all LLMs will be gone, time to build YOUR model.
