pun

@trenchie

Followers: 2K

Following: 676

Media: 12

Statuses: 481

dropped another small kernel for flash-attention 2. Missing some features (i.e. no persistent kernel launch, no jagged sequences, no causal masking), but the core concepts of the algorithm (while using the full power of tensor cores and TMAs) are covered in ~500 lines of code for.
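For readers who have not seen the algorithm, here is a rough pure-Python sketch of the core idea the post refers to: attention computed over K/V blocks with an online softmax, normalizing once at the end. It is not the kernel from the post and uses no tensor cores or TMA; the function names `reference_attention` and `tiled_attention` and the `block` parameter are made up for illustration.

```python
import math

def reference_attention(Q, K, V):
    """Plain softmax(Q K^T / sqrt(d)) V, materializing every score row."""
    d = len(Q[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) / total
                    for j in range(len(V[0]))])
    return out

def tiled_attention(Q, K, V, block=2):
    """Same result, but K/V are visited one block at a time (hypothetical sketch)."""
    d = len(Q[0])
    dv = len(V[0])
    out = []
    for q in Q:
        m = -math.inf      # running max of the scores seen so far
        l = 0.0            # running sum of exp(score - m)
        acc = [0.0] * dv   # unnormalized output accumulator
        for start in range(0, len(K), block):
            k_blk = K[start:start + block]
            v_blk = V[start:start + block]
            scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in k_blk]
            m_new = max(m, max(scores))
            scale = math.exp(m - m_new)  # rescales old state; 0.0 on the first block
            p = [math.exp(s - m_new) for s in scores]
            l = l * scale + sum(p)
            acc = [a * scale + sum(pi * v[j] for pi, v in zip(p, v_blk))
                   for j, a in enumerate(acc)]
            m = m_new
        out.append([a / l for a in acc])  # single normalization at the end
    return out
```

The tiled version never holds a full row of scores at once, which is what lets a real kernel keep its working set in fast on-chip memory; everything else a real kernel does (masking, scheduling, hardware-specific loads) is left out here.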

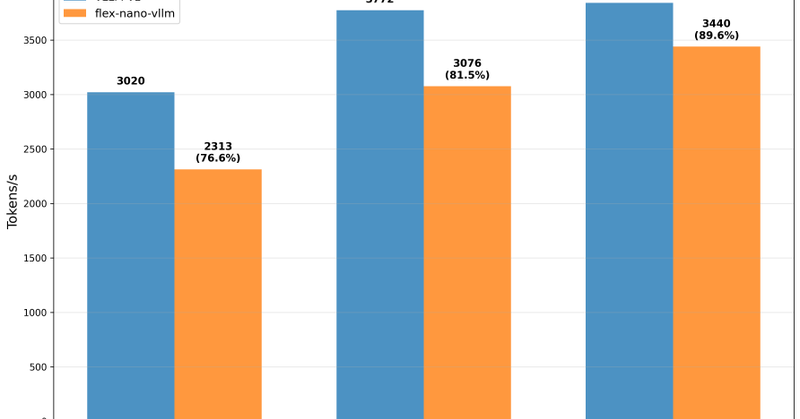

RT @ChangJonathanC: while we wait for gpt-5 to drop. Here is a flex attention tutorial for building a < 1000 LoC vllm from scratch. https://….

jonathanc.net

PyTorch FlexAttention tutorial: Building a minimal vLLM-style inference engine from scratch with paged attention

RT @peterboghossian: One deliverable from Peter Thiel’s talk: If it’s forbidden to be spoken about, it’s likely true.

there is no philosophy behind oxytocin, dopamine, and the myriads of other random shit that gets released in your brain during random events. all you can quantify is how much overall joy or pleasure it gives you and at what cost. we are animals.

Sex > Philosophy. This is what philosophers have been hiding all along. - Letter from friend to Machiavelli

so pretty.

📢 Incredibly excited to announce I signed with a publisher! My retro tycoon game 'Car Park Capital' will be published by @micro_prose! The one behind classics like Transport Tycoon and Civilization. Go watch the trailer! Thanks for the support so far! 🙏
