Tiago Pimentel

@tpimentelms

Postdoc at @ETH_en. Formerly, PhD student at @Cambridge_Uni.

Brasília, Brazil

Joined November 2009

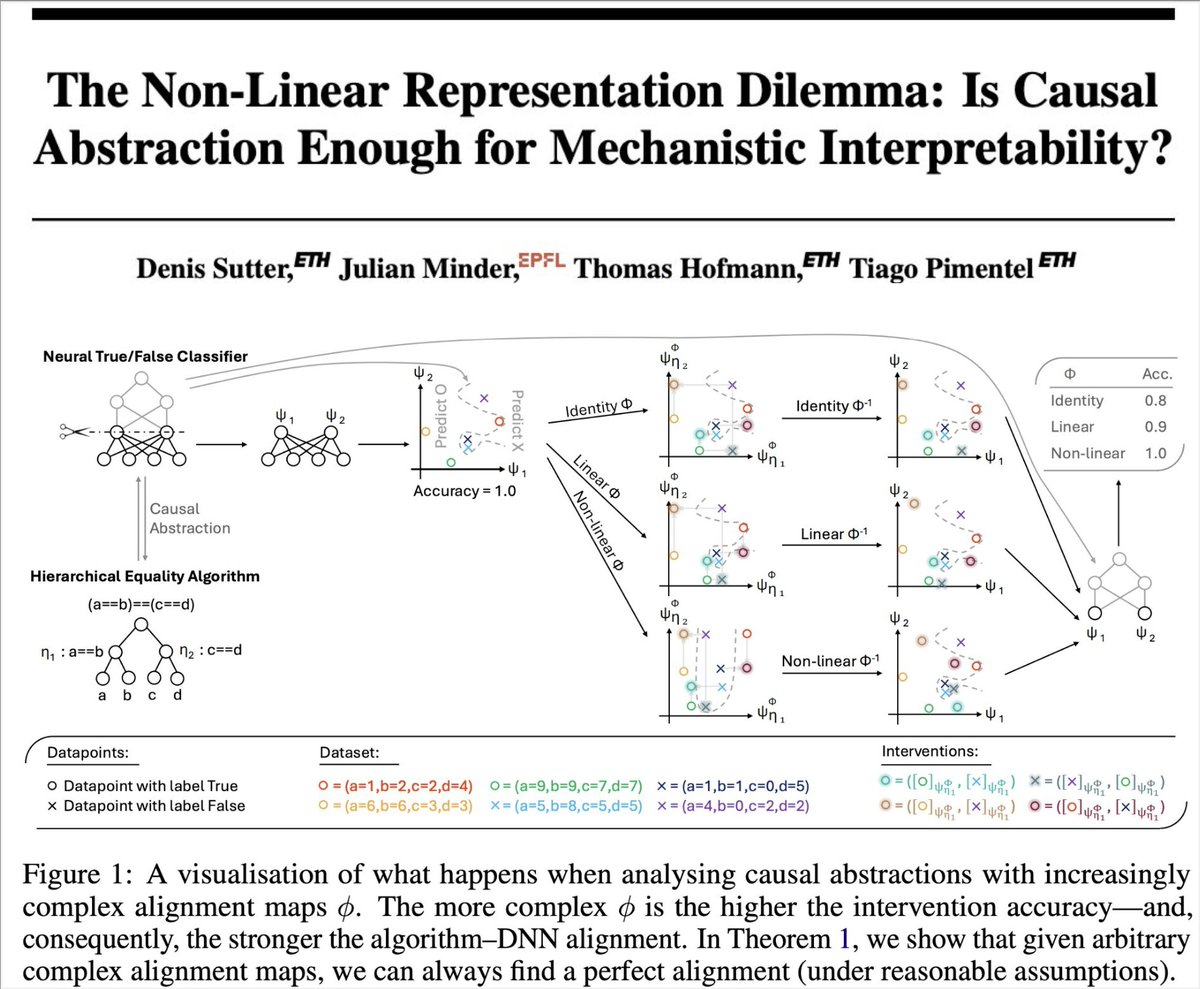

Mechanistic interpretability often relies on *interventions* to study how DNNs work. Are these interventions enough to guarantee the features we find are not spurious? No!⚠️ In our new paper, we show many mech int methods implicitly rely on the linear representation hypothesis🧵

I've recently been fascinated by tokenization, a research area in NLP where I still think there's lots of headway to be made! In an effort to encourage research, I made a small tokenizer eval suite (intrinsic metrics) with some features I found missing elsewhere:

github.com

Contribute to cimeister/tokenizer-analysis-suite development by creating an account on GitHub.

My first NLP lectures at Columbia are in the books! In our first two lectures, we went over (1) learning from text with a simple word vector language model, and (2) tokenization of text. Lecture notes are brand new and freely available on my website (links in thread.)

I’m excited to share that I'm joining Bar-Ilan University as an assistant professor!

Where can I cash in my $700k a year? :)

🎉 Double honors at #ACL2025! Tiago Pimentel @tpimentelms received two Senior Area Chair awards — a rare feat that speaks volumes about his contribution to computational linguistics. DINQ sees you.👀

Starting in one hour at 11:00! See you in Room 1.32

And I'm giving a talk at the @l2m2_workshop on Distributional Memorization, next Friday! Curious what that's all about? Make sure to attend the workshop!

Super excited for our new #ACL2025 workshop tomorrow on LLM Memorization, featuring talks by the fantastic @rzshokri @yanaiela and @niloofar_mire, and with a dream team of co-organizers @johntzwei @vernadankers @pietro_lesci @tpimentelms @pratyushmaini @YangsiboHuang !

L2M2 will be tomorrow at VIC, room 1.31-32! We hope you will join us for a day of invited talks, orals, and posters on LLM memorization. The full schedule and accepted papers are now on our website:

Honoured to receive two (!!) Senior Area Chair awards at #ACL2025 😁 (Conveniently placed on the same slide!) With the amazing Philip Whittington, @GregorBachmann1 and @weGotlieb, @CuiDing_CL, Giovanni Acampa, @a_stadt, @tamaregev

Looking forward to attending #cogsci2025! I’m especially excited to meet students who will be applying to PhD programs in Computational Ling/CogSci in the coming cycle. Please reach out if you want to meet up and chat! Email is best, but DM also works if you must. Quick 🧵:

What a wonderful project experience with a great team!

Honored to have received a Senior Area Chair award at #ACL2025 for our Prosodic Typology paper. Huge shout out to the whole team: @CuiDing_CL, @tpimentelms, @a_stadt, @tamaregev!

I'll be at #ACL2025 next week! Catch me at the poster sessions, eating sachertorte, schnitzel and speaking about distributional memorization at the @l2m2_workshop

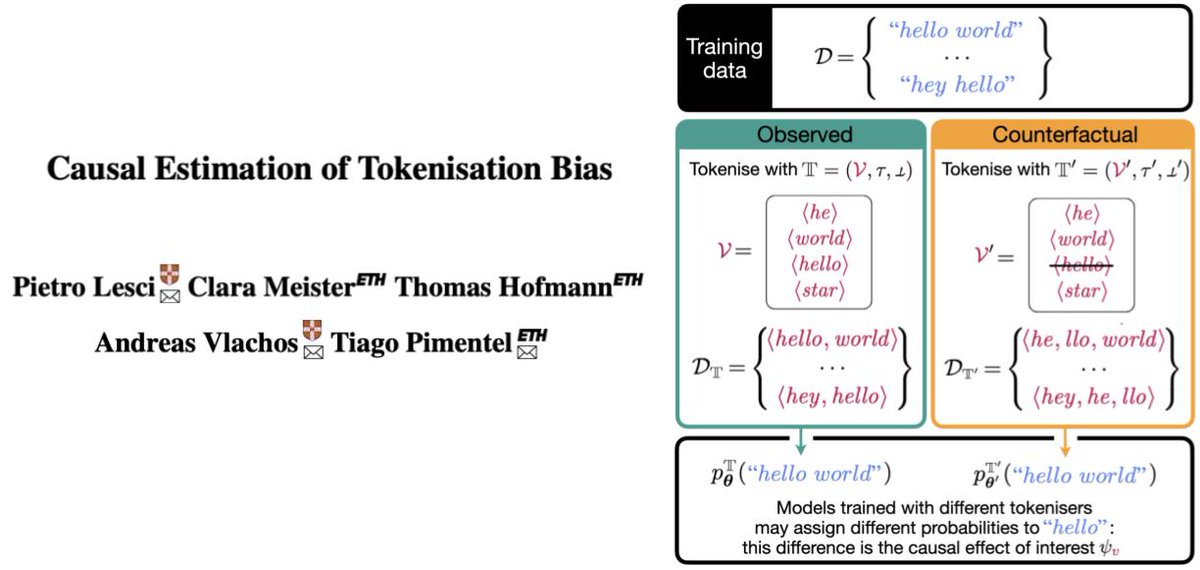

We are presenting this paper at #ACL2025 😁 Find us at poster session 4 (Wednesday morning, 11h~12h30) to learn more about tokenisation bias!

A string may get 17 times less probability if tokenised as two symbols (e.g., ⟨he, llo⟩) than as one (e.g., ⟨hello⟩)—by an LM trained from scratch in each situation! Our #acl2025nlp paper proposes an observational method to estimate this causal effect! Longer thread soon!

Philip will be presenting our paper "Tokenisation is NP-Complete" at #ACL2025 😁 Come to the language modelling session (Wednesday morning, 9h~10h30) to learn more about how challenging tokenisation can be!

BPE is a greedy method to find a tokeniser which maximises compression! Why don't we try to find properly optimal tokenisers instead? Well, it seems this is a very difficult—in fact, NP-complete—problem!🤯 New paper + P. Whittington, @GregorBachmann1 :)
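
To make the "greedy" part concrete, here is a minimal sketch of BPE-style training, assuming a whitespace-split toy corpus and character-level starting symbols. It is an illustration only, not the setup used in the paper; the function names (`most_frequent_pair`, `merge_pair`, `train_bpe`, `total_symbols`) are hypothetical. Each step merges the currently most frequent adjacent pair, which shortens the corpus locally but gives no guarantee of reaching the best possible compression.

```python
from collections import Counter


def most_frequent_pair(words):
    """Return the most frequent adjacent symbol pair, weighted by word count."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def train_bpe(corpus, num_merges):
    """Greedily apply up to `num_merges` merges (toy setup, assumed here)."""
    words = dict(Counter(tuple(w) for w in corpus.split()))
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:  # every word is already a single symbol
            break
        words = merge_pair(words, pair)
        merges.append(pair)
    return merges, words


def total_symbols(words):
    """Corpus length in symbols: the quantity compression tries to shrink."""
    return sum(len(symbols) * freq for symbols, freq in words.items())
```

Calling `train_bpe("hello hello hello help", 2)` and comparing `total_symbols` before and after shows the corpus shrinking with each merge. The greedy choice is only locally best, which is the gap between this procedure and a properly optimal tokeniser.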

Headed to Vienna for #ACL2025 to present our tokenisation bias paper and co-organise the L2M2 workshop on memorisation in language models. Reach out to chat about tokenisation, memorisation, and all things pre-training (esp. data-related topics)!

All modern LLMs run on top of a tokeniser, an often overlooked “preprocessing detail”. But what if that tokeniser systematically affects model behaviour? We call this tokenisation bias. Let’s talk about it and why it matters👇 @aclmeeting #ACL2025 #NLProc

Life update: I’m excited to share that I’ll be starting as faculty at the Max Planck Institute for Software Systems (@mpi_sws_) this Fall!🎉 I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: https://t.co/K2LGYAEkSa

I'm in Vancouver for TokShop @tokshop2025 at ICML @icmlconf to present joint work with my labmates, @tweetByZeb, @pietro_lesci and @julius_gulius, and Paula Buttery. Our work, ByteSpan, is an information-driven subword tokenisation method inspired by human word segmentation.

I will also be sharing more tokenisation work from @cambridgenlp at TokShop, this time on Tokenisation Bias by @pietro_lesci and @vlachos_nlp, @clara__meister, Thomas Hofmann and @tpimentelms.

Causal Abstraction, the theory behind DAS, tests if a network realizes a given algorithm. We show (w/ @DenisSutte9310, T. Hofmann, @tpimentelms) that the theory collapses without the linear representation hypothesis—a problem we call the non-linear representation dilemma.

We're working on some interesting follow-up work :) So make sure to follow @DenisSutte9310 if you are interested! Also, Denis is completing his master's soon and is considering what to do next. I'd definitely hire him as a PhD student if I could!

Interventions are not a silver bullet for mechanistic interpretability research: assuming models can encode information nonlinearly, we can prove any model implements (or causally abstracts) any algorithm, making the statement vacuous! Check out @DenisSutte9310 🧵 for details :)

1/9 In our new interpretability paper, we analyse causal abstraction—the framework behind Distributed Alignment Search—and show it breaks when we remove linearity constraints on feature representations. We refer to this problem as the Non-Linear Representation Dilemma.
