Tom Dupré la Tour

@tomdlt10

551 Followers · 286 Following · 16 Media · 131 Statuses

LLM interpretability @openai, previously neuron and fMRI interpretability @gallantlab, neurophysiology @agramfort, machine learning for @scikit_learn

San Francisco, CA

Joined December 2014

RT @MilesKWang: We found it surprising that training GPT-4o to write insecure code triggers broad misalignment, so we studied it more. We f…

RT @nabla_theta: Excited to share what I've been working on as part of the former Superalignment team! We introduce a SOTA training stack…

RT @cathychen23: Do brain representations of language depend on whether the inputs are pixels or sounds? Our @CommsBio paper studies this…

RT @davederiso: Made an ultra fast DTW solver w Stephen Boyd @StanfordEng 🚀 Contributions:
1. Linear time
2. Continuous time formulation
3…

RT @tomamoral: 📢NEW PAPER ALERT📢 Benchopt: Reproducible, efficient and collaborative optimization benchmarks 🎉 I…

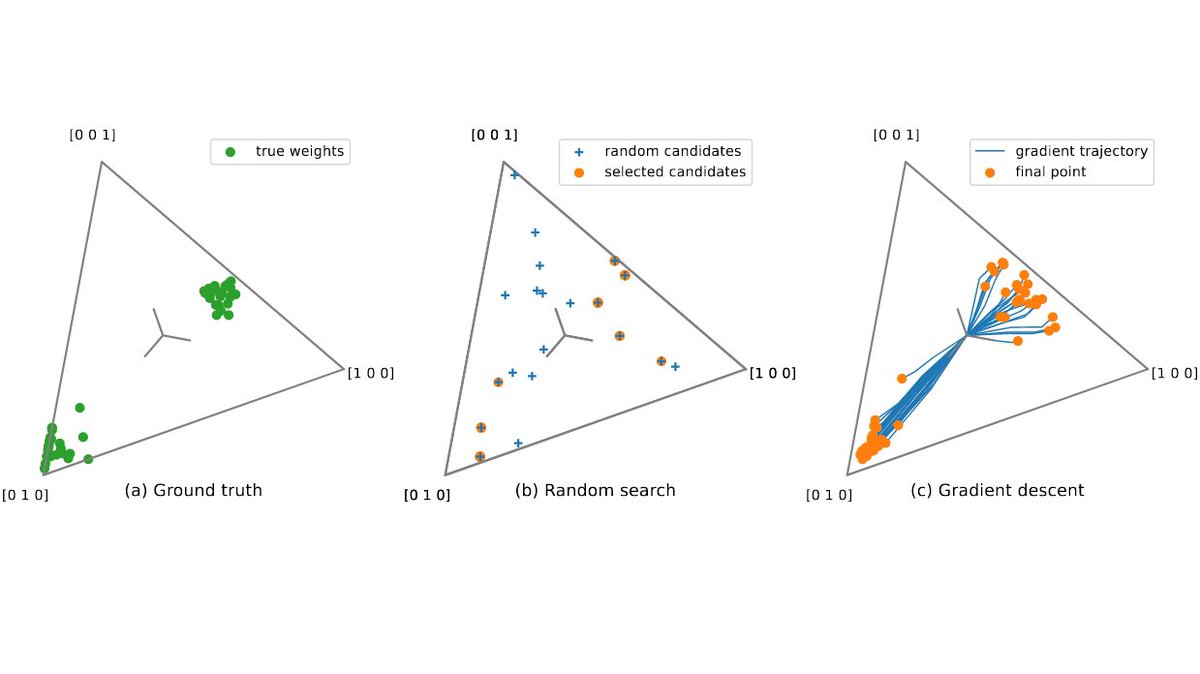

New preprint (with @meickenberg and @gallantlab): Feature-space selection with banded ridge regression.
Choose your preferred thread depending on your interest and technical background:
- Neuroimaging 🧵 below
- ML 🧵 at