tomaarsen

@tomaarsen

Followers: 4K

Following: 4K

Media: 311

Statuses: 1K

Sentence Transformers, SetFit & NLTK maintainer. Machine Learning Engineer at 🤗 Hugging Face.

Netherlands

Joined December 2023

‼️ Sentence Transformers v5.0 is out! The biggest update yet introduces Sparse Embedding models, encode method improvements, a Router module for asymmetric models & much more. Sparse + Dense = 🔥 hybrid search performance! Details in 🧵

For those who just want the full release notes: Otherwise, keep reading 🧵.

github.com

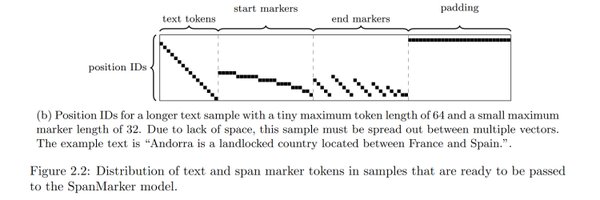

This release introduces 2 new efficient computing backends for SparseEncoder embedding models: ONNX and OpenVINO + optimization & quantization, allowing for speedups up to 2x-3x; a new "n-...

RT @dylan_ebert_: OpenAI just released GPT-OSS: An Open Source Language Model on Hugging Face. Open source meaning: 💸 Free. 🔒 Private. 🔧 Cust…

Check out SetFit here:

github.com

Efficient few-shot learning with Sentence Transformers - huggingface/setfit

There's a new, strong multilingual ColBERT model! Trained for English, German, Spanish, French, Italian, Dutch, and Portuguese. I think this'll be my new recommendation for a multilingual Late Interaction/ColBERT model.

SauerkrautLM-Multi-Reason-ModernColBERT. Multilingual, reasoning-capable late interaction retriever family.
- First ColBERT-style retriever to apply LaserRMT for low-rank approximation.
- Distilled from Qwen/Qwen3-32B-AWQ using 200K synthetic query-document pairs, scored by a

RT @lvwerra: Excited to share the preview of the ultra-scale book! The past few months we worked with a graphic designer to bring the blo…
