emi

@technoabsurdist

Followers: 799 · Following: 10K · Media: 43 · Statuses: 2K

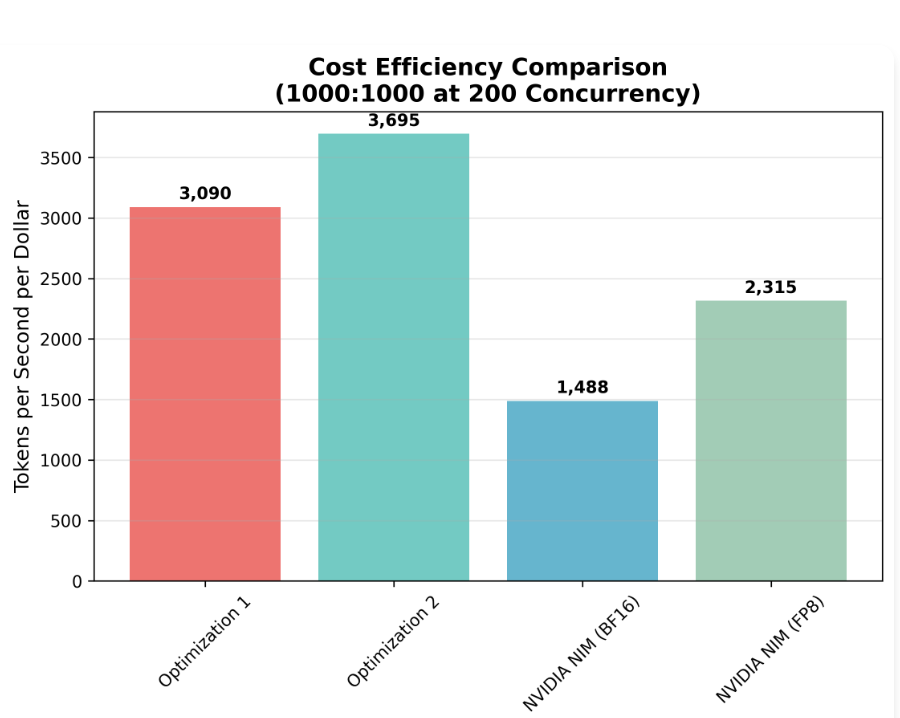

we built herdora because writing cuda sucks and hiring gpu engineers is impossible. we turn slow pytorch into fast gpu code, automatically. please reach out to emilio [at] herdora [dot] com if you want faster/cheaper inference.

Herdora (@herdora_ai) is the Cursor for CUDA. It automatically turns your PyTorch code into optimized GPU kernels so you don't have to write CUDA. Congrats on the launch, @technoabsurdist & @gpusteve!

RT @finbarrtimbers: Someone’s gonna release an actual “RL for kernel development” paper without measurement errors at some point and no one…

RT @tryfondo: 🚀 @herdora_ai launched! Cursor for CUDA. "Herdora turns your slow PyTorch into fast GPU code, automatically." 🌐 https://t.co…

tryfondo.com

👑 Herdora Launches: Cursor for CUDA

RT @gpusteve: 📜 ai doesn't run on just NVIDIA anymore - it’s running on many different chips, each with different quirks, tradeoffs, and sc…

RT @ycombinator: Herdora (@herdora_ai) is the Cursor for CUDA. It automatically turns your PyTorch code into optimized GPU kernels so you d…

RT @kenbwork: Reminds me a lot of the recent wave of (very successful) systems companies that rewrote popular frameworks like Kafka to take…

what's next at @herdora_ai: deeper kernel optimizations, advanced quantization techniques, and improved memory management. our ultimate goal: build the best hardware-agnostic tools for programming accelerators. break CUDA's software moat to lower industry costs, accelerate.

RT @tenderizzation: “let’s see what happens if I bump the project to the next major CUDA release”
