Hongyao Tang

@tanghyyy

Followers: 61

Following: 28

Media: 14

Statuses: 27

Associate Research Fellow at @TJU1895. Postdoc at @Mila_Quebec and REAL @MontrealRobots working with @GlenBerseth. Working on RL, Continual Learning.

Joined November 2021

RT @johanobandoc: Thank you all for stopping by our poster session at ICML! Due to visa issues, @tanghyyy couldn’t join us in person; howev…

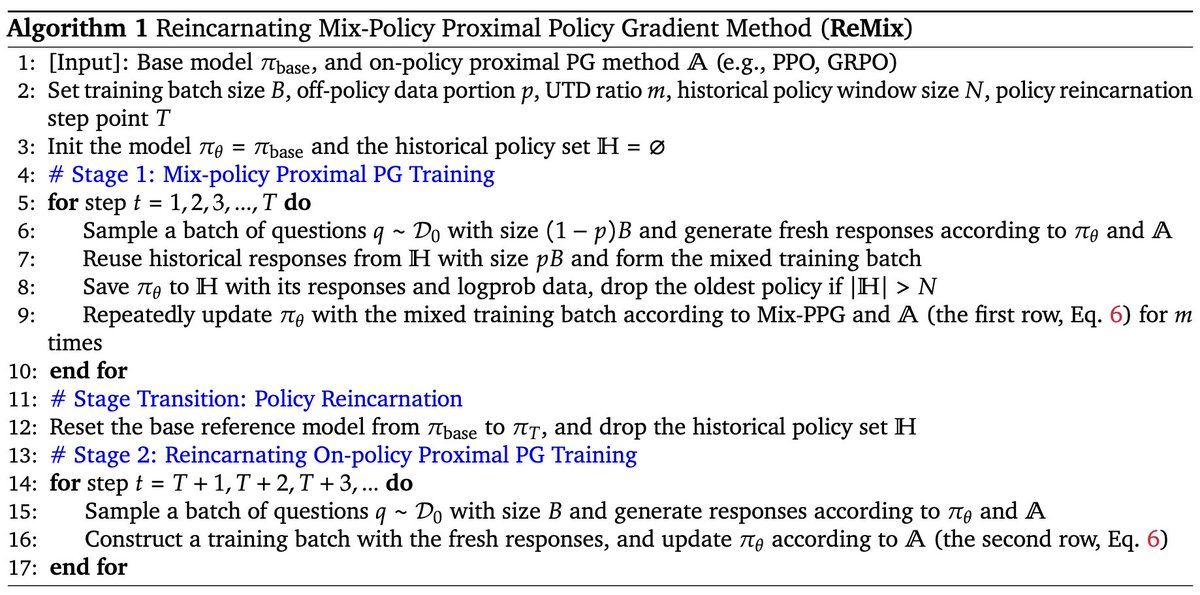

(1/8) ⚡️ Glad to share our paper “Squeeze the Soaked Sponge: Efficient Off-policy Reinforcement Finetuning for LLM”! #LLMs. LLM RFT is expensive. Popular methods are on-policy in nature and thus data-inefficient. Off-policy RL is a natural and intriguing choice. 👇🧵

RT @GlenBerseth: Being unable to scale #DeepRL to solve diverse, complex tasks with large distribution changes has been holding back the #R…

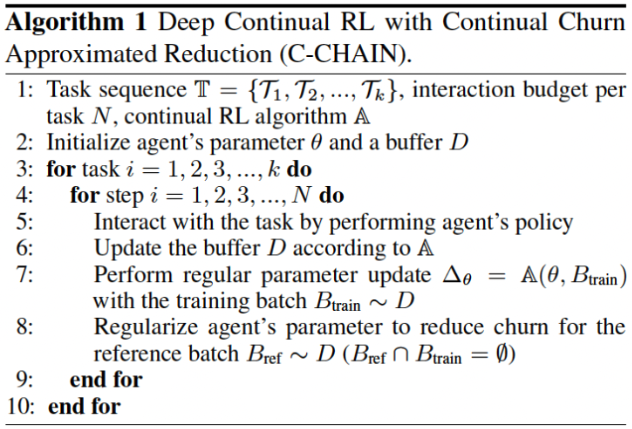

(8/8) C-CHAIN is simple, effective, and plug-and-play for any RL agent. 📘 Paper: This work was a fantastic collaboration with @johanobandoc, @AaronCourville, @pcastr and @GlenBerseth 🙌. #ICML2025 #RL #ContinualLearning #DeepRL.

arxiv.org

Plasticity, or the ability of an agent to adapt to new tasks, environments, or distributions, is crucial for continual learning. In this paper, we study the loss of plasticity in deep continual RL...

(5/8) 📊 C-CHAIN improves performance across 24 continual RL environments: 🎮 Gym Control, ProcGen, DeepMind Control Suite, MinAtar. It beats TRAC, L2 Init, Weight Clipping, LayerNorm, and AdamRel in most cases. ❤️ Acknowledgement to the public code of TRAC.

⭐ New Paper Alert ⭐ How can your #RL agent quickly adapt to new distribution shifts? And without ANY tuning? 🤔 We suggest you get on the Fast TRAC 🏎️💨, our new Parameter-free Optimizer that surprisingly works. Why? Website: 1/🧵

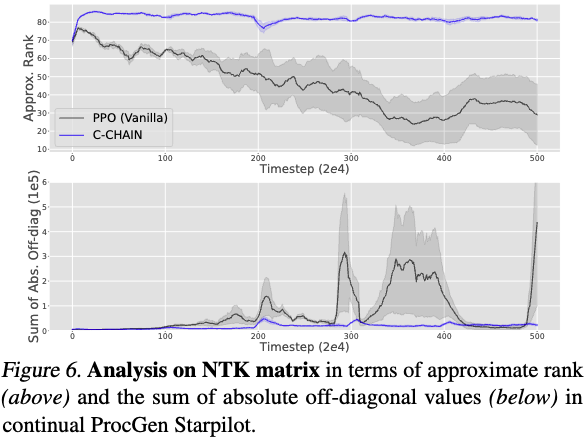

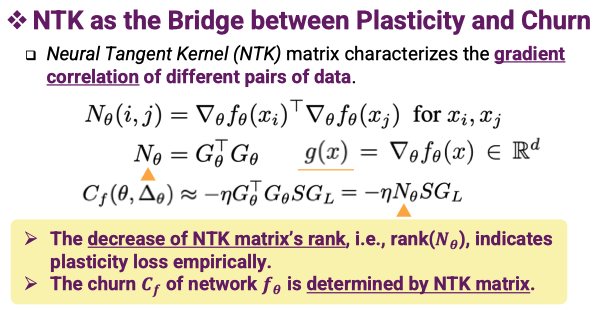

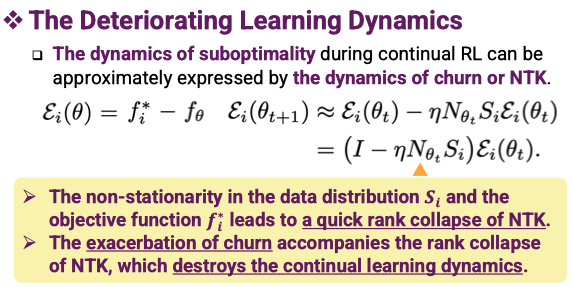

(1/8) 🔥 Excited to share that our paper “Mitigating Plasticity Loss in Continual Reinforcement Learning by Reducing Churn” has been accepted to #ICML2025! 🎉 RL agents struggle to adapt in continual learning. Why? We trace the problem to something subtle: churn. 👇🧵 @Mila_Quebec

RT @FanJiajun67938: 🔥 AI Model Can Self-evolve Without Collapse 🔥 Our ICLR 2025 paper introduces ORW-CFM-W2: the first theoretically sound…

A super great experience working with Prof. @GlenBerseth!

This work has been a huge effort by @tanghyyy. Link to the paper: Improving Deep Reinforcement Learning by Reducing the Chain Effect of Value and Policy Churn. Paper:
