Daehwa Kim

@talking_kim

Followers: 1K · Following: 2K · Media: 12 · Statuses: 88

PhD student @cmuhcii | Prev @Apple Robotics, @Meta @RealityLabs, @hcikaist. 🦾 Making sense of sensing, for people!

Joined April 2018

This research was done in amazing collaboration with @nneonneo and @hciprof ✨ You can find more details in our recently published #CHI2025 paper. Here are the preprint, full video, and code:

figlab.com

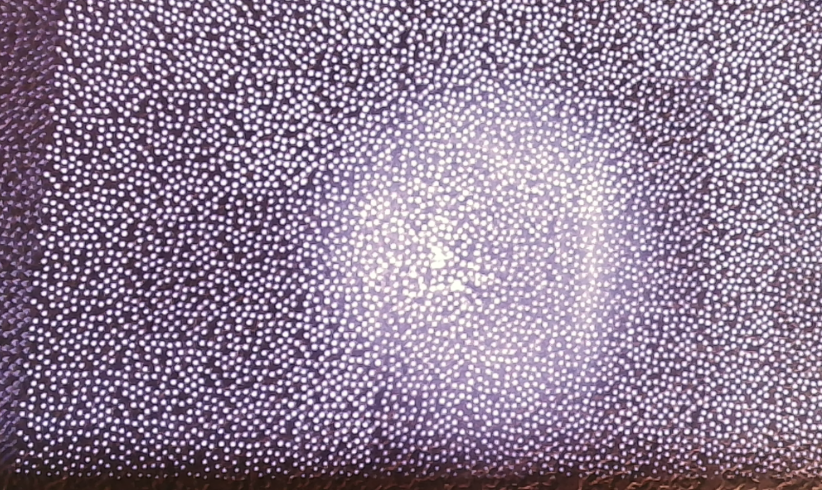

PatternTrack: Multi-Device Tracking Using Infrared, Structured-Light Projections from Built-in LiDAR

Yes, your iPhone already has a projector—using invisible light! 📱✨ In my latest research at @acm_chi, we repurpose its LiDAR dot pattern as a visual marker, enabling paired AR experiences without any online communication or pre-registration.

Anyway, people never bought the "cell phones with projectors" idea. But your iPhone does have a laser in it for producing a fixed dot pattern, which it uses with a low-resolution LiDAR for Face ID. It's just infrared, so you don't see it.

RT @huang_peide: 🚀 New Research on Human-Robot Interaction! 🤖 How can humanoid robots communicate beyond words? Our framework, EMOTION, le…

RT @seeedstudio: How can humanoid robots move smarter in crowded spaces? 🦾 Meet ARMOR, an egocentric perception system developed by @talkin…

RT @lukas_m_ziegler: 🚨 Apple joins the robotics race! Researchers from @CarnegieMellon and @Apple have developed a robot collision avoidan…

RT @simonkalouche: Occlusion will be a big challenge for robots operating in dense, obstacle rich environments common in manufacturing. U…

RT @fly51fly: [RO] ARMOR: Egocentric Perception for Humanoid Robot Collision Avoidance and Motion Planning. D Kim, M Srouji, C Chen, J Zhang…

RT @OWW: ARMOR: Egocentric Perception for Humanoid Robot Collision Avoidance and Motion Planning.

RT @CarnegieMellon: Say goodbye to dead batteries!⚡️ New tech being developed at @SCSatCMU uses your body to charge wearable gadgets. The…

fastcompany.com

A new Power-over-Skin technology invented at Carnegie Mellon University could change the way we charge our wearables.

This work was done at Carnegie Mellon and Apple, partially during my internship at Apple Robotics. I'm deeply grateful to my internship manager Mario Srouji and my amazing collaborators Chen Chen and Jian Zhang. @SCSatCMU. Paper, videos, and code (coming soon!) at

RT @realkaranahuja: 🔬 My lab at @NorthwesternU has a new website! Visit to see our latest research from CHI, ECCV &…

spice-lab.org

Explore our latest research projects and news at SPICE Lab.