Swaroop Nath

@swaroopnath6

Followers: 502
Following: 1K
Media: 48
Statuses: 614

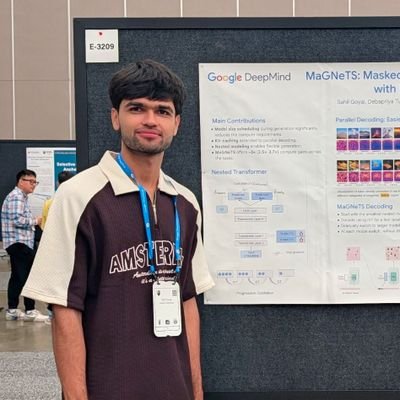

Pre-Doctoral Researcher @GoogleDeepMind India | Ex-AI Researcher @LinkedIn | NLP @cfiltnlp @CSE_IITBombay | Tweets about RL, NLP, RLHF, and general AI-ML

Joined March 2020

🚀New Paper Alert💥 Gathering human preferences for RLHF is costly (>20K in size). Preference is contextual: creative writing --> 👍, otherwise --> 👎. How can we achieve #alignment cheaply and quickly in a contextual setup? Paper 🔗 at the end. ❓ Can domain knowledge help? ✅ Yes ⬇️

Please consider applying to the program. Over two years, my research skills and my perspective on research have both been broadened and sharpened. This is an exceptional group in the way they groom you and give you room to explore wild ideas. Pls reach out if you have questions!

Thrilled to note that we are keeping the tradition of the awesome AI residency program alive in a new avatar: pre-doc researcher program at GDM-Blr -- with some amazing work done by our recent predocs including @gautham_ga_ @pranamyapk @puranjay1412 @sahilgo6801 @swaroopnath6

Heading out to @NeurIPSConf in San Diego in a couple of days. Would love to meet researchers working on Reasoning, Post-Training, etc. DM if you want to meet! If you have some kickass parties, please do send invites. Would love to attend and meet people :) #neurips25

This might sound like victim blaming, but part of the problem is people submitting too many papers. Could we impose a fee on submitters, conditioned on the number of resubmissions of the manuscript, the number of submissions in the past k conferences, ...? Let's collectively stop paper mills #ICLR2026

This was my experience in grad school, and now I've seen some evidence to suggest a trend 🤔

Seems like people are catching on to some of Transformers' shortcomings. We showed roughly 1.5 years ago that Transformers are bad at approximating smooth functions. Read more at arxiv.org:

Transformers have become pivotal in Natural Language Processing, demonstrating remarkable success in applications like Machine Translation and Summarization. Given their widespread adoption,...

Why Can't Transformers Learn Multiplication? This paper found that plain training never builds the long-range dependencies that multiplication requires. By adding a new auxiliary loss that predicts the "running sum", the model successfully learns multi-digit multiplication!

Professor Pushpak Bhattacharyya passed away this morning. This world has lost a great human being and a researcher. May he rest in peace.

Man, the whole thread 😆

Just read this new research paper from Google AI called "Attention is All You Need" and I think my brain is actually broken 🤯 All our best AI models are stuck processing language one word at a time, in order. It's this huge sequential bottleneck. These researchers just... threw

"There's no language out there in nature"? A bit unbelievable! Language, or rather communication, forms the basis of collective intelligence. What I do agree with is that next-token prediction is probably not teaching the model actual procedural knowledge.

Fei-Fei Li says, "There's no language out there in nature....There is a 3D world that follows laws of physics." Quite a few papers say similar things. AI models trained on linguistic signals fail when the task requires embodied physical common sense in a world with real

The lesson of the week is: Slow is smooth, smooth is fast. Probably one of the best productivity tips I have incorporated this year 🤩

Earlier, I curated this list of resources for the niche field of "Aesthetic Assessment of Graphic Designs": https://t.co/xKgfD2ZyDa. I try to keep it updated, as I think this very underexplored area has good directions for future research.

github.com

Collection of Aesthetics Assessment Papers for Graphic Designs. - sahilg06/Awesome-Aesthetics-Assessment

Not citing my work? Fine! Not citing my jokes? Unforgivable 🥷

If you're fine-tuning LLMs, Gemma 3 is the new 👑 and it's not close. Gemma 3 trounces Qwen/Llama models at every size!
- Gemma 3 4B beats 7B/8B competition
- Gemma 3 27B matches 70B competition
Vision benchmarks coming soon!

Great paper! But at the risk of seeming like reviewer 2, just two questions: 1. The specific instantiation of pass@k (max of rewards): won't it degenerate to having just one out of k samples correct? I am curious why this degeneration doesn't happen!

I didn't know version was a measure of evaluation 🫨 Waiting for reviewer #3 to ask for this plot now 🫠

Excited about our new work: Language models develop computational circuits that are reusable AND TRANSFER across tasks. Over a year ago, I tested GPT-4 on 200-digit addition, and the model managed to do it (without CoT!). Someone from OpenAI even clarified they NEVER trained

Whoever this reviewer is, please change your profession :) The aura debt on this is unreal

I’m building a new team at @GoogleDeepMind to work on Open-Ended Discovery! We’re looking for strong Research Scientists and Research Engineers to help us push the frontier of autonomously discovering novel artifacts such as new knowledge, capabilities, or algorithms, in an

job-boards.greenhouse.io