Simon Jegou

@simon_jegou

Followers: 559 · Following: 372 · Media: 23 · Statuses: 181

Senior LLM Technologist @NVIDIA. Views and opinions are my own.

Joined June 2016

RT @devoto_alessio: 🏆 Our @nvidia KV Cache Compression Leaderboard is now live! Compare state-of-the-art compression methods side-by-sid….

RT @igtmn: We've released a series of OpenReasoning-Nemotron models (1.5B, 7B, 14B and 32B) that set new SOTA on a wide range of reasoning….

RT @gonedarragh: AIMO-2 Winning Solution: Building State-of-the-Art Mathematical Reasoning Models with OpenMathReasoning dataset. abs: https….

Implementation is based on the excellent paper "An Optimal Strategy for Yahtzee" by @DrGlennNFA (2006): (2/2).

🎲 Did you know Yahtzee can be solved optimally in fewer than 100 lines of Python and in under 5 minutes on 2 vCPUs? I built a @Gradio app so you can try it yourself: (1/2).

huggingface.co
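
To illustrate why an optimal solver can stay this short, here is a minimal sketch of only the within-turn reroll optimization: a dynamic program over sorted dice multisets that, for a fixed end-of-turn score function, picks the keep with the highest expected score at each reroll. It is not the app's code and not the full algorithm from Glenn's paper, which also optimizes over which categories remain open and the upper-section bonus across all 13 turns. The names `roll_outcomes`, `sub_multisets`, `best_expected_score`, and the `yahtzee` scorer are hypothetical.

```python
from collections import Counter
from functools import lru_cache
from itertools import combinations, product


@lru_cache(maxsize=None)
def roll_outcomes(n):
    """Sorted outcomes of rolling n six-sided dice, paired with their probabilities."""
    counts = Counter(tuple(sorted(r)) for r in product(range(1, 7), repeat=n))
    return tuple((dice, c / 6 ** n) for dice, c in counts.items())


def sub_multisets(dice):
    """Every distinct group of dice that could be kept (dice must be sorted)."""
    return {c for r in range(len(dice) + 1) for c in combinations(dice, r)}


def best_expected_score(score, rolls=3):
    """Expected score of one turn under optimal rerolling, for a terminal score(dice)."""

    @lru_cache(maxsize=None)
    def value(dice, rolls_left):
        # Best expected score when holding `dice` with `rolls_left` rerolls left.
        if rolls_left == 0:
            return score(dice)
        # Keeping all five dice is one of the options, so stopping early is covered.
        return max(keep_value(keep, rolls_left) for keep in sub_multisets(dice))

    @lru_cache(maxsize=None)
    def keep_value(keep, rolls_left):
        # Expected value of holding `keep` and rerolling the remaining dice once.
        n = 5 - len(keep)
        return sum(p * value(tuple(sorted(keep + new)), rolls_left - 1)
                   for new, p in roll_outcomes(n))

    # The first roll always throws all five dice.
    return sum(p * value(dice, rolls - 1) for dice, p in roll_outcomes(5))


def yahtzee(dice):
    # Hypothetical single-category scorer: 50 points for five of a kind.
    return 50 if len(set(dice)) == 1 else 0


print(best_expected_score(sum))      # Chance-style scoring, ≈ 23.33
print(best_expected_score(yahtzee))
```

For Chance-style scoring (the sum of the dice) each die can be optimized independently, so the result should be 5 × 14/3 = 70/3 ≈ 23.33. Memoizing `keep_value` on the kept multiset (at most 462 distinct keeps per reroll) keeps the work small; a full solver repeats this kind of per-turn computation for each reachable scorecard state.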

RT @a_erdem4: logits-processor-zoo: New version (0.1.3) is out 🎉. You can now tune the thinking duration of R1 models and force them to sta….

RT @NVIDIAAIDev: The growing context windows of LLMs unlock new possibilities but pose significant memory challenges with linearly scaling….

RT @giffmana: This actually reproduces as of today. In 5 out of 8 generations, DeepSeekV3 claims to be ChatGPT (v4), while claiming to be D….

RT @pcuenq: Great post by nvidia (published on Hugging Face ❤️) about KVPress, a library to compress transformers' KV cache and thus reduce….

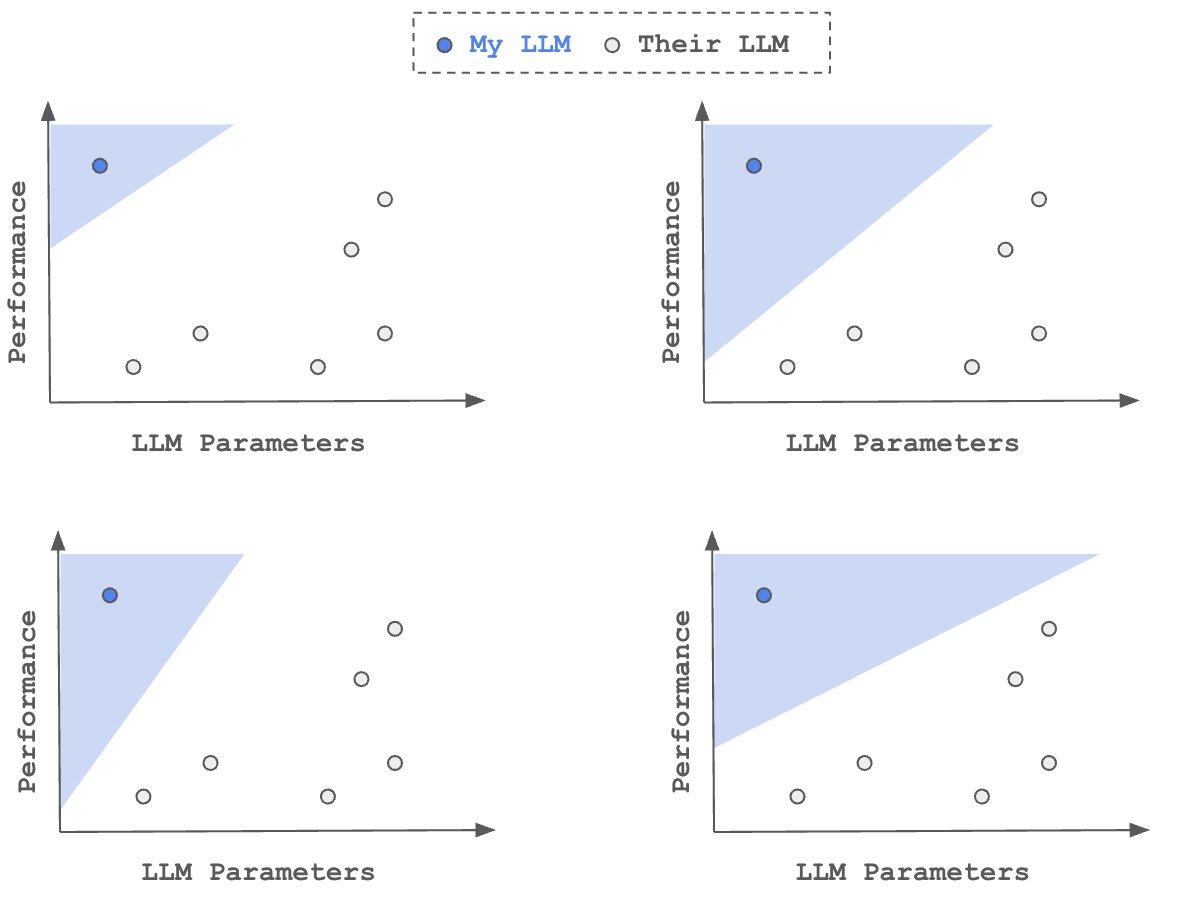

Fresh news from kvpress, our open-source library for KV cache compression 🔥
1. We published a blog post with @huggingface.
2. We published a Space for you to try it.
3. Following feedback from the research community, we added a bunch of presses and benchmarks.
Links 👇 (1/2)
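
The tweet does not show kvpress's API, so the following is only a generic, hypothetical sketch of one common family of KV cache compression methods: score each cached token and drop the lowest-scoring fraction. The helper names `prune_kv_cache` and `neg_key_norm`, the `compression_ratio` semantics (fraction of tokens removed), and the key-norm scoring heuristic are all assumptions; real caches hold per-layer, per-head tensors rather than Python lists.

```python
import math


def neg_key_norm(key):
    # Example score (an assumption): prefer keys with a small L2 norm.
    return -math.sqrt(sum(x * x for x in key))


def prune_kv_cache(keys, values, compression_ratio, score_fn):
    """Keep the highest-scoring (1 - compression_ratio) fraction of cached tokens."""
    n = len(keys)
    n_keep = n - int(n * compression_ratio)
    scores = [score_fn(k) for k in keys]
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:n_keep]
    kept = sorted(top)  # restore the original token order
    return [keys[i] for i in kept], [values[i] for i in kept]


keys = [[3.0, 4.0], [0.0, 1.0], [1.0, 1.0], [6.0, 8.0]]
values = ["v0", "v1", "v2", "v3"]
print(prune_kv_cache(keys, values, 0.5, neg_key_norm))
# → ([[0.0, 1.0], [1.0, 1.0]], ['v1', 'v2'])
```

Swapping `score_fn` is what distinguishes one scoring method from another in this sketch; the pruning step itself stays the same.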

RT @ariG23498: ⚠️1 million tokens on Llama 3-70B running in bfloat16 precision would take ~ 470 GB of memory. The KV cache alone accounts….
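
The ~470 GB figure can be roughly reproduced with back-of-the-envelope arithmetic. This sketch assumes Llama 3 70B's configuration of 80 layers, 8 key-value heads (grouped-query attention), and a head dimension of 128, uses 2 bytes per bfloat16 value, approximates the weights as 70B parameters × 2 bytes, and ignores activations and runtime overhead. `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_tokens, num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_value=2):
    # 2x: every layer caches one key and one value vector per KV head per token.
    # bytes_per_value=2 corresponds to bfloat16.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


weights_bytes = 70e9 * 2  # ~70B parameters in bfloat16 (approximation)
kv_bytes = kv_cache_bytes(1_000_000)

print(f"KV cache: {kv_bytes / 1e9:.1f} GB")                             # KV cache: 327.7 GB
print(f"Weights + KV cache: {(weights_bytes + kv_bytes) / 1e9:.1f} GB")  # Weights + KV cache: 467.7 GB
```

Under these assumptions the cache costs 320 KiB per token and grows linearly with context length, overtaking the weights at around 430k tokens.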

RT @ariG23498: Controlling text generation has always been a problem. What if I wanted my LLM to always end with a particular phrase, or wa….

New version of the notebook, using the Sinkhorn operator for a fully differentiable relaxation:

kaggle.com
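
A minimal sketch of a Sinkhorn operator, assuming the common log-space formulation: divide a square score matrix by a temperature `tau`, then alternately normalize rows and columns so the result approaches a doubly stochastic matrix, a continuous relaxation of a permutation matrix. The notebook's exact setup is not shown in the tweet. This pure-Python version computes only the forward pass; a differentiable version would be written in an autodiff framework so gradients flow through the iterations. `sinkhorn` and `logsumexp` are hypothetical names.

```python
import math


def logsumexp(xs):
    # Numerically stable log(sum(exp(x) for x in xs)).
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def sinkhorn(scores, n_iters=50, tau=1.0):
    """Map a square score matrix to an (approximately) doubly stochastic matrix."""
    n = len(scores)
    log_p = [[x / tau for x in row] for row in scores]
    for _ in range(n_iters):
        # Normalize rows in log space so each row sums to 1.
        row_lse = [logsumexp(row) for row in log_p]
        log_p = [[x - row_lse[i] for x in row] for i, row in enumerate(log_p)]
        # Normalize columns in log space so each column sums to 1.
        col_lse = [logsumexp([log_p[i][j] for i in range(n)]) for j in range(n)]
        log_p = [[log_p[i][j] - col_lse[j] for j in range(n)] for i in range(n)]
    return [[math.exp(x) for x in row] for row in log_p]


P = sinkhorn([[1.0, 2.0, 3.0], [0.0, 1.0, 0.0], [2.0, 0.0, 1.0]], n_iters=200)
for row in P:
    print([round(x, 3) for x in row])
```

Lowering `tau` pushes the output toward a hard permutation matrix, at the cost of needing more iterations to converge.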

How do you find the permutation of words that minimizes perplexity as measured by an LLM? In this year's @kaggle Santa, I shared an approach that moves to a continuous space where you can apply gradient descent using REINFORCE:

kaggle.com
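
A minimal sketch of the REINFORCE idea under stated assumptions. Permutations are sampled from a Plackett-Luce distribution with one score per word (an assumption, since the tweet does not say how the notebook parameterizes them), and `toy_cost` is a hypothetical stand-in for LLM perplexity that counts pairs out of order relative to a hidden target. The estimator `(cost - baseline) * grad log p` yields a gradient of the expected cost without differentiating the cost itself, which is what allows a black-box score to be optimized. All function names are hypothetical.

```python
import math
import random


def sample_permutation(scores, rng):
    """Sample from a Plackett-Luce distribution; return (perm, grad of its log-prob)."""
    remaining = list(range(len(scores)))
    perm, grad = [], [0.0] * len(scores)
    while remaining:
        m = max(scores[i] for i in remaining)
        weights = [math.exp(scores[i] - m) for i in remaining]
        total = sum(weights)
        probs = [w / total for w in weights]
        k = rng.choices(range(len(remaining)), weights=probs)[0]
        # d log softmax(chosen) / d s_i = 1[i == chosen] - p_i, over remaining items.
        for i, p in zip(remaining, probs):
            grad[i] -= p
        grad[remaining[k]] += 1.0
        perm.append(remaining.pop(k))
    return perm, grad


def toy_cost(perm, target):
    """Hypothetical stand-in for LLM perplexity: pairs out of order vs. target."""
    pos = {item: i for i, item in enumerate(target)}
    r = [pos[x] for x in perm]
    return sum(r[a] > r[b] for a in range(len(r)) for b in range(a + 1, len(r)))


def reinforce(n, cost_fn, steps=1000, batch=32, lr=0.1, seed=0):
    rng = random.Random(seed)
    scores = [0.0] * n
    for _ in range(steps):
        samples = [sample_permutation(scores, rng) for _ in range(batch)]
        costs = [cost_fn(perm) for perm, _ in samples]
        baseline = sum(costs) / batch  # mean batch cost, reduces gradient variance
        step = [0.0] * n
        for (_, grad), c in zip(samples, costs):
            for i in range(n):
                step[i] += (c - baseline) * grad[i] / batch
        # Gradient descent on the expected cost E[cost(perm)].
        scores = [s - lr * g for s, g in zip(scores, step)]
    return scores


target = [2, 0, 3, 1]
scores = reinforce(4, lambda p: toy_cost(p, target))
best = sorted(range(4), key=lambda i: -scores[i])
print(best)
```

Because the cost is treated as a black box, `toy_cost` could in principle be replaced by a function that scores each sampled word order with an LLM.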

Thanks @MichaelHassid for your contribution to kvpress, we hope other researchers will follow in your footsteps 🔥.

Great KV compression repo from @nvidia and @simon_jegou. Our TOVA policy from "Transformers are Multi-State RNNs" was just added there.
