Shiry Ginosar

@shiryginosar

Followers

1K

Following

332

Media

38

Statuses

188

Moving to @shiryginosar.bsky.social. Assistant Professor at TTIC. Visiting Faculty Researcher at Google DeepMind. Understanding intelligence, one pixel at a time.

Joined December 2018

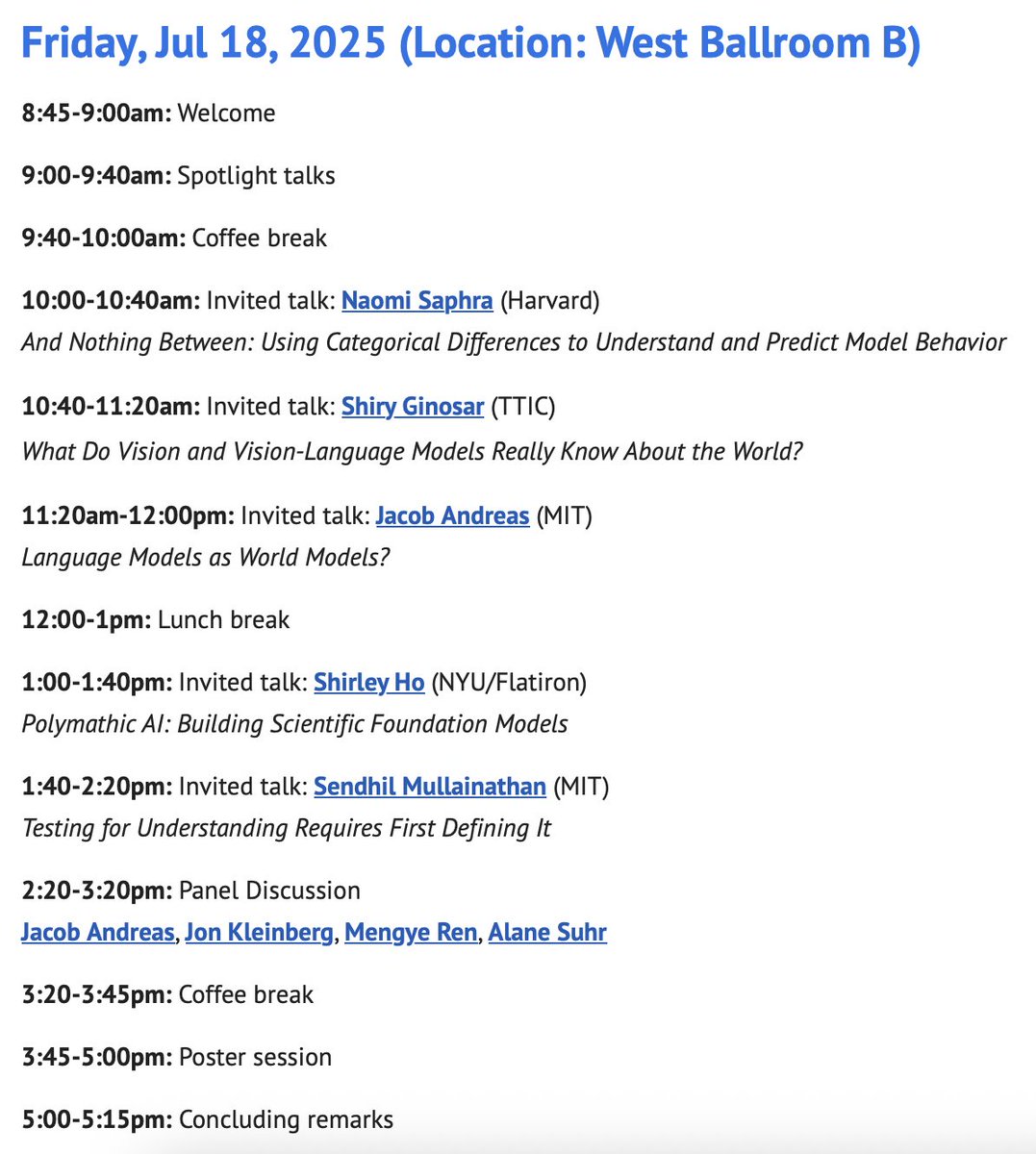

I am giving a talk this morning at 10:40AM PST as part of @WorldModelsICML at #ICML2025. Title: "What Do Vision and Vision-Language Models Really Know About the World?". Come join us!

worldmodelworkshop.org

Date: Friday, July 18, 2025. Time: 8:45am - 5:15pm (Pacific Time). Location: West Ballroom B at ICML 2025 in Vancouver, Canada (Same Floor as Registration).

1

0

5

RT @dimadamen: Join us for 3rd Perception Test Workshop & Challenge @ICCVConference #iccv2025. *NEW* this year: - 3 unified tracks - novel…

0

11

0

Held @ICCVConference in conjunction with GoogleDeepMind 3rd Perception Test Challenge. Amazing speakers: Ali Farhadi, @AlisonGopnik, @phillip_isola, Philipp Krähenbühl. Fantastically organized by @eunice_yiu_ and co.!

0

1

2

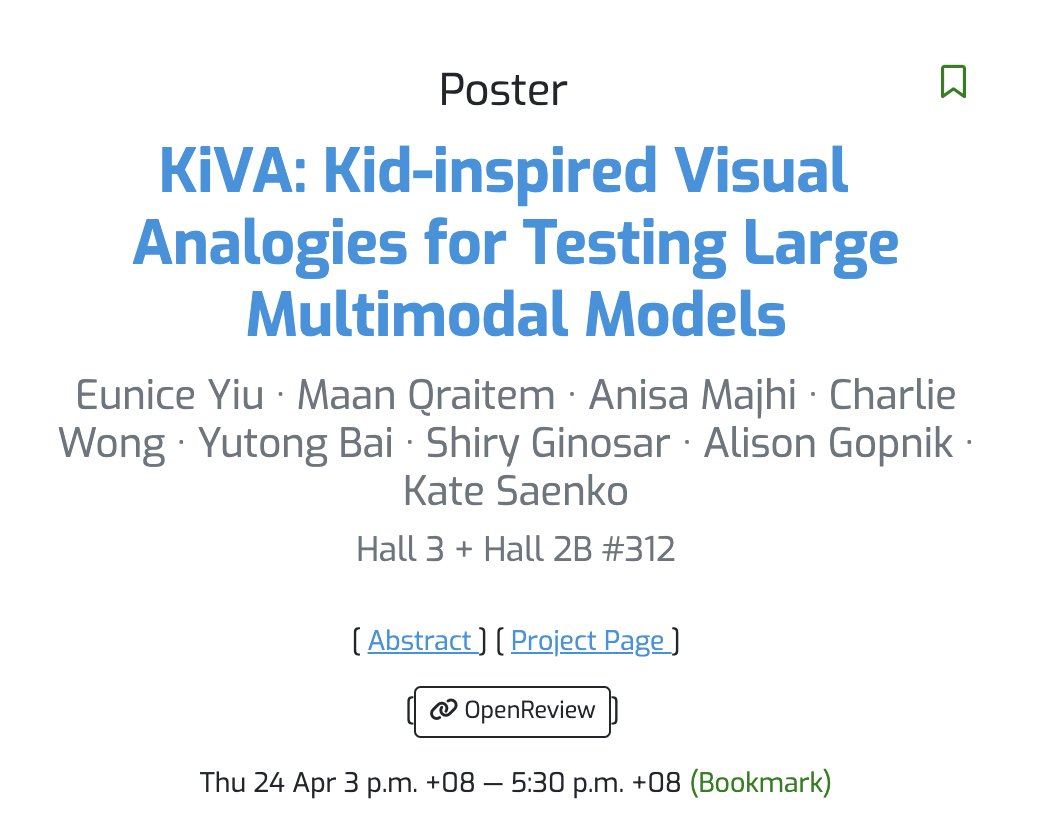

🧠 How “old” is your model? Put it to the test with the KiVA Challenge: a new benchmark for abstract visual reasoning, grounded in real developmental data from children and adults. 🏆 Prizes: 🥇 $1K to the top model. 🥈🥉 $500. 📅 Deadline: 10/7/25. 🔗 #ICCV2025

This year we have: 1) New "unified tracks": joint object/point tracking, joint action/sound localisation, and unified MC VideoQA; 2) A VLM interpretability track; 3) 2 guest tracks: KiVA image understanding and Physics-IQ video.

1

2

11

When it comes to goal-directed work, people prioritize controllable variability (a.k.a. empowerment!). But in undirected play, we shift toward embracing pure variability. Check out our forthcoming Phil. Trans. A (2026) paper!

Children & adults don't just explore—they seek empowerment: controllable and variable interventions. This intrinsic drive supports causal learning & generalization. 📄 Forthcoming in Phil. Trans. A (2026) @AlisonGopnik @KelseyRAllen @shiryginosar.

0

0

4

Our Poly-Autoregressive prediction framework is at @CVPR! Come learn how to use our framework to model your favorite problem as a multi-agent interaction! Talk: Agents in Interaction workshop, 4:15pm, Thurs. June 12. Poster #167, ExHall D, 10:30-12:30, Sat. June 14. #CVPR2025

Can we systematically generalize AR "word models" into "world models"? Our CVPR 2025 paper introduces a unified, general framework designed to model real-world, multi-agent interactions by disentangling task-specific modeling from behavior prediction.

0

0

2

The Artificial Social Intelligence Workshop is BACK at @ICCVConference in Hawaii 🌴! Join us for talks from amazing speakers & submit your papers for oral/poster presentations! 🗓️ Deadlines: Archival: 6/27. Extended Abstracts: 8/1. #AI #ICCV #Research

Excited to announce the Artificial Social Intelligence Workshop @ ICCV 2025 @ICCVConference. Join us in October to discuss the science of social intelligence and algorithms to advance socially-intelligent AI! Discussion will focus on reasoning, multimodality, and embodiment.

0

7

15

Think LMMs can reason like a 3-year-old? Think again! Our Kid-Inspired Visual Analogies benchmark reveals where young children still win. Catch our #ICLR2025 poster today to see where models still fall short! Thurs. April 24, 3-5:30 pm, Halls 3 + 2B, #312

New paper: KiVA: Kid-inspired Visual Analogies for Testing Large Multimodal Models. We present KiVA, a simple benchmark for visual analogies that even 3-year-olds can pass, but LMMs cannot. Paper: Benchmark: 1/7.

0

6

15

I completely agree - neuroscience must move to naturalistic stimuli in naturalistic settings.

My former PhD advisor Nachum Ulanovsky's new book, *Natural Neuroscience*, masterfully lays out the case that to truly understand brains we must study them in real-world, natural behaviors. A must-read for neuroscientists! @mitpress

0

0

0

RT @CarlDoersch: We're very excited to introduce TAPNext: a model that sets a new state-of-art for Tracking Any Point in videos, by formula….

0

57

0

Welcome, Nick!! We are so excited to have you join us!

I'm incredibly excited to share that I'll be joining @TTIC_Connect as an assistant professor in Fall 2026! Until then, I'm wrapping up my PhD at Berkeley, and after that I'll be a faculty fellow at @NYUDataScience.

0

0

1

RT @hila_chefer: VideoJAM is our new framework for improved motion generation from @AIatMeta. We show that video generators struggle with m….

0

201

0

RT @hxiao: Letter-dropping physics comparison: o3-mini vs. deepseek-r1 vs. claude-3.5 in one-shot - which is the best? Prompt: Create a Jav…

0

273

0

RT @QianqianWang5: Introducing CUT3R! An online 3D reasoning framework for many 3D tasks directly from just RGB. For static or dynamic sce…

0

113

0

RT @TTIC_Connect: Just steps from TTIC, Midway Plaisance Skate Park is the perfect spot to enjoy winter! ❄️ Enjoy the charm of Hyde Park an….

0

1

0

Fantastic work from @brjathu! With @endernewton, @ruilong_li, @cfeichtenhofer, and @JitendraMalikCV.

0

0

4

RT @JitendraMalikCV: I'm happy to post course materials for my class at UC Berkeley "Robots that Learn", taught with the outstanding assist….

youtube.com

Robot learning lecture series by Professor Jitendra Malik at University of California, Berkeley. Course TA: Toru Lin. Lecture slides and reading materials av...

0

251

0