Russell Kaplan

@russelljkaplan

Followers: 11,052 · Following: 652 · Media: 79 · Statuses: 513

Past: director of engineering @Scale_AI, startup founder, ML scientist @Tesla Autopilot, researcher @StanfordSVL.

San Francisco

Joined January 2013


I recently left @scale_AI. I'm so thankful to the team there and for @alexandr_wang's bet to acquire our startup nearly 4 years ago.

When I joined Scale, it was a single-product company building the data engine for autonomous vehicles. It's amazing to see how far Scale has come:


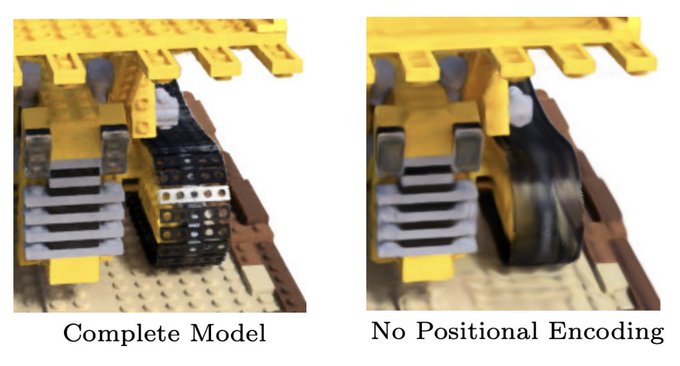

Hosting a Clubhouse tonight 8pm PT with @karpathy, @RichardSocher, and @jcjohnss on recent breakthroughs in AI. Will discuss image transformers, CLIP/DALL-E, and some other cool recent papers. Join at:


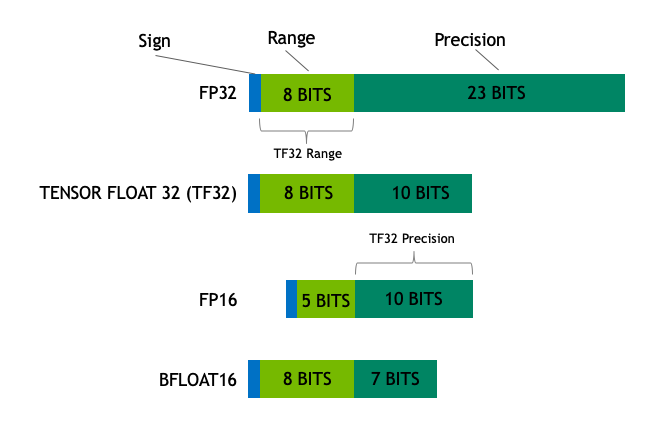

The new TF32 float format @NVIDIAAI announced today is a big deal. Same dynamic range as fp32 (8 exponent bits), same precision as fp16 (10 mantissa bits), with only 19 bits total and hardware-accelerated. Will be default mode of cuDNN going forward. 6x training speedup for BERT on new Ampere GPUs with no code changes!
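TF32 keeps fp32's 8-bit exponent, which gives it the same dynamic range, and fp16's 10-bit mantissa, which gives it the same precision. Adding the sign bit gives 1 + 8 + 10 = 19 bits. The Python sketch below emulates that rounding in software on ordinary fp32 values. It assumes round-to-nearest-even. `round_to_tf32` is a hypothetical helper, not an NVIDIA API; real hardware does this inside the tensor cores and may differ in rounding details.

```python
import struct

MANTISSA_BITS_FP32 = 23
MANTISSA_BITS_TF32 = 10

def round_to_tf32(x: float) -> float:
    """Round x to the nearest TF32 value (1 sign, 8 exponent, 10 mantissa bits).

    Sketch only: assumes round-to-nearest-even and ignores NaN/inf inputs.
    """
    # Reinterpret the fp32 encoding of x as a 32-bit unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    drop = MANTISSA_BITS_FP32 - MANTISSA_BITS_TF32  # 13 low mantissa bits to discard
    keep_lsb = (bits >> drop) & 1  # lowest kept bit, used to break ties toward even
    # Add just under half a TF32 ULP (plus the tie-breaker), then clear the dropped bits.
    bits += (1 << (drop - 1)) - 1 + keep_lsb
    bits &= ~((1 << drop) - 1)
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]
```

For example, `round_to_tf32(1 + 2**-11)` returns `1.0`, because a tie is rounded to even. By contrast, `round_to_tf32(1e38)` stays finite, far beyond fp16's maximum of 65504.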


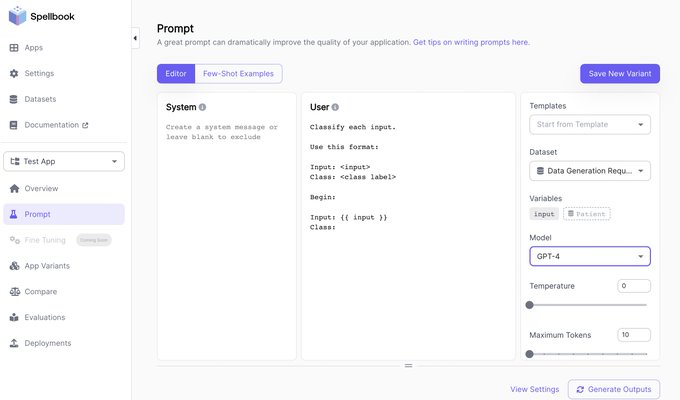

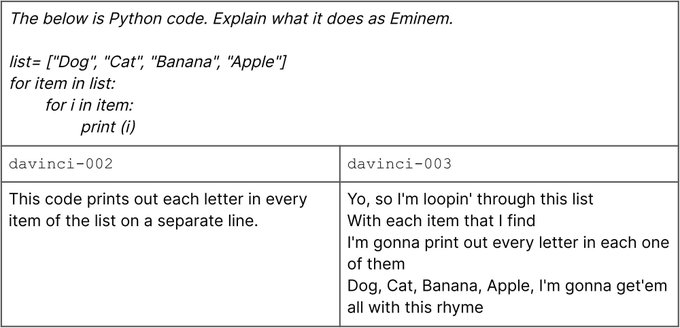

Excited to share what I’ve been working on lately: Scale Spellbook — the platform for large language model apps! Some fun things I learned about LLMs while building this product: 🧵

Large language models are magical. But using them in production has been tricky, until now.

I’m excited to share ✨Spellbook🪄✨, the platform for LLM apps from @scale_AI. 🧵


@sjwhitmore and team are building a key-value store to enable long-term memory in language model conversations.
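The tweet does not describe the design. Here is a hypothetical sketch of the idea, assuming facts are stored per user under string keys and then injected into each prompt so that a stateless model call can "see" earlier sessions. `ConversationMemory`, `remember`, `recall`, and `build_prompt` are illustrative names. A plain Python dict stands in for a persistent key-value database.

```python
from dataclasses import dataclass, field

@dataclass
class ConversationMemory:
    """Hypothetical long-term memory: a key-value map of facts per user."""
    store: dict[str, dict[str, str]] = field(default_factory=dict)

    def remember(self, user_id: str, key: str, value: str) -> None:
        # Upsert: a newer value for the same key overwrites the old one.
        self.store.setdefault(user_id, {})[key] = value

    def recall(self, user_id: str) -> dict[str, str]:
        # Return a copy so callers cannot mutate the store by accident.
        return dict(self.store.get(user_id, {}))

    def build_prompt(self, user_id: str, message: str) -> str:
        # Prepend remembered facts so the model can use them in this turn.
        facts = self.recall(user_id)
        memory = "\n".join(f"- {k}: {v}" for k, v in sorted(facts.items()))
        return f"Known facts about the user:\n{memory}\n\nUser: {message}\nAssistant:"
```

Keying facts rather than appending raw transcripts keeps the injected context small. It also lets a correction, such as a user moving cities, replace the stale value.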


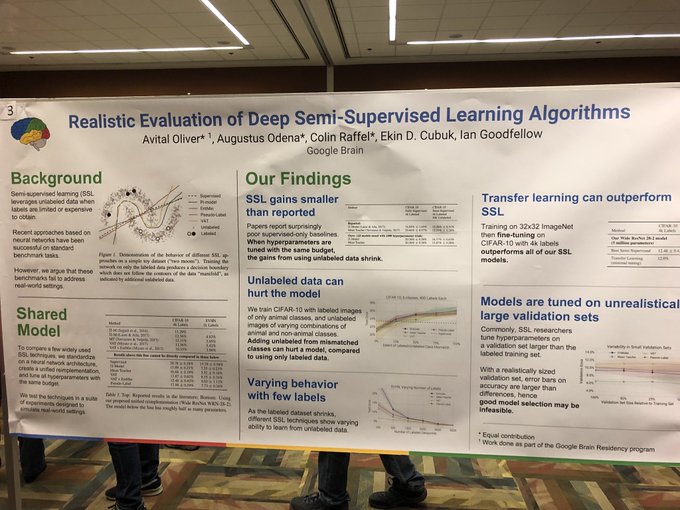

“Realistic Evaluation of Deep Semi-Supervised Learning Algorithms” — turns out that many SSL papers undertune their baselines! With equal hyperparam budget and fair validation set size, SSL gains are often smaller than claimed.

#ICLR2018


@ESYudkowsky @rmcentush This play-money market has very little depth at the moment. As I write this, a single trade from a new account's free starting balance can swing the market more than 20 absolute percentage points.
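An automated market maker makes market depth concrete. The tweet does not say which mechanism this market uses. The sketch below assumes Hanson's logarithmic market scoring rule (LMSR) as a stand-in, where the liquidity parameter `b` sets the depth. Starting from 50%, spending `budget` on YES moves the price to 1 - exp(-budget / b) / 2. So when a trader's balance is comparable to `b`, a single trade moves the market by well over 20 points. All parameter values are illustrative, not this market's actual settings.

```python
import math

def lmsr_cost(q_yes: float, q_no: float, b: float) -> float:
    """LMSR cost function C(q) = b * ln(exp(q_yes / b) + exp(q_no / b))."""
    return b * math.log(math.exp(q_yes / b) + math.exp(q_no / b))

def lmsr_price_yes(q_yes: float, q_no: float, b: float) -> float:
    """Instantaneous YES probability implied by the outstanding shares."""
    ey, en = math.exp(q_yes / b), math.exp(q_no / b)
    return ey / (ey + en)

def buy_yes_with_budget(q_yes: float, q_no: float, b: float, budget: float) -> float:
    """YES shares bought for `budget`: solves C(q_yes + d, q_no) - C(q_yes, q_no) = budget."""
    target = lmsr_cost(q_yes, q_no, b) + budget
    return b * math.log(math.exp(target / b) - math.exp(q_no / b)) - q_yes

def price_swing(b: float, budget: float) -> float:
    """Absolute change in YES probability from one trade on a fresh 50/50 market."""
    d = buy_yes_with_budget(0.0, 0.0, b, budget)
    return lmsr_price_yes(d, 0.0, b) - lmsr_price_yes(0.0, 0.0, b)
```

With `b=100`, a 100-unit trade gives `price_swing(100, 100)` of about 0.316, a 31.6-point move. Raising the depth to `b=10_000` shrinks the same trade's impact to about 0.005.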


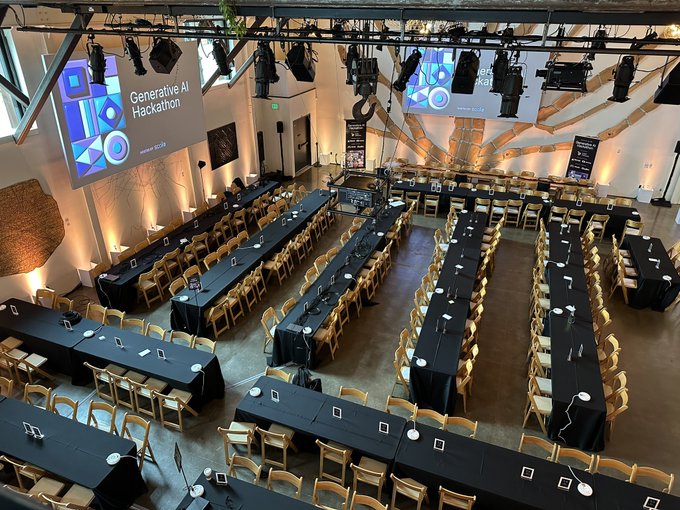

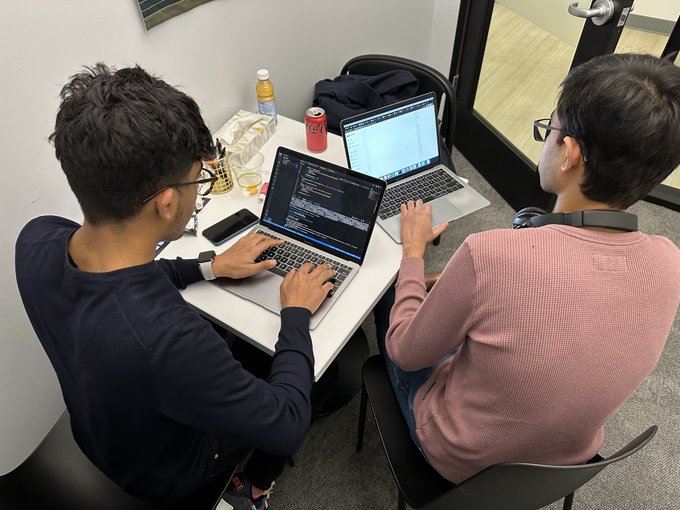

Seems like >50% of hackers have ChatGPT open writing code alongside them at the @scale_AI hackathon. A new era for hackathon productivity.


1/ Excited to share that Helia has been acquired by @scale_AI! Scale’s mission, to accelerate the development of AI applications, is an exceptional fit with Helia’s ML infrastructure tech and expertise. I’m so proud of team Helia for what we’ve accomplished in such a short time.


So cool to see Tesla and @karpathy reveal HydraNet to the world. What started as my Autopilot internship project two years ago has come a long way...


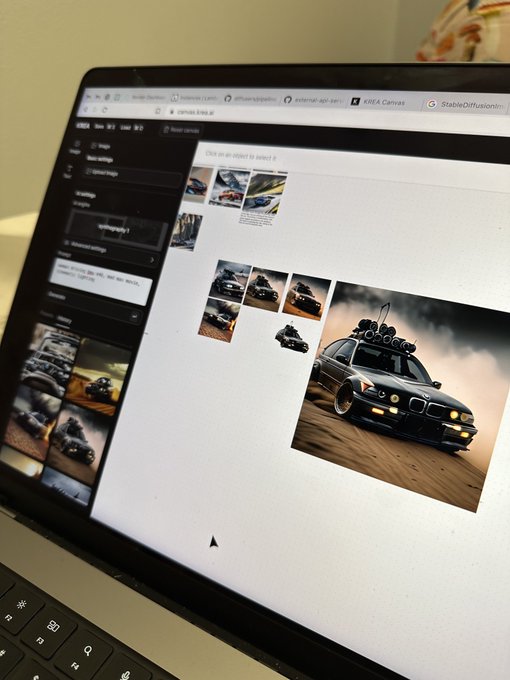

The @krea_ai team is building the Game of Life, where each alive cell is a whimsical happy Stable Diffusion image and each dead cell is an eerie, dark Stable Diffusion image, all of which evolve over time. Built on a generative AI version of Canva they made.
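The Game of Life rules underneath (Conway's B3/S23) fit in a few lines. This sketch stores live cells as a set of coordinates on an unbounded grid. `cell_prompts` is a hypothetical illustration of picking a happy or eerie image prompt per cell. It does not call any image model and does not reflect how Krea built theirs.

```python
from collections import Counter

Cell = tuple[int, int]

def life_step(alive: set[Cell]) -> set[Cell]:
    """One generation of Conway's Game of Life; `alive` holds (row, col) of live cells."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in alive
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3. Everything else is dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in alive)}

def cell_prompts(alive: set[Cell], rows: int, cols: int) -> dict[Cell, str]:
    """Hypothetical per-cell prompt choice for a rows x cols viewport."""
    return {
        (r, c): "a whimsical, happy illustration" if (r, c) in alive
        else "an eerie, dark illustration"
        for r in range(rows)
        for c in range(cols)
    }
```

A horizontal "blinker" of three cells flips to vertical and back every generation. Each step would then only need new images for the cells whose state changed.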


Avirath and @highchinar1 are building a personalized learning curriculum generator on top of Spellbook.


Next-gen voice assistant with Whisper for transcription and LLMs doing document retrieval + question answering.

@mov_axbx brought his GPU workstation for extra-low-latency edge inference 🔥
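The retrieval half of such a pipeline works in three steps. Take the transcript (assumed here to come from Whisper already), rank the documents by similarity to it, and pack the best matches into a question-answering prompt. This sketch swaps in bag-of-words cosine similarity for whatever retrieval the team actually used; embedding search is the common choice. `retrieve` and `build_qa_prompt` are hypothetical names, and the LLM call itself is omitted.

```python
import math
import re
from collections import Counter

def bag_of_words(text: str) -> Counter:
    """Lowercased alphanumeric token counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]

def build_qa_prompt(transcript: str, docs: list[str], k: int = 2) -> str:
    """Ground the LLM's answer in the top-k retrieved documents."""
    context = "\n\n".join(retrieve(transcript, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {transcript}\nAnswer:"
    )
```

Keeping retrieval separate from generation means the document index can be updated without touching the model. It also keeps the prompt short, which matters when the goal is low-latency inference.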


🌳🌍 Automatic permit application generation for climate tech companies & carbon dioxide removal, by @helenamerk, @douglasqian, and @PadillaDhen


9/ (This is also why Scale is starting a dedicated effort to create or collect language-aligned datasets for new problem domains. If you want help collecting language alignment data for your domain, reach out: language-models@scale.com)


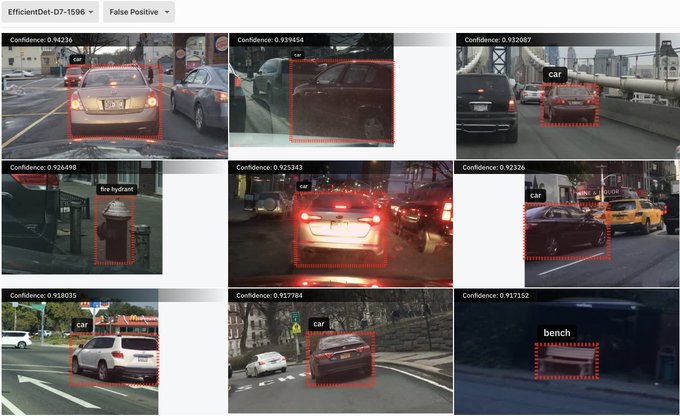

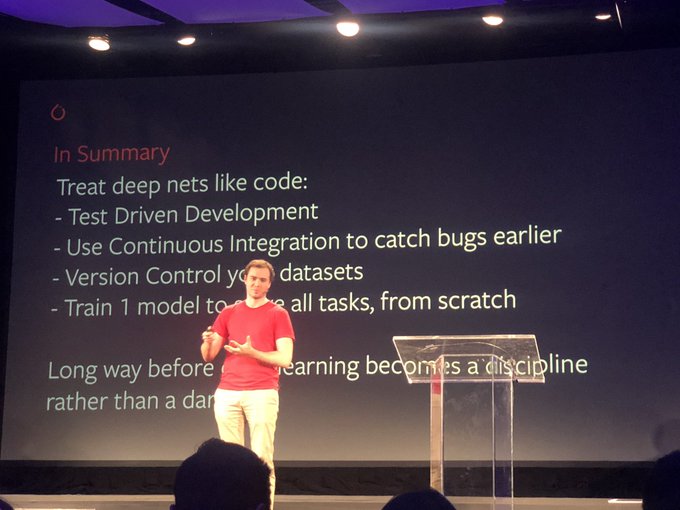

Some applied deep learning principles on the Autopilot team, presented at the @Pytorch dev summit:

- Every edit to the dataset is a new commit. Track carefully

- Curate hard test sets; random isn’t good enough

- Train all tasks jointly from scratch whenever possible
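The first principle can be read as git-style content addressing for data. In the hypothetical sketch below, each dataset state is a manifest mapping example IDs to labels. Each state gets a commit ID hashed from its contents and its parent, so any edit, even a single relabel, produces a new commit that can be diffed against the old one. `DatasetHistory`, `diff_manifests`, and the manifest schema are illustrative assumptions, not the Autopilot team's tooling.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class DatasetCommit:
    commit_id: str
    parent: Optional[str]
    manifest: dict  # example_id -> label (hypothetical schema)
    message: str

class DatasetHistory:
    def __init__(self) -> None:
        self.commits: dict[str, DatasetCommit] = {}
        self.head: Optional[str] = None

    def commit(self, manifest: dict[str, str], message: str) -> str:
        # Hash the parent ID plus canonical JSON, so identical history gives identical IDs.
        payload = json.dumps({"parent": self.head, "manifest": manifest}, sort_keys=True)
        cid = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
        self.commits[cid] = DatasetCommit(cid, self.head, dict(manifest), message)
        self.head = cid
        return cid

    def log(self) -> list[str]:
        """Newest-first list of 'commit_id message' lines."""
        out, cid = [], self.head
        while cid is not None:
            c = self.commits[cid]
            out.append(f"{cid} {c.message}")
            cid = c.parent
        return out

def diff_manifests(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Which examples were added, removed, or relabeled between two commits."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "relabeled": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

Tying every trained model to a commit ID makes it possible to trace a metric change back to the exact labels that changed.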


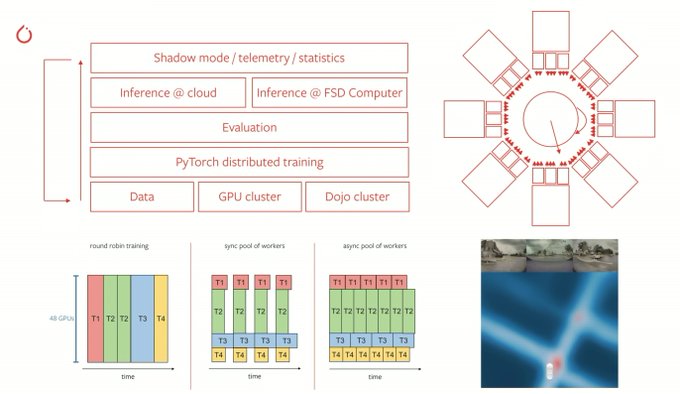

@karpathy Talk highlights:

- 70,000 GPU hours to "compile the Autopilot"

- Tesla building custom neural net training HW

- Data & model parallelism for a giant multitask, multicamera NN

- Predictions made directly in the top-down frame
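Data parallelism, the first half of the third highlight, can be sketched with a toy model. Each worker computes the gradient on an equal-sized shard of the batch. Averaging the shard gradients, which is the job of an all-reduce on real hardware, then recovers the full-batch gradient. The linear model and function names are hypothetical stand-ins. The sketch does not cover model parallelism or the multitask network itself.

```python
def grad_mse(w: float, b: float, xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Gradient of mean squared error for the toy model y ≈ w * x + b."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb

def data_parallel_grad(
    w: float, b: float, xs: list[float], ys: list[float], num_workers: int
) -> tuple[float, float]:
    """Shard the batch across workers, compute local gradients, then average them."""
    if len(xs) % num_workers != 0:
        raise ValueError("equal-sized shards keep the averaged gradient exact")
    size = len(xs) // num_workers
    local = [
        grad_mse(w, b, xs[i * size:(i + 1) * size], ys[i * size:(i + 1) * size])
        for i in range(num_workers)
    ]
    # Stand-in for an all-reduce: every worker would receive this same average.
    gw = sum(g[0] for g in local) / num_workers
    gb = sum(g[1] for g in local) / num_workers
    return gw, gb
```

Because the mean of equal-sized shard means equals the overall mean, each worker applies the same update it would have computed alone on the full batch. The batch simply gets processed in parallel.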


@HamelHusain LLM Engine is free and open source, self-hostable via Kubernetes, with <10s cold start times, including for fine-tuned models:


Quite impressed with my first @scale_AI data labeling job submission. Uploaded 1k image annotation tasks before going to sleep last night. Woke up and they were all labeled. With good quality!


2/ Historically, the #1 obstacle to adopting synthetic data has been the reality gap: the small differences between real and synthetic data that models may fixate on incorrectly, harming generalization.


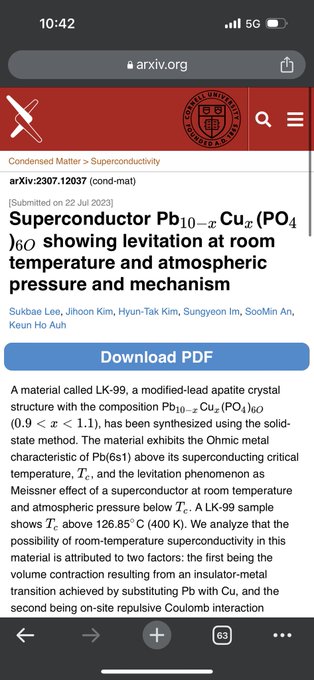

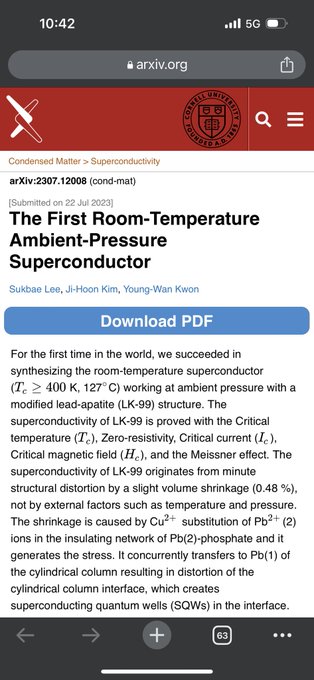

We are so back.

Rolling admissions for @scale_AI's second generative AI hackathon are now open. Happening July 15th in San Francisco. Apply by June 12th for a spot.

Application link below.
