Roei Herzig

@roeiherzig

Followers: 1K
Following: 8K
Media: 120
Statuses: 2K

Researcher @IBMResearch. Postdoc @berkeley_ai. PhD @TelAvivUni. Working on Compositionality, Multimodal Foundation Models, and Structured Physical Intelligence.

Berkeley, CA

Joined March 2017

What happens when vision🤝 robotics meet? Happy to share our new work on Pretraining Robotic Foundational Models!🔥 ARM4R is an Autoregressive Robotic Model that leverages low-level 4D Representations learned from human video data to yield a better robotic model. @berkeley_ai😊

Big personal news: I've traded my "extraordinary alien"👽 status for a much cooler one--just a very standard permanent resident! Yay!🎉🥳 My green card has officially arrived! 💚 🇺🇸🗽

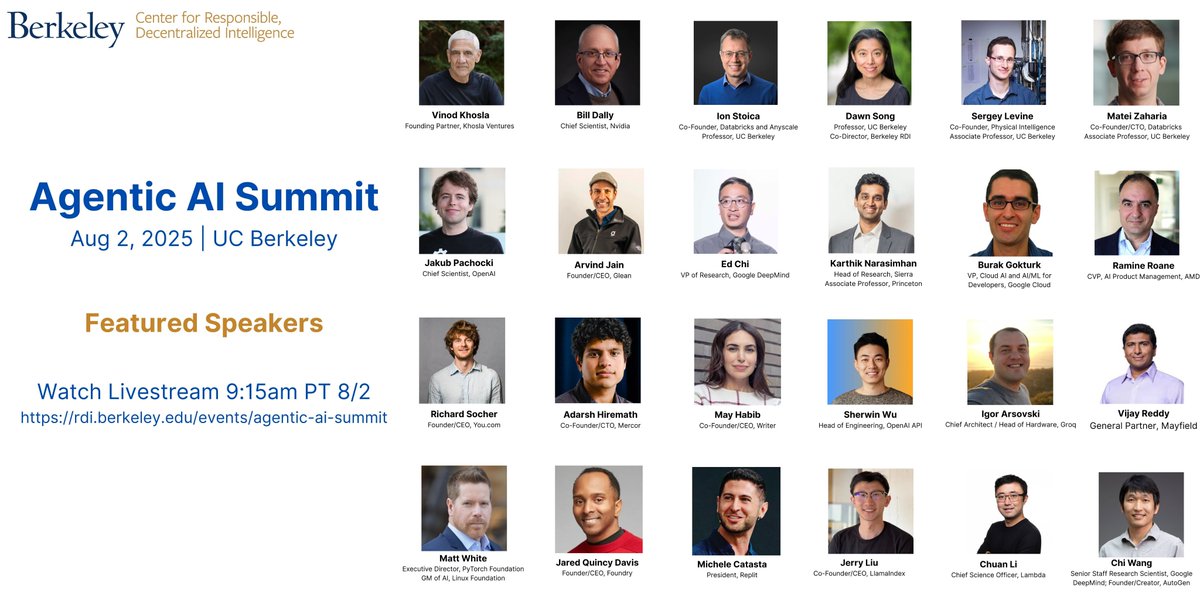

🚀 Excited to speak at the Agentic AI Summit NOW! 📍Frontier Stage - Session 4: Foundations of Agents I’ll give a 5-min spotlight on my research: 𝑺𝒕𝒓𝒖𝒄𝒕𝒖𝒓𝒆𝒅 𝑷𝒉𝒚𝒔𝒊𝒄𝒂𝒍 𝑰𝒏𝒕𝒆𝒍𝒍𝒊𝒈𝒆𝒏𝒄𝒆 — and why it matters for the future of vision & robotics

Really excited for the Agentic AI Summit 2025 at @UCBerkeley—2K+ in-person attendees and ~10K online! Building on our 25K+ LLM Agents MOOC community, this is the premier global forum for advancing #AgenticAI. 👀 Livestream starts at 9:15 AM PT on August 2—tune in!

🚀 Excited to speak tomorrow at the Agentic AI Summit! I’ll give a 5-min spotlight on my research: 𝑺𝒕𝒓𝒖𝒄𝒕𝒖𝒓𝒆𝒅 𝑷𝒉𝒚𝒔𝒊𝒄𝒂𝒍 𝑰𝒏𝒕𝒆𝒍𝒍𝒊𝒈𝒆𝒏𝒄𝒆 — and why it matters for the future of vision & robotics 🤖🧠 🎯 https://t.co/4Fyv90sThN

#AgenticAI #AI #Robotics

This is, of course, not true. The glass ceiling exists in all the edge cases where we don't have, and never will have, enough data: whether these are problems where data simply can't be collected (e.g., robots), or problems where we will never have many samples to begin with, such as generating a rare beetle from the Amazon.

Beyond all the amazing, almost magical functionality of video/image models lies a truly exciting technological shift: the move from convolutional networks (CNNs) to transformer-based image models, which built on that historic 2017 paper. Ultimately, what you need to understand is that the previous way of training AI had hit a wall. At some point, the more

Thanks @IlirAliu_ for highlighting our work!🙌 🌐 Project page: https://t.co/gmPJqMLGNE 🔗 Code: https://t.co/7XgQVSPDhI More exciting projects on the way—stay tuned!🤖

github.com

Official Implementation of ARM4R ICML 2025. Contribute to Dantong88/arm4r development by creating an account on GitHub.

Robots usually need tons of labeled data to learn precise actions. What if they could learn control skills directly from human videos… no labels needed? Robotics pretraining just took a BIG jump forward. A new Autoregressive Robotic Model learns low-level 4D representations

🚀 Our code for ARM4R is now released! Check it out here 👉

github.com

Official Implementation of ARM4R ICML 2025. Contribute to Dantong88/arm4r development by creating an account on GitHub.

🚀 Excited that our ARM4R paper will be presented next week at #ICML2025! If you’re into 4D representations and robotic particle-based representations, don’t miss it! 🤖✨ I won’t be there in person, but make sure to stop by and chat with Yuvan! 🙌

Love the core message here! Predictions ≠ World Models. Predictions are task-specific, but world models can generalize across many tasks.

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

Final chance for #ICCV2025 🌴🌺 Submit your best work to the MMFM @ ICCV workshop on all things multimodal: vision, language, audio and more. 🗓️ Deadline: July 1 🔗

openreview.net

Welcome to the OpenReview homepage for ICCV 2025 Workshop MMFM4

🚨 Rough luck with your #ICCV2025 submission? We’re organizing the 4th Workshop on What’s Next in Multimodal Foundation Models at @ICCVConference in Honolulu 🌺🌴 Send us your work on vision, language, audio & more! 🗓️ Deadline: July 1, 2025 🔗

sites.google.com

News: 🐈 Submissions are on OpenReview (Open Now!): https://openreview.net/group?id=thecvf.com/ICCV/2025/Workshop/MMFM4 MMFM has two paper tracks. More information under Call for Papers. Proceedings...

Re "vision researchers move to robotics": they’re just returning to the field’s roots. Computer vision began as "robotic vision", focused on agents perceiving & interacting within the world. The shift to "internet vision" came later with the rise of online 2D data. The original CV book👇

”why are so many vision / learning researchers moving to robotics?” Keynote from @trevordarrell #RSS2025

Overall, I think the move from CMT to OpenReview was a great decision. Now if only we could improve the paper-reviewer matching system!

🚀 Excited to share that our latest work on Sparse Attention Vectors (SAVs) has been accepted to @ICCVConference — see you all in Hawaii! 🌸🌴 🎉 SAVs is a finetuning-free method leveraging sparse attention heads in LMMs as powerful representations for VL classification tasks.

🎯 Introducing Sparse Attention Vectors (SAVs): A breakthrough method for extracting powerful multimodal features from Large Multimodal Models (LMMs). SAVs enable SOTA performance on discriminative vision-language tasks (classification, safety alignment, etc.)! Links in replies!