Ritvik Singh

@ritvik_singh9

Followers: 935

Following: 486

Media: 17

Statuses: 102

PhD student @berkeley_ai. prev. @NvidiaAI, @UofT

Berkeley, CA

Joined February 2022

Our latest work performs sim2real dexterous grasping using end-to-end depth RL.

14

48

395

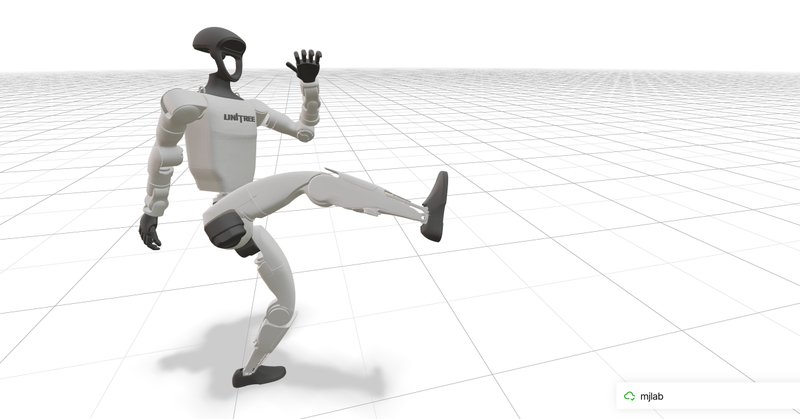

We open-sourced the full pipeline! Data conversion from MimicKit, training recipe, pretrained checkpoint, and deployment instructions. Train your own spin kick with mjlab: https://t.co/KvNQn0Edzr

Train a Unitree G1 humanoid to perform a double spin kick using mjlab - mujocolab/g1_spinkick_example

6

74

385

Our most recent work on multi-fingered manipulation was built on the back of these features, including tiled rendering, which enabled direct end-to-end pixels-to-action sim-to-real transfer. https://t.co/GJXVmmBGpr

1

3

6

I'm super excited to announce mjlab today! mjlab = Isaac Lab's APIs + best-in-class MuJoCo physics + massively parallel GPU acceleration. Built directly on MuJoCo Warp with the abstractions you love.

33

140

851

Very happy to share our new Isaac Lab white paper that @yukez just announced at CoRL! https://t.co/D5QbzXRVct This is the product of a tremendous amount of work from many teams at NVIDIA plus many folks from RAI and ETH. Congrats to the whole team!

1

10

52

cool work. recommend digging into details -- they show how throughput can be increased by changing the arrangement of envs and RL training across a node. this will become especially important as policy size increases with pre-trained models.

0

5

44

This project wouldn't have been possible without my collaborators, Karl Van Wyk, @JitendraMalikCV, @pabbeel, @robot_trainer, @ankurhandos. For more information and videos, check out our website:

0

1

8

In the real world, we also find that the distilled E2E teacher policies lead to the best performance.

1

0

8

We train end-to-end policies with depth and then distill them into RGB. Compared to state-based teachers, we find our depth policies lead to better students.

1

1

7
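As a rough illustration of the distillation step described above, here is a minimal sketch in plain Python. It is not the authors' pipeline: `teacher_policy`, `render_rgb`, and `distill` are hypothetical names, the "depth" and "RGB" inputs are single scalars, and the student is a two-parameter linear model fit by stochastic gradient descent on the teacher's actions. The only point it shows is the structure: a frozen teacher that sees one modality supervises a student that sees another.

```python
import random


def teacher_policy(depth):
    # Hypothetical frozen teacher: maps a scalar "depth" reading to an action.
    return 2.0 * depth - 1.0


def render_rgb(depth):
    # Hypothetical stand-in for the student's sensor: a different encoding
    # of the same underlying scene (here simply an inverted value).
    return 1.0 - depth


def distill(num_steps=2000, lr=0.5, seed=0):
    """Fit a linear student on the toy RGB input to imitate the teacher."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # student parameters
    for _ in range(num_steps):
        depth = rng.uniform(0.0, 1.0)    # sample a scene
        target = teacher_policy(depth)   # teacher labels it from depth
        rgb = render_rgb(depth)          # student only sees the toy RGB
        err = (w * rgb + b) - target     # imitation error
        w -= lr * err * rgb              # SGD step on 0.5 * err**2
        b -= lr * err
    return w, b


if __name__ == "__main__":
    w, b = distill()
    print(f"student: action = {w:.3f} * rgb + {b:.3f}")
```

In this toy setting the teacher's action can be written exactly in terms of the student's input (`action = 1 - 2 * rgb`), so the student can match the teacher perfectly; real depth-to-RGB distillation uses neural network policies and has no such guarantee.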

We find batch size is a critical bottleneck for the vision-based policies. Training with our disaggregated setup reliably produces better policies.

1

0

8

Our method is able to double the number of environments on the exact same hardware (4xL40S).

1

0

5

We propose to train our policies with a new method that disaggregates simulation and RL training on separate GPUs. In this way, we have several GPUs solely running the environment simulation, whereas policy inference, PPO, and experience buffers are hosted on another GPU.

1

0

7
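The split described above can be sketched as a toy in plain Python. This is an assumption-laden illustration, not the released implementation: `SimWorker`, `Learner`, and `collect` are hypothetical names, each "GPU" is just a Python object, and the dynamics and policy are placeholders. It shows only the data flow: several simulation-only workers step their environments, while a single learner gathers all observations into one batch, runs policy inference, and holds the experience buffer.

```python
import random
from dataclasses import dataclass, field


@dataclass
class SimWorker:
    """Stands in for one GPU that only runs environment simulation."""
    worker_id: int
    num_envs: int
    rng: random.Random = field(default_factory=random.Random)

    def step(self, actions):
        # Toy dynamics: next observation is the action plus noise,
        # and the reward prefers small actions.
        obs = [a + self.rng.gauss(0.0, 0.1) for a in actions]
        rewards = [-abs(a) for a in actions]
        return obs, rewards


class Learner:
    """Stands in for the one GPU hosting inference, buffer, and updates."""

    def __init__(self, gain=0.5):
        self.gain = gain
        self.buffer = []  # experience from every worker lives here

    def act(self, obs_batch):
        # Placeholder policy: one inference call over the whole batch.
        return [-self.gain * o for o in obs_batch]

    def store(self, obs, actions, rewards):
        self.buffer.extend(zip(obs, actions, rewards))


def collect(workers, learner, horizon):
    """Roll out all workers for `horizon` steps; return the buffer size."""
    obs_per_worker = [[0.0] * w.num_envs for w in workers]
    for _ in range(horizon):
        # Gather: flatten every worker's observations into one batch.
        flat_obs = [o for obs in obs_per_worker for o in obs]
        flat_actions = learner.act(flat_obs)
        # Scatter: send each worker the slice of actions for its envs.
        offset, next_obs, flat_rewards = 0, [], []
        for w in workers:
            chunk = flat_actions[offset:offset + w.num_envs]
            offset += w.num_envs
            obs, rewards = w.step(chunk)
            next_obs.append(obs)
            flat_rewards.extend(rewards)
        learner.store(flat_obs, flat_actions, flat_rewards)
        obs_per_worker = next_obs
    return len(learner.buffer)


if __name__ == "__main__":
    workers = [SimWorker(worker_id=i, num_envs=4) for i in range(3)]
    print(collect(workers, Learner(), horizon=5))
```

Because the workers hold no policy weights, optimizer state, or buffer in this layout, all of that memory sits on the learner side only, which is the intuition behind fitting more environments per simulation device.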

This is done mainly because of the difficulty of scaling up the batch size for vision RL. Most traditional simulators scale up to multiple GPUs using data parallelism. While serviceable for state-based training, this is not an efficient use of GPU memory for vision RL.

1

0

8

Many prior works factorize vision policies into a privileged teacher training phase and then distill into a vision student.

1

0

9

Should robots have eyeballs? Human eyes move constantly and use variable resolution to actively gather visual details. In EyeRobot (https://t.co/iSL7ZLZcHu) we train a robot eyeball entirely with RL: eye movements emerge from experience driven by task-driven rewards.

8

55

272

Check out our full code release!!! And I am also glad to see that VideoMimic is a best paper finalist at CoRL 2025 in Seoul!! https://t.co/jKGM3lzprY

Visual Imitation Enables Contextual Humanoid Control. arXiv, 2025. - hongsukchoi/VideoMimic

1

2

37

excited that VideoMimic was just nominated for an award at CoRL, see you soon in Seoul! 🇰🇷 we have now also released our sim/real-robot deployment pipeline, including checkpoints and nice viser web viz -- check it out https://t.co/HhqnbE0F0S

20

55

429

Awesome research and I'm glad it's now available to the community. Sim to real learning is a tool with a ton of potential, but it's so hard to get started building the right environments and task setup!

0

3

25

Happy to announce that we have finally open sourced the code for DextrAH-RGB along with Geometric Fabrics: https://t.co/v7QPGgtyDi

https://t.co/fHyvvKU9IA

FABRICS. Contribute to NVlabs/FABRICS development by creating an account on GitHub.

Excited to announce our latest work: DextrAH-RGB where we successfully train visuomotor policies in sim to perform dexterous grasping of arbitrary objects end-to-end from RGB input!

3

37

216