Randall Balestriero

@randall_balestr

Followers

4K

Following

299

Media

185

Statuses

571

AI Researcher: From theory to practice (and back) Postdoc @MetaAI with @ylecun PhD @RiceUniversity with @rbaraniuk Masters @ENS_Ulm @Paris_Sorbonne

USA

Joined April 2020

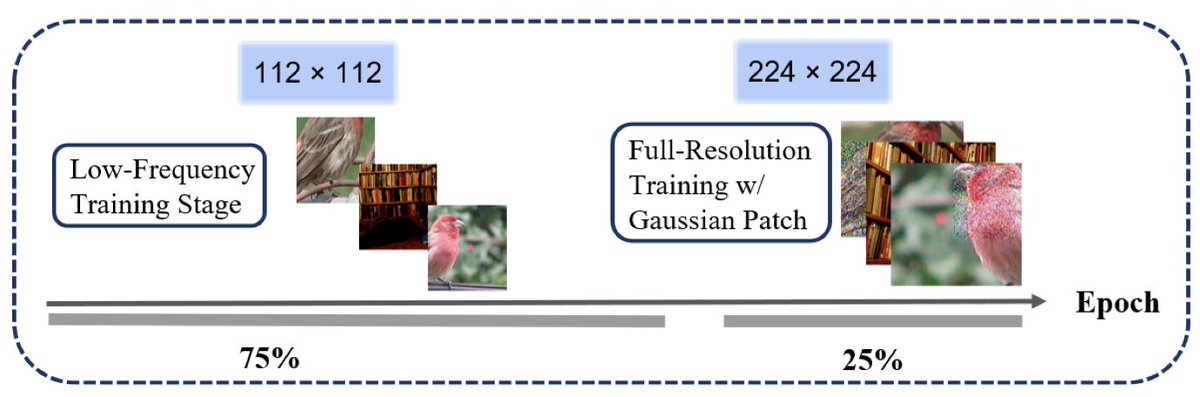

Impressed by DINOv2 perf. but don't want to spend too much $$$ on compute and wait for days to pretrain on your own data? Say no more! Data augmentation curriculum speeds up SSL pretraining (as it did for generative and supervised learning) -> FastDINOv2!

4

32

190

In case a full day of splines isn't enough to make you book your trip to JMM26, perhaps a 90min session with @ylecun (+ TBD speakers) on self-supervised learning/world models WITH math will close the deal! No matter your background, come in numbers to discuss research together!

Interested in splines for AI theory (generalization, grokking, explainability, generative modeling, ...)? Wait no more! We are organizing a dedicated session at JMM26 (the largest math conf.). Consider submitting your abstract! Deadline in 2 weeks!

0

0

5

But this makes me wonder: if different MAE hparams make you learn different features about your input, can there exist a more universal reconstruction-based pretraining solution? Huge congrats to the MVPs @Abisulco @RahulRam3sh and Pratik from UPenn for making us wonder!

0

0

6

Learning by input-space reconstruction is often inefficient and hard to get right (compared to joint-embedding). While previous theory explains why in the linear/kernel setting, we now take a deep dive into MAEs specifically! Now on arXiv + #ICCV2025!

6

15

82

RT @NYUDataScience: Congratulations to CDS PhD Student @vlad_is_ai, Courant PhD Student Kevin Zhang, CDS Faculty Fellow @timrudner, CDS Pro…

0

7

0

RT @rpatrik96: I am heading to @icmlconf to present our position paper with @randall_balestr @klindt_david @wielandbr on what we believe ar…

0

7

0

Huge congrats to the amazing @JiaqiZhang82804, Juntuo Wang, Zhixin Sun and John Zou! If you are interested in follow-ups for other modalities and/or CLIP, please DM me or shoot me an email!

1

0

8

We also have theoretical justifications of CT: in particular, CT does a Sobolev space projection of your DN! This is only a first step towards provable methods improving SOTAs. Huge congrats to @leonleyanghu and Matteo Gamba!

1

0

2

Huge congrats to MVPs @thomas_M_walker, @imtiazprio and @rbaraniuk! Ping us with questions or comments!

0

0

6