Andrei Mircea

@mirandrom

Followers: 165 · Following: 189 · Media: 18 · Statuses: 100

PhD student @Mila_Quebec ⊗ mechanistic interpretability + systematic generalization + LLMs for science ⊗ https://t.co/xg8aE8CWM3

Montreal, QC

Joined December 2017

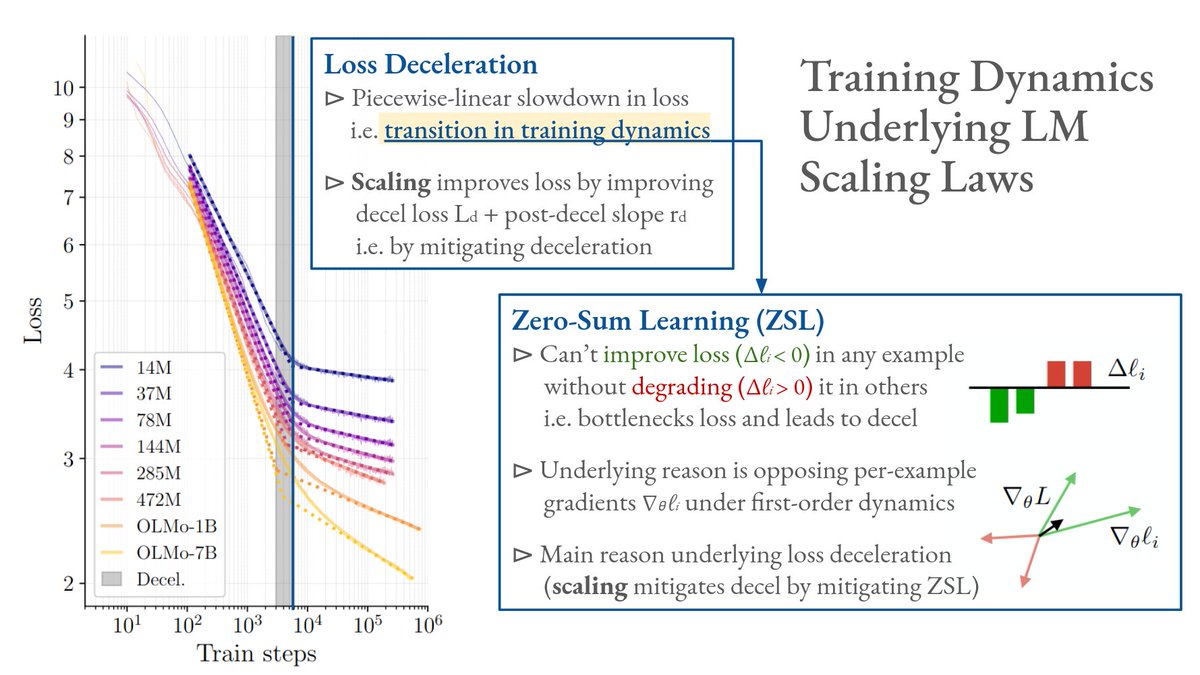

Interested in LLM training dynamics and scaling laws? Come to our #ACL2025 oral tomorrow!
⏰ Tuesday 2:55pm
📍 Hall C (Language Modeling 1)
🌐
If you're in Vienna and want to chat, let me know! @Mila_Quebec

Step 1: Understand how scaling improves LLMs.
Step 2: Directly target the underlying mechanism.
Step 3: Improve LLMs independent of scale.
Profit.
In our ACL 2025 paper, we look at Step 1 in terms of training dynamics.
Project:
Paper:

RT @ziling_cheng: Our paper on reasoning × interpretability × evaluation has been accepted to EMNLP main! Excited because this marks the…

RT @darshilhdoshi1: Big news! Congratulations to the brilliant members of the collaboration! Very excited to participate in the research t…

Outcome over process.

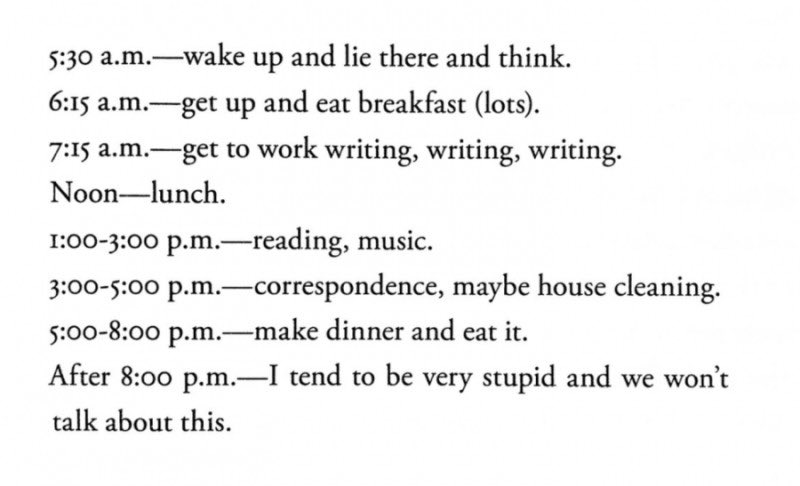

correlation i have observed:
the most talented people i know (who also happen to be high agency) have the shittiest workflow organization.
- no notion organization hell, usually messy apple notes and google docs
- regular users of pen and paper
- no superhuman or fancy email

I love this idea; it's ambitious but grounded, and it breaks the mould of typical discourse on AI for science I've seen. But I'm not sure that recording without replicating is enough. Here's a rough analogy with language modeling that I think clarifies my point 👇

I do think AI could increase scientific productivity. But so much of science isn't easily mechanized tasks like pipetting -- it's adjusting optics, troubleshooting custom equipment, and keeping critters from dying. Here's a plan to capture and use this "tacit knowledge."

RT @KateLobacheva: Join us at our lab’s symposium Aug 19–20! 🚀 I’ll present our recent ACL oral Training Dynamics Underlying Language Mode…

I'm actually surprised how often I've seen this when reviewing ML papers. I guess the expectation has become that many reviewers will only do a surface-level reading and accept or reject based on vibes.

I've started checking sources for fun when I see a claim that seems dubious in something I'm reading, and probably more than half the time the source doesn't support the claim being made. A thread of some I saved:

RT @sparse_emcheng: @tomjiralerspong Work led by @jinleewastaken and @tomjiralerspong, with Jade Yu and Yoshua Bengio. Updated preprint:…

arxiv.org

By virtue of linguistic compositionality, few syntactic rules and a finite lexicon can generate an unbounded number of sentences. That is, language, though seemingly high-dimensional, can be...

RT @nsaphra: If you’re in Vienna for ACL, go check out our interpretability poster on how feature interactions reflect linguistic structure!…

RT @ziling_cheng: What do systematic hallucinations in LLMs tell us about their generalization abilities? Come to our poster at #ACL2025 o…

RT @cesare_spinoso: How can we use models of cognition to help LLMs interpret figurative language (irony, hyperbole) in a more human-like m…

RT @ljyflores38: ⏰ Sharing our work on calibrated confidence scores at #ACL2025NLP, July 29 – 4PM Vienna time (Virtual)! 📰 Improving the C…

RT @tongshuangwu: We all agree that AI models/agents should augment humans instead of replace us in many cases. But how do we pick when to…

RT @lasha_nlp: Life update: I’m excited to share that I’ll be starting as faculty at the Max Planck Institute for Software Systems(@mpi_sws…
