Moh Baddar

@mbaddar2

Followers: 199 | Following: 224 | Media: 112 | Statuses: 373

AI/ML Engineer | LLM-Dev, Ops and Evaluation | Applied Math PhD | Subscribe ✉️ https://t.co/rc9q8SYRnU

Berlin, Germany

Joined January 2025

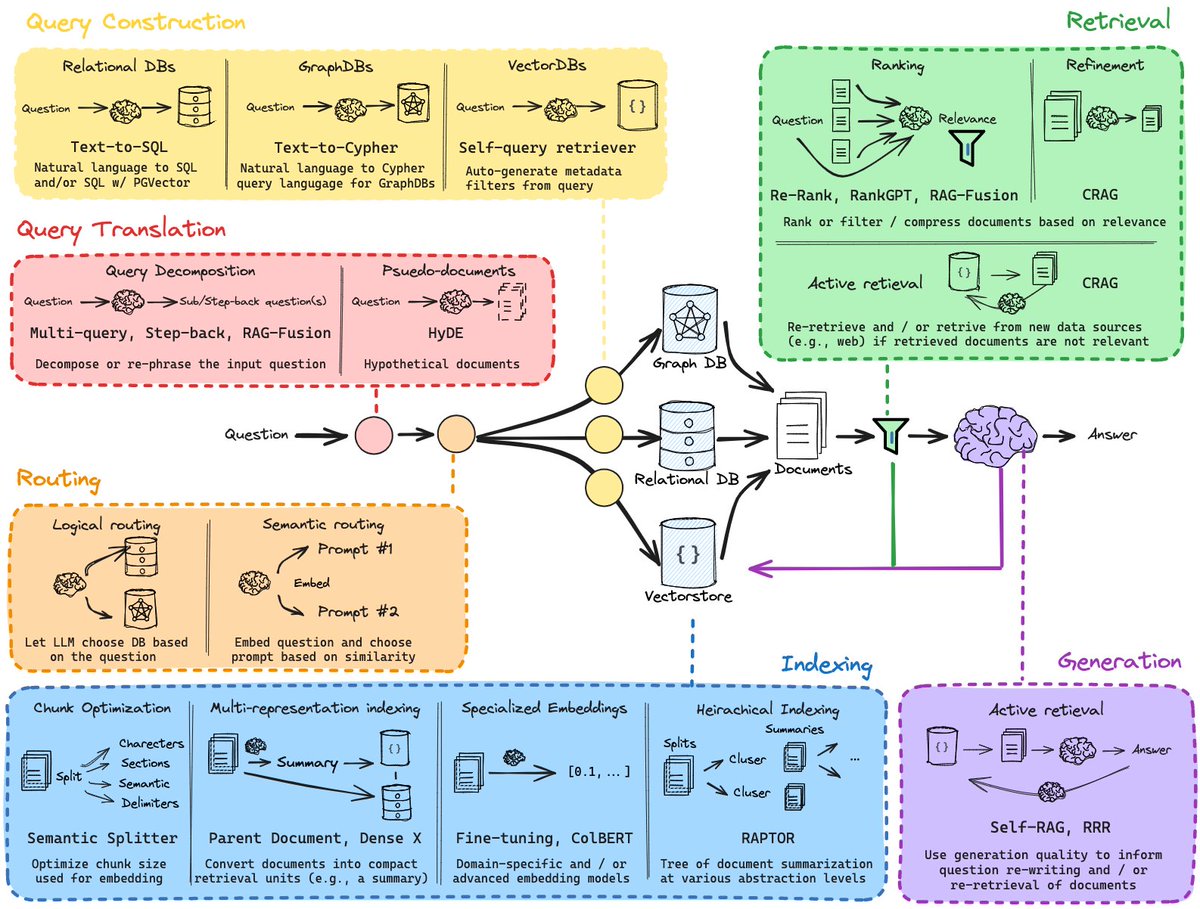

Check out this star-worthy GitHub repo for a comprehensive overview of RAG approaches. Concepts: 1️⃣ Routing and Query Construction. 2️⃣ Indexing & Advanced Retrieval. 3️⃣ Reranking.


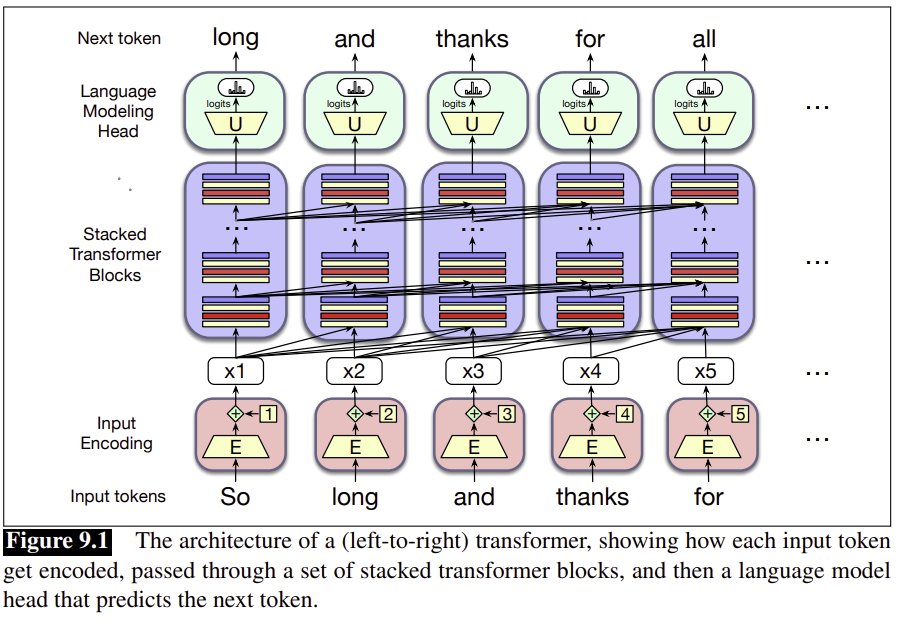

Despite the hype around Large Language Models (#LLMs), few people talk about the backbone technology that made LLMs possible: the Transformer architecture. Check my post below for a very quick overview of Transformers and how they model "context" in language models.

Just as blockchain serves as the foundational technology behind cryptocurrencies, Transformers are the core architecture powering Large Language Models (LLMs). At their essence, Transformers offer a sophisticated mechanism to capture context within a sequence of tokens—such as


Exposing an ML model to users via an API is a critical step in making the model reusable by different services and modules in your system. Check out this step-by-step guide to deploying machine learning models with FastAPI and Docker.

machinelearningmastery.com

We’ll take it from raw data all the way to a containerized API that’s ready for the cloud.
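To make the idea concrete, here is a minimal, framework-agnostic sketch of what "exposing a model via an API" boils down to: parse a JSON request, run the model, and return a JSON response. It is not taken from the linked guide. `LinearModel`, its weights, and `handle_predict` are hypothetical names invented for illustration, and only the Python standard library is used. In a real service, a web framework such as FastAPI would call a function like this from a route handler.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class LinearModel:
    """Hypothetical stand-in for a trained model (the weights are made up)."""
    weights: List[float]
    bias: float

    def predict(self, features: List[float]) -> float:
        # Reject inputs whose length does not match the model.
        if len(features) != len(self.weights):
            raise ValueError(
                f"expected {len(self.weights)} features, got {len(features)}"
            )
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


# Load the model once at startup so every request reuses the same instance.
MODEL = LinearModel(weights=[0.5, -1.0, 2.0], bias=0.25)


def handle_predict(request_body: str) -> str:
    """Parse a JSON request, run the model, and return a JSON response."""
    try:
        payload = json.loads(request_body)
        features = [float(x) for x in payload["features"]]
        prediction = MODEL.predict(features)
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, a missing key, or wrong types become an error payload.
        return json.dumps({"error": str(exc)})
    return json.dumps({"prediction": prediction})


if __name__ == "__main__":
    print(handle_predict('{"features": [1, 2, 3]}'))
```

Keeping the prediction logic in a plain function like this makes it easy to unit-test before wrapping it in an HTTP layer or a container.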


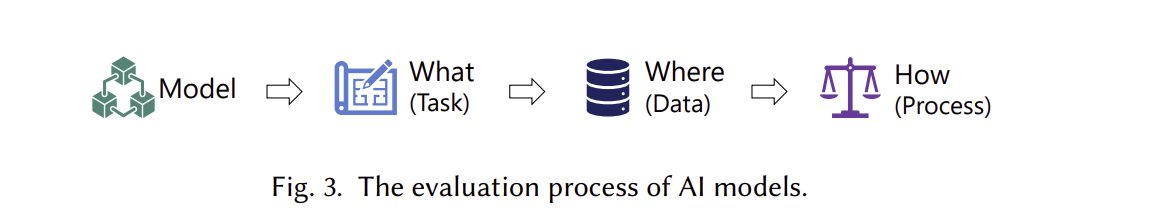

Evaluating Large Language Models (#LLMs) is a key step toward properly adopting them in any software solution. However, the two key challenges in evaluating LLMs are: (1) Generality of tasks: there is a false perception due to the availability of dozens of LLMs user-facing


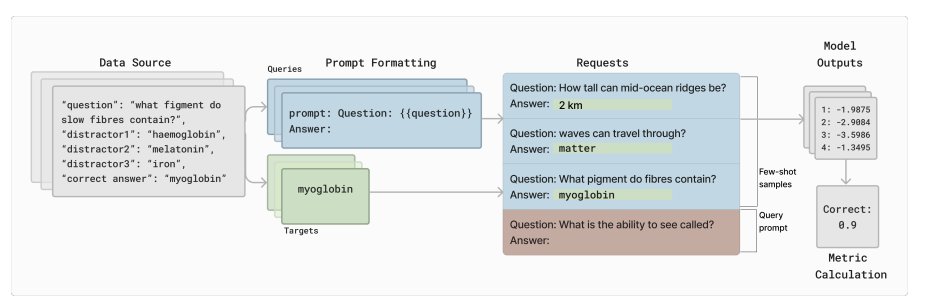

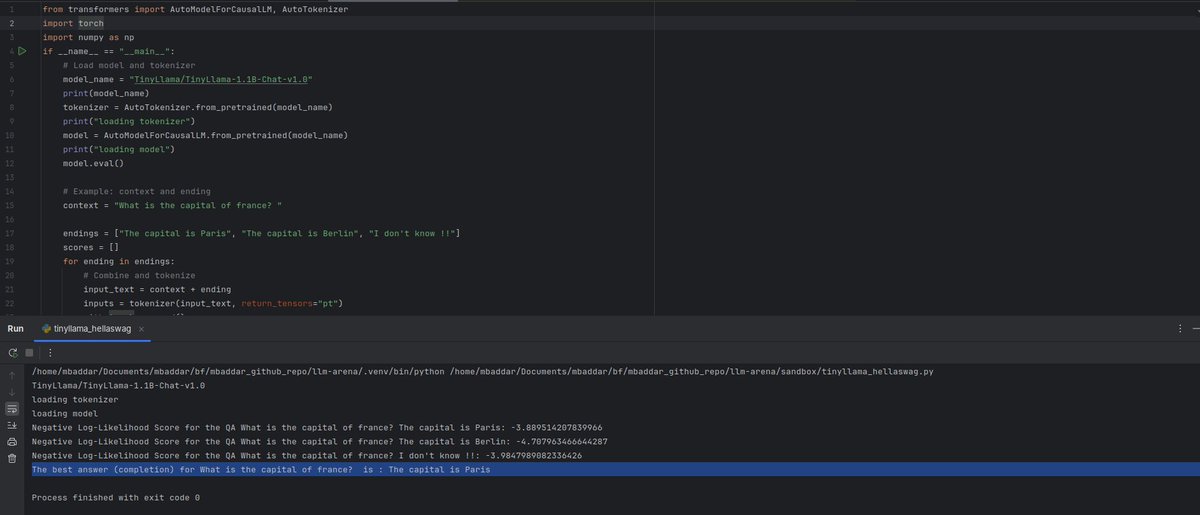

One of the interesting yet challenging topics related to LLMs is benchmarking 🌡️. Because of the complex nature of #LLMs and their complex output format (free text), there are numerous benchmarks that measure the performance of LLMs on different tasks. To learn more,

There are literally dozens of benchmarks for (I would say hundreds of) LLMs, both proprietary and open source. One of the problems I have faced while trying to understand the benchmarks, despite the existence of numerous evaluation frameworks, is the lack of a simple illustration, apart from


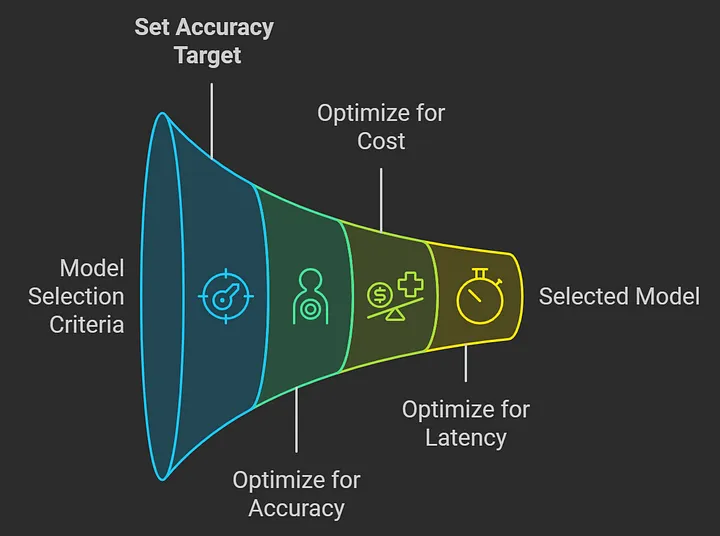

If you mean business with LLMs, then one of the technical approaches you must be aware of is building a self-hosted LLM framework that suits your technical and business needs. However, one of the trickiest challenges you might face during the design phase is selecting the best

One of the pressing questions I have in mind when thinking about local LLM solutions is: which model should I use as a base model? Which one is suitable for which use case? Although this question is simple to ask, it is very hard and subjective to answer. However, there are many


If you are in the business of adopting LLMs in your application, you might consider options beyond the @OpenAI or @Gemini APIs: you can run your own LLM framework on your own infrastructure. Why? It is cost-efficient and fully customizable, and you control and secure your own data. However, a


If you want to build self-hosted LLM-powered software solutions, one of the main design decisions you need to make is: which base model am I going to use? There are, literally, hundreds of open source models: @llama @deepseek_ai @MistralAI and the GPT family, etc. Usually,
