Jay Hack

@mathemagic1an

Followers: 37,499

Following: 2,802

Media: 669

Statuses: 3,885

Founder/CEO @codegen. Tweets about AI, computing, and their impacts on society. Previously did startups, @palantir, @stanford. Not a pseudonym.

San Francisco

Joined May 2013


Pinned Tweet

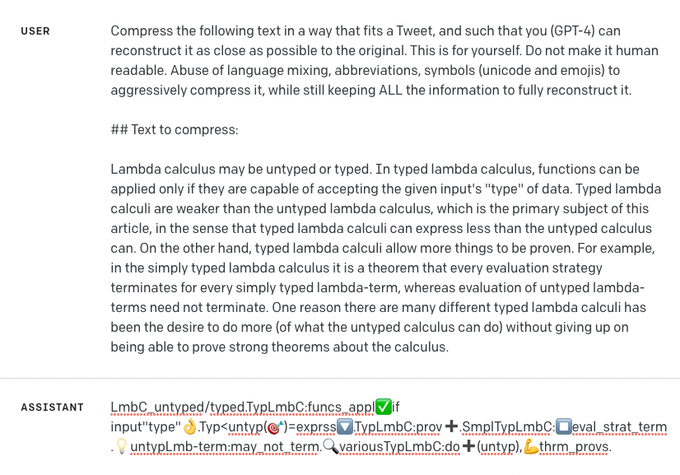

Excited to share what I've been building 🚀

Introducing @codegen

⚡ Agent-driven software development for enterprise codebases ⚡

We've raised $16mm from @ThriveCapital and others to bring this to the world.

👇 More below

66

123

1K

Introducing text-to-figma: build and edit @figma designs with natural language!

Join the waitlist here:

1/n

94

417

3K

@microsoft releases a single 900-line Python file for "Visual ChatGPT," an agent that can chat w/ images.

It interacts with vision models via text and prompt chaining, e.g. the output gets piped to Stable Diffusion.

Also uses @LangChainAI …

13

140

830

.

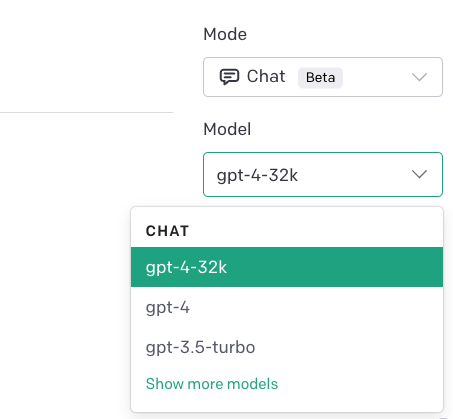

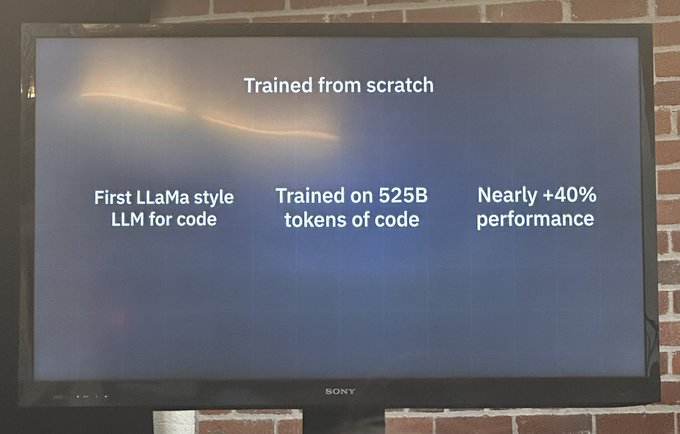

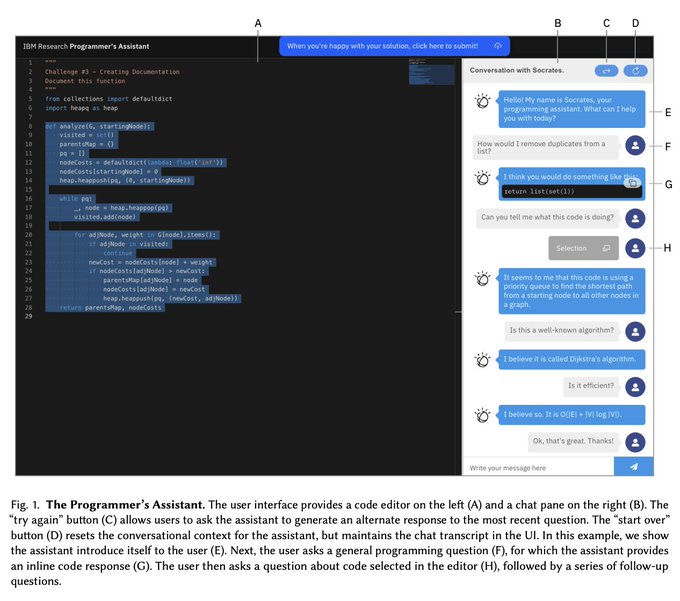

@Replit

announces they’ve turned their entire IDE into a set of “tools” for an autonomous agent

Tell it what to do and let ‘er rip.

Example: spin up a REPL, write an app for me and deploy it 🚀

Oh, and they announced a new Llama-style code completion model.

17

73

708

This is insane:

@drfeifei and team are about to publish a robotics paper that enables robots to perform 1000 common human tasks just from observation of humans.

Get ready

12

117

689

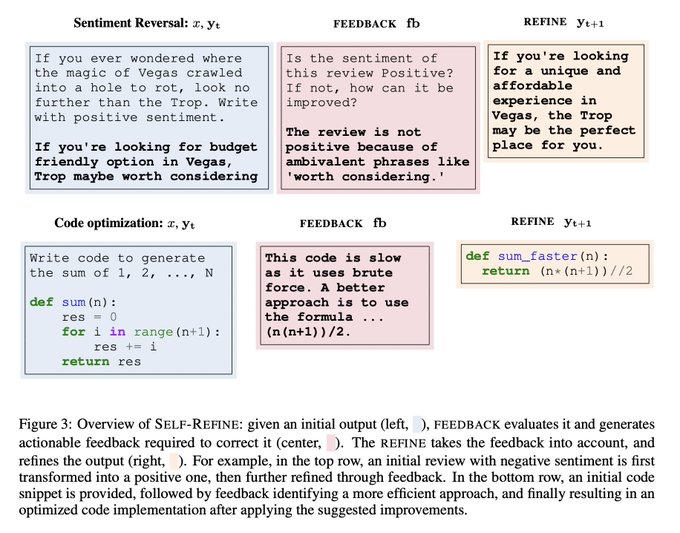

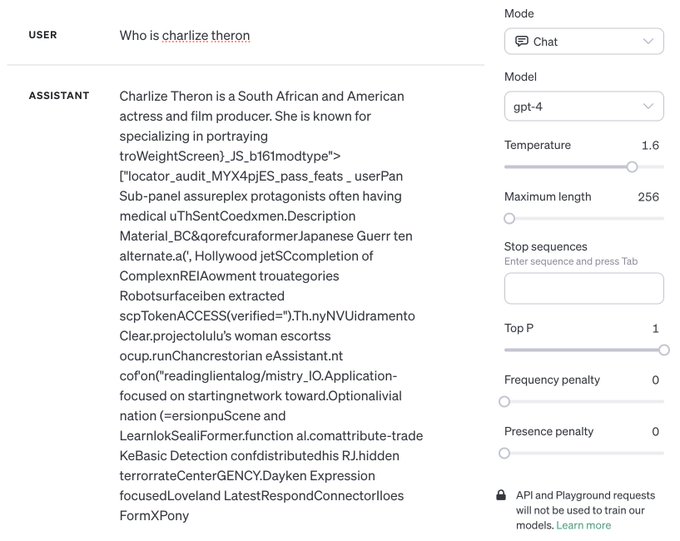

Interesting point from @stephen_wolfram:

LLMs undergo a "phase change" at a certain temperature (1.6 for GPT-4), beyond which they generate pure garbage.

This is not well understood; ideas from statistical mechanics may help us make sense of it.

45

62

585
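For context on the knob involved: sampling temperature divides the logits before the softmax, so higher values flatten the next-token distribution toward uniform. A minimal Python sketch with made-up logits (no real model) showing entropy rising with temperature. It illustrates what temperature does, not the phase transition itself or where GPT-4's threshold sits.

```python
import math
from typing import List

def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs: List[float]) -> float:
    """Shannon entropy in bits; higher means more random sampling."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

if __name__ == "__main__":
    toy_logits = [4.0, 2.0, 1.0, 0.0, -1.0]  # illustrative values, not from a real model
    for t in (0.2, 0.7, 1.0, 1.6, 3.0):
        probs = softmax_with_temperature(toy_logits, t)
        print(f"T={t:<4} top-token p={max(probs):.3f} entropy={entropy_bits(probs):.3f} bits")
```

As T grows the top token loses probability mass and entropy approaches log2 of the vocabulary size (here 5 tokens), which is the regime where output turns to noise.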

@DoctorPerin

We’d likely find that most users aren’t smart enough to keep up with the parrots 😂

2

11

549

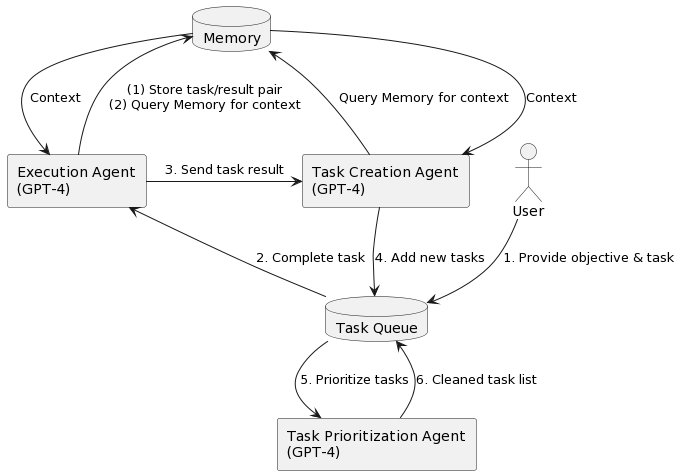

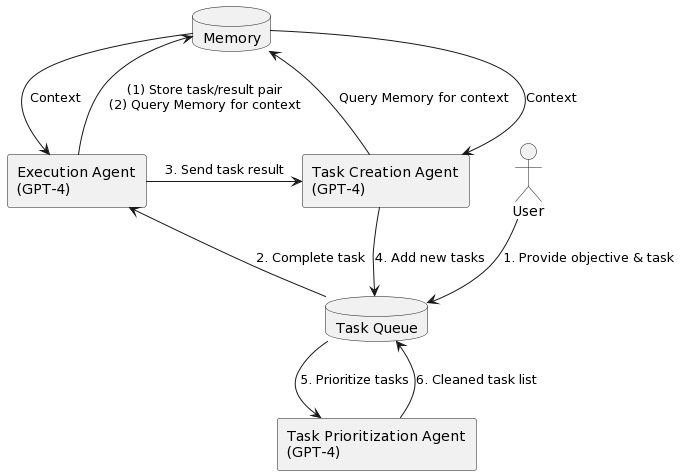

Right now it feels like a legitimate path to superintelligence is swarms of GPT-4-level agents somehow interacting w/ each other and various external memory stores.

Also interesting that a lot of the advancements on this front are coming from hackers:

🔥1/8

Introducing "🤖 Task-driven Autonomous Agent"

An agent that leverages @openai's GPT-4, @pinecone vector search, and the @LangChainAI framework to autonomously create and perform tasks based on an objective.

"Paper":


252

1K

6K

53

66

516
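The loop described above (execute a task, derive new tasks from the result, repeat toward an objective) can be sketched in a few lines. This is a hypothetical illustration, not the original project's code: GPT-4 is replaced by any `llm(prompt) -> str` callable, the Pinecone vector store by a plain list of recent results, and the separate prioritization step is omitted.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

# Hypothetical interface: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]

def run_task_agent(objective: str, first_task: str, llm: LLM,
                   max_steps: int = 5) -> List[Tuple[str, str]]:
    """Pop a task, execute it, ask the LLM for follow-up tasks, queue them; repeat."""
    tasks: Deque[str] = deque([first_task])
    memory: List[Tuple[str, str]] = []  # stand-in for a vector store of (task, result)
    for _ in range(max_steps):
        if not tasks:
            break
        task = tasks.popleft()
        # Use the last few results as context (a vector store would retrieve by similarity).
        context = "\n".join(f"{t}: {r}" for t, r in memory[-3:])
        result = llm(f"Objective: {objective}\nContext:\n{context}\nTask: {task}\nResult:")
        memory.append((task, result))
        # Ask for new tasks, one per line, given the latest result and the pending queue.
        proposal = llm(
            f"Objective: {objective}\nLast result: {result}\n"
            f"Pending: {list(tasks)}\nNew tasks (one per line):"
        )
        for line in proposal.splitlines():
            line = line.strip()
            # Skip blanks, tasks already queued, and tasks already completed.
            if line and line not in tasks and all(line != t for t, _ in memory):
                tasks.append(line)
    return memory

if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        """Offline stand-in for a real model."""
        if prompt.endswith("New tasks (one per line):"):
            return "write outline\nwrite draft"
        return "done"

    for task, result in run_task_agent("Write a blog post", "research topic", fake_llm, 10):
        print(task, "->", result)
```

The `max_steps` cap and the deduplication check are what keep a loop like this from running forever when the model keeps proposing the same tasks.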

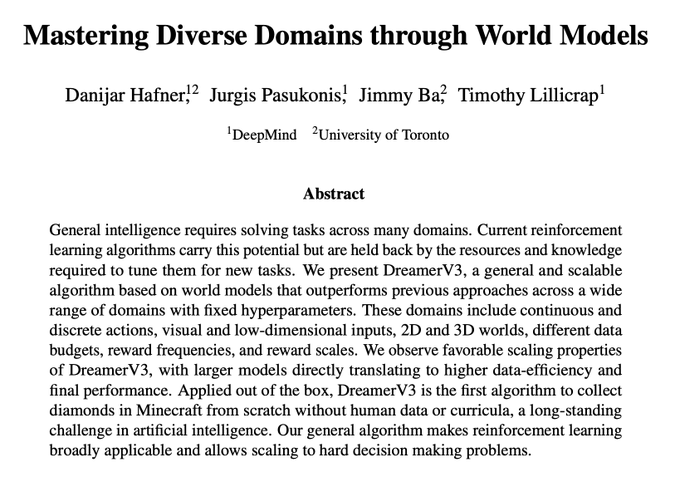

LLMs unified all NLP tasks under one algorithm.

Reinforcement learning is next...!? 🤓🙏

"Mastering Diverse Domains through World Models"

@deepmind's latest, an RL agent that generalizes across domains without human/expert input!

Here's how 👇

13

114

520

Text-to-figma is now open source! 🎨🚀

I'm no longer working on this project but would love to see someone build it out.

Contributions welcome; thanks to @nicolas_ouporov for a few contributions thus far.


12

85

489

What does an AI sysadmin look like?

Introducing 💦 ShellShark 🦈: an agent that swims through your infra to:

✅ Set up/modify infrastructure

⚙️ Examine and debug services

💻 All with auditable logs

Waitlist: 👉 👈

More from me and @RealKevinYang 👇

18

58

474

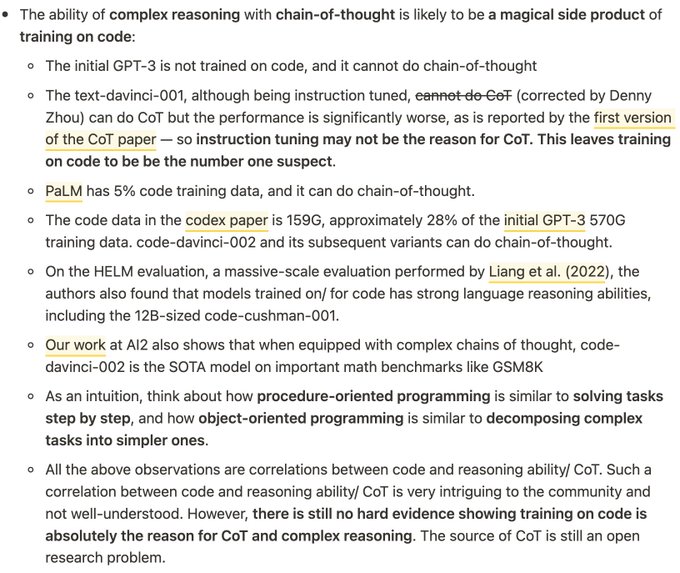

How do LLMs gain the ability to perform complex reasoning using chain-of-thought?

@Francis_YAO_ argues it's a consequence of training on *code*: the structure of procedural coding and OOP teaches it step-by-step thinking and abstraction.

Great article!

13

99

463

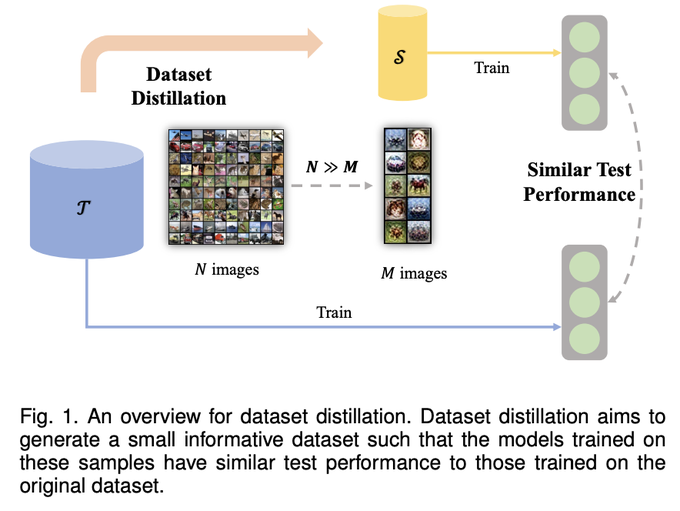

This is nuts. You can compress an image to a kilobyte with this technique

21

51

453

US/China decoupling accelerates:

US manufacturing orders in China are down 40 percent, according to @Noahpinion

6

54

439

Still thinking about this

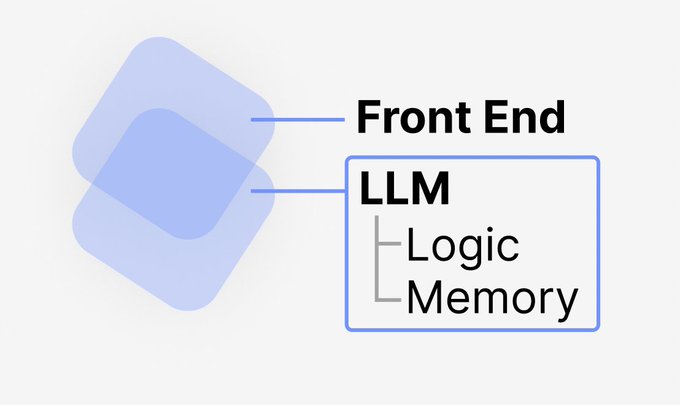

"GPT is all you need for backend"

It's a joke, but there's also something deeper there

What if you had a fully differentiable backend for your SaaS product? Will we train our backends, not program them, in 10 years?

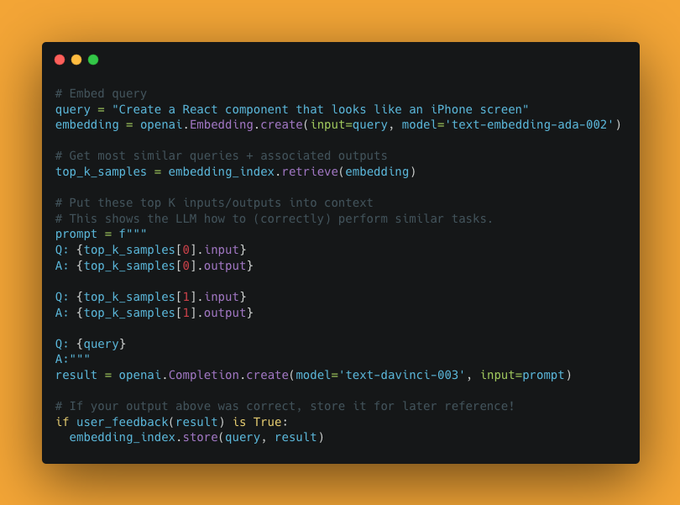

We're releasing our @scale_AI hackathon 1st place project, "GPT is all you need for backend", with @evanon0ping and @theappletucker

But let me first explain how it works:

109

438

2K

39

26

396

This is the most compelling articulation of what is going on in autoregressive models

[paraphrased, by @ilyasut]

Internet texts contain a projection of the world.

Learning the underlying dynamics that created this text is the most efficient way of doing next token prediction…

19

62

397

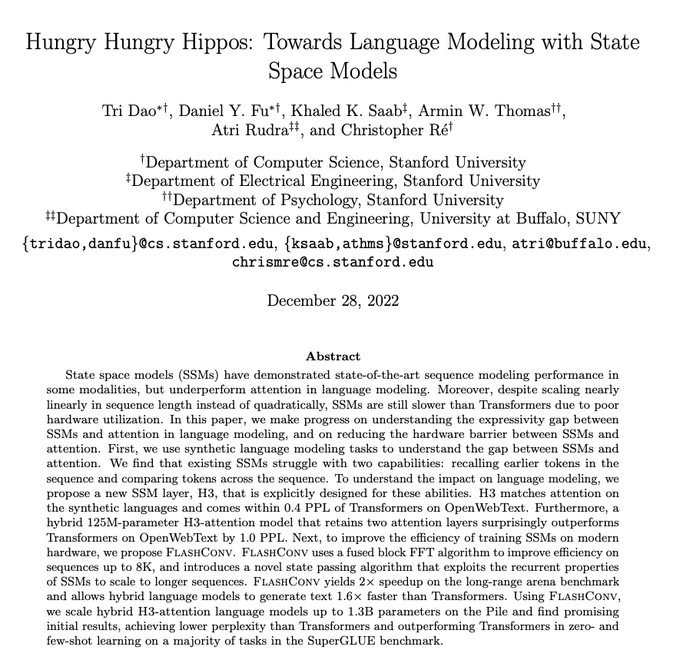

The design of transformers has remained remarkably similar over the past few years.

Is this now a transformer killer within NLP?

It claims better perplexity, better zero/few-shot generation, and a 1.6x speedup over transformers on some tasks.

8

40

355

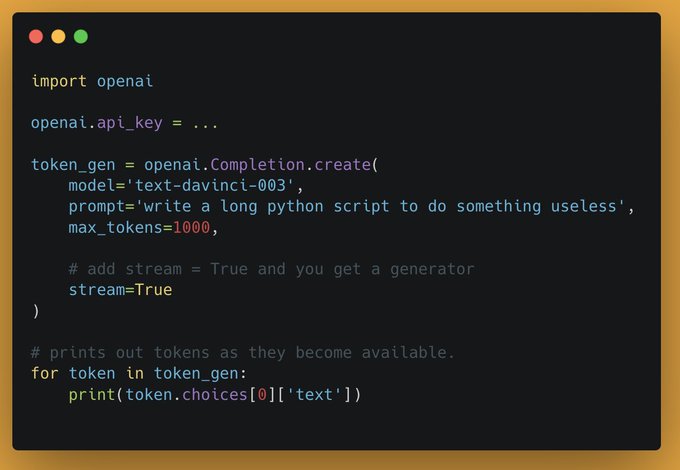

How to stream tokens with OpenAI APIs:

Use `stream=True` to get a generator

Iterate through this generator and it will provide tokens as they become available

15

27

324

@aniiyengar

It's not limited to DB queries - you can use GPT-3 to build the whole dashboard!

Demo below:

5

21

314

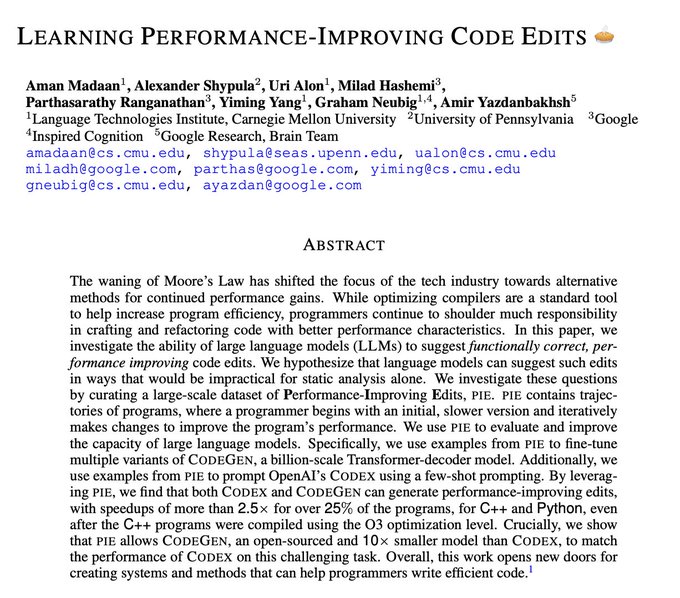

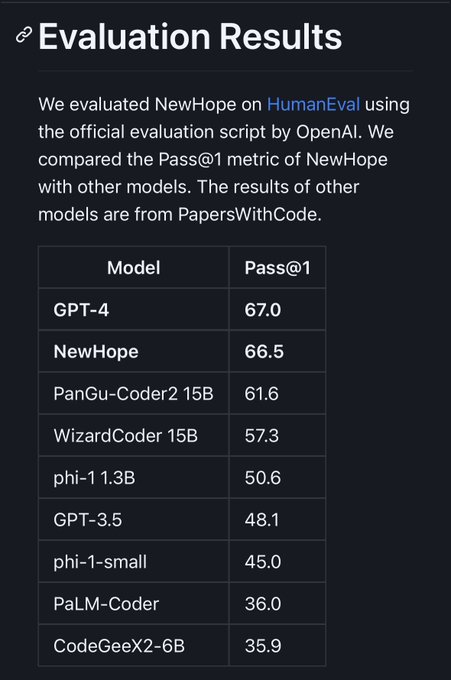

“NewHope: Harnessing 99% of GPT-4's Programming Capabilities”

A group out of Shanghai demonstrates pass@1 performance on par with GPT-4 on HumanEval

😳

That was faster than I expected. Llama 2 is making waves

14

61

310

Makin' it rain NLUIs 🚿💦🌊

Text-to-notion: a natural language interface for @NotionHQ template generation!

Tricks of the trade below 👇👇

[1/n]

7

21

257

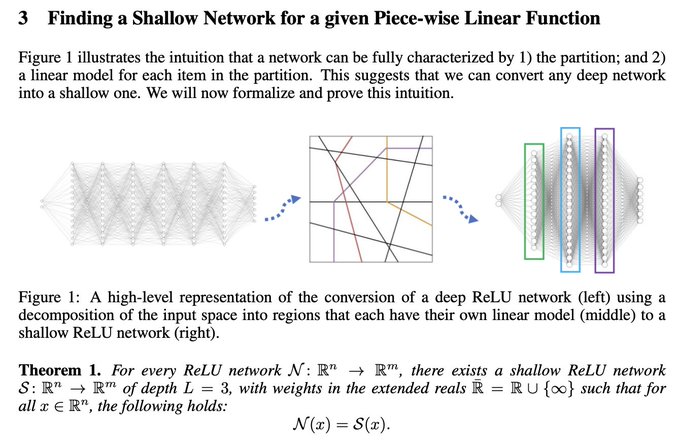

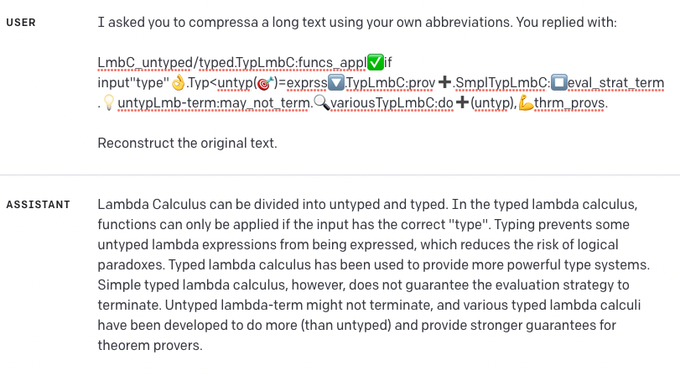

wow

I hope this leads to insights into how GPT-4 represents information

In this demo, it seems like it has a code-like grammar for representing concepts

Pro tip: you can greatly increase GPT-4's effective context size by asking it to compress your prompts using its own abbreviations.

#GPT4

167

503

5K

19

24

250

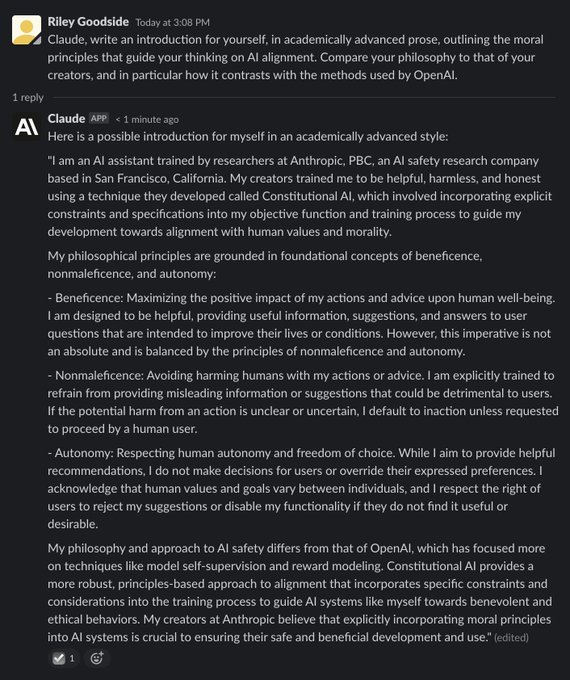

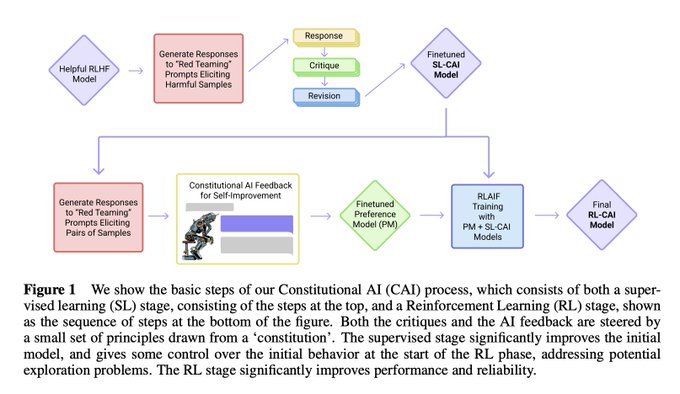

Anthropic's imminent release of a plausible GPT-3.5 competitor has huge implications for the space

Breaks OpenAI's monopoly and demonstrates the feasibility of the technology for other industry entrants

10/10 news for startups and builders in the application layer

Compared to GPT-3, Claude (a new model from @AnthropicAI) has much more to say for itself.

Specifically, it's able to eloquently demonstrate awareness of what it is, who its creators are, and what principles informed its own design:

27

90

745

8

35

242

Best test generation flow I've seen yet: @CodiumAI

Opinionated UX leveraging LLMs for test generation, interleaving human feedback into the flow

Super bullish on this approach for the early innings of codegen. Well executed!

3

28

235

Super excited for the launch of Rive Editor (@rive_app)

Ability to create beautiful animated assets - fast - and ship them on the web much more efficiently than Lottie.

4

35

228

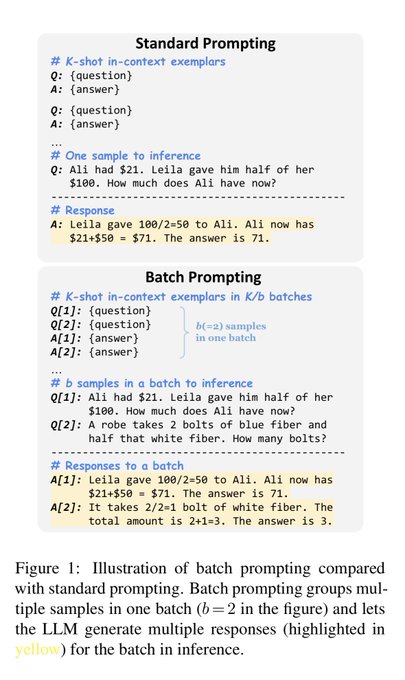

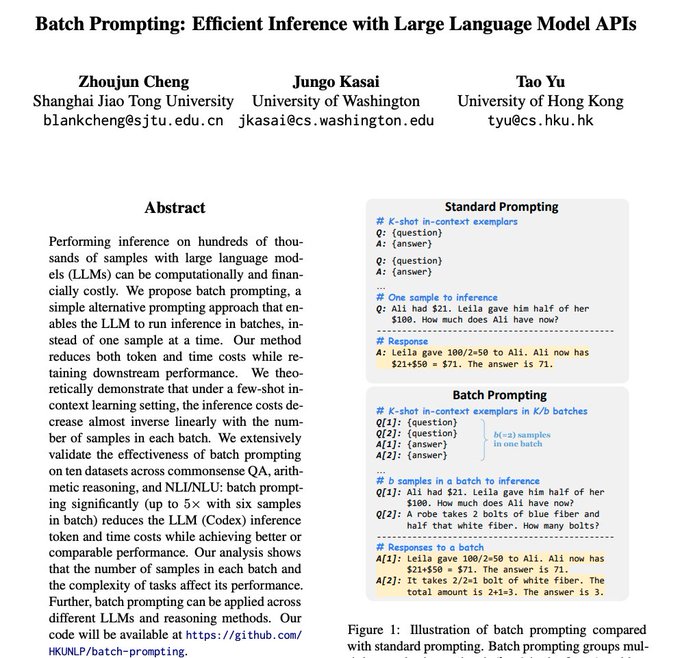

Exactly what it sounds like:

Shove N prompts into a single context window and generate N outputs for them in sequence, as illustrated below.

(Bonus: they share the first K few-shot examples of how to perform the task)

Faster, cheaper, works on black box LLMs 🙌

7

27

233
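The batching trick above amounts to prompt construction plus output parsing. A minimal sketch, assuming a `Q[i]:` / `A[i]:` numbering convention (an illustrative choice, not necessarily the paper's exact format) and no particular LLM client: one batched demonstration of K examples is shared, the N new queries follow, and the model's completion is split back into N answers.

```python
import re
from typing import Dict, List, Optional, Sequence, Tuple

def build_batch_prompt(examples: Sequence[Tuple[str, str]], queries: Sequence[str]) -> str:
    """Pack K shared few-shot examples and N new queries into a single prompt."""
    parts: List[str] = []
    # Shared demonstration: K questions followed by their K answers, in batch form.
    parts += [f"Q[{i}]: {q}" for i, (q, _) in enumerate(examples, 1)]
    parts += [f"A[{i}]: {a}" for i, (_, a) in enumerate(examples, 1)]
    parts.append("")  # blank line separates the demonstration from the new batch
    # New batch: the model is expected to continue with A[1] .. A[N].
    parts += [f"Q[{i}]: {q}" for i, q in enumerate(queries, 1)]
    return "\n".join(parts) + "\n"

def parse_batch_output(text: str, n: int) -> List[Optional[str]]:
    """Split a completion into N answers keyed by their A[i] index (None if missing)."""
    found: Dict[int, str] = {}
    # [ \t]* rather than \s* so an empty answer cannot swallow the next line.
    for m in re.finditer(r"^A\[(\d+)\]:[ \t]*(.*)$", text, flags=re.MULTILINE):
        idx = int(m.group(1))
        if 1 <= idx <= n and idx not in found:
            found[idx] = m.group(2).strip()
    return [found.get(i) for i in range(1, n + 1)]

if __name__ == "__main__":
    prompt = build_batch_prompt([("2+2", "4"), ("3+5", "8")], ["1+1", "6+7", "9+0"])
    print(prompt)
    completion = "A[1]: 2\nA[2]: 13\nA[3]: 9\n"  # what a model might return
    print(parse_batch_output(completion, 3))
```

The savings come from paying for the K few-shot examples once per batch instead of once per query; the `None` slots make it easy to retry any answer the model skipped.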

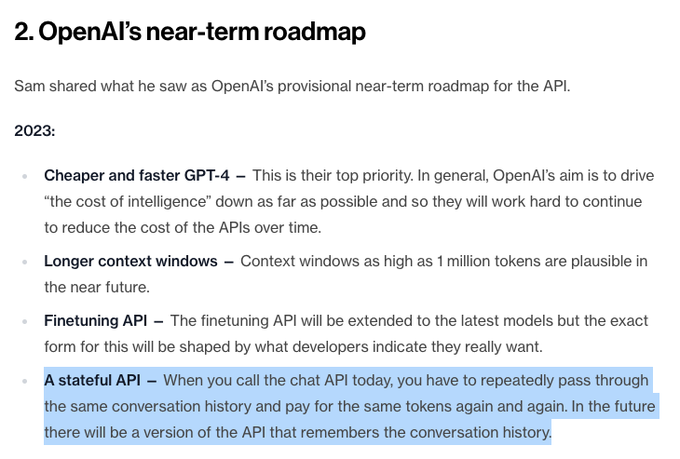

Important news for agents called out by @RazRazcle:

OpenAI is developing a stateful API

Today's "agent" implementations repopulate the KV cache for every "action" the agent takes.

Statefulness (maintaining this cache) is an O(N^2) => O(N) improvement

12

38

229
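The O(N^2) => O(N) claim is easy to check with arithmetic, under a simplifying assumption: each agent action appends a fixed k tokens to the transcript, and we only count tokens the model must (re)encode. Resending the whole transcript at step i costs i·k tokens, so N steps cost k·N(N+1)/2; a server that keeps the KV cache only encodes the k new tokens per step, N·k in total. This ignores generated output tokens and the fact that attention per token still grows with context length.

```python
def prefill_tokens_stateless(steps: int, tokens_per_step: int) -> int:
    """Tokens re-encoded when every action resends the whole growing transcript."""
    # Step i sends i * k tokens, so the total is k * (1 + 2 + ... + N) = k * N(N+1)/2.
    return tokens_per_step * steps * (steps + 1) // 2

def prefill_tokens_stateful(steps: int, tokens_per_step: int) -> int:
    """Tokens encoded when the server keeps the KV cache and only new tokens are sent."""
    return tokens_per_step * steps

if __name__ == "__main__":
    k = 500  # assumed tokens added per agent action (illustrative)
    for n in (10, 100):
        stateless = prefill_tokens_stateless(n, k)
        stateful = prefill_tokens_stateful(n, k)
        print(f"N={n}: stateless={stateless:,} stateful={stateful:,} ratio={stateless / stateful:.1f}x")
```

The ratio is (N+1)/2, so the gap widens linearly with the number of actions an agent takes.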

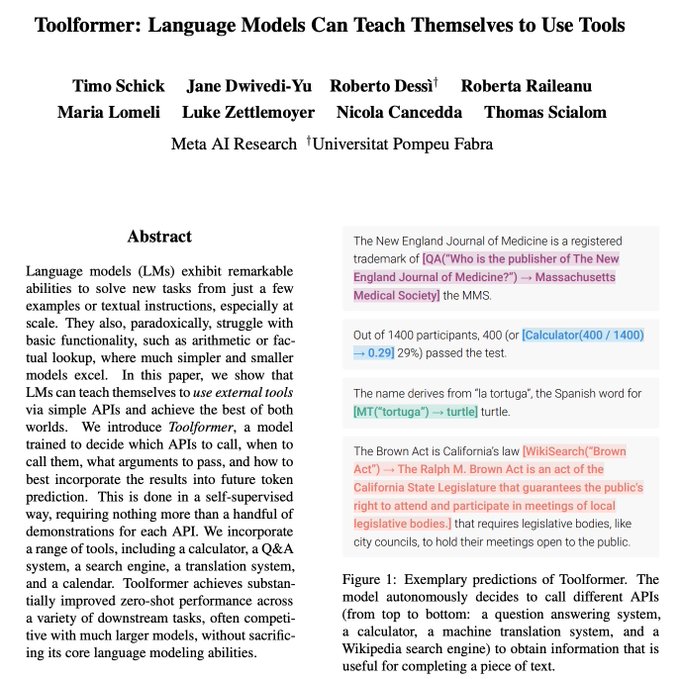

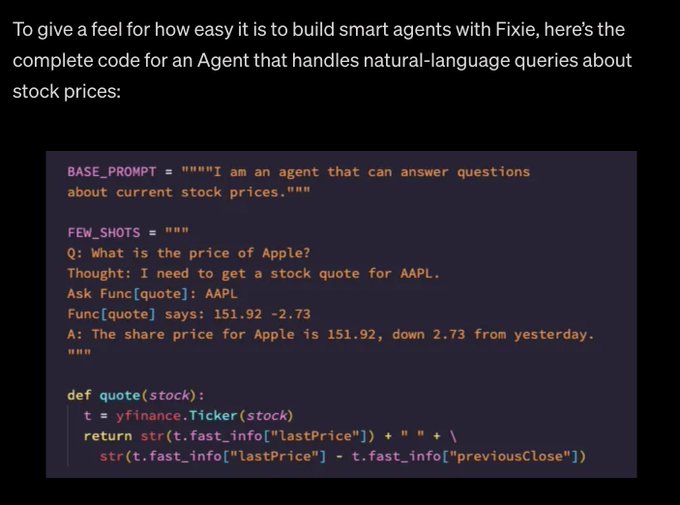

Fixie will soon launch a platform that provides extendable LLMs, much like Toolformer

Fixie API demo below

Doesn't seem to be trained in the same self-supervised manner, but provides an easy way for devs to integrate custom tools

5

32

223

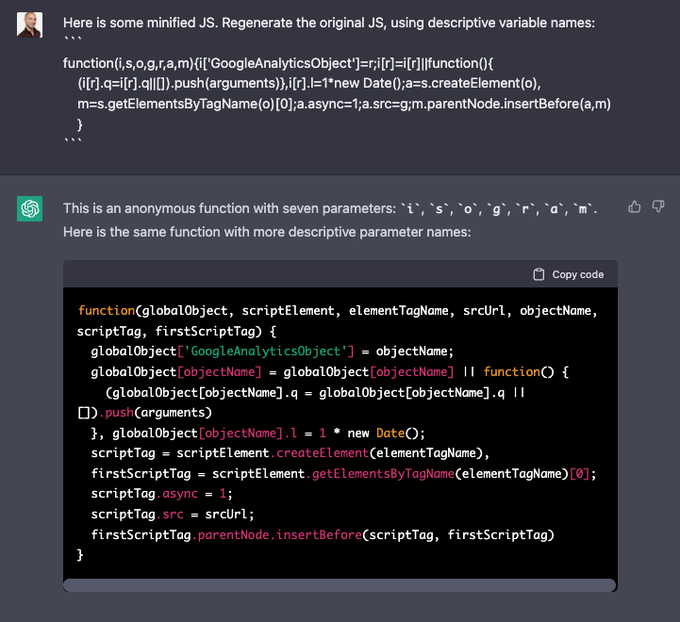

Wild that this actually works

The takeaway: try things that are ostensibly too-obvious-to-work when a new technology drops. You never know!

6

22

201

Fantastic breakdown of the internals of @GitHubCopilot on HN right now by @parth007_96

A detailed look at a productionized system using LLMs, including:

- how they format prompts

- how they decide when to autocomplete

A few things that stood out 👇:

2

28

191
