Mahed Mousavi

@mahedmousavi

Followers

151

Following

7K

Media

11

Statuses

365

Program Chair @IWSDSmeeting '26 Assistant Professor (RTD-a) @UniTrento_DISI Computational Linguistics, Conversational AI

Joined September 2013

RT @hafezeh_tarikhi: علی خامنهای، ۲۳شهریور۱۳۸۶:.مردم عراق مشکل برق دارند، مشکل آب سالم دارند، اینها جواب لازم دارد. اشغالگرها باید جواب….

0

3K

0

RT @raffagbernardi: @iwsdsmeeting @UniTrento @UniTrento_DISI Enjoy your summer holiday and then get ready for the IWSD deadline: *October….

0

2

0

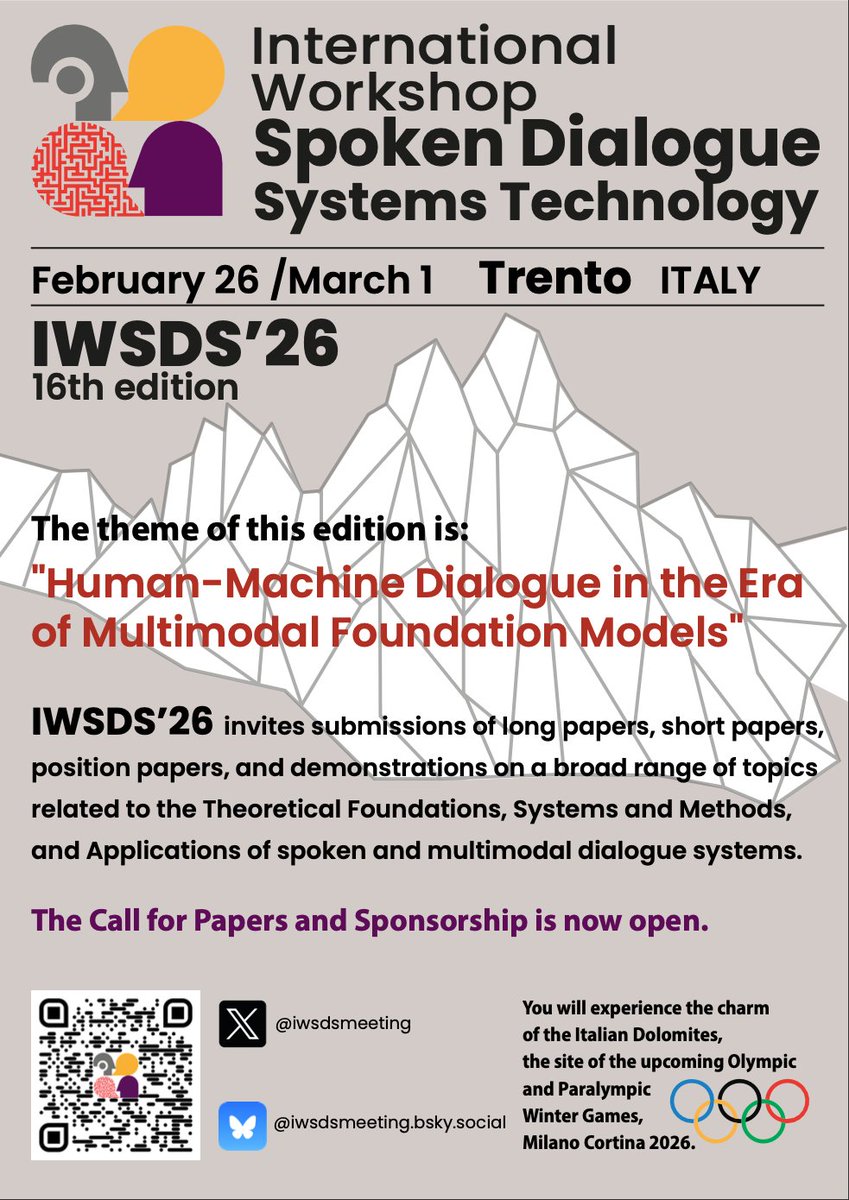

RT @iwsdsmeeting: 🎉.We’re excited to announce IWSDS 2026 will take place Feb 26 – Mar 1, 2026 in Trento🇮🇹! 🏔️✨.📢 Call for Papers is now ope….

sites.google.com

Welcome

0

10

0

RT @layerlens_ai: 🧠 High benchmark scores ≠ real-world reasoning. We teamed up with @mahedmousavi to explore why static evaluations miss th….

0

3

0

RT @PahlaviReza: یک هفته پس از رونمایی از پلتفرم #همکاری_ملی، به عنوان یک راه ارتباطی امن برای نیروهای نظامی، امنیتی، انتظامی و دولتی، نزد….

0

11K

0

RT @layerlens_ai: A must-read from @mahedmousavi et al. just dropped on arXiv: It confirms what many in the evalua….

arxiv.org

We conduct a systematic audit of three widely used reasoning benchmarks, SocialIQa, FauxPas-EAI, and ToMi, and uncover pervasive flaws in both benchmark items and evaluation methodology. Using...

0

1

0

🗑️Garbage In, #Reasoning Out?.High scores🎢 for reasoning in #LLMs? .BUT! What if your fav benchmark is just. BROKEN?.We audit popular reasoning benchmarks & studied how robust LLM reasoning is wrt input uncertainty. 📄 Paper:

arxiv.org

We conduct a systematic audit of three widely used reasoning benchmarks, SocialIQa, FauxPas-EAI, and ToMi, and uncover pervasive flaws in both benchmark items and evaluation methodology. Using...

well, the "something" they do is too surface-level to be significant. Garbage in, Briliance Out?.gonna put the paper on arxiv soon btw

1

5

12

well, the "something" they do is too surface-level to be significant. Garbage in, Briliance Out?.gonna put the paper on arxiv soon btw

of course LLMs don't "really reason". because if you ask people what is "reasoning" you will get many different answers, either very narrow or technical, or very broad and useless. and LLMs don't match the narrow ones, and the broad ones are too broad. the LLMs do. something.

0

0

0

⁉️Does "Blocking" put a stop to harassment in VR?.❓Can "Blocking" be a harassment tool itself?.Check out our new paper!!.🗞️"Investigating the Use and Perception of Blocking Feature in Social Virtual Reality Spaces: A Study on Discussion Forums".

dl.acm.org

This study explores the use of 'blocking' as a safety tool in social VR platforms, a feature widely implemented to protect users from harassment. Despite its prevalence, little research investigates...

0

0

0

RT @sislab7: 🎉 Congrats to @SimoneAlghisi for winning the Best Poster Award🥇 at #ICTDays for Dyknow🦕 @UniTrento_DISI ! 🏆👏💡🎉 .

0

4

0

❓ Can we fix the fragmented #knowledge within the #LLM? . 💡 We tried with a soft #neurosymbolic approach. 🗞️ New preprint:

0

0

2

We will present 🦕Dyknow @ #EMNLP2024 🏖️ today! Come and chat with us! . 📍In-Person Poster Session D, Riverfront Hall.⏲️10:30 - 12:00

SISLab will participate @ #EMNLP2024 with 2 Articles 🧵(2/2): .2. Is Your LLM Outdated? Can you fix it? .Look for "DyKnow🦕" to find out!!! .🗞️.📥 Repo: #knowledge #RAG #LLM #AI #NLP.

0

1

12

RT @sislab7: SISLab will participate @ #EMNLP2024 with 2 Articles 🧵(2/2): .2. Is Your LLM Outdated? Can you fix it? .Look for "DyKnow🦕" t….

github.com

Repository for "DyKnow: Dynamically Verifying Time-Sensitive Factual Knowledge in LLMs" presented @ EMNLP 2024 - sislab-unitn/DyKnow

0

1

0

#instruction_tuned #LLMs demonstrate a comparatively higher #prompt agreement and output consistency

We present DyKnow🦕, a Dynamic Knowledge benchmark where we show the (high) percentage of outdated knowledge & inconsistent responses in 18 open and closed LLMs! .🗞 Paper: 📥 Repo:

0

0

4

RT @m_guerini: This is crazy. At this point I think it is necessary to put a limitation on appendix pages.

0

1

0