Ludwig Sidenmark

@ludwigsidenmark

Followers: 614
Following: 1K
Media: 14
Statuses: 117

Postdoc @UofT | Researching HCI, XR, Eye Tracking | Prev PhD @LancasterUni. Also @RealityLabs, @Playstation, @TobiiTechnology | 🇸🇪 | I like baking

Toronto, Canada

Joined May 2013

Hi all, I’m on the job market for industry research scientist or TT faculty positions starting Summer 2025. Interested in roles related to HCI, XR, Eye Tracking, Adaptive Interfaces, and Human-AI Interaction. Please reach out if hiring or aware of any positions! RT appreciated!

Excited to present our work HeadShift: Head Pointing with Dynamic Control-Display Gain at #CHI2025 in Yokohama! Mon, 4:56–5:08 PM, Room G301 🎤 A big thank you to my amazing co-authors: @ludwigsidenmark, Florian Weidner, Joshua Newn, and @HansGellersen! See you there! 🙌

✍️ Introducing MaRginalia: A new way to take handwritten notes using #HoloLens and #iPad. Now, with fewer distractions! 👉 Check out our project video to learn more: https://t.co/7BBt32jKXv 🤝 In collaboration with Erin Kim, @sangho_suh, @ludwigsidenmark, and @ToviGrossman

📖 Marginalia: Mixed Reality for Lecture Note Taking #CHI2025 Marginalia lets students capture slides & transcripts in MR while staying engaged with the lecture. No more looking down at screens! 🔍 https://t.co/7QKKkZc8xj Led by @LepingQ at @UofT @UofTCompSci (5/13)

📢 New Research Alert! Just posted initial details of our latest papers across #CHI2025, #IUI2025, and #HRI2025! All work led by incredibly talented graduate students. Human-Centric AI, Programming Tools, Mixed Reality, Robotics, and more! 🔗 https://t.co/Q8iux1S8mv (1/13)

I am on the job market, seeking tenure-track or industry research positions starting in 2025. My research combines human-computer interaction and robotics—please visit https://t.co/POmSPUd2H9 for updated publications and CV. Feel free to reach out if interested. RT appreciated!

Preprint here: https://t.co/P9B63Iqzp9 Please join us in the Shared Spaces session on Tuesday, 15 October, at 9 am! https://t.co/xwSmKcPytM

Next week at #UIST2024 I'll present Desk2Desk, where we use optimization methods to integrate MR workspaces with different screen layouts for remote side-by-side collaboration! This work was done with Tianyu Zhang, Leen Al Lababidi, @J_Chris_Li and @ToviGrossman. Check out our video!

💼 I'm on the job market for tenure-track faculty positions or industry research scientist roles, focusing on HCI, Human-AI interaction, Creativity Support, and Educational Technology. Please reach out if hiring or aware of relevant opportunities! RT appreciated! 🧵 (1/n)

For more info on past and current projects and my CV, please get in touch or check out my website: https://t.co/yYf61V3SmW

Excited to announce our latest TOCHI publication: "HeadShift: Head Pointing with Dynamic Control-Display Gain" with @ludwigsidenmark @FlorianWeidner @JoshuaNewn @HansGellersen

dl.acm.org

Head pointing is widely used for hands-free input in head-mounted displays (HMDs). The primary role of head movement in an HMD is to control the viewport based on absolute mapping of head rotation to...

This may come a bit late, but I had a blast working with @ludwigsidenmark as #CHI2024 video preview chairs! Also glad to retire from this role after 3 consecutive years of CHI!!

Human-Computer Interaction research from @UofTCompSci is well represented at #CHI2024 with over 30 accepted papers, including a Best Paper Award and a few honourable mentions! https://t.co/yqu1iVIMbD

All CHI 2024 video previews are now up! Check them here 👉 https://t.co/oJgZdJtglV Huge thanks to @kashtodi for the stellar support with all SIGCHI conference videos over the years & to my video preview buddy @ludwigsidenmark for the great teamwork! 🎉 #CHI2024 #SIGCHI

🚨 The #CHI2024 technical program teaser is here! Watch now at https://t.co/gwlMSfsceK Big applause to my video preview buddy @ludwigsidenmark for the fantastic editing work!! #SIGCHI #HCI

3. A project led by @rubyleehs in which we developed three techniques that allow viewport control in virtual reality using only your eyes. With @rubyleehs, @0xflarion and @HansGellersen. https://t.co/ARnn2fV2Vj

2. A project led by Pavel Manakhov in which we investigate how walking and jogging affect our ability to visually focus on targets in extended reality. With Pavel, @KenPfeuffer and @HansGellersen. https://t.co/KLqVKg10cp

1

0

10

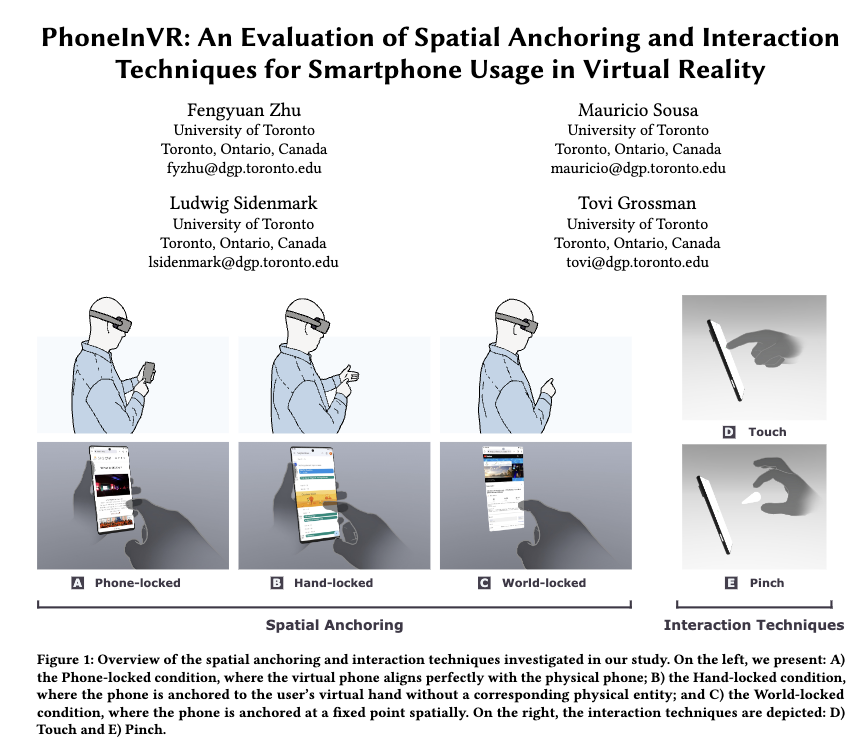

I was lucky to be involved in 3 #CHI2024 papers. :-) 1. A project led by @FengyuanZ in which we investigate spatial anchoring and interaction techniques for interacting with smartphones in virtual reality. With @FengyuanZ, @vivaomauricio and @ToviGrossman. https://t.co/kwjPFJR5AF

Please share: We are looking for a new PhD student for the research project “Human-Computer Interaction in Extended Reality (XR)” – read more here:
