Lerrel Pinto

@LerrelPinto

Followers: 8K · Following: 3K · Media: 167 · Statuses: 673

Making robots more dexterous, robust and general. Co-founder of Assured Robot Intelligence (ARI) | Prof @nyuniversity

NYC

Joined June 2019

We have developed a new tactile sensor, called e-Flesh, with a simple working principle: measure deformations in 3D printable microstructures. Now all you need to make tactile sensors is a 3D printer, magnets, and magnetometers! 🧵

Deeply honored to be part of MIT Technology Review's Innovators Under 35 list this year. This recognition highlights our work on building robot intelligence that generalizes to unseen and unstructured human environments, carried out with my friends & colleagues at NYU Courant & beyond.

For agents to improve over time, they can’t afford to forget what they’ve already mastered. We found that supervised fine-tuning forgets more than RL when training on a new task! Want to find out why? 👇

Starting the semester with @kchonyc telling the story of @NYU_Courant & @NYUDataScience CILVR group to a full house of students. Featuring @rob_fergus & @ylecun.

Happy to share that I'm a Dr. now! 🎓🎉 I'm indebted to @LerrelPinto for his 🔥 advice & mentorship, and an unforgettable five years. Next up, I'm starting a postdoc with @JitendraMalikCV, spending time at @berkeley_ai & @AIatMeta. Would love to say hi if you're in the bay area!

90% success rate in unseen environments. No new data, no fine-tuning. Autonomously. Most robots need retraining to work in new places. What if they didn’t? Robot Utility Models (RUMs) learn once and work anywhere... zero-shot. A team from NYU and Hello Robot built a set of

Thanks @IlirAliu_ for a detailed thread on our lab's work! I have been so fortunate to work with spectacular students and collaborators over the past decade. Now prepping for our next big release 😉

From dexterous hands to imitation from internet videos, his group keeps dropping breakthroughs that set the tone for the field. @LerrelPinto’s lab at NYU has quietly reshaped robotic learning. A breakdown 🧵 [📍SAVE MEGA THREAD FOR LATER📍]

It was wonderful chatting with @IlirAliu_ to share my journey into robotics and discuss what it will take to solve robotics’ toughest problems.

🎙️Ep 75: In this episode, I talk with @LerrelPinto, Assistant Professor at NYU and one of the most cited researchers in robotics today: His work spans everything from self-supervised learning to robot dexterity, and he's on a mission to make robots generalize the way humans do.

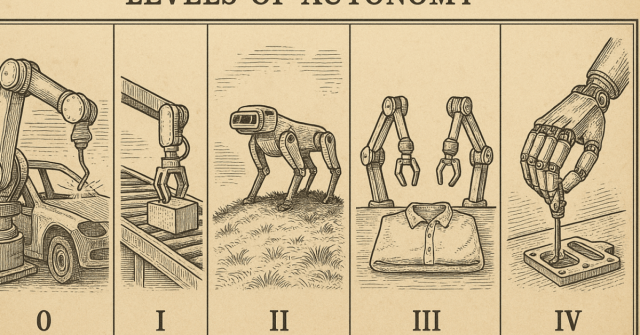

I had a wonderful time talking to @robotknower and Niko Ciminelli for @SemiAnalysis_'s article on Robotics Levels of Autonomy. The frontier of robotics (level 4) is indeed force-dependent dexterity, which our team is actively pushing on. Stay tuned 😉 https://t.co/Fpz0JHyeLV

semianalysis.com

Robots have powered manufacturing for decades, yet they stayed single-purpose and thrived only in perfect settings. Previous attempts at intelligent machines overpromised and underdelivered. But th…

Great to see the first glimpse of @SkildAI. It’s also nice to see how the data-driven robotics work that @gupta_abhinav_ and I developed a decade ago is being applied today. Congratulations to the team on this release.

Modern AI is confined to the digital world. At Skild AI, we are building towards AGI for the real world, unconstrained by robot type or task — a single, omni-bodied brain. Today, we are sharing our journey, starting with early milestones, with more to come in the weeks ahead.

i got to play with the e-flesh and robotic hand at Open Sauce! these low-cost, open-source designs made me so excited for all the new humanoid research and hobbyist projects they can be used in!!

Is "scaling is all you need" the right path for robotics? Announcing our @corl_conf workshop on "Resource-Rational Robot Learning", where we will explore how to build efficient intelligent systems that learn & thrive under real-world constraints. Submission deadline: Aug 8 🧵

Nice to see RUMs being live-demoed at the @UN AI for Good Summit in Geneva. You can pretty much drop any object in front of this robot and it can semi-reliably pick it up. This general-purpose model and associated robot tools will be open-sourced soon!

We had an incredible time showcasing everything Stretch 3 is capable of at the AI for Good Summit! It was a pleasure to be joined by the talented team from the NYU GRAIL lab, who demonstrated their cutting-edge work on Robot Utility Models. learn more at: https://t.co/1qVA6yWDUf

Robots no longer have to choose between being precise or adaptable. This new method gives them both! [📍 Bookmark Paper & Code] ViTaL is a new method that teaches robots precise, contact-rich tasks that work in any scene. From your cluttered kitchen to a busy factory floor.

A nice pipeline: use a VLM to find objects in scene, get close, and use a well-constrained visuo-tactile policy to handle the last inch.

Current robot policies often face a tradeoff: they're either precise (but brittle) or generalizable (but imprecise). We present ViTaL, a framework that lets robots generalize precise, contact-rich manipulation skills across unseen environments with millimeter-level precision. 🧵

🚀 With minimal data and a straightforward training setup, our VisualTactile Local Policy (ViTaL) fuses egocentric vision + tactile feedback to achieve millimeter-level precision & zero-shot generalization! 🤖✨ Details ▶️ https://t.co/OOEjKqisEZ

For the full paper, videos and open-sourced code: https://t.co/k7DdGBy1V7 This project was led by @Zifan_Zhao_2718 & @Raunaqmb and a collaboration with @haldar_siddhant & @cui_jinda_hri
