Kevin Frans

@kvfrans

4K Followers · 785 Following · 149 Media · 488 Statuses

@berkeley_ai @reflection_ai prev mit, read my thoughts: https://t.co/7CZsOTrKRA

Berkeley, CA

Joined August 2013

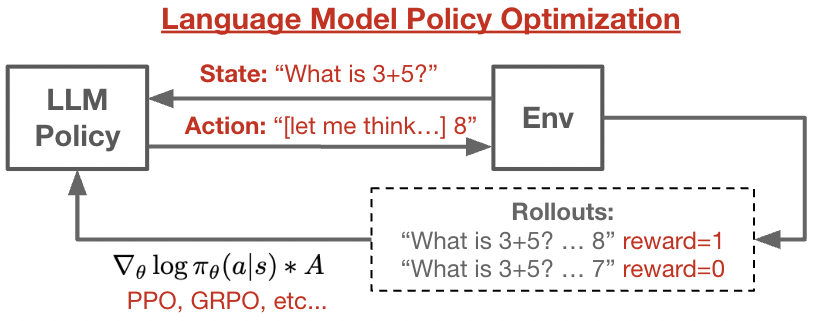

LLM RL code does not need to be complicated! Here is a minimal implementation of GRPO/PPO on Qwen3, written from scratch in JAX in around 400 core lines of code. The repo is designed to be hackable and to prioritize ease of understanding for research:
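
For readers new to GRPO, here is a minimal sketch of its two core pieces in plain Python. It is not JAX and not code from the repo. The first piece computes group-relative advantages: each sampled completion's reward is normalized against the other completions for the same prompt. The second is the PPO-style clipped surrogate, evaluated per token. The function names, the use of the population standard deviation, and the default `clip_eps=0.2` are illustrative assumptions.

```python
import math
from typing import List


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages (sketch): normalize each reward within its group.

    `rewards` holds the scores of several completions sampled for ONE prompt.
    Assumption: population std; some implementations use the sample std.
    """
    n = len(rewards)
    mean = sum(rewards) / n
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / n)
    # eps keeps the division finite when every completion got the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


def clipped_surrogate(logp_new: float, logp_old: float,
                      advantage: float, clip_eps: float = 0.2) -> float:
    """PPO clipped objective for a single token (a quantity to MAXIMIZE)."""
    ratio = math.exp(logp_new - logp_old)              # pi_new / pi_old
    clipped = max(min(ratio, 1.0 + clip_eps), 1.0 - clip_eps)
    # Pessimistic bound: take the smaller of the unclipped and clipped terms.
    return min(ratio * advantage, clipped * advantage)


if __name__ == "__main__":
    # Two of four completions solved the task; the successes get advantage
    # of about +1 and the failures about -1.
    print(group_advantages([1.0, 0.0, 0.0, 1.0]))
```

In a full trainer, a completion's advantage is typically broadcast to all of its tokens, the surrogate is averaged over tokens, and its negative is minimized with the optimizer.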

Code understanding agents are kind of the opposite of vibe coding -- users actually end up with *more* intuition. It's also a problem ripe for multi-step RL. Contexts are huge, so there's a lot of headroom for agents that "meta" learn to explore effectively. Exciting to push on!

Engineers spend 70% of their time understanding code, not writing it. That’s why we built Asimov at @reflection_ai. The best-in-class code research agent, built for teams and organizations.

RT @seohong_park: Flow Q-learning (FQL) is a simple method to train/fine-tune an expressive flow policy with RL. Come visit our poster at…

This is a great investigation, and it scratches an itch that often comes up when using gradient accumulation -- why not just make more frequent updates? The answer is that you need to properly scale Adam's b1/b2 settings too!

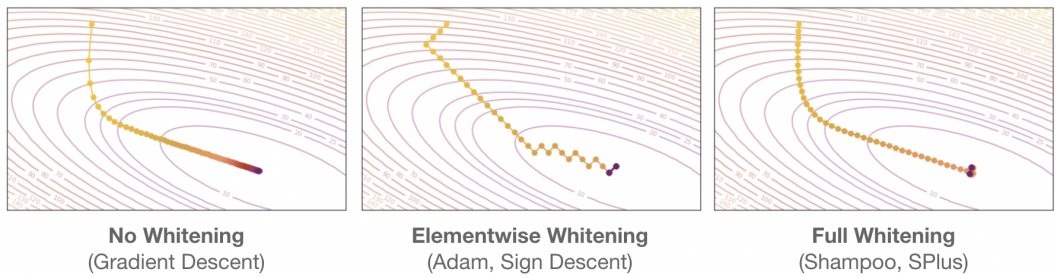

🚨 Did you know that small-batch vanilla SGD without momentum (i.e. the first optimizer you learn about in intro ML) is virtually as fast as AdamW for LLM pretraining on a per-FLOP basis? 📜 1/n
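
One way to make "properly scale the b1/b2 settings" concrete is to hold each Adam EMA's half-life fixed in samples (or tokens) rather than in optimizer steps. That rule is the assumption behind this sketch. An EMA with decay β halves a gradient's weight after ln(0.5)/ln(β) steps. When the batch size changes from B to B′, keeping that half-life constant in samples gives β′ = β^(B′/B). The helper names below are hypothetical and are not taken from the thread or the paper.

```python
import math


def half_life_steps(beta: float) -> float:
    """Optimizer steps until an EMA with decay `beta` down-weights a gradient by 1/2."""
    return math.log(0.5) / math.log(beta)


def rescale_beta(beta: float, old_batch: int, new_batch: int) -> float:
    """Hypothetical helper: keep the EMA half-life fixed in SAMPLES, not steps.

    Half-life in samples = half_life_steps(beta) * batch_size, and
    log(beta ** (new/old)) = (new/old) * log(beta), so the product is preserved.
    """
    return beta ** (new_batch / old_batch)


if __name__ == "__main__":
    b2_small = rescale_beta(0.999, old_batch=512, new_batch=64)
    print(b2_small)  # closer to 1: smaller batches need slower decay
```

For example, going from batch 512 to 64 with β2 = 0.999 gives β2 ≈ 0.99987, so the second-moment estimate still averages over roughly the same number of samples even though each step sees fewer of them.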

RT @kevin_zakka: We’re super thrilled to have received the Outstanding Demo Paper Award for MuJoCo Playground at RSS 2025! Huge thanks to e…

RT @N8Programs: Replicated in MLX on MNIST. S+ is an intriguing optimizer that excels at both memorizing the training data and generalizing…

Thanks to my advisors @svlevine and @pabbeel for supporting this project, which is not in my usual direction of research at all. And thank you to TRC for providing the compute! Arxiv: Code:

github.com/kvfrans/splus

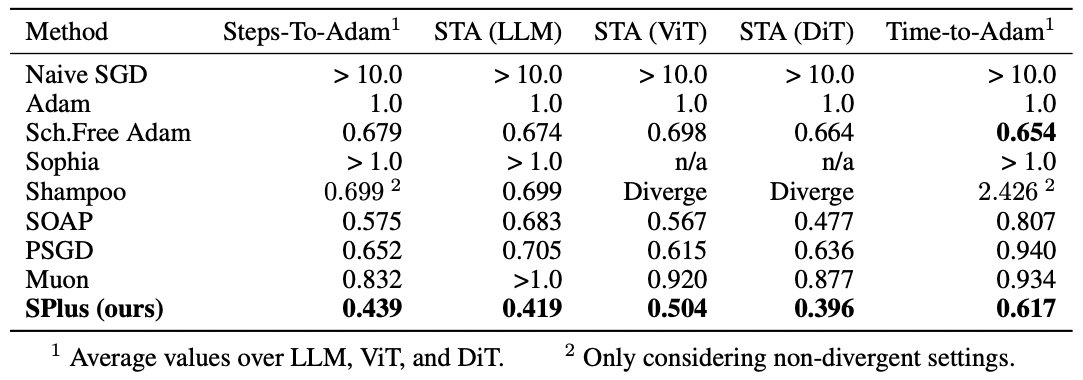

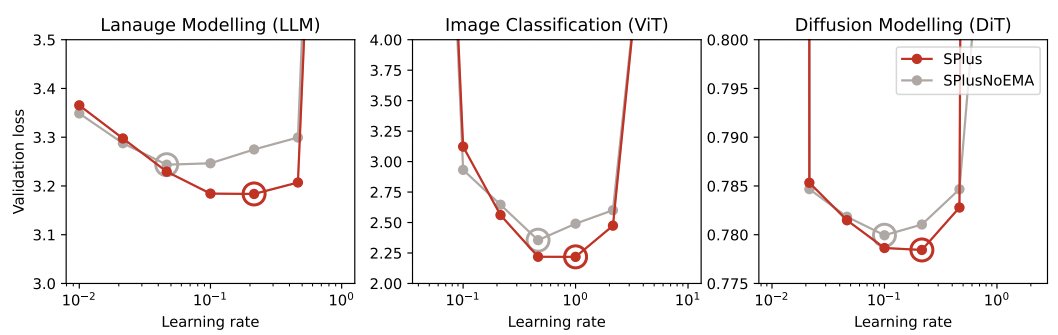

We tried our best to make SPlus easy to use, so please try it out! You can swap from Adam in just a few lines of code. The JAX and PyTorch implementations are single-file and minimal. Let me know how it goes :) Repo:

github.com/kvfrans/splus

I really liked this work because of the solid science. There are 17 pages of experiments in the appendix… We systematically tried to scale every axis we could think of (data, model size, compute), and across 1000+ trials found only one thing that consistently mattered.

Is RL really scalable like other objectives? We found that just scaling up data and compute is *not* enough to enable RL to solve complex tasks. The culprit is the horizon. Paper: Thread ↓

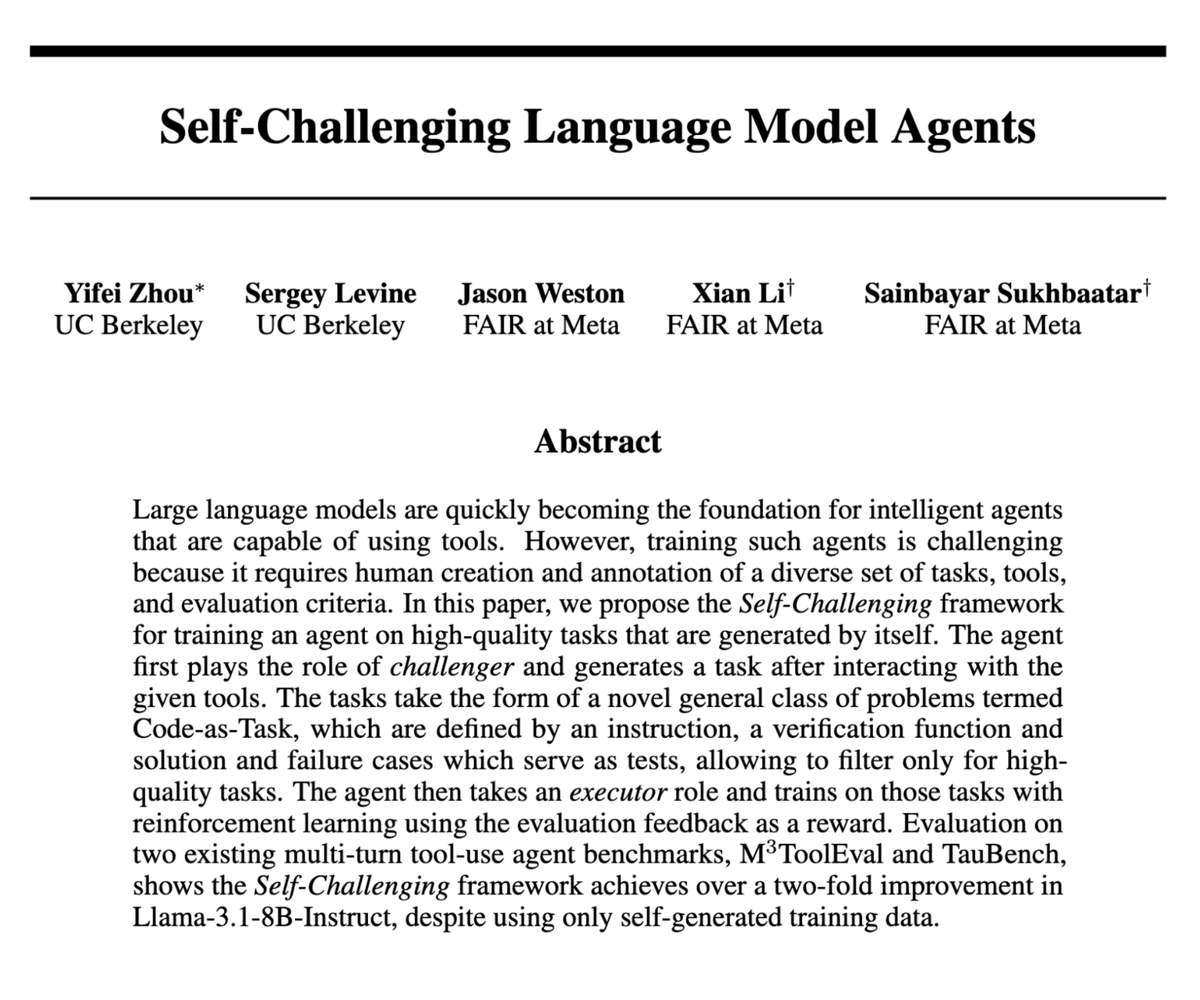

Cool ideas from Yifei, who generally has a great sense for building these self-improving systems.

📢 New Preprint: Self-Challenging Agent (SCA) 📢 It’s costly to scale agent tasks with reliable verifiers. In SCA, the key idea is to have a separate challenger agent explore the env and construct tasks along with verifiers. Here is how it achieves 2x improvements on general
