Keunwoo Choi

@keunwoochoi

Followers: 5,638

Following: 807

Media: 383

Statuses: 4,154

AI x {LLM Engineer @PrescientDesign @genentech, Advisor @gaudiolab}. music, audio, language, AI. Prev: @BytedanceTalk, @spotify, @c4dm @qmul.

New York, NY

Joined June 2015

I won the best paper award in #ismir2017!!! Feeling honoured!!!! Thanks to co-authors @markbsandler, György Fazekas, @kchonyc

21

20

263

Animated attention by @kchonyc which took his good 3hrs @facebookai @PyTorch #torched #deeplearning #nlp #attention #seq2seq #kyunghyuncho #nyu #cds

3

12

195

🌱 We’re hiring 2024 summer research interns on LLMs for drug discovery and biomedical applications. Join me, @stephenrra, @kchonyc, and other amazing people at NYC to work on the LLM product development of @PrescientDesign, @genentech ✨

Details:

0

21

115

THIS IS BIG! All the music folks in Google Deepmind focus on one thing: AI music generation while NOT exploiting artists. Nothing is perfect, there're probably still some holes in giving the credit, but this is better than anything ever for very sure.

Thrilled to share #Lyria, the world's most sophisticated AI music generation system. From just a text prompt Lyria produces compelling music & vocals. Also: building new Music AI tools for artists to amplify creativity in partnership w/YT & music industry

111

537

3K

2

8

93

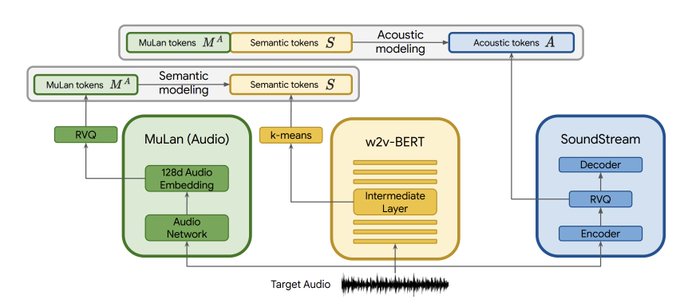

New AI music model alert! yes, again 🎉 #SingSong, another music generation model by Google; @chrisdonahuey et al.

Ok let me do another run for collecting followers. How does it work?

1

11

90

the “llama moment” has come to audio research today! i can’t even imagine what we’ll see out of AudioCraft.

whatever you work on in music/audio, do consider using it, as much as you can. if you don’t know what to do, think what you can do with it and get a head start.

4

11

92

"All you need is AI and music" by Keunwoo Choi, 2021-12-08; A guest lecture at New York University - at

@kchonyc's class. Now you can watch it :)

4

17

91

My #NeurIPS2018 summary from a music x ML (=music information retrieval (=MIR)) perspective is here:

3

29

85

for #icassp2024 attendees, i'm open sourcing my `What to eat around COEX` list. originally written for @cwu307 but sharing it with a larger crowd to make the world a better place, reduce p(doom), etc.

2

14

81

Hi people!

Me and @kchonyc's #ismir2019 paper, "Deep Unsupervised Drum Transcription" aka 🥁 DrummerNet is here.

Paper -->

Blog post -->

Supplementary material -->

2

18

79

📄+📄+📄+📄+📄+📄+📄= 7 papers

🔥MIR researchers at ByteDance (SAMI team) had 7 papers accepted to #ISMIR2021 🔥

🧵I'll introduce them here one by one :)👇

1

6

79

Audio/Deep learning folks - please go check out our `torchaudio-contrib`!

By @faroit, Kiran Sanjeevan, and me.

3

27

66

👋

I joined @PrescientDesign recently. I distracted @kchonyc with music research circa 2016-2019. This time he invited me to join his realm -- languages! I'm already having a lot of fun, knowing there's more to come.

6

2

65

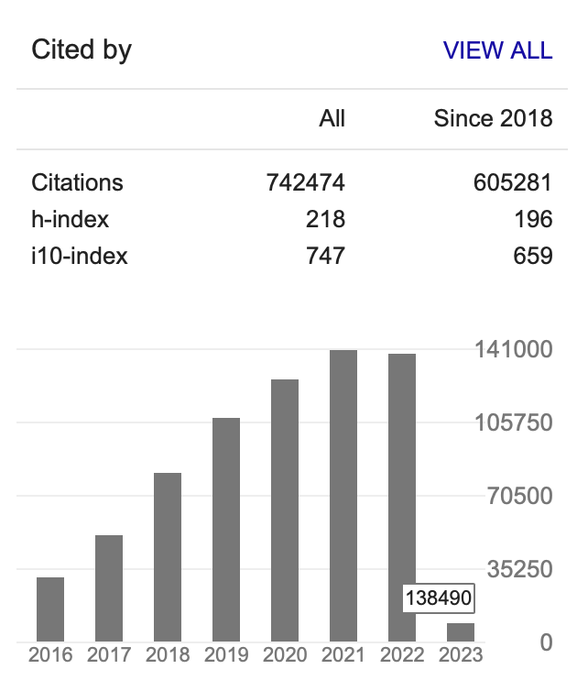

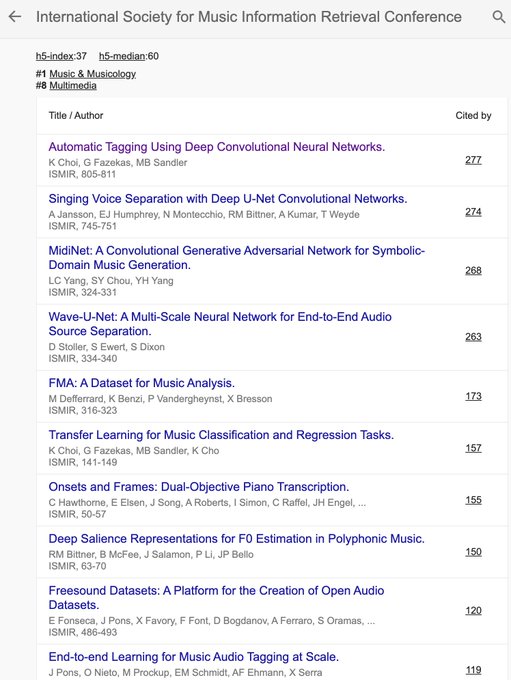

<shameless as always>

my papers are the 1st and 6th most cited ISMIR papers in the last 5 years!🔥🔥

heard it was mentioned at the #ismir2021 trivia organized by the titans @r4b1tt @urinieto. i think they should arXiv the trivia and cite my paper thx

5

2

61

ByteDance/TikTok is hiring research scientists and software developers around music information retrieval and music/audio signal processing at Mountain View, US. Please hit me up!

#ismir2020

1

12

57

@urinieto ROCKING #ismir2019 HAHAHAHAHAHA 😂😂😂 seriously, my every follower should watch this otherwise please unfollow thanks.

0

12

53

*QUITE A FEW* papers are accepted to #ismir2021 from our team in ByteDance 🚀🚀🚀🚀🚀🚀🚀 I'll share more details once the proceedings are updated.

And yes we're hiring 🔥🔥🔥🔥🔥🔥🔥

0

2

45

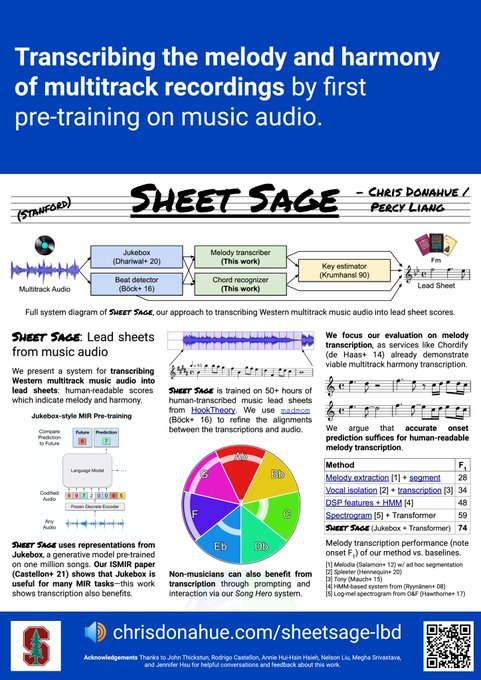

Sheet Sage: Lead sheets from music audio

Leverage Jukebox for melody extraction.

Who'd submit this level of amazing work simply to a late-breaking/demo session? This guy → @chrisdonahuey

1

6

44

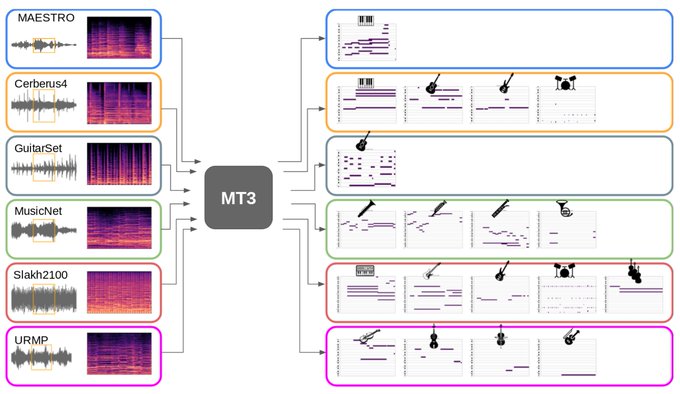

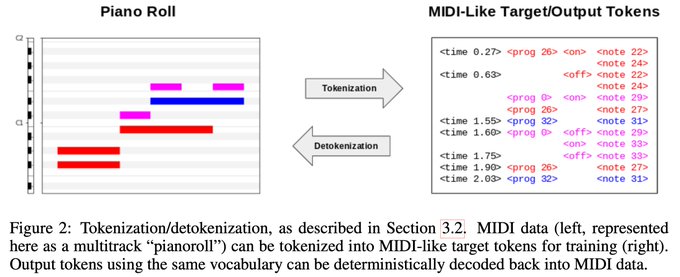

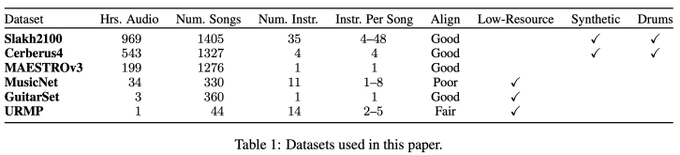

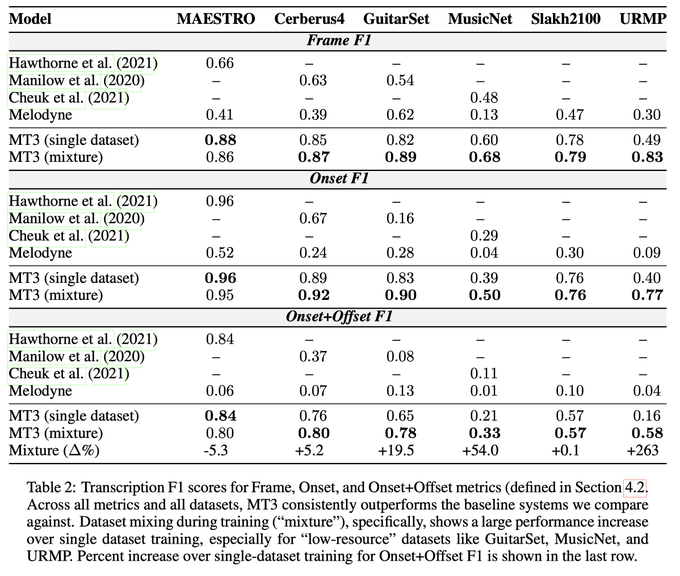

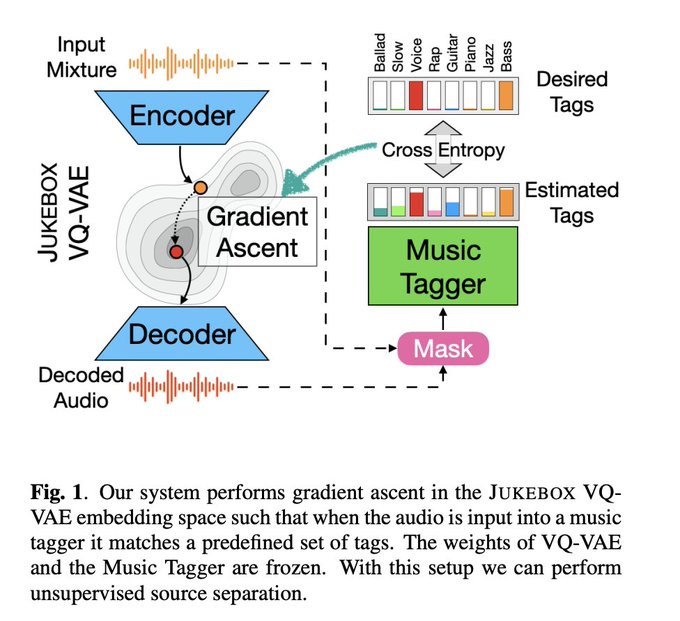

amazing, amazing. done by @ethanmanilow @pseetharaman et al.

2

5

43

🚨 We have an MLE position open at @PrescientDesign to find a strong engineer to make our language models stronger.

2

12

39

Room Impulse Response Estimation in a Multiple Source Environment. By @kyungyunleee at @gaudiolab et al.

3

2

40

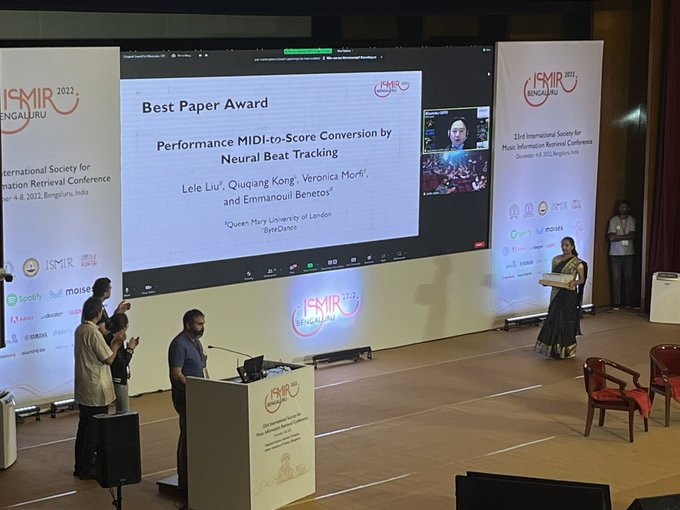

c4dm folks won the #ismir2022 best paper award!! 🎉🥳🎊 amazing!

congrats, @liulelecherie @QiuqiangK @veromorfi @emmanouilb!

0

2

40

i'm giving an introductory talk about LLMs for drug discovery at #ASCPT2024 pre-conference soon.

2

8

38

new music AI model alert 🚨

get your music tracks segmented by @taejun_kim_

2

3

38

DawDreamer: A Python-interfaced DAW. Yeah we can do a lot of things with this.

1

4

38

look how shamelessly i'm included here! as always, it was great to connect to all the great researchers in MACLab supervised by @juhan_nam at @ISMIRConf.

This year, people from the Music and Audio Computing Lab at KAIST, led by @juhan_nam, participated in the @ISMIRConf, and presented our work through scientific programs, late-breaking demos and music sessions!

1

3

35

1

0

35

The longest ever video of me talking in public has become public. "Deep Learning with Audio Signals: Prepare, Process, Design, Expect" in @QConAI. In case me tweeting around you isn't enough.

1

5

35

generative AI audio is here to stay.. and prosper! check out this year's challenge.

T7. Sound Scene Synthesis

#DCASE2024

0

2

33

are you an LLM nerd who can understand ML/language model papers and write good code? 👀

2

4

32

The #ismir2019 poster repo is hosting 25 posters and has 38 stars now. Would you please 'Like' this tweet if you've ever been to the repo and seen any posters there? I wanna know its impact. Thanks!

0

6

33

"Building the MetaMIDI Dataset: Linking Symbolic and Audio Musical Data"

A hell of a lot of MIDI files and matched audio clips.

#ismir2021

1

4

31

oo more text-to-music to come. this time, from academia!

2

6

31

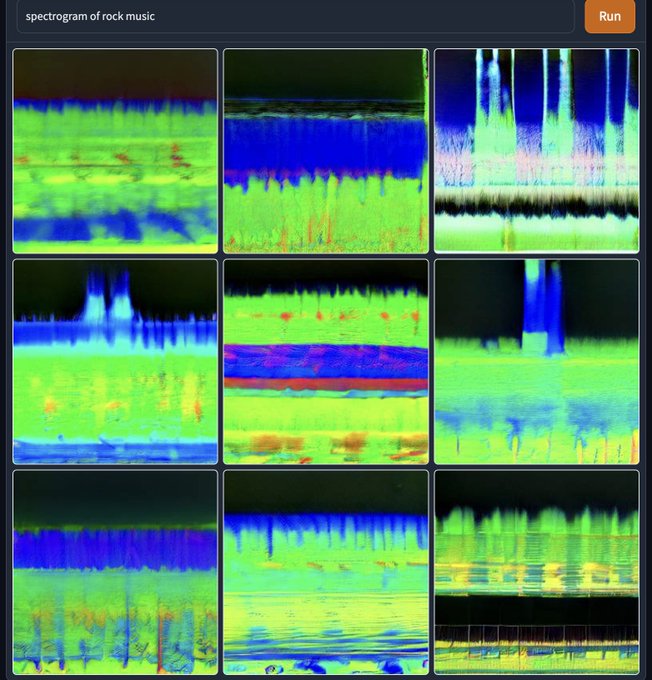

so are spectrograms just images???

Riffusion, real-time music generation with stable diffusion

@huggingface model:

project page:

64

626

3K

6

2

31