Jianlan Luo

@jianlanluo

Followers

1K

Following

120

Media

45

Statuses

115

Previously Postdoc @berkeley_ai at Google X @Theteamatx, PhD from @UCBerkeley

Berkeley, CA

Joined January 2013

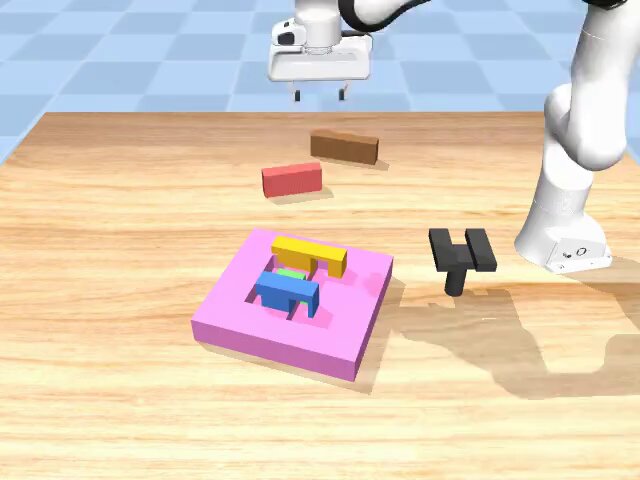

We present HIL-SERL, a reinforcement learning framework for training general-purpose vision-based robotic manipulation policies directly in the real-world. It effectively addresses a wide range of challenging manipulation tasks: dynamic manipulation, dual-arm coordination,

8

31

302

HIL-SERL appears at Science Robotics today!It learns complex robotic skills with reinforcement learning directly in the real world in just 1-2 hours Website: https://t.co/BBkivJmXGG

https://t.co/rZE0K0FQxH

6

19

136

This was fun a fun project! It's also nice to work together with @jianlanluo and his new colleagues at @AgiBot_zhiyuan to get such a variety of skills running with one cross-embodiment VLA.

We are excited to share new experiments with AgiBot @AgiBot_zhiyuan on multi-task, multi-embodiment VLAs! With one model that can perform many tasks with both two-finger grippers and multi-fingered hands, we take another step toward one model for all robots and tasks.

2

7

103

Very happy to work with some old and new friends at @physical_int and @AgiBot_zhiyuan to make this happen! One generalist policy for dexterous manipulation (hand + gripper), more videos are available at:

We are excited to share new experiments with AgiBot @AgiBot_zhiyuan on multi-task, multi-embodiment VLAs! With one model that can perform many tasks with both two-finger grippers and multi-fingered hands, we take another step toward one model for all robots and tasks.

0

0

25

Great work led by @yunhaif , and in collaboration with @csu_han @zhuoran_yang @YueXiangyu @svlevine For more: Website: https://t.co/rvd1EzA8Wo Code: https://t.co/izs5ZgK8yF Paper:

arxiv.org

Solving complex long-horizon robotic manipulation problems requires sophisticated high-level planning capabilities, the ability to reason about the physical world, and reactively choose...

0

1

11

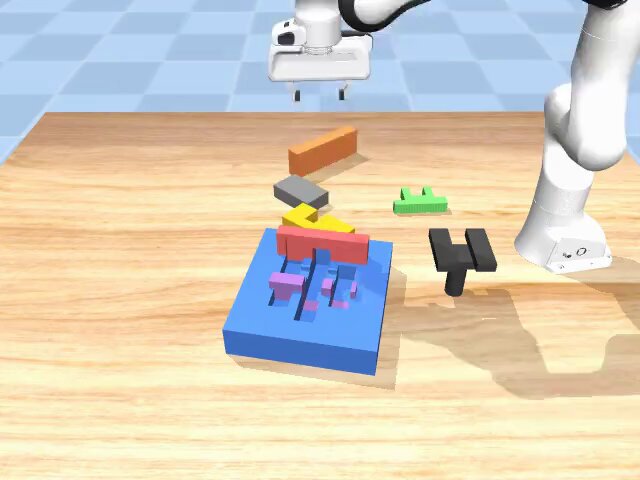

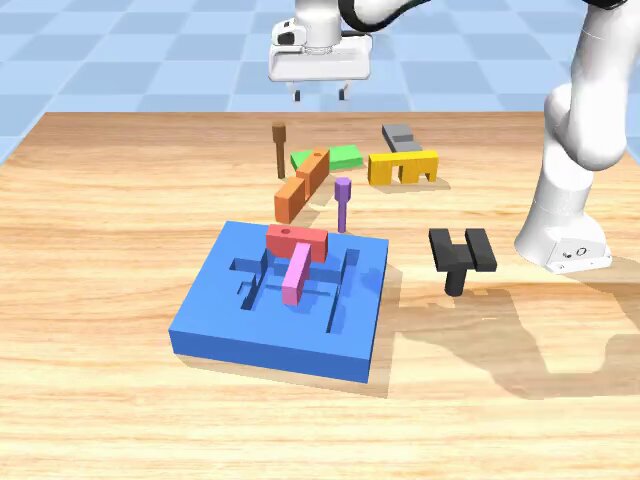

And it works really well on these very difficult tasks! I'll highlight a few that the initial configurations were intentionally presented as infeasible to proceed. ReflectVLM was able to generate plans that move out the blocking objects and replan.

1

0

1

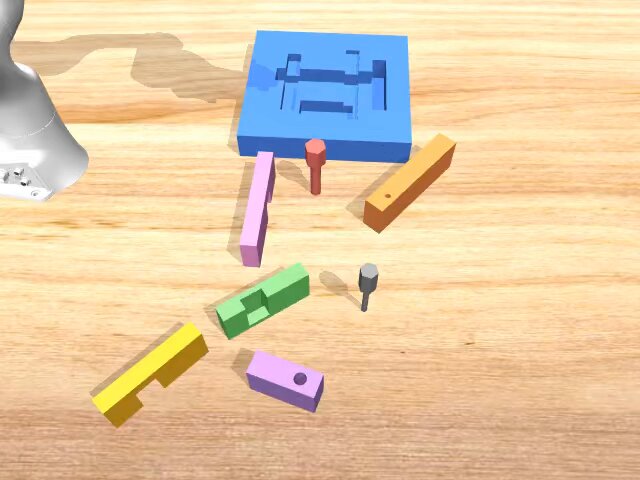

At inference time, it uses the trained "base" model together with the diffusion model to perform planning, the reflected outcome could revise the action proposed by the base model. In this regard, it could be seen as a lightweight yet effective inference-time compuation method!

1

0

3

ReflectVLM utilizes a pre-trained VLM such as LLaVa to generate initial action plan; however it also uses a diffusion model to imagine future outcomes if executing such plans and reflect on that outcome when fine-tuning such models.

1

1

8

Out of 100 test assembly sets, we found SoTA commercial VLMs such as GPT-01, Gemini 2.0-thinking struggle with these tasks requiring nuanced understanding of the intricate physics. Our method, ReflectVLM was able to achieve 6x more performance on these tasks.

1

0

2

We consider the problem of high-level robotic task planning, where a planner needs to reactively choose the right low-level skills. We procedurally generate many of these interlocking objects to be assembled so that it presents a true challenge for physical reasoning and planning

1

1

3

VLMs are great and could potentially be used to solve robotic planning problems. But can they really solve multi-stage long-horizon planning problems that require sophisticated reasoning about nuanced physics? In ReflectVLM, we tackle this problem🧵

2

23

132

Work from BAIR researchers at RAIL lab led by @svlevine.

UC Berkeley researchers devised a fast and precise way to teach robots tasks like assembling a motherboard or an IKEA drawer. 🤖 https://t.co/IxELGtkC1m

3

3

45

HIL-SERL/SERL is featured by Berkeley News! @svlevine @CharlesXu0124 @real_ZheyuanHu @JeffreyWu13579

UC Berkeley researchers devised a fast and precise way to teach robots tasks like assembling a motherboard or an IKEA drawer. 🤖 https://t.co/IxELGtkC1m

0

0

12

The end-to-end part back then was pixel to torque, which is actually different than what we are doing today. But this paper did inspire me to work on robot learning and changed my career, hard to imagine it has been 10 years!!

Disappointed with your ICLR paper being rejected? Ten years ago today, Sergey and I finished training some of the first end-to-end neutral nets for robot control 🤖 We submitted the paper to RSS on January 23, 2015. It was rejected for being "incremental" and "unlikely to have

0

0

9

I'm hiring exceptional researchers, engineers (both research and full-stack) at @physical_int. Please apply on our website or DM me with questions. Referrals very appreciated!

10

25

234

What she said is not at all acceptable. This is precisely nothing but racism. PC: Internet

NeurIPS acknowledges that the cultural generalization made by the keynote speaker today reinforces implicit biases by making generalisations about Chinese scholars. This is not what NeurIPS stands for. NeurIPS is dedicated to being a safe space for all of us. We want to address

1

0

4

NeurIPS acknowledges that the cultural generalization made by the keynote speaker today reinforces implicit biases by making generalisations about Chinese scholars. This is not what NeurIPS stands for. NeurIPS is dedicated to being a safe space for all of us. We want to address

533

384

3K