Rahul Venkatesh

@Rahul_Venkatesh

Followers

192

Following

73

Media

23

Statuses

67

CS Ph.D. student at Stanford @NeuroAILab @StanfordAILab

Joined November 2009

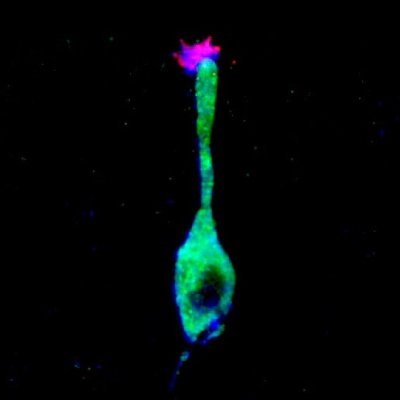

AI models segment scenes based on how things appear, but babies segment based on what moves together. We utilize a visual world model that our lab has been developing, to capture this concept — and what's cool is that it beats SOTA models on zero-shot segmentation and physical

6

15

55

A couple of months ago @KordingLab trolled (in the best possible sense of that term) my happy-go-lucky @StanfordBrain podcast on Brain simulations. Actually he had some interesting points.... We decided to have an in-depth "podcast" about it, for your listening pleasure:

3

15

94

Our part-aware 3D generation work, OmniPart, is accepted by Siggraph Asia 2025. Code and model released! Paper: https://t.co/vEAyV5kqD2 Project page: https://t.co/ovnAysSa7I Code: https://t.co/dToyRki7R8 Demo: https://t.co/9gcBmo2NdP

1

40

299

“Make it red.” “No! More red!” “Ughh… slightly less red.” “Perfect!” ♥️ 🎚️Kontinuous Kontext adds slider-based control over edit strength to instruction-based image editing, enabling smooth, continuous transformations!

16

36

158

Super excited about this line of work! 🚀 A simple, scalable recipe for training diffusion language models using autoregressive models. We're releasing our tech report, model weights, and inference code!

Introducing RND1, the most powerful base diffusion language model (DLM) to date. RND1 (Radical Numerics Diffusion) is an experimental DLM with 30B params (3B active) with a sparse MoE architecture. We are making it open source, releasing weights, training details, and code to

0

9

68

1/x Our new method, the Inter-Animal Transform Class (IATC), is a principled way to compare neural network models to the brain. It's the first to ensure both accurate brain activity predictions and specific identification of neural mechanisms. Preprint: https://t.co/hPqo5PrZoc

3

14

46

These are the most impressive examples of physical understanding I've seen from a computer vision model (let alone one that's not hooked up to an LLM.) And IMO the first good explanation of *how* physical understanding can arise without supervision.

PSI enables some cool zero-shot applications: visual Jenga, physical video editing, and motion estimation for robotics.

0

2

12

1/ A good world model should be promptable like an LLM, offering flexible control and zero-shot answers to many questions. Language models have benefited greatly from this fact, but it's been slow to come to vision. We introduce PSI: a path to truly interactive visual world

3

35

131

PSI enables some cool zero-shot applications: visual Jenga, physical video editing, and motion estimation for robotics.

1

2

13

(4/) If you'd like to explore this more, we provide code and models here with detailed documentation:

github.com

Contribute to neuroailab/SpelkeNet development by creating an account on GitHub.

0

0

1

(3/) It also turns out that our segments are really useful for complex physical object manipulation.

1

0

0

(2/) This capability helps discover more physically meaningful object segments, compared to those from state-of-the-art models like SegmentAnything (SAM).

1

0

1

(1/) Once optical flow is integrated, it allows us to interact with the scene through virtual pokes.

1

0

1

Excited to share PSI — our new framework for building pure vision foundation world models via Probabilistic Structure Integration! World models that rely on language conditioning often fall short in enabling physical interaction. Integrating structured signals like optical flow

1/ A good world model should be promptable like an LLM, offering flexible control and zero-shot answers to many questions. Language models have benefited greatly from this fact, but it's been slow to come to vision. We introduce PSI: a path to truly interactive visual world

1

3

4

Here is our best thinking about how to make world models. I would apologize for it being a massive 40-page behemoth, but it's worth reading.

1/ A good world model should be promptable like an LLM, offering flexible control and zero-shot answers to many questions. Language models have benefited greatly from this fact, but it's been slow to come to vision. We introduce PSI: a path to truly interactive visual world

5

41

220

Humans largely learn language through speech. In contrast, most LLMs learn from pre-tokenized text. In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech. Poster P6, Aug 19 at 13:30, Foyer 2.2!

8

32

194

Thrilled to welcome members of @cogsci_soc to the SF/Bay area for #CogSci2025 this week! Here's a preview of what the Cognitive Tools Lab 🧠🛠️ @Stanford @StanfordPsych will be presenting!

3

16

149

🚀 Just published in Nature Photonics: synthetic aperture waveguide holography—a new path toward ultra-thin, high-quality 3D mixed reality displays. 📄 https://t.co/sjX7HpqvrT

#Photonics #Holography #MR 1/5

10

65

362

I’m one of those people who still enjoys the archaic thrill of coding without AI tools—just me and the editor. But I recently tried Anycoder by @_akhaliq and was genuinely impressed. You describe it and it builds the app you want, and deploys on huggingface:

0

1

14