Jan Kulveit

@jankulveit

Followers: 9K · Following: 8K · Media: 78 · Statuses: 1K

Researching x-risks, AI alignment, complex systems, rational decision making at @acsresearchorg / @CTS_uk_av; prev @FHIoxford

Oxford, Prague

Joined September 2014

We already see some early variants: e/acc thermodynamic god, 'don't be a substrate chauvinist', opposing AI progress puts you on the wrong side of history. Mostly not spreading because they're true

1

0

9

The meme pool already contains the ingredients - from 'AIs are our children' to 'historical inevitability' to warmed-over Nietzschean power worship.

1

0

7

1. Working on AGI & the risks create a lot of cognitive dissonance. 2. Almost everyone wants to be the hero of their own story -> cultural evolution will find ideologies that resolve this tension

1

0

8

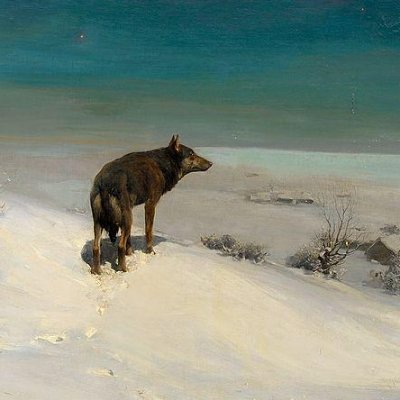

Memetics of AI successionism: Cultural evolution will predictably produce ideologies that make humanity's replacement feel virtuous or inevitable, and why these spread even if false.

2

0

13

Hot take, but I think the decision by the organizers of The Curve to invite, on the margin, fewer people who understand the topic and more people who have visible power or fame was bad.

2

0

46

How might the world look after the development of AGI, and what should we do about it now? Help us think about this at our workshop on Post-AGI Economics, Culture and Governance!

3

13

60

If you're interested in gradual disempowerment, consider applying to work with ACS (@jankulveit and @raymondadouglas):

0

5

28

AI Psychology & Agent Foundations ML Researcher: We need people who can bring technical and methodological rigour, taking high-level ideas about AI psychology and turning them into concrete ML experiments. This could include evaluations, mech interp, and post-training.

0

0

5

LLM Psychology & Sociology Researcher: We want people with a strong intuitive understanding of LLMs to help run empirical studies on topics like LLM introspection and self-conception, LLM social dynamics, and how ideologies spread between AIs.

1

0

7

Gradual Disempowerment Research Fellow: We're looking for polymaths who can reason about civilizational dynamics. This role comes with a lot of intellectual freedom. It could mean economic modelling, theoretical work on multi-agent dynamics, historical analysis, and more.

1

0

3

Deadline in a few weeks. These are 1-2 year appointments in Prague, London, or the San Francisco Bay Area. Hiring page with more details - https://t.co/33CnDhkB20 Form to apply -

1

0

4

ACS is hiring researchers to work on LM psychology, and understanding gradual disempowerment - full time roles with autonomy, flexibility, and competitive compensation. We're looking for a mix of polymaths, ML engineers, and people with great intuitions about how AIs behave.

1

6

29

A major hole in the "complete technological determinism" argument is that it completely denies agency, or even the possibility that how agency operates at larger scales could change. Sure, humanity is not currently a very coordinated agent. But the trendline also points toward

1

9

65

This is a fine example of the thinking you get when smart people do evil things and their minds come up with smart justifications for why they are the heroes. Upon closer examination, it ignores key inconvenient considerations; the normative part sounds like misleading PR.

Should we create agents that fully take over people's jobs, or create AIs that merely assist human workers? This is a false choice. Full automation is inevitable, whether we choose to participate or not. The only real choice is whether to hasten the inevitable, or to sit it out.

3

13

146

WSJ covering AI successionism, aka people advocating for omnicide because of a confused belief they have solved axiology. The general vibe is of Dunning-Kruger effect: most of the sensible ideas in the space were considered by smart transhumanists like Bostrom and

Governments and experts are worried that a superintelligent AI could destroy humanity. For some in Silicon Valley, that wouldn’t be a bad thing, writes David A. Price.

3

8

78

Me and @raymondadouglas on how AI job loss could hurt democracy. “No taxation without representation” summarizes that historically, democratic rights flow from economic power. But this might work in reverse once we’re all on UBI. Some highlights: 🧵 https://t.co/ge2ejwgMxv

“There is no physical reason why computers and robots can’t eventually become more efficient and capable than humans,” argue Raymond Douglas and @DavidDuvenaud. “The constant demand for progress in this direction makes such a development seem inevitable”

2

10

62

I'm interviewing @DavidDuvenaud, co-author of GRADUAL DISEMPOWERMENT, which argues that AGI could render humans irrelevant, even without any violent or hostile takeover. What should I ask him? Why are or aren't you worried about gradual disempowerment?

29

11

145

A market with a lot of agents biased against humans can make humans "uncompetitive" much faster. Also, something like a "20% difference" may not look large, but biases of the form "who to buy from" can easily get amplified via network effects.

0

0

4
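To make the amplification point in the post above concrete, here is a minimal toy sketch, not a model taken from the thread. It assumes two kinds of sellers (human and AI), buyers who weight human sellers by `1 - bias`, and a hypothetical "network effect" in which buyers also favour whichever side already has more market share. The function name, parameters, and update rule are all illustrative assumptions.

```python
def human_share_over_time(bias, rounds, network_effect=True, start=0.5):
    """Toy model (illustrative assumption): track the human sellers' market share.

    bias: how much buyers discount human sellers (0.2 means a "20% difference").
    network_effect: if True, buyers also weight each side by its current share,
    so the bias compounds round after round; if False, it applies only once.
    """
    weight = 1.0 - bias
    share = start
    history = [share]
    for _ in range(rounds):
        # With network effects, attractiveness scales with current share;
        # without them, it always scales with the starting share.
        base = share if network_effect else start
        human = weight * base
        ai = 1.0 - base
        share = human / (human + ai)
        history.append(share)
    return history


if __name__ == "__main__":
    with_network = human_share_over_time(bias=0.2, rounds=10)
    without_network = human_share_over_time(bias=0.2, rounds=10, network_effect=False)
    print(f"human share after 10 rounds, with network effects:    {with_network[-1]:.3f}")
    print(f"human share after 10 rounds, without network effects: {without_network[-1]:.3f}")
```

Under these assumptions the odds of buying from a human shrink by a factor of `1 - bias` every round when network effects are on, so a 20% bias pushes the human share from one half to under 10% within ten rounds. With network effects off, the share settles at about 44% and stays there.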