Mohit

@imohitmayank

Followers: 223
Following: 1K
Media: 833
Statuses: 3K

Lead AI Engineer | LLM, AI Agents, GenAI, Audio, RL | Helping Startups 10x AI Game 🤖 | Author “Lazy Data Science Guide” | Creator of Jaal

Pune, Maharashtra

Joined February 2012

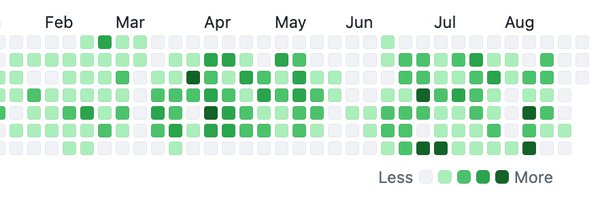

🚀 Cursor Tab just got smarter and more precise with online reinforcement learning! At Cursor, the Tab feature predicts your next coding action by analyzing your code context and cursor position, providing suggestions you can accept with a tap of the Tab key. Their latest

Cursor’s autocompletion was already the best on the market, and the new update seems to up the game!

We've trained a new Tab model that is now the default in Cursor. This model makes 21% fewer suggestions than the previous model while having a 28% higher accept rate for the suggestions it makes. Learn more about how we improved Tab with online RL.
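
A quick back-of-the-envelope check of what the two numbers imply together. It assumes both figures are relative changes (the announcement does not say whether "28% higher" is relative or in percentage points), with N the number of suggestions shown and a the accept rate:

```latex
\frac{N_{\text{new}}\, a_{\text{new}}}{N_{\text{old}}\, a_{\text{old}}}
  = (1 - 0.21)(1 + 0.28) = 0.79 \times 1.28 \approx 1.011
```

Under that assumption, the new model gets roughly the same number of accepted suggestions (about 1% more) while showing 21% fewer, meaning fewer interruptions for the same amount of useful completion.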

Tested EmbeddingGemma, and it seems 256 is the magic embedding size, perfectly balancing accuracy, storage, and speed.
What: I evaluated Google's EmbeddingGemma model, focusing on how Matryoshka Representation Learning (MRL) compromises accuracy when reducing embedding
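
A minimal sketch of the mechanics behind choosing a smaller size with a Matryoshka-trained model: keep the first `dim` values of the full embedding, re-normalize, and compare vectors with cosine similarity. The helper names (`truncate_embedding`, `float32_bytes`) are hypothetical, and the vectors are random stand-ins rather than real EmbeddingGemma outputs, so the printed similarities only illustrate the procedure, not the accuracy trade-off measured in the post. The storage figure assumes float32 storage (4 bytes per value).

```python
import math
import random


def l2_normalize(vec):
    """Scale a vector to unit length (returns an unchanged copy of a zero vector)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def truncate_embedding(vec, dim):
    """Matryoshka-style truncation: keep the first `dim` values, then re-normalize."""
    if not 0 < dim <= len(vec):
        raise ValueError(f"dim must be between 1 and {len(vec)}, got {dim}")
    return l2_normalize(vec[:dim])


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def float32_bytes(dim):
    """Bytes needed to store one embedding as float32 (4 bytes per value)."""
    return 4 * dim


# Hypothetical stand-ins for two 768-dim outputs: random vectors, NOT real EmbeddingGemma embeddings.
random.seed(0)
query = l2_normalize([random.gauss(0, 1) for _ in range(768)])
doc = l2_normalize([q + random.gauss(0, 0.02) for q in query])

for dim in (768, 512, 256, 128):
    q_t, d_t = truncate_embedding(query, dim), truncate_embedding(doc, dim)
    print(f"dim={dim:4d}  cosine={cosine(q_t, d_t):.3f}  storage={float32_bytes(dim)} bytes")
```

With a real model you would swap in its 768-dimensional outputs and compare retrieval metrics at each size; storage scales linearly, so 256 dimensions take one third of the space of 768.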

🔍 Exploring the diverse world of text similarity algorithms reveals there’s no one-size-fits-all solution; it all depends on your specific use case. Understanding text similarity involves several algorithm types:
- Edit distance methods: Hamming and Levenshtein distances
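
The two edit-distance methods named above can be sketched in plain Python: Hamming distance counts mismatched positions between equal-length strings, while Levenshtein distance is the minimum number of single-character insertions, deletions, and substitutions, computed here with the standard two-row dynamic-programming table. The `levenshtein_similarity` normalization (dividing by the longer string's length) is one common convention chosen for illustration, not necessarily the one used in the post.

```python
def hamming_distance(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance requires equal-length strings")
    return sum(ca != cb for ca, cb in zip(a, b))


def levenshtein_distance(a: str, b: str) -> int:
    """Minimum insertions, deletions, and substitutions to turn `a` into `b`."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def levenshtein_similarity(a: str, b: str) -> float:
    """Illustrative 0..1 score: 1 minus distance divided by the longer length."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - levenshtein_distance(a, b) / longest


print(hamming_distance("karolin", "kathrin"))                   # 3
print(levenshtein_distance("kitten", "sitting"))                # 3
print(round(levenshtein_similarity("kitten", "sitting"), 3))    # 0.571
```

Hamming is cheaper but only defined for equal lengths; Levenshtein handles insertions and deletions at O(len(a) × len(b)) cost.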

Cleaning Vibe Code using Vibe Coding - hottest job in the AI market rn 🥵

Working on LLMs for code comprehension or RAG functionality for Text<>Code? Check out Jina AI's jina-code-embeddings! Jina AI has announced the release of jina-code-embeddings, a powerful duo of code embedding models at just 0.5B and 1.5B parameters that set a new

Two new stealth LLMs with a massive 2M-token context window, free on OpenRouter!

🚀 The world's first LLM running locally on a business card is here, for fun and innovation! Binh Pham has just created a business-card-sized device that runs a 260K-parameter Llama 2 language model locally at 24 tokens per second, using a custom-designed card inspired by Ouija

These Chinese AI models are undervalued. I switched from Claude to https://t.co/tkkgRVX1Pr (using the Claude Code CLI), and guess what, I noticed no difference in daily use. Except it's $3 instead of $200. There is a world outside OpenAI and Anthropic, and it is better than you may think.

Jina AI’s Code Embeddings: https://t.co/p0aeHFnKt4
Google's EmbeddingGemma: developers.googleblog.com

🔥 Fresh drop: two new embedding models hit the scene, Google's EmbeddingGemma and Jina AI’s Code Embeddings!
What: Google’s EmbeddingGemma and Jina AI’s Code Embeddings are advanced embedding models targeting different domains: text/document retrieval vs. code retrieval. EmbeddingGemma is a

Who needs sleep? Kimi-K2-Instruct-0905 just landed. 200+ T/s, $1.50/M tokens. 256k context window. Built for coding. Rivals Sonnet 4. Available now. 👇

Today we're releasing jina-code-embeddings, a new suite of code embedding models in two sizes—0.5B and 1.5B parameters—along with 1~4bit GGUF quantizations for both. Built on latest code generation LLMs, these models achieve SOTA retrieval performance despite their compact size.

Fun + informative article on using RL to train Gemma-3 to play Wordle. All done locally with MLX:

Introducing EmbeddingGemma, our new open embedding model for on-device AI applications.
- Highest ranking open model under 500M on the MTEB benchmark.
- Runs on less than 200MB of RAM with quantization.
- Dynamic output dimensions from 768 down to 128.
- Input context length of

Kimi K2 just got better!

Kimi K2-0905 update 🚀
- Enhanced coding capabilities, esp. front-end & tool-calling
- Context length extended to 256k tokens
- Improved integration with various agent scaffolds (e.g., Claude Code, Roo Code, etc.)
🔗 Weights & code: https://t.co/83sQekosr9
💬 Chat with new Kimi
