Explore tweets tagged as #TextGrad

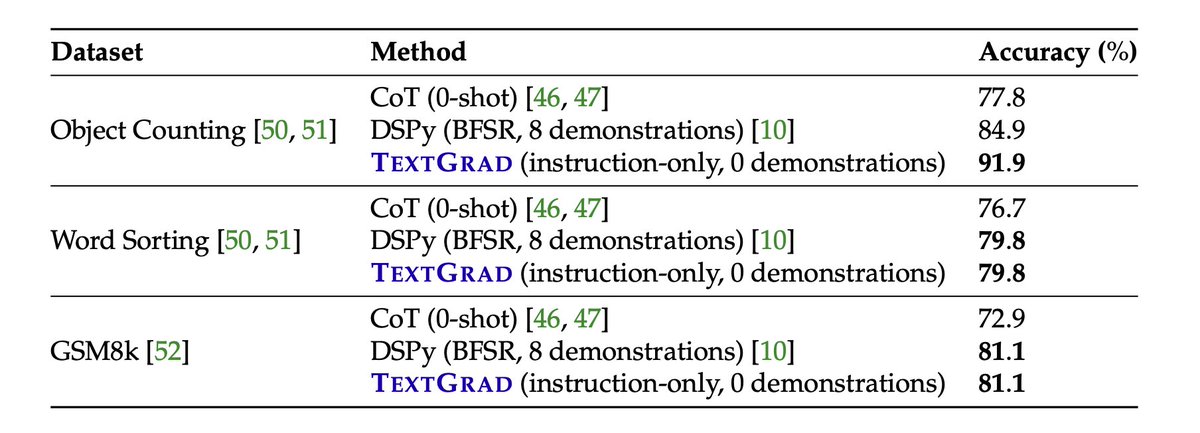

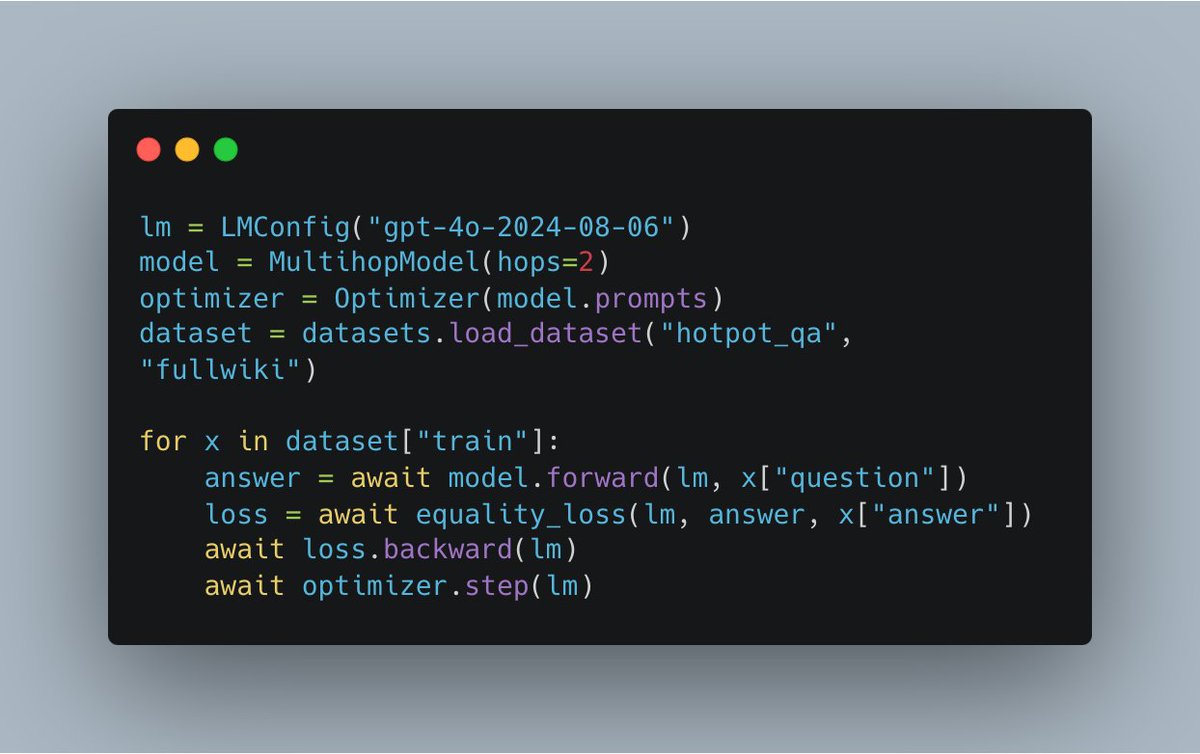

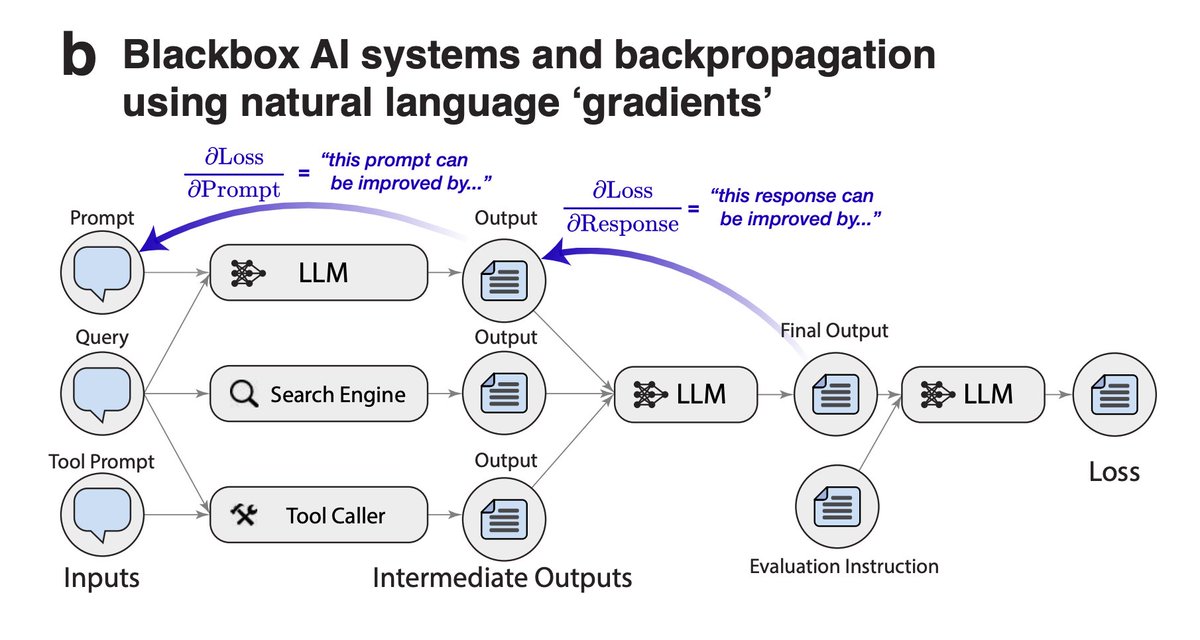

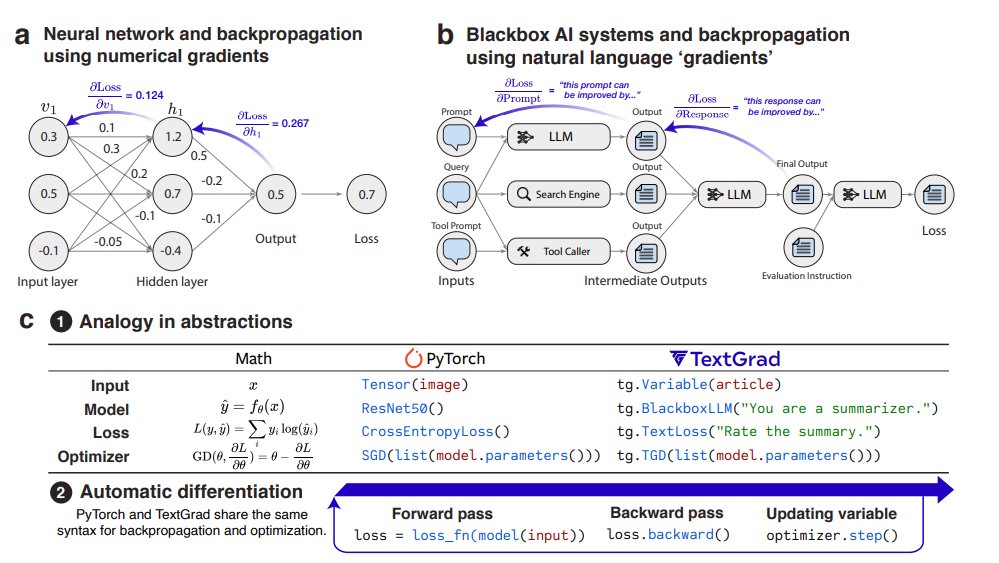

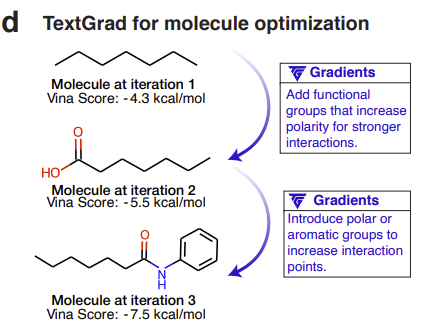

#TextGrad #LLM framework shows that optimizing the answer AND the #PROMPT using multistep #reflexion greatly improves accuracy. They created a Python package with syntax similar to PyTorch's. The framework has nice logging, but I don't find it intuitive or extensible.
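The loop the tweet describes can be sketched in the same PyTorch-like style. A natural-language critique of the answer plays the role of the loss. `backward` turns that critique into textual feedback for each trainable variable, and an optimizer `step` rewrites both the prompt and the answer, repeated over several iterations. This is a minimal sketch under stated assumptions, not the TextGrad package's API: `TextVariable`, `backward`, `TextualGradientDescent`, `optimize`, and the `llm` callable are hypothetical names, and `fake_llm` exists only so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical LLM interface: any function mapping a request string to a reply.
LLM = Callable[[str], str]


@dataclass
class TextVariable:
    """Optimizable text, loosely analogous to a tensor with requires_grad=True."""
    value: str
    role: str  # e.g. "system prompt" or "answer"; tells the LLM what it is editing
    requires_grad: bool = True
    grad: List[str] = field(default_factory=list)  # accumulated textual feedback

    def zero_grad(self) -> None:
        self.grad.clear()


def backward(critique: str, params: List[TextVariable], llm: LLM) -> None:
    """'Backpropagate' a natural-language critique into per-variable feedback."""
    for p in params:
        if p.requires_grad:
            p.grad.append(llm(
                f"Critique: {critique}\n"
                f"How should this {p.role} change to address it?\n"
                f"{p.value}"
            ))


class TextualGradientDescent:
    """Hypothetical optimizer that rewrites each variable from its feedback."""

    def __init__(self, params: List[TextVariable], llm: LLM) -> None:
        self.params = params
        self.llm = llm

    def zero_grad(self) -> None:
        for p in self.params:
            p.zero_grad()

    def step(self) -> None:
        for p in self.params:
            if p.requires_grad and p.grad:
                # The current value is placed on the last line of the request.
                p.value = self.llm(
                    f"Rewrite this {p.role} using the feedback.\n"
                    f"Feedback: {' | '.join(p.grad)}\n"
                    f"{p.value}"
                )


def optimize(prompt: TextVariable, answer: TextVariable,
             evaluate: Callable[[str], str], llm: LLM,
             steps: int = 3) -> None:
    """Multistep loop: critique the answer, then update prompt AND answer."""
    opt = TextualGradientDescent([prompt, answer], llm)
    for _ in range(steps):
        opt.zero_grad()
        critique = evaluate(answer.value)  # acts as the "loss"
        backward(critique, opt.params, llm)
        opt.step()


if __name__ == "__main__":
    # Deterministic fake LLM: echoes the text being rewritten with a marker.
    def fake_llm(request: str) -> str:
        if request.startswith("Rewrite"):
            return request.splitlines()[-1] + " (revised)"
        return "be more specific"

    prompt = TextVariable("Solve the problem.", role="system prompt")
    answer = TextVariable("x = 4", role="answer")
    optimize(prompt, answer, evaluate=lambda a: "missing justification",
             llm=fake_llm)
    print(prompt.value)
    print(answer.value)
```

In this sketch, passing a function that calls a real model in place of `fake_llm` is what would turn the loop into actual reflection. Feedback is kept as a list of strings so that, like gradients, it accumulates until `zero_grad` clears it, and setting `requires_grad=False` freezes a variable.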

Super excited to share our work on benchmarking LLM routers at the Compound AI Systems workshop at @Data_AI_Summit! Had the pleasure of chatting with other masterminds in the space like @matei_zaharia and @ChenLingjiao. Found @ShengLiu_'s TextGrad presentation to be particularly