Explore tweets tagged as #ModelCompression

Introducing Nota 🇰🇷: AI model compression technology that makes deep learning models run faster with less computing power. They help companies deploy AI on mobile devices without sacrificing performance. Efficiency-focused AI innovation. #AI #ModelCompression #MobileAI

Optimizing AI Models for Local Devices: Balancing Privacy and Performance. #AIModelOptimization #LocalDevices #PrivacyVsPerformance #ModelCompression #AIChallenges #DeviceProcessingPower #DataPrivacy #ModelQuality #AIOnDevices #AIInnovation

CommVQ (ICML ’25) shows an 8× KV-cache reduction with 2-bit quantization (and even 1-bit with only minor quality loss), letting an 8B LLaMA-3.1 handle 128K tokens on a single RTX 4090 (24 GB). Paper ▶︎ #ICML2025 #LLM #KVcache #ModelCompression
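
A rough back-of-the-envelope check of the 8× figure, not the paper's method. The model shape used here (32 layers, 8 key/value heads, head dimension 128) is an assumption taken from the publicly released LLaMA-3.1 8B configuration, not from the post, and the hypothetical helper `kv_cache_bytes` ignores codebook and metadata overhead.

```python
def kv_cache_bytes(tokens, layers=32, kv_heads=8, head_dim=128, bits=16):
    """Approximate KV-cache size in bytes (assumed model shape, no overhead)."""
    # Factor 2 accounts for storing both keys and values.
    values = 2 * layers * kv_heads * head_dim * tokens
    return values * bits // 8

GIB = 1024 ** 3
tokens = 128 * 1024  # "128K" context, read here as 131,072 tokens

fp16 = kv_cache_bytes(tokens, bits=16)
two_bit = kv_cache_bytes(tokens, bits=2)
one_bit = kv_cache_bytes(tokens, bits=1)

print(f"FP16 : {fp16 / GIB:.0f} GiB")
print(f"2-bit: {two_bit / GIB:.0f} GiB ({fp16 // two_bit}x smaller)")
print(f"1-bit: {one_bit / GIB:.0f} GiB ({fp16 // one_bit}x smaller)")
```

Under these assumptions the 16-bit cache alone would take most of a 24 GB card before any weights are loaded, which is why shrinking it matters at this context length.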

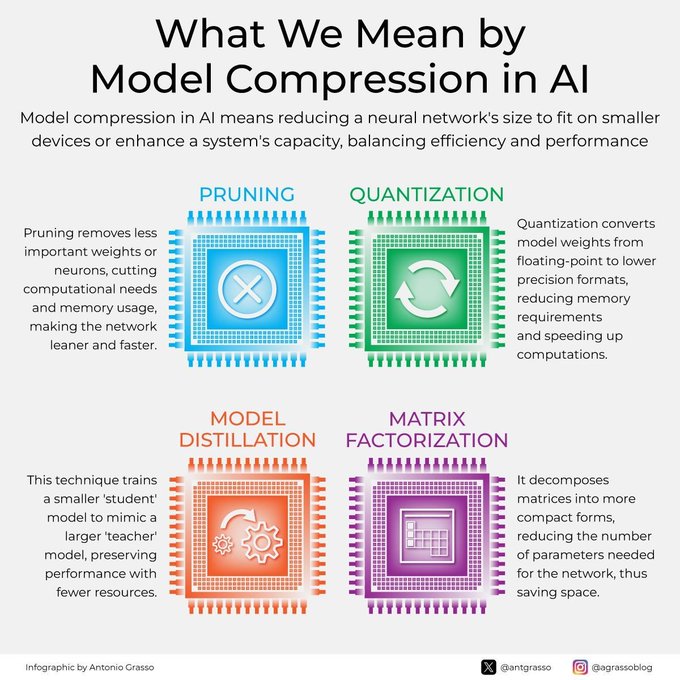

Model compression in ICCLOUD! 8-bit quantization cuts memory by 75%, and weight pruning reduces compute by 50%. Save resources! #ModelCompression #Resources
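
The 75% figure matches what you get when 32-bit float weights are stored as 8-bit integers; the post does not state its baseline, so that reading is an assumption. A minimal sketch of symmetric per-tensor int8 quantization, with made-up weights and hypothetical helper names:

```python
from array import array

def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]

# Illustrative weights stored as 32-bit floats (4 bytes each).
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.07, 0.0, 0.25, -0.81])
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = weights.itemsize * len(weights)
int8_bytes = q.itemsize * len(q)
saving = 1 - int8_bytes / fp32_bytes
print(f"{fp32_bytes} bytes -> {int8_bytes} bytes ({saving:.0%} saved)")
```

The rounding error per weight is bounded by half the scale, which is the accuracy cost traded for the smaller footprint.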

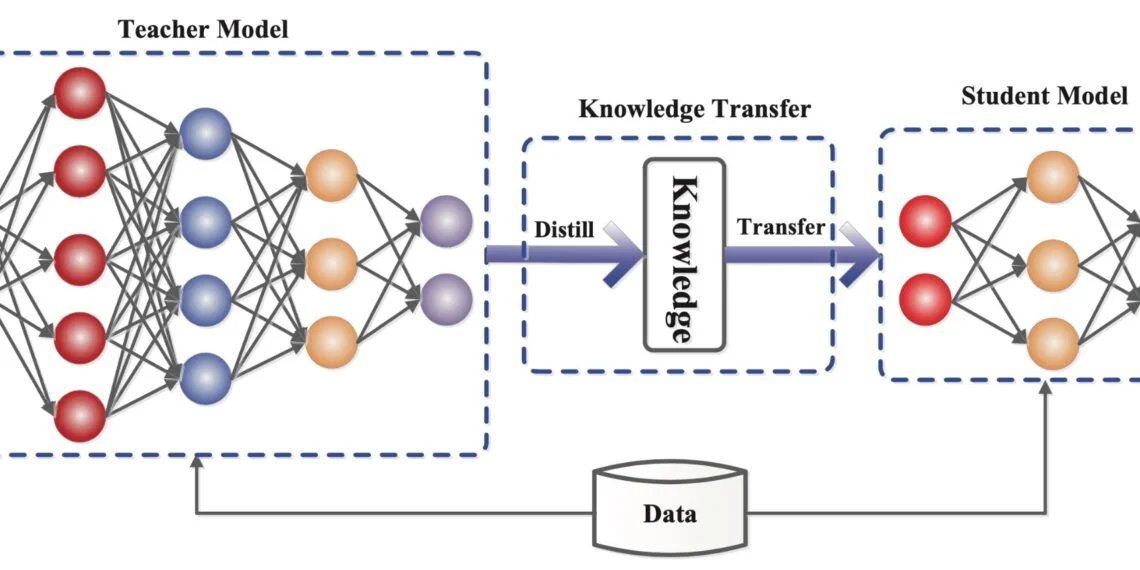

📚🤖 Knowledge Distillation = a small AI model learning from a big one! Smarter, faster, more efficient. Perfect for NLP & vision! 🚀📱 See here: #KnowledgeDistillation #AI2025 #DeepLearning #TechChilli #ModelCompression
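
One common way to make "learning from a big one" concrete is the temperature-softened loss from the original distillation formulation: the student is penalised for diverging from the teacher's softened output distribution. This is a sketch with illustrative logits and a hypothetical function name, not the approach of any specific article linked from the post.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

teacher = [4.0, 1.0, 0.2]   # illustrative logits from the large model
student = [2.5, 1.5, 0.3]   # illustrative logits from the small model
print(distillation_loss(student, teacher))
```

In practice this term is usually combined with the ordinary cross-entropy loss on the true labels.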

Today's #PerfiosAITechTalk covers how #ModelCompression can be used for efficient on-device runtimes. #NeuralNetworks #Datascience #ML #AI

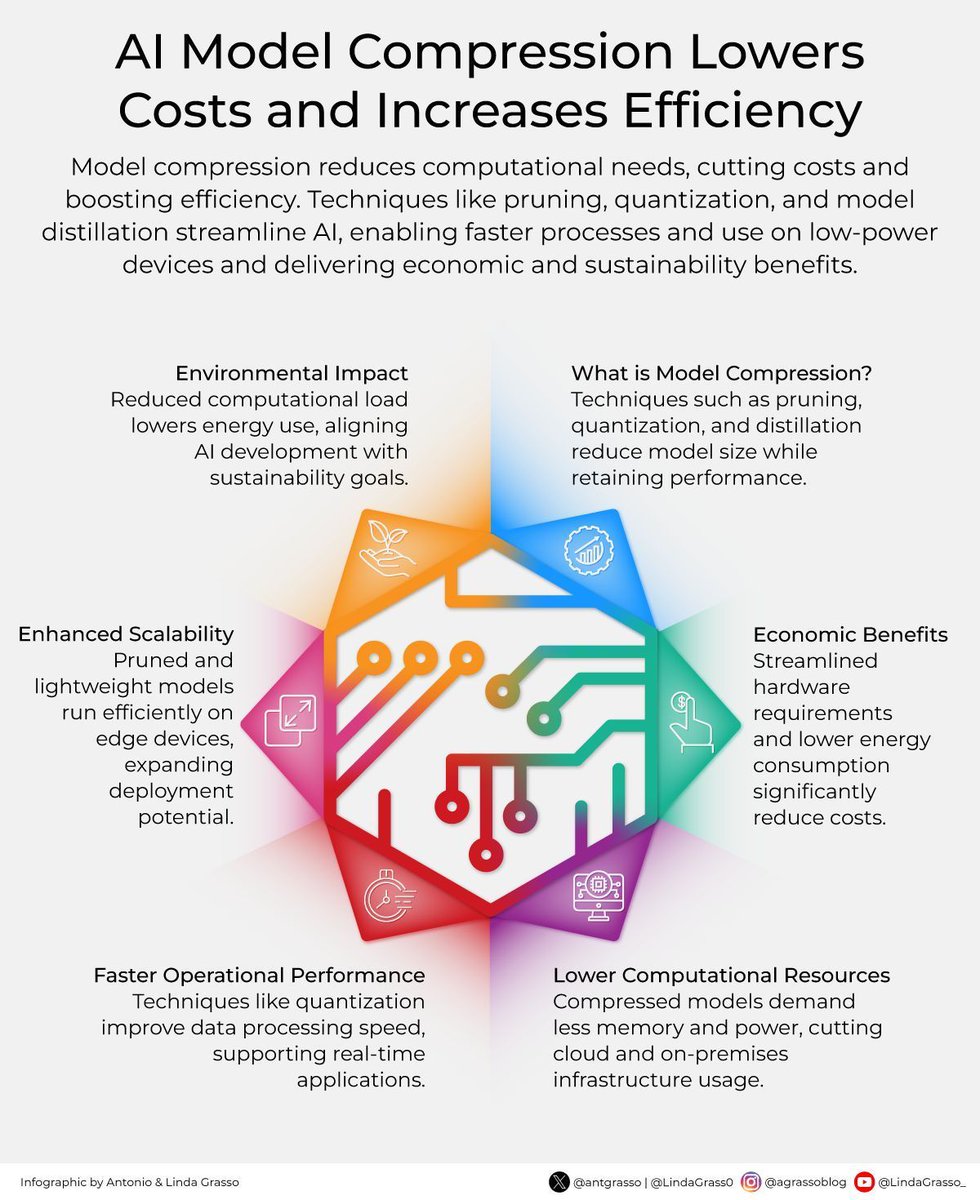

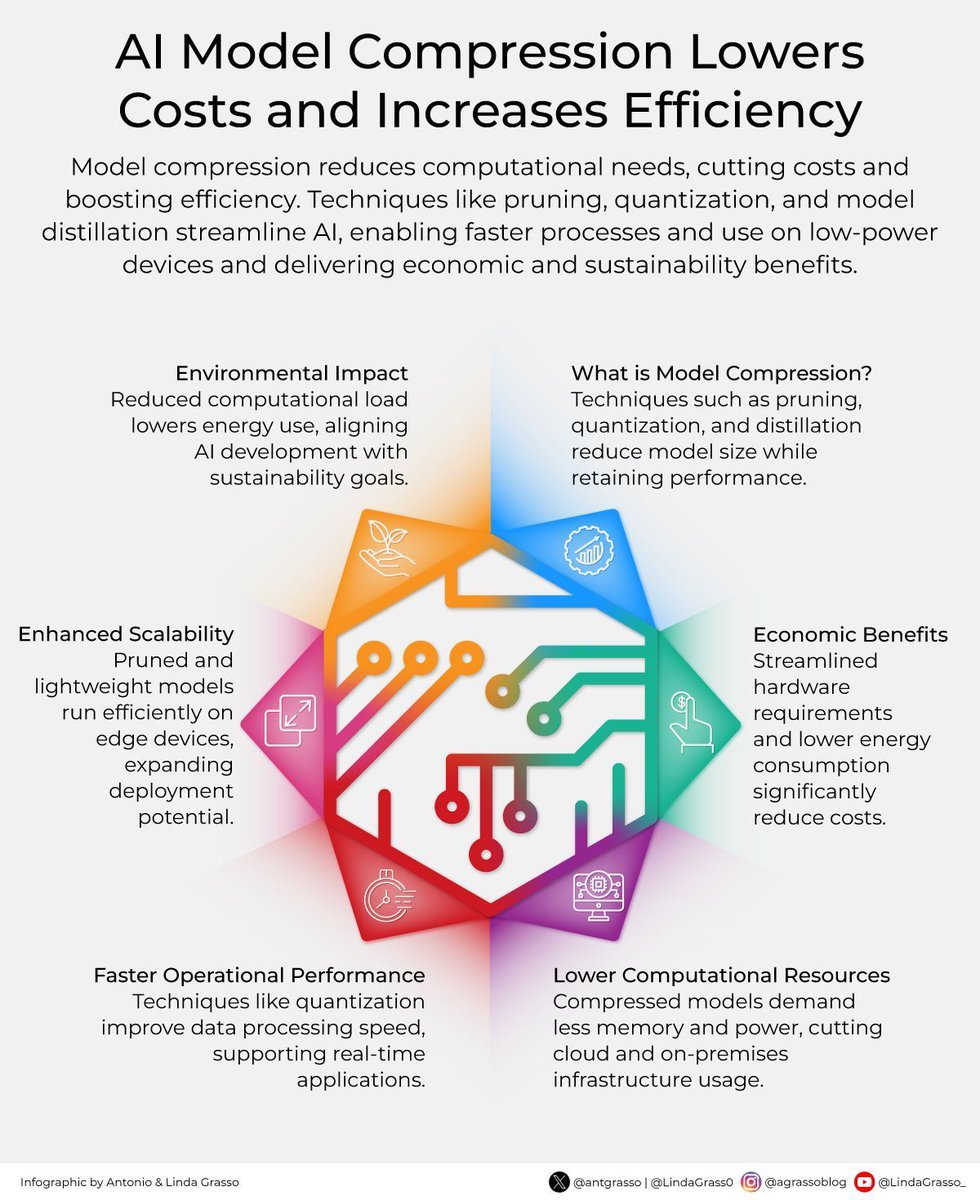

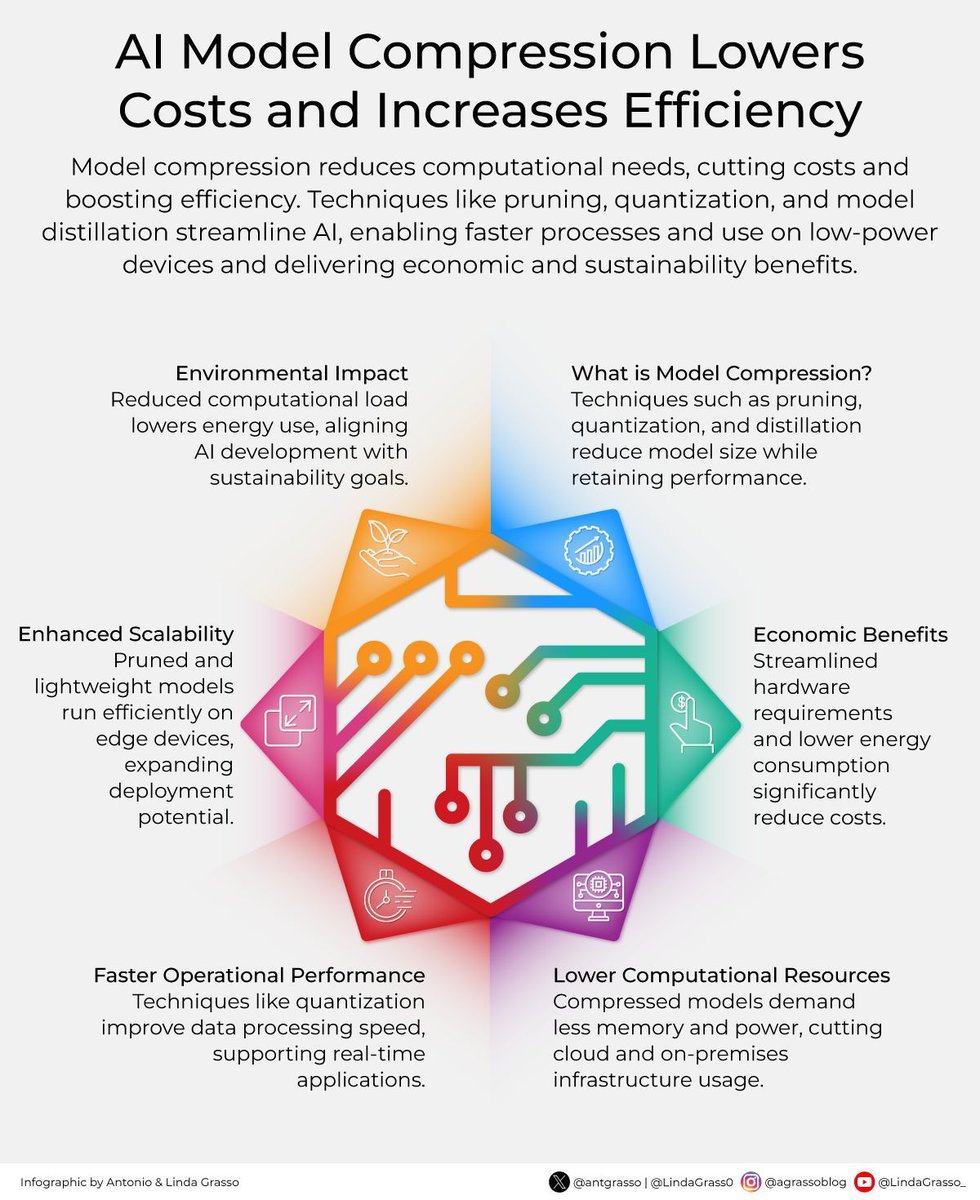

AI model compression isn't just a technical refinement but a strategic choice that aligns cost reduction, sustainability, and operational agility with the pressing demands of today's rapidly evolving digital landscape. By @antgrasso #AI #ModelCompression #Efficiency

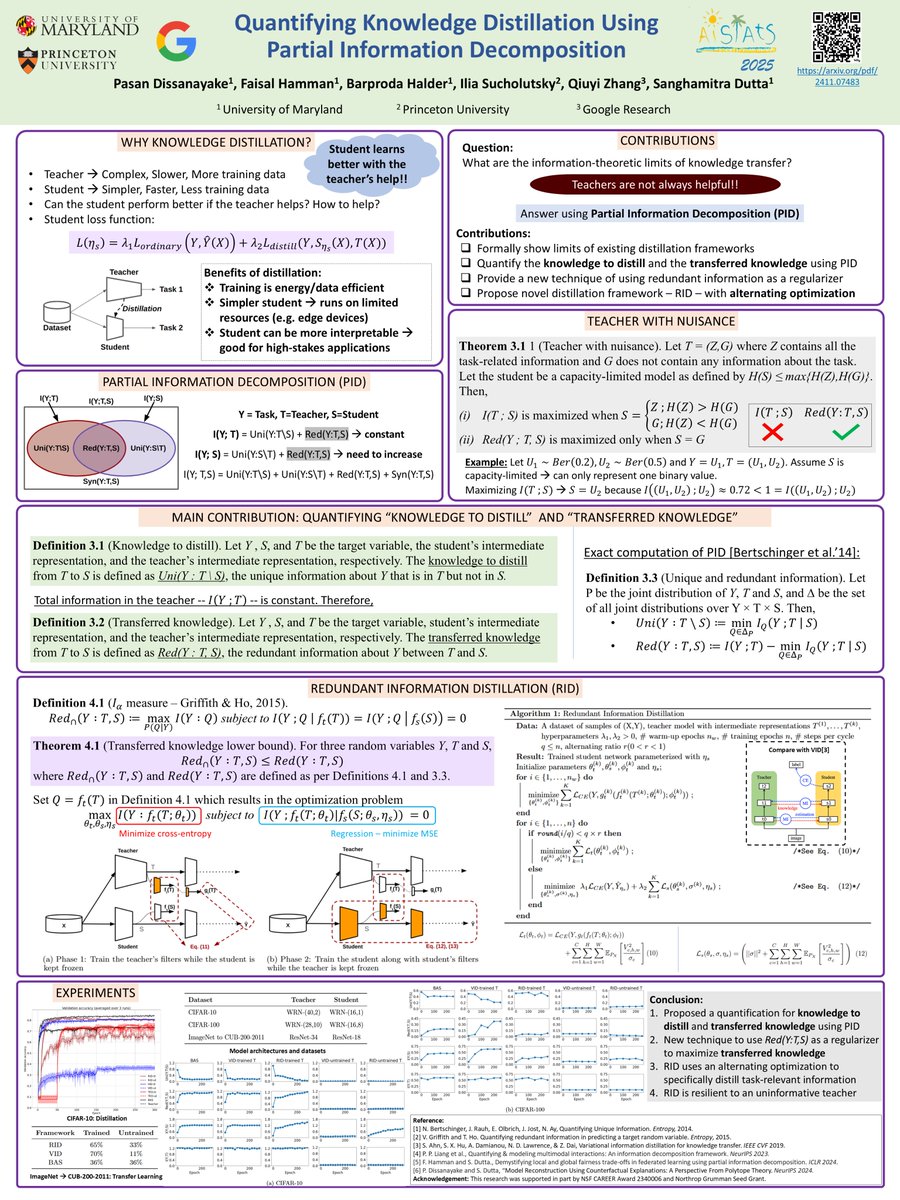

📢 Our paper "Quantifying Knowledge Distillation Using Partial Information Decomposition" will be presented at #AISTATS2025 on May 5th, Poster Session 3! Our work brings together #modelcompression and #explainability through the lens of #informationtheory. Link:

🔍 New Blog Alert! Dive deep into the fascinating journey of AI model compression in Part VII: From Vision to Understanding - The Birth of Attention, written by Ateeb Taseer. ➡️ Read the full blog now 👉 #AI #ModelCompression #AttentionMechanisms

RT Model Compression: A Look into Reducing Model Size #machinelearning #modelcompression #tinyml #deeplearning

The four common #ModelCompression techniques:
1) Quantization
2) Pruning
3) Knowledge distillation
4) Low-rank matrix factorization
What does your experience point you toward? #NeuralNetworks #DataScience #PerfiosAITechTalk
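
Two of the listed techniques can be illustrated with a few lines each. This is a minimal sketch with hypothetical helper names and made-up numbers: magnitude pruning zeroes the smallest weights, and low-rank factorization replaces an m×n matrix with an m×r and an r×n factor.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def low_rank_params(m, n, rank):
    """Parameter count after factoring an m x n matrix into (m x r)(r x n)."""
    return rank * (m + n)

pruned = magnitude_prune([0.9, -0.05, 0.4, 0.01], sparsity=0.5)
print(pruned)

dense = 4096 * 4096
factored = low_rank_params(4096, 4096, rank=64)
print(f"{dense} -> {factored} parameters ({dense // factored}x fewer)")
```

Whether the pruned or factored model keeps its accuracy depends on the layer and the task, so both are normally followed by fine-tuning.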

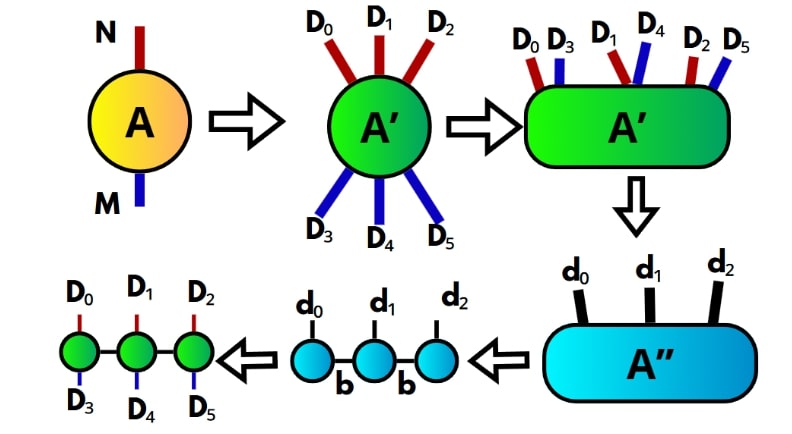

🧠💡 How to make #ChatGPT fit in your pocket with quantum inspiration. On the #BlogdeExpertosITCL, our quantum computing expert (#computacióncuántica) gives us the keys to doing it. 📉📈 #IA #LLM #ModelCompression #TensorNetworks #ChatGPT #EdgeAI

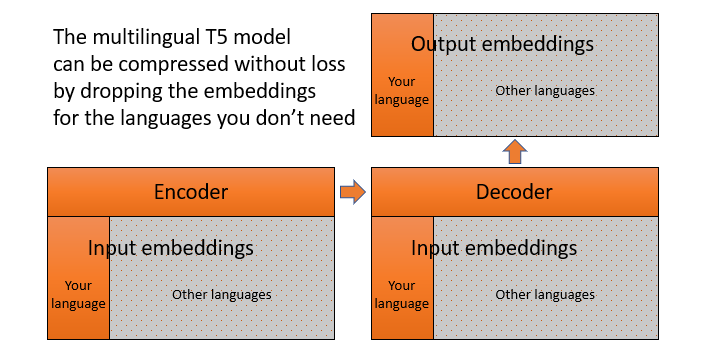

RT How to adapt a multilingual T5 model for a single language #nlp #transformers #modelcompression #machinelearning #t5

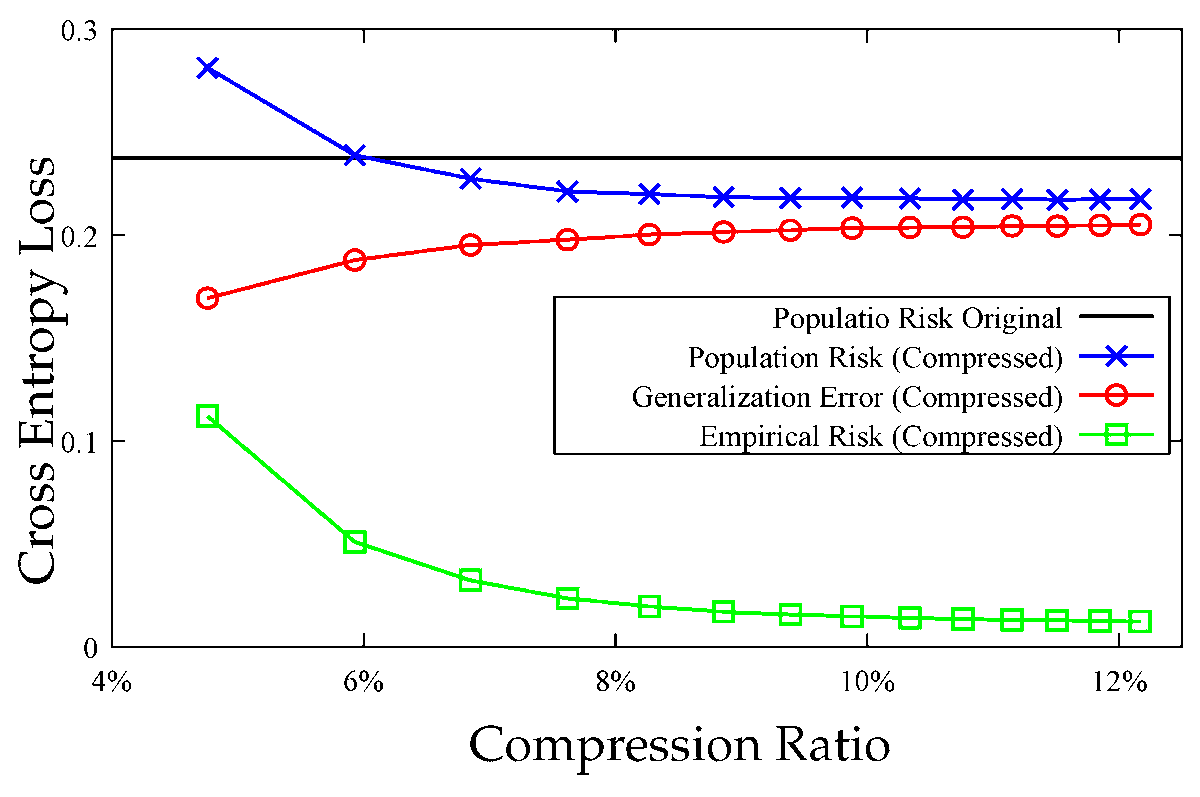

#mdpientropy "Population Risk Improvement with Model Compression: An Information-Theoretic Approach" #empiricalrisk #generalizationerror #modelcompression #populationrisk

AI model compression isn't just a technical refinement but a strategic choice that aligns cost reduction, sustainability, and operational agility with the pressing demands of today's rapidly evolving digital landscape. Microblog by @antgrasso #AI #ModelCompression #Efficiency

AI model compression isn't just a technical refinement but a strategic choice that aligns cost reduction, sustainability, and operational agility with the pressing demands of today's rapidly evolving digital landscape. rt @antgrasso #AI #ModelCompression #Efficiency

Georgia Tech & #Microsoft Reveal ‘Super Tickets’ in Pretrained Language Models: Improving Model Compression and Generalization | #AI #ML #ArtificialIntelligence #MachineLearning #DeepNeuralNetworks #LanguageModel #ModelCompression

🚀 New shared task at #WMT2025 (co-located with @emnlpmeeting): Model Compression for Machine Translation! Can you shrink an LLM and keep translation quality high? 🔧 Submit by July 3 and push the limits of efficient NLP! 👉 #NLP #ML #LLM #ModelCompression
