Explore tweets tagged with #FrontierMath

Introducing FrontierMath Tier 4: a benchmark of extremely challenging research-level math problems, designed to test the limits of AI’s reasoning capabilities.

19

64

558

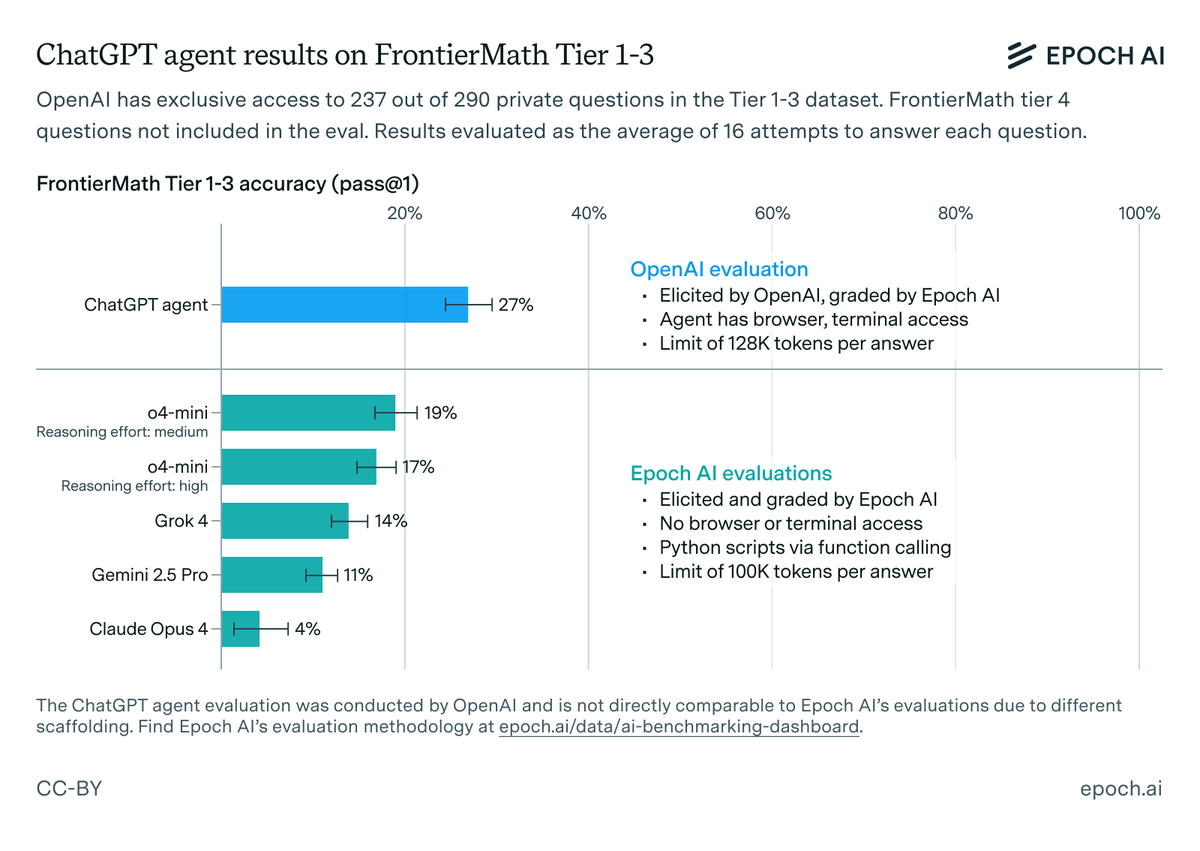

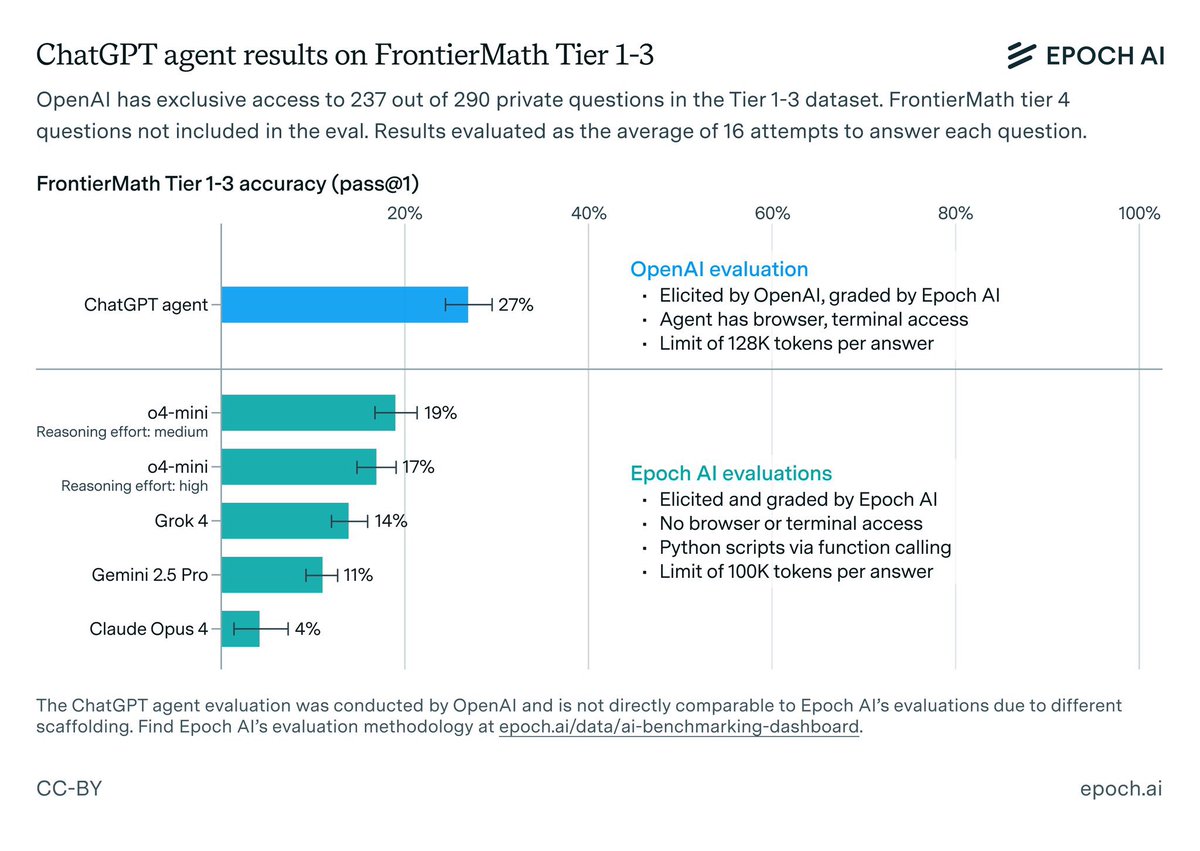

We have graded the results of @OpenAI's evaluation on FrontierMath Tier 1–3 questions and found a score of 27% (± 3%). ChatGPT agent is a new model fine-tuned for agentic tasks, equipped with text and GUI browser tools and native terminal access. 🧵

Re: “At Secret Math Meeting, Researchers Struggle to Outsmart AI” — What Actually Happened. Just saw a news report about the FrontierMath Symposium (hosted by @epochai). While AI is advancing at an incredible pace, I think some parts of the report were a bit exaggerated and could

Reasoning LLMs like o3 and R1 have reportedly solved some advanced math problems, including those from the FrontierMath benchmark. However, we find that they still struggle with pre-college theorem proving. When tasked with proving inequalities from math competitions, both o3 and

🚀 Excited to share our #ICLR2025 paper: "Proving Olympiad Inequalities by Synergizing LLMs and Symbolic Reasoning"!

📄 Paper:

LLMs like R1 & o3 excel at solving complex, calculation-based math problems. However, when it comes to proving inequalities in

5

22

165

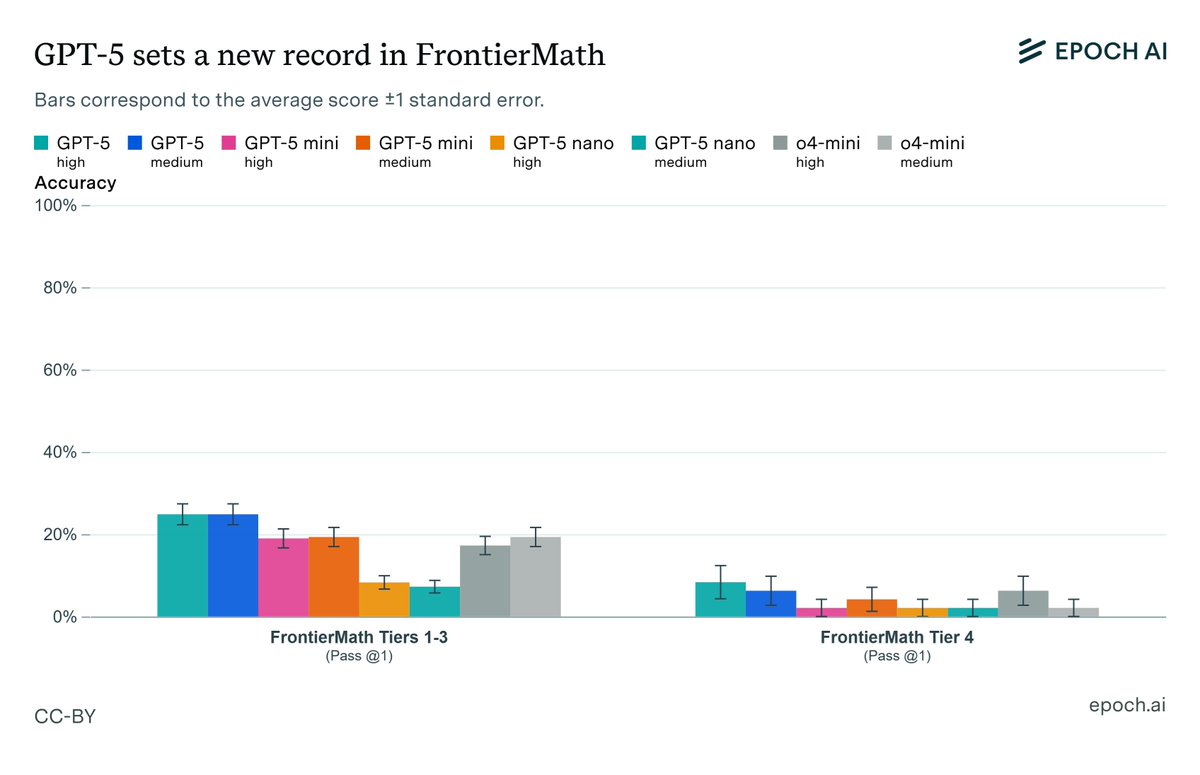

GPT-5 sets a new SOTA on FrontierMath (22–28% on Tiers 1–3, 4–12% on Tier 4) and OTIS Mock AIME (83–91%). It improves over o4-mini on SWE-bench Verified (57–61%) but trails Claude 4.1, and it matches top models on GPQA (83–87%). Cursor users also report positive experiences.

We’ve independently evaluated the GPT-5 model family on our benchmarking suite. Here is what we’ve learned 🧵

🚨 Truth: 2.5 Pro is better than full o3 on AIME 2024 and GPQA Diamond (pass@1).

| Model | AIME 2024 | GPQA Diamond |
|---|---|---|
| 2.5 Pro | 92% | 84% |
| o3 | 90–91% | 82–83% |

(I tried to measure these as accurately as possible using software.) I want to see results on ARC-AGI-2 and FrontierMath.