Explore tweets tagged as #DataLoader

New NanoGPT training speed record: 3.28 FineWeb val loss in 3.95 minutes. Previous record: 4.41 minutes. Changelog:

- @leloykun arch optimization: ~17s
- remove "dead" code: ~1.5s
- re-implement dataloader: ~2.5s
- re-implement Muon: ~1s
- manual block_mask creation: ~5s

This is some seriously high-quality analysis on which open dataloader to use for your multimodal workflows. It's definitely going to save you months of time!

Ever wondered how large-scale multimodal training works? How ~petabytes of data are loaded from the cloud to accelerators? Here I benchmark 4 frameworks (WebDataset, Energon, MDS, LitData) on data prep efficiency, cloud streaming perf, & fault tolerance. TL;DR: Try out LitData.

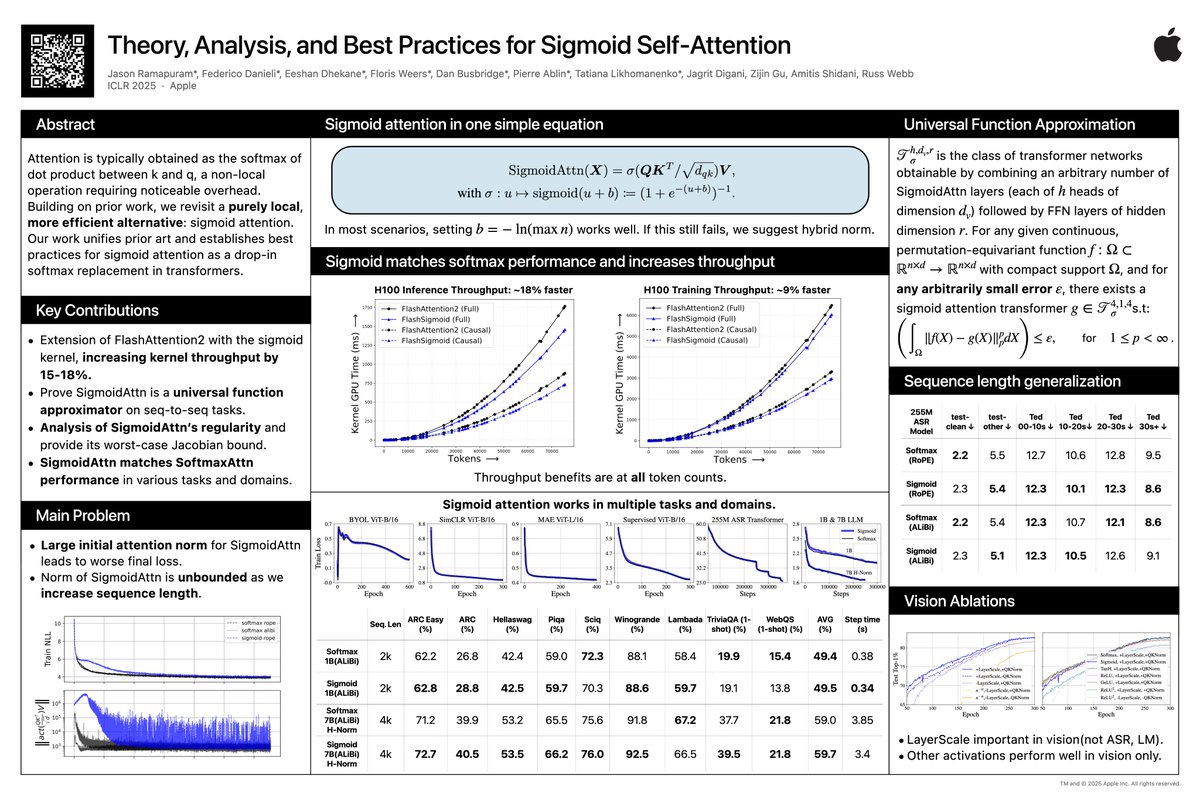

Stop by poster #596 at 10A-1230P tomorrow (Fri 25 April) at #ICLR2025 to hear more about Sigmoid Attention! We just pushed 8 trajectory checkpoints each for two 7B LLMs, one for Sigmoid Attention and a 1:1 one for Softmax Attention (trained with a deterministic dataloader for 1T tokens):

Small update on SigmoidAttn (arXiv incoming):

- 1B and 7B LLM results added and stabilized.
- Hybrid Norm [on embed dim, not seq dim], `x + norm(sigmoid(QK^T / sqrt(d_{qk}))V)`, stabilizes longer sequences (n=4096) and larger models (7B). H-norm is used with Grok-1, for example.
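
The hybrid-norm expression quoted above, `x + norm(sigmoid(QK^T / sqrt(d_{qk}))V)`, can be read as the rough NumPy sketch below. This is an illustration under stated assumptions, not the authors' implementation: it is single-head, it has no causal mask or sigmoid bias term, `norm` is taken to be a plain LayerNorm without learnable parameters (the post does not say which norm is used), and the function names are made up for this example.

```python
import numpy as np


def sigmoid(z):
    # Numerically stable form of 1 / (1 + exp(-z)).
    return 0.5 * (1.0 + np.tanh(0.5 * z))


def layer_norm(h, eps=1e-5):
    # ASSUMPTION: "norm" is a parameter-free LayerNorm.
    # It normalizes over the last (embedding) axis, not the sequence axis.
    mean = h.mean(axis=-1, keepdims=True)
    var = h.var(axis=-1, keepdims=True)
    return (h - mean) / np.sqrt(var + eps)


def sigmoid_attention_hybrid_norm(x, q, k, v):
    """x, v: (n, d); q, k: (n, d_qk). Single head, no mask (assumptions)."""
    d_qk = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_qk)   # (n, n) scaled dot products
    weights = sigmoid(scores)          # elementwise; rows need not sum to 1
    attn_out = weights @ v             # (n, d)
    return x + layer_norm(attn_out)    # residual + norm on the embed dim


rng = np.random.default_rng(0)
n, d = 8, 16
x, q, k, v = (rng.normal(size=(n, d)) for _ in range(4))
out = sigmoid_attention_hybrid_norm(x, q, k, v)
print(out.shape)
```

Because sigmoid weights are not normalized across the sequence, the magnitude of `weights @ v` can grow with sequence length; normalizing that branch over the embedding dimension before the residual add is one plausible reading of why the post reports better stability at n=4096.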
