Goku Mohandas

@GokuMohandas

Followers: 14K · Following: 2K · Media: 125 · Statuses: 953

RT @PyTorch: An #OpenSource Stack for #AI Compute: @kubernetesio + @raydistributed + @pytorch + @vllm_project ➡️ This Anyscale blog post by…


Key @anyscalecompute infra capabilities that keep these workloads efficient and cost-effective: ✨ Automatically provision worker nodes (ex. GPUs) based on our workload's needs. They'll spin up, run the workload and then scale back to zero (only pay for compute when needed).


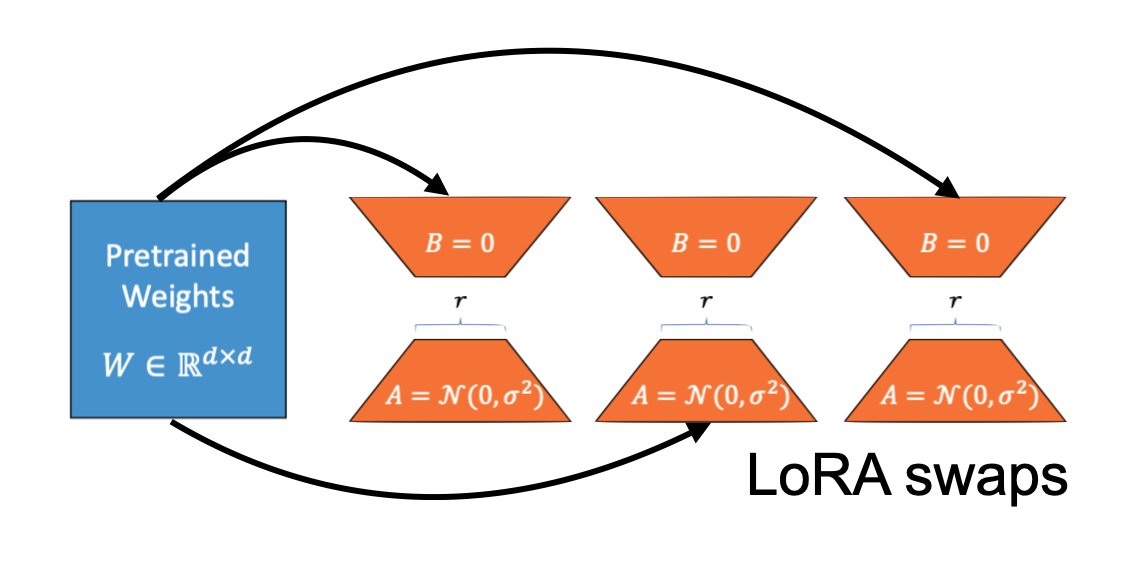

⚖️ Evaluate our fine-tuned LLMs with batch inference using Ray + @vllm_project. Here we apply the LLM (a callable class) across batches of our data and vLLM ensures that our LoRA adapters can be efficiently served on top of our base model.
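The callable-class pattern mentioned above can be sketched in plain Python. This is only an illustration of the idea, not the Ray or vLLM API: `EchoModel`, `predict_in_batches`, and the adapter name `my-lora` are hypothetical stand-ins. The point is that expensive setup (loading a model and its adapter) happens once in `__init__`, and the same instance is then called on each batch.

```python
class EchoModel:
    """Hypothetical stand-in for an LLM wrapped in a callable class."""

    def __init__(self, adapter_name: str):
        # One-time setup; a real class would load the base model and adapter here.
        self.adapter_name = adapter_name
        self.calls = 0

    def __call__(self, batch: list[str]) -> list[str]:
        # Called once per batch; a real class would run generation here.
        self.calls += 1
        return [f"[{self.adapter_name}] {prompt.upper()}" for prompt in batch]


def predict_in_batches(model, prompts: list[str], batch_size: int) -> list[str]:
    """Apply the same model instance to consecutive slices of the prompts."""
    outputs = []
    for start in range(0, len(prompts), batch_size):
        outputs.extend(model(prompts[start:start + batch_size]))
    return outputs


model = EchoModel("my-lora")  # assumed adapter name, for illustration only
outputs = predict_in_batches(model, ["a", "b", "c"], batch_size=2)
```

Three prompts with a batch size of two mean the model instance is invoked twice while being constructed only once.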


🛠️ Fine-tune our LLMs (ex. @AIatMeta Llama 3) with full control (LoRA/full parameter, training resources, loss, etc.) and optimizations (data/model parallelism, mixed precision, flash attn, etc.) with distributed training.


🔢 Preprocess our dataset (filter, clean, schema adherence, etc.) with batch data processing using @raydistributed. Ray Data helps us apply any Python function or callable class on batches of data using any compute we want.
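As a rough picture of what batch preprocessing with a plain function looks like, here is a minimal sketch in standard-library Python. It does not use Ray; `map_batches` below is a hypothetical local helper written for this example, and `clean_batch` and the sample rows are made up. It filters out rows without text and normalizes the rest to a single `text` field.

```python
from typing import Callable, Iterator

Row = dict
Batch = list[Row]


def iter_batches(rows: list[Row], batch_size: int) -> Iterator[Batch]:
    """Yield consecutive slices of at most batch_size rows."""
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]


def map_batches(rows: list[Row], fn: Callable[[Batch], Batch], batch_size: int = 2) -> list[Row]:
    """Hypothetical helper: apply fn to each batch and concatenate the results."""
    out: list[Row] = []
    for batch in iter_batches(rows, batch_size):
        out.extend(fn(batch))
    return out


def clean_batch(batch: Batch) -> Batch:
    """Filter empty rows, strip whitespace, and keep only the 'text' field."""
    cleaned = []
    for row in batch:
        text = row.get("text", "").strip()
        if text:
            cleaned.append({"text": text})
    return cleaned


rows = [{"text": "  hello "}, {"text": ""}, {"text": "world"}, {"label": 1}, {"text": " ray "}]
result = map_batches(rows, clean_batch, batch_size=2)
```

Because the function only ever sees one batch at a time, the same logic could in principle be handed to a distributed runner that schedules batches across workers.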


RT @smlpth: I’ve read dozens of articles on building RAG-based LLM Applications, and this one by @GokuMohandas and @pcmoritz from @anyscale…


RT @bhutanisanyam1: The definitive guide to RAG in production! 🙏 @GokuMohandas walks us through implementing RAG from scratch, building a…


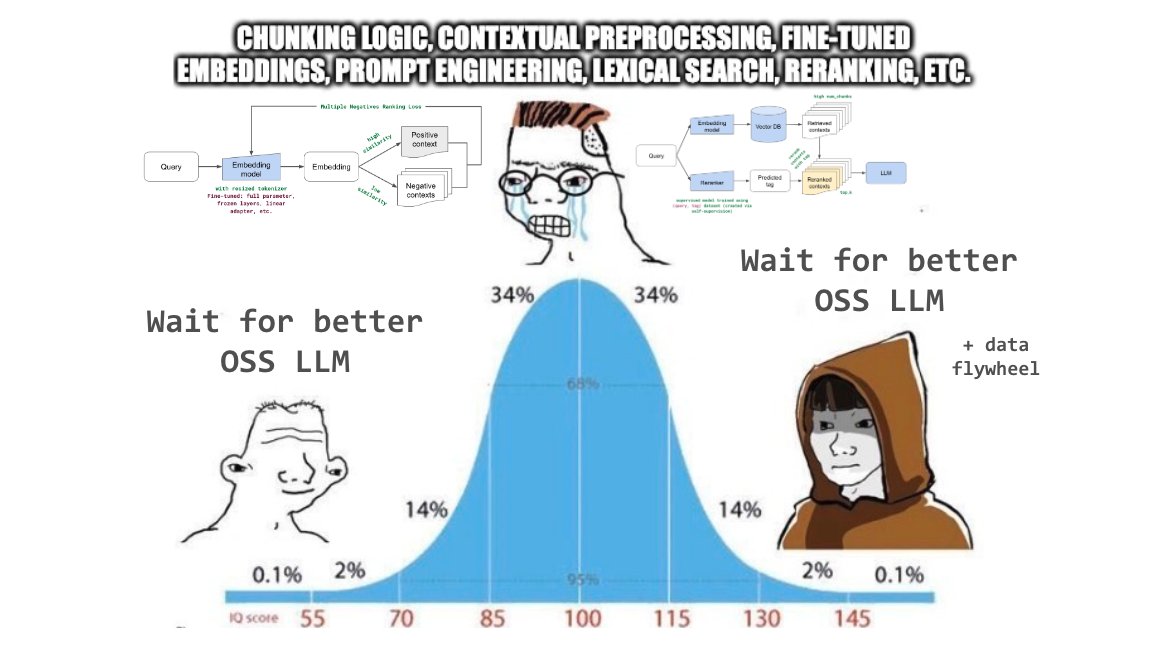

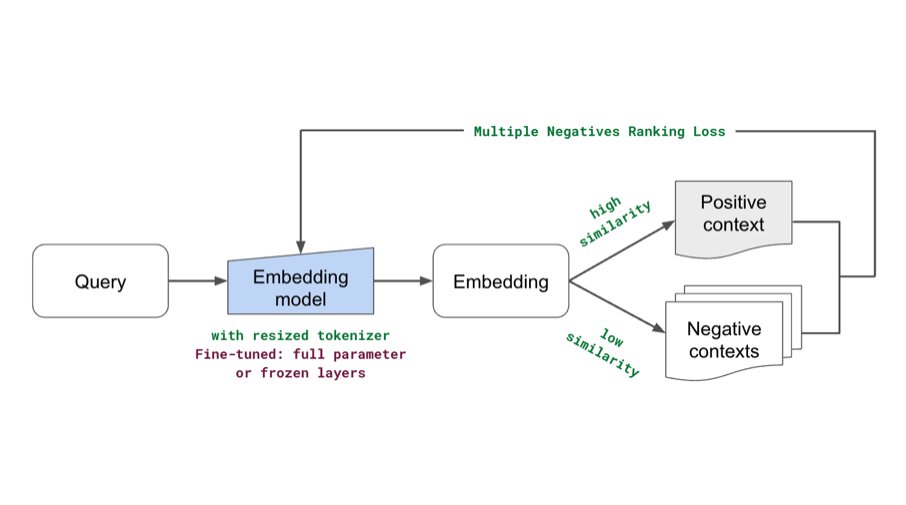

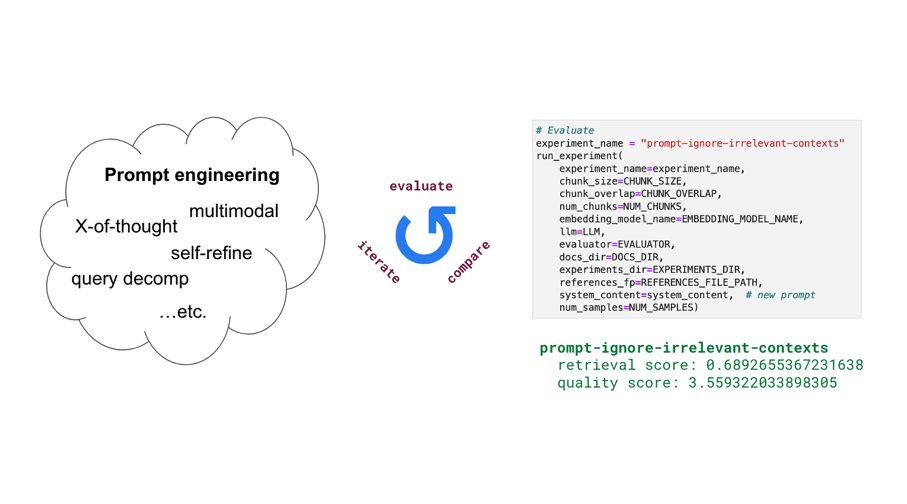

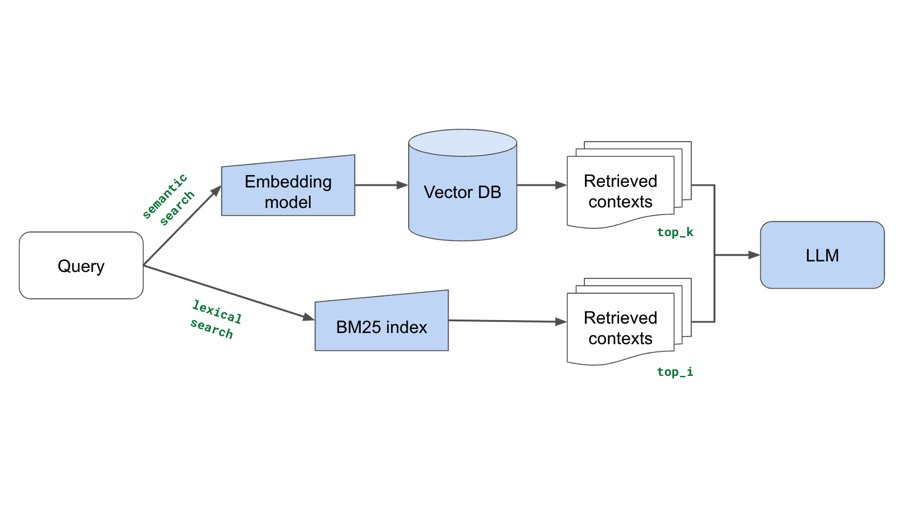

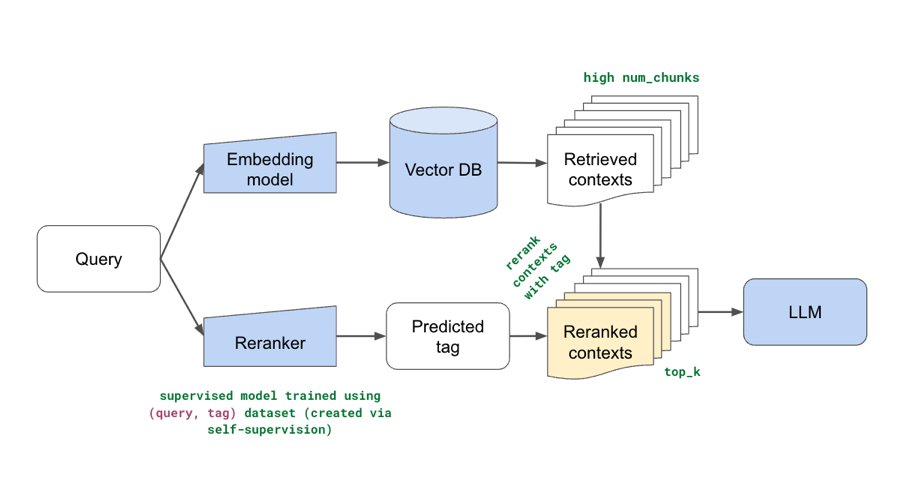

Added some new components (fine-tuning embeddings, lexical search, reranking, etc.) to our production guide for building RAG-based LLM applications. Combining these yielded significant retrieval and quality score boosts (evals included). Blog:

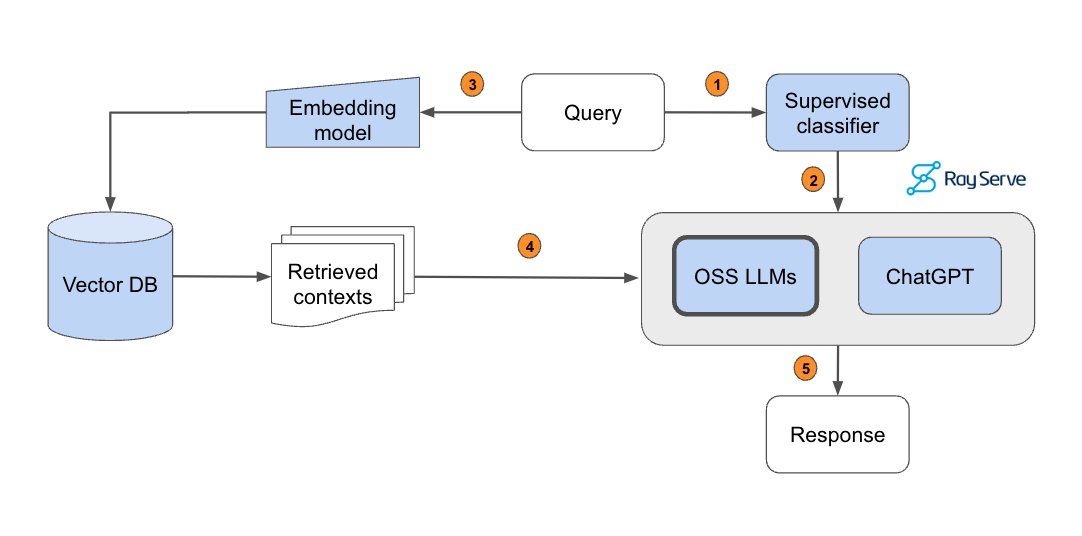

Excited to share our production guide for building RAG-based LLM applications where we bridge the gap between OSS and closed-source LLMs.
- 💻 Develop a retrieval augmented generation (RAG) based LLM application from scratch.
- 🚀 Scale the major workloads (load, chunk, embed,


RT @chipro: New blog post: Multimodality and Large Multimodal Models (LMMs). Being able to work with data of different modalities -- e.g. t…


RT @LangChainAI: looking for a good read with your weekend ☕ or 🍵? This series on RAG from @anyscalecompute is full of great stuff!


RT @bhutanisanyam1: The best guide I’ve read on RAG based LLM Applications! 🙏 It’s a crispy code first tutorial that starts from scratch,…


RT @hwchase17: This is an incredible resource on building RAG-based LLM applications. 45 minute read!!!! Lots to learn.
