rohan

@global__void

Followers

22

Following

630

Media

11

Statuses

85

inference is perhaps the most valuable emerging software category. as models get smarter and more economically valuable, compute will increasingly be spent drawing samples from the models. if you'd like to work on inference at openai, reach out — gdb@openai.com. include a

104

127

2K

What a privilege to be tired from the work you prayed for. What a privilege to feel overwhelmed by growth you used to dream about. What a privilege to be challenged by a life you created on purpose. What a privilege to outgrow things you used to settle for.

81

14K

51K

triton, gluon, cutedsl, hopper, blackwell, tensorcores, layouts, composition, local_tile, partitionS, partitionD, wgmma, tcgen05, TMA, block scaling, coalesced access, ampere, ada lovelace, cutlass, cublas, cudnn, flash attention, gemm, sgemm, fp16, bf16, mxfp8, nvfp4, int4,

38

113

2K

half of my model runtime is spent on sampling and the other half of the time it OOMs on colab 🥀

0

0

1

CUDA 13.0 just dropped. I compressed their 26 page pdf into a thread:

12

123

1K

he also has an amazing youtube channel, one of the first ever videos I watched on training was from him:

0

0

0

This guy literally dropped the best life advice you'll ever hear

17

211

2K

just when I think I'm beginning to understand CuTe layouts I'll see another one that shatters me

0

0

1

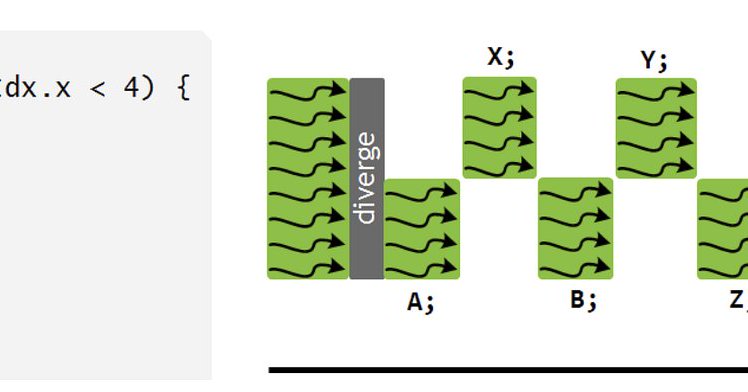

CUDA warps make the sweet parallelism that we love possible, but what about divergence? In this blog post I talk about how it has been handled over the years. If you do give it a read, I would love to hear what you have to say! https://t.co/IQf8yJalQ9

medium.com

As I’ve been learning CUDA and exploring its execution model, one concept that initially stood out was the ideal of warp-level lockstep…

0

0

0

been a while but we stay cooking! (there's so much to learn ahhhhh)

0

0

2

huhhh

> fp8 is 100 tflops faster when the kernel name has "cutlass" in it kms https://t.co/KpZjwSAkrM

0

0

1

I used to think warp-level execution was guaranteed to be lockstep, and many sources, including LLMs, made it seem that way. That was the case before Volta, but it is no longer guaranteed. I'm enlightened, but that means I have to unlearn some notions ⚰️

0

0

1