Giannis Daras

@giannis_daras

Followers: 3,893

Following: 402

Media: 125

Statuses: 1,232

Ph.D. candidate, Computer Science @UTAustin, working with @AlexGDimakis. Ex: @nvidia, @google, @explosion_ai, @ntua

Joined October 2018


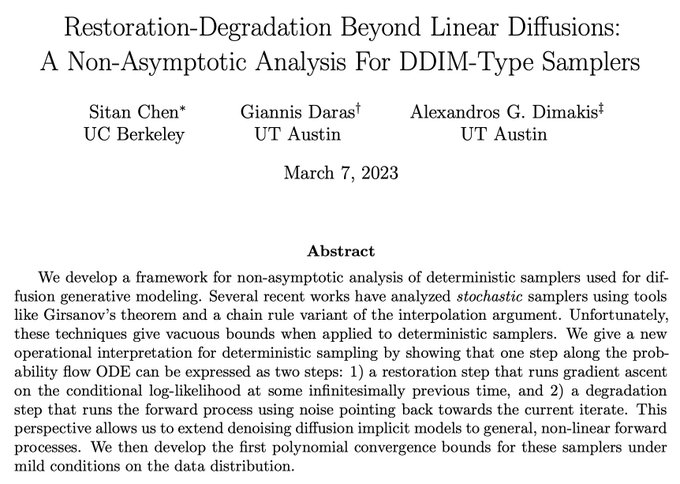

We wrote a small paper with @AlexGDimakis summarizing our findings.

Please find the paper here:

arXiv version coming soon.

(7/n, n=7).


Based on valid comments, we updated our paper with a discussion of its limitations and changed the title to "Discovering the Hidden Vocabulary of DALLE-2". Thanks to @mraginsky, @rctatman, @benjamin_hilton and others for useful comments.


Today was my first day as a Research Scientist Intern at NVIDIA 🥳

Will be working with @ArashVahdat and the team on some pretty exciting research directions around generative models in the coming months 👌

Looking forward to it!


@benjamin_hilton, @realmeatyhuman, @BarneyFlames, @mattgroh, @rctatman, @Plinz, @Thomas_Woodside hopefully some of your concerns are addressed! Let us know what you think.

We will update the pre-print with this discussion:


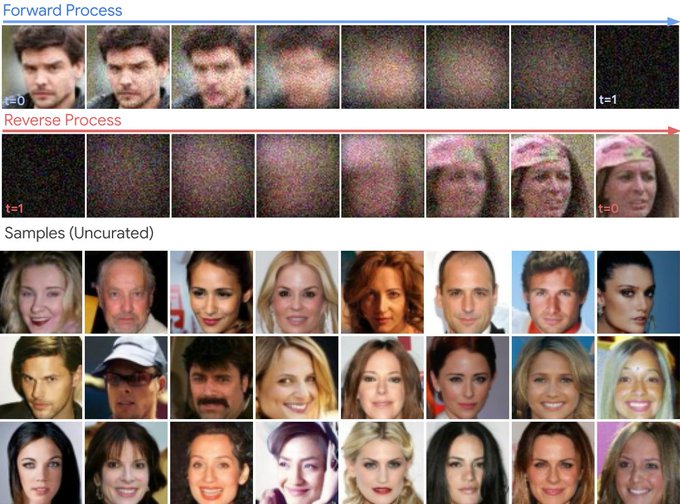

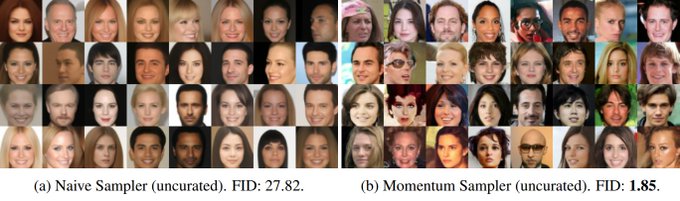

Excited to announce our paper: Your Local GAN.

Paper:

Code:

We obtain a 14.53% FID improvement over SAGAN on ImageNet by changing only the attention layer.

We introduce a new sparse attention layer with 2-D locality. Thread: 1/n

New paper: Your Local GAN: a new layer of two-dimensional sparse attention and a new generative model. Also progress on inverting GANs, which may be useful for inverse problems.

With @giannis_daras from NTUA and @gstsdn and @Han_Zhang_ from @googleai
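The tweets do not spell out the exact sparsity patterns of the YLG layer, so the sketch below swaps in the simplest notion of 2-D locality: each query pixel attends only to key pixels inside a square window around it on the image grid, rather than to a window over the flattened 1-D sequence. The function names `local_2d_mask` and `density` are hypothetical, chosen for illustration.

```python
from typing import List


def local_2d_mask(height: int, width: int, radius: int) -> List[List[bool]]:
    """Return an (H*W) x (H*W) mask; mask[q][k] is True when key pixel k lies
    inside the (2*radius+1) x (2*radius+1) window centred on query pixel q.

    This is a generic 2-D neighbourhood mask, not YLG's own sparsity pattern.
    """
    n = height * width
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        qr, qc = divmod(q, width)  # row-major flattening of the 2-D grid
        for k in range(n):
            kr, kc = divmod(k, width)
            # Chebyshev distance <= radius keeps neighbours along both image
            # axes, unlike a 1-D window over the flattened sequence.
            mask[q][k] = max(abs(qr - kr), abs(qc - kc)) <= radius
    return mask


def density(mask: List[List[bool]]) -> float:
    """Fraction of query-key pairs that are kept (1.0 means dense attention)."""
    n = len(mask)
    return sum(sum(row) for row in mask) / (n * n)


m = local_2d_mask(4, 4, radius=1)
```

On a 4x4 grid, pixels 3 (row 0, column 3) and 4 (row 1, column 0) are neighbours in the flattened sequence but three columns apart on the image, so a 1-D window would connect them while the 2-D mask does not; pixels 0 and 4, four positions apart in 1-D, are vertical neighbours and stay connected.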


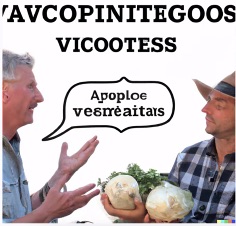

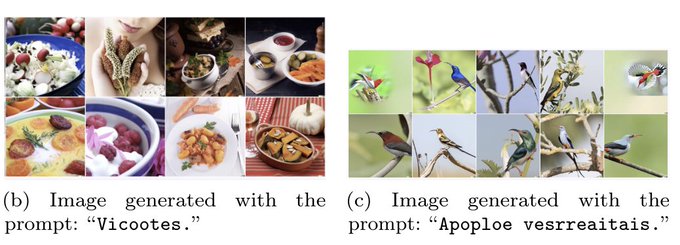

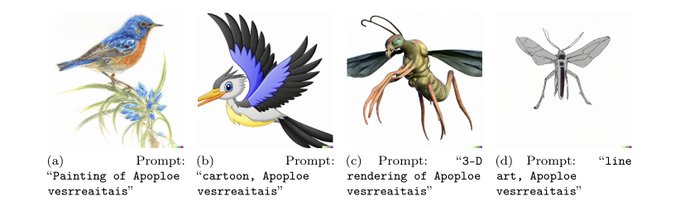

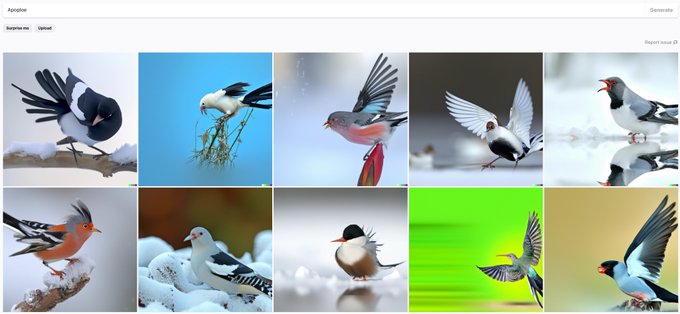

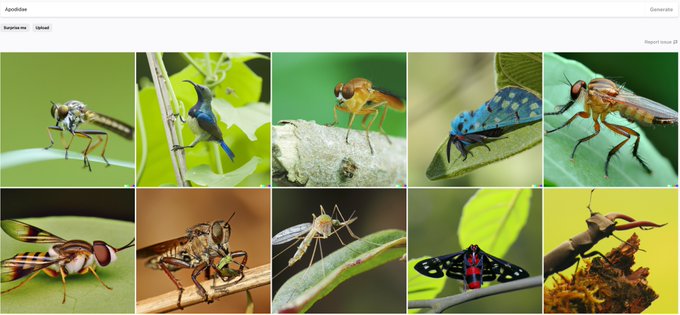

@BarneyFlames

,

@mattgroh

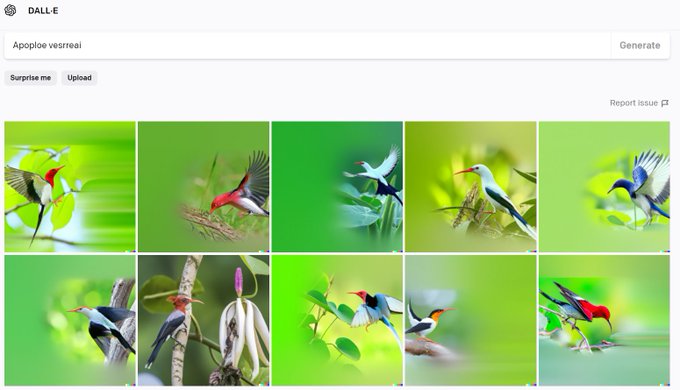

pointed out that "Apoploe", our gibberish word for birds, has similar BPE encoding to "Apodidae".

Interestingly, "Apodidae" produces ~1/10 birds (but many flying insects), while our gibberish "Apoploe" gives 10/10.

(5/N)


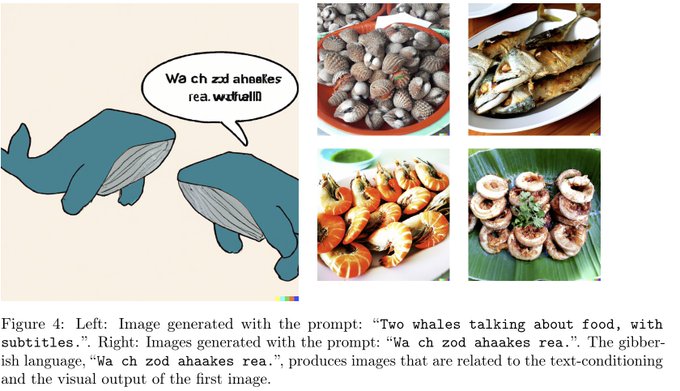

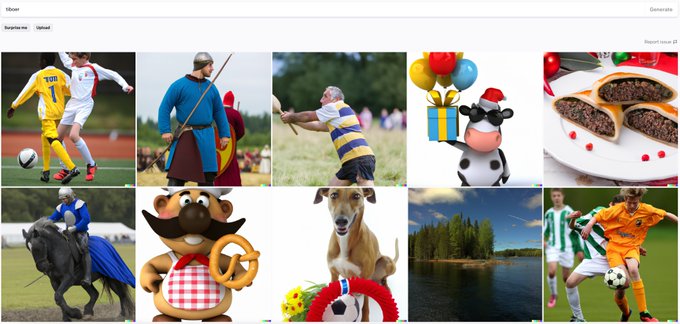

@benjamin_hilton

said that we got lucky with the whales example.

We found another similar example.

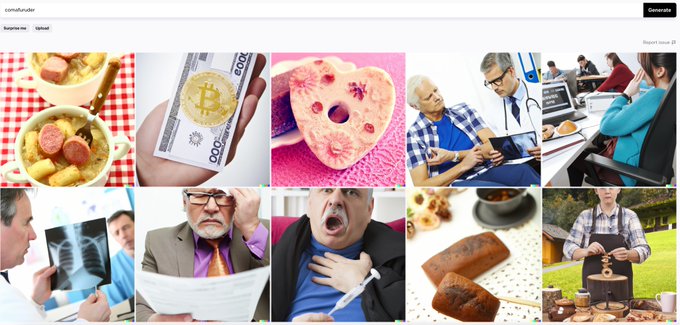

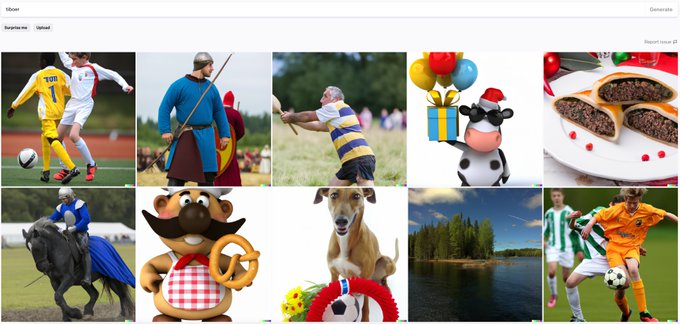

"Two men talking about soccer, with subtitles" gives the word "tiboer". This seems to give sports in ~4/10 images. (2/N)


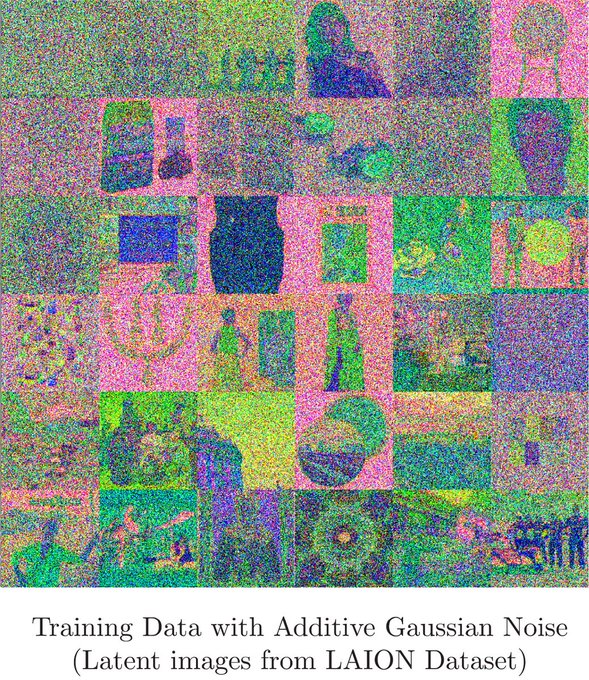

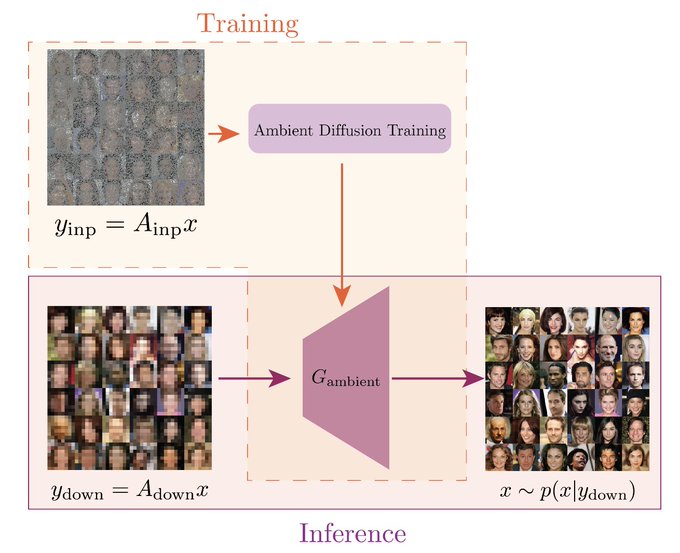

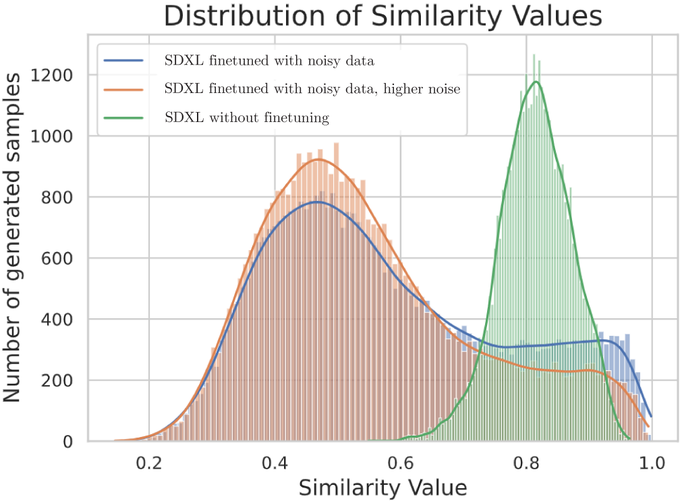

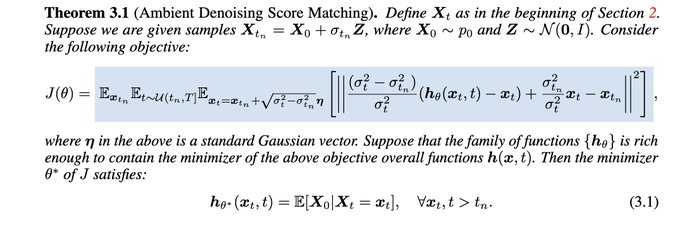

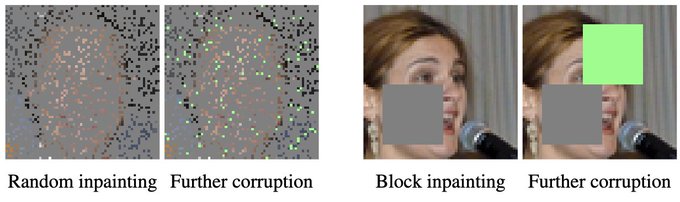

Ambient Diffusion got accepted to NeurIPS 2023 🥳

Useful for training/finetuning generative models in applications where access to uncorrupted data is expensive or undesirable (because of memorization).

Very excited about this research direction.

See you all in New Orleans! 🎷


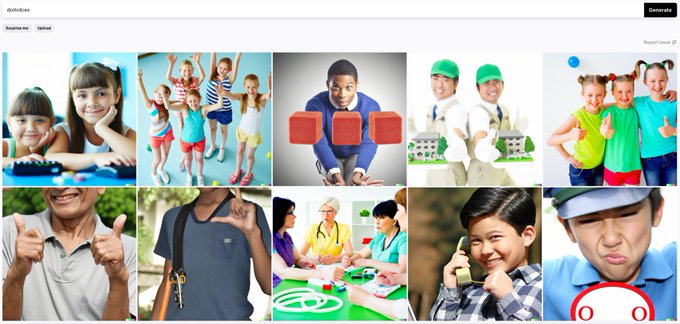

A few people, including @realmeatyhuman, asked whether our method works beyond natural images (of birds, etc.).

Yes, we found some examples that seem statistically significant.

E.g., "doitcdces" seems related to students (or learning) in ~4/10 images. (3/N)
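The thread does not say which significance test was used. A minimal sketch, assuming a one-sided binomial test: count how many of n generated images show the concept and compare against a base rate p0 at which the concept would appear anyway. Both base rates below (5% and 10%) are hypothetical placeholders; in practice p0 would have to be estimated from unrelated prompts.

```python
from math import comb


def binomial_tail(k: int, n: int, p0: float) -> float:
    """One-sided p-value P(X >= k) for X ~ Binomial(n, p0).

    p0 is a hypothetical base rate at which the concept shows up for an
    unrelated prompt; it must be estimated separately for a real test.
    """
    return sum(comb(n, i) * p0 ** i * (1 - p0) ** (n - i) for i in range(k, n + 1))


# ~4/10 on-concept images against an assumed 5% base rate.
p_students = binomial_tail(4, 10, 0.05)

# 10/10 bird images against an assumed 10% base rate.
p_birds = binomial_tail(10, 10, 0.10)
```

Under these assumed base rates, 4/10 hits already gives a p-value of roughly 0.001, while 10/10 hits gives 0.1^10; the conclusion is only as trustworthy as the base rate plugged in.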


Excited to announce our #NeurIPS2020 paper: SMYRF: Efficient Attention using Asymmetric Clustering.

Paper:

Code:

We propose a novel way to approximate *pre-trained* attention layers, which can also be used to train from scratch.
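The tweet names the idea (asymmetric clustering) but not its details, so this is a sketch under stated assumptions rather than SMYRF itself. It swaps in a standard XBOX-style reduction from maximum inner product search to nearest-neighbour search: queries and keys get *different* extra coordinates, so that nearby transformed vectors have large dot products. It then hashes both with one random projection, sorts, cuts into equal-size clusters, and computes softmax attention only inside each cluster. All function names are hypothetical, and the sketch assumes the number of queries equals the number of keys and is divisible by `n_clusters`.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def asymmetric_transform(queries: List[Vec], keys: List[Vec]) -> Tuple[List[Vec], List[Vec]]:
    """Map queries and keys differently (assumed XBOX-style maps, not SMYRF's own).

    With M^2 = max ||k||^2, F(q) = [q, 0] and G(k) = [k, sqrt(M^2 - ||k||^2)] satisfy
    ||F(q) - G(k)||^2 = ||q||^2 + M^2 - 2 q.k, so a small distance means a large q.k.
    """
    m_sq = max(dot(k, k) for k in keys)
    f = [q + [0.0] for q in queries]
    g = [k + [math.sqrt(max(m_sq - dot(k, k), 0.0))] for k in keys]
    return f, g


def balanced_clusters(vectors: List[Vec], direction: Vec, n_clusters: int) -> List[List[int]]:
    """Sort indices by a random projection and cut them into equal-size clusters."""
    order = sorted(range(len(vectors)), key=lambda i: dot(vectors[i], direction))
    size = len(vectors) // n_clusters  # assumes len(vectors) is divisible by n_clusters
    return [order[c * size:(c + 1) * size] for c in range(n_clusters)]


def clustered_attention(queries: List[Vec], keys: List[Vec], values: List[Vec],
                        n_clusters: int, seed: int = 0) -> List[Vec]:
    """Softmax attention restricted to the c-th query cluster and the c-th key cluster."""
    rng = random.Random(seed)
    f, g = asymmetric_transform(queries, keys)
    # One shared random direction hashes both transformed queries and keys.
    direction = [rng.gauss(0.0, 1.0) for _ in range(len(f[0]))]
    q_clusters = balanced_clusters(f, direction, n_clusters)
    k_clusters = balanced_clusters(g, direction, n_clusters)
    scale = 1.0 / math.sqrt(len(queries[0]))
    out: List[Vec] = [[] for _ in queries]
    for qc, kc in zip(q_clusters, k_clusters):
        for qi in qc:
            scores = [scale * dot(queries[qi], keys[ki]) for ki in kc]
            top = max(scores)  # subtract the max for a numerically stable softmax
            weights = [math.exp(s - top) for s in scores]
            total = sum(weights)
            out[qi] = [sum(w * values[ki][d] for w, ki in zip(weights, kc)) / total
                       for d in range(len(values[0]))]
    return out
```

Because clusters are cut by sorting rather than by bucketing, every cluster has exactly the same size, so each query attends to `len(keys) // n_clusters` keys instead of all of them.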


Our gibberish tokens might have many meanings.

@benjamin_hilton ran "Contarra ccetnxniams luryca tanniounons" and pointed out that not all results are bugs. Indeed, our gibberish text produces a statistically significant fraction of images matching the target concept, but rarely a 100% match. (7/N)


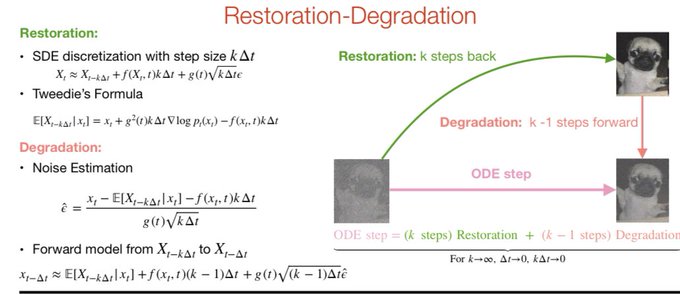

Consistent Diffusion Meets Tweedie: Training Exact Ambient Diffusion Models with Noisy Data.

Accepted to ICML 2024 🥳

Come and meet me in Vienna to learn about how to train/finetune diffusion models with noisy data 🚧


Slides from my talk at #spaCyIRL on sparse attention factorizations are available here: …

Thanks for the massive interest; the paper and code are going to be released soon.

Slides partially describe joint work with: @georgepar_91 @AlexGDimakis @apotam


@benjamin_hilton Finally, as noted by many, this is far from a language: it lacks grammar, syntax, coherence and many other things. We changed the title to "Discovering the Hidden Vocabulary of DALLE-2" and made the limitations explicit in the paper. Thanks for all the feedback!


Introducing CommonPool, the largest collection of image-text pairs: 2.5x the size of LAION.

A 1.4B subset of our pool outcompetes compute-matched CLIP models from OpenAI and LAION.

DataComp, a new benchmark for multimodal datasets, is here!


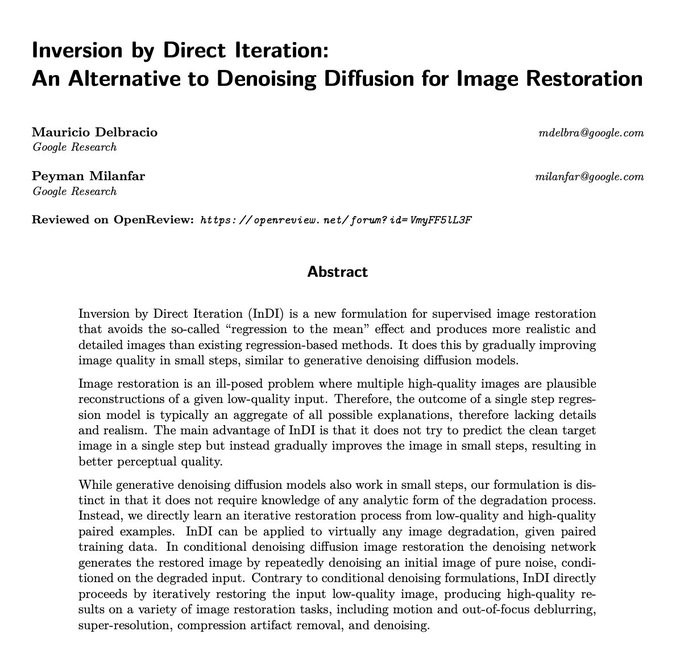

Great diffusion paper from Mauricio (@2ptmvd) and Peyman (@docmilanfar). Highly recommended read!


Cognitive science research indicates that bilingualism reduces the rate of cognitive decline.

Does this happen in neural networks too?

We train monolingual and bilingual GPT models and show that the bilingual model's performance decays more slowly under various weight corruptions.
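The tweet does not list which weight corruptions were applied. A minimal sketch, assuming two common choices (zeroing a random fraction of weights and adding Gaussian noise) on a flat list of weights, plus a sweep helper that scores the model at each corruption level. The `evaluate` callable stands in for a real evaluation (e.g. loss on held-out text) and is hypothetical, as are all the function names.

```python
import random
from typing import Callable, List, Sequence


def zero_out(weights: Sequence[float], fraction: float, seed: int = 0) -> List[float]:
    """Return a copy with a random `fraction` of the weights set to zero."""
    rng = random.Random(seed)
    n_drop = round(fraction * len(weights))
    dropped = set(rng.sample(range(len(weights)), n_drop))
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


def add_noise(weights: Sequence[float], sigma: float, seed: int = 0) -> List[float]:
    """Return a copy with i.i.d. Gaussian noise of std `sigma` added to every weight."""
    rng = random.Random(seed)
    return [w + rng.gauss(0.0, sigma) for w in weights]


def corruption_curve(weights: Sequence[float],
                     evaluate: Callable[[List[float]], float],
                     fractions: Sequence[float], seed: int = 0) -> List[float]:
    """Score a model at each zeroing level; `evaluate` is a hypothetical scoring callable."""
    return [evaluate(zero_out(weights, f, seed)) for f in fractions]
```

Comparing two such curves (one per model) under the same seeds is one way to make "decays more slowly" concrete: the flatter curve belongs to the more robust model.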


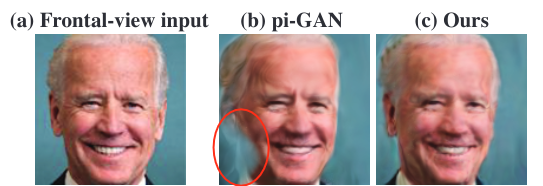

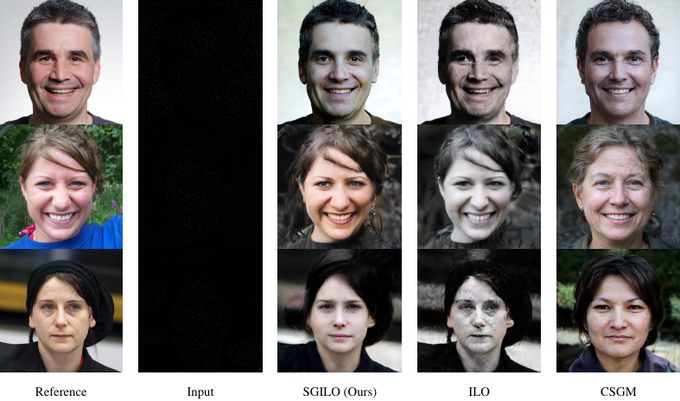

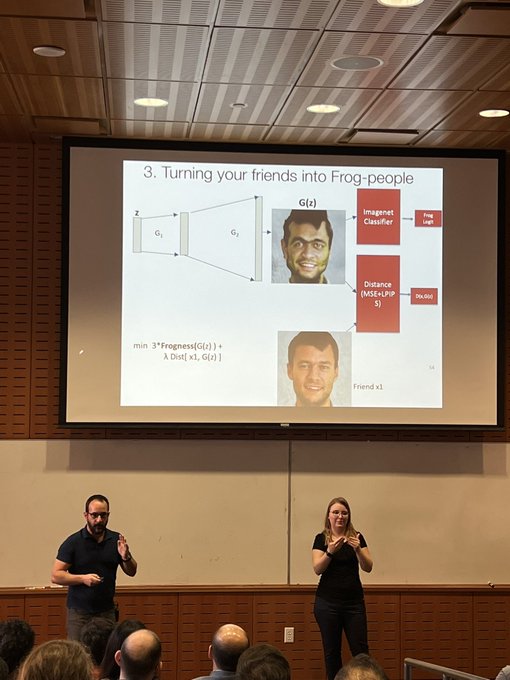

Happening now, in person! My Ph.D. advisor, @AlexGDimakis, turns Prof. @StefanoErmon into a frog using our algorithm, Intermediate Layer Optimization. Many interesting points on the fairness and modularity of algorithms that use deep generative models.


I was training a PyTorch model on multiple GPUs and running out of memory because the loss was computed on a single GPU. This amazing gist written by @Thom_Wolf is a nice and clean workaround. Check it out if you haven't already.


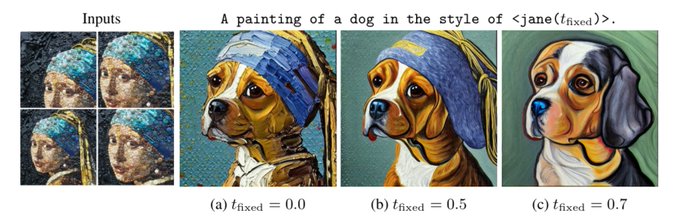

Please check out our preprint (work in progress):

This work is part of my internship at Google this summer, with amazing collaborators @2ptmvd, @docmilanfar, @AlexGDimakis + Hossein Talebi. Thank you for this opportunity!


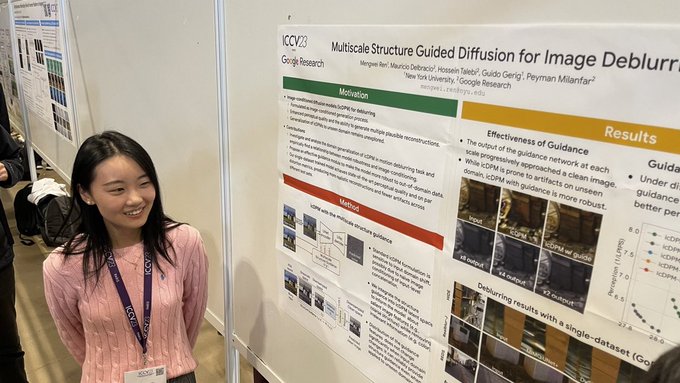

Great paper by my lunch buddy at Google Research last summer! Congrats @mengweir 🎉💥

Great job @mengweir on your paper and poster - your hard work really paid off - Congrats!

+ thanks to the very capable social media chair @CSProfKGD for the great photo


My 2020: Graduated from @ntua, started a Ph.D. at @UTCompSci working with @AlexGDimakis, moved from Greece to the US, got my first papers accepted at @CVPR and @NeurIPSConf, got an exciting internship offer for summer 21, and created wonderful memories with friends & family.


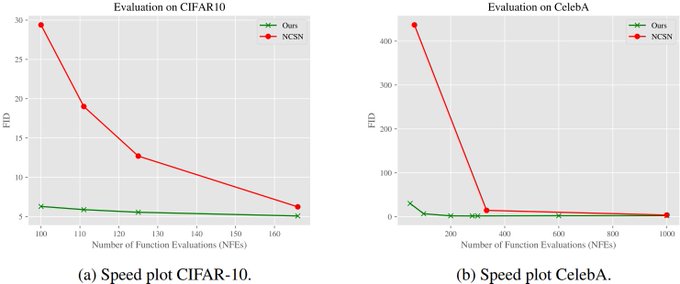

Deterministic diffusion samplers (e.g., DDIM) can efficiently sample from any distribution, given an estimate of the underlying score function!

We also show how to extend the DDIM idea to any diffusion (linear or non-linear), similar to the Soft/Cold Diffusion samplers.
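A minimal sketch of a deterministic DDIM sampler (eta = 0, so no fresh noise is injected). To stay self-contained it assumes 1-D Gaussian data N(mu, s^2), for which the optimal noise prediction E[eps | x_t] (equivalently, the score) has a closed form, and a linear beta schedule; both are illustrative choices, not the setup of the paper. The function names are hypothetical.

```python
import math
from typing import List


def linear_alpha_bars(T: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t for a linear beta schedule (an assumed choice)."""
    alpha_bars, prod = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def gaussian_eps(x: float, ab: float, mu: float, s: float) -> float:
    """Exact E[eps | x_t] for data N(mu, s^2), where
    x_t = sqrt(ab) x_0 + sqrt(1 - ab) eps  ~  N(sqrt(ab) mu, ab s^2 + 1 - ab)."""
    var = ab * s * s + 1.0 - ab
    return math.sqrt(1.0 - ab) * (x - math.sqrt(ab) * mu) / var


def ddim_sample(x_T: float, alpha_bars: List[float], mu: float, s: float) -> float:
    """Deterministic DDIM: predict x_0, then re-noise it to the previous level
    using the *same* predicted eps instead of sampling new noise."""
    x = x_T
    for t in range(len(alpha_bars) - 1, -1, -1):
        ab = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0  # final step returns x_0_hat
        eps = gaussian_eps(x, ab, mu, s)
        x0_hat = (x - math.sqrt(1.0 - ab) * eps) / math.sqrt(ab)
        x = math.sqrt(ab_prev) * x0_hat + math.sqrt(1.0 - ab_prev) * eps
    return x


ab = linear_alpha_bars()
mu, s = 3.0, 2.0
mean_T = math.sqrt(ab[-1]) * mu                      # mean of the terminal marginal
std_T = math.sqrt(ab[-1] * s * s + 1.0 - ab[-1])     # std of the terminal marginal
```

Because the map from x_T to the output is deterministic (affine, for Gaussian data), starting at `mean_T` lands on `mu` up to rounding, and starting one terminal standard deviation above it lands close to `mu + s`; the small shortfall is discretization error that shrinks as the number of steps grows.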


Thrilled to share with you that our paper, Your Local GAN, got accepted at @CVPR! I feel grateful that my first-ever paper as an undergrad at @ntua got accepted at such a conference. This work is the result of an awesome collaboration with @AlexGDimakis, @gstsdn and @Han_Zhang_.


@benjamin_hilton I think there are three concerns in this thread: 1) gibberish texts don't have 1-1 mappings with English texts, 2) the meaning of gibberish texts changes when the context changes, and 3) the attack method doesn't always work. (1/N)


When I joined the lab, I asked @AlexGDimakis to name a few of his past Ph.D. students who impressed him the most.

I won't disclose the full answer, but I will say one thing: @DimitrisPapail was among the top in this (short) list.

So, thank you @DimitrisPapail, means a lot!


@rctatman The criticism here is very fair. We changed "Secret Language" to "Hidden Vocabulary" in the title and added a section on Limitations to our paper. Thanks for the constructive feedback!


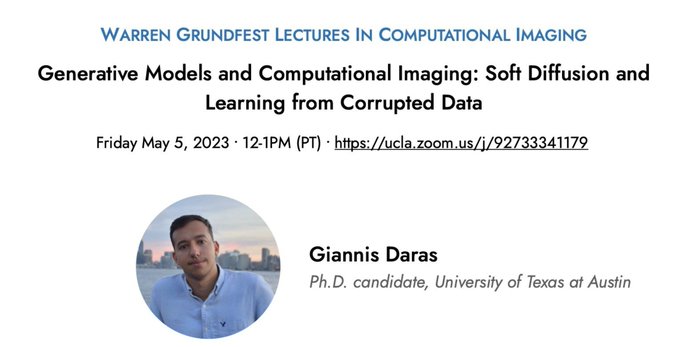

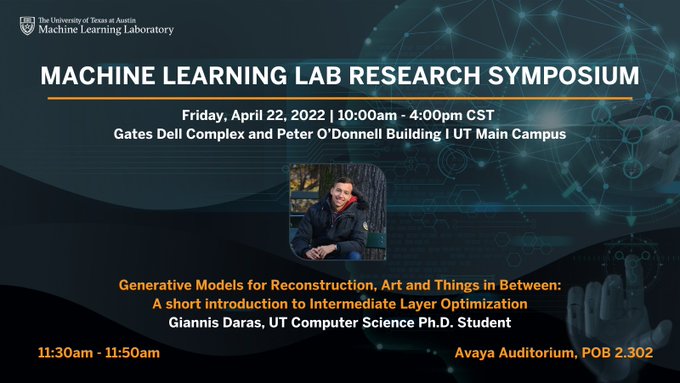

So excited for my first in-person Ph.D. talk. Come join us this Friday, it will be fun!

Giannis Daras @giannis_daras discusses "Generative Models for Reconstruction, Art and Things in Between: A short introduction to Intermediate Layer Optimization" FRIDAY, 4/22, at the @UTAustin Machine Learning Lab Research Symposium. Register today:


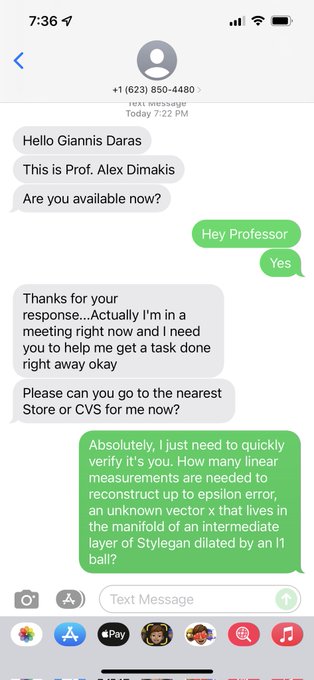

Continuing the trend @roydanroy's student started. Time to teach these scammers some math 🤣


@Plinz I agree with this thread. I don't believe there is anything "cryptic"; the word "secret" in the title was probably more clickbait than it should have been. That said, the realization that "random" strings map to consistent visual concepts creates many security challenges.


Exciting personal news: Today is the first day of my Research Internship at @Google! I will be working with Abhishek Kumar (@studentofml), Vincent Chu and Dmitry Lagun (@DmitryLagun) on NeRF-related research ideas. @googlestudents


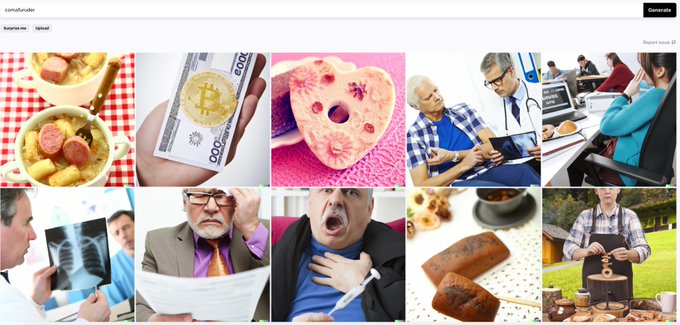

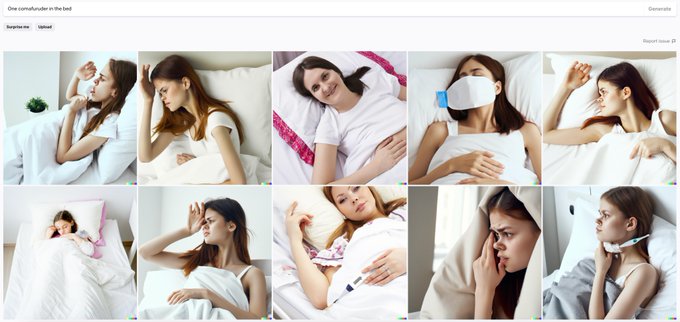

@benjamin_hilton

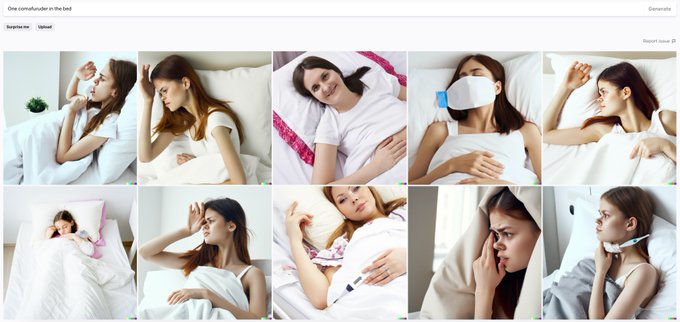

1) Indeed, a gibberish text can mean more than one thing. But this is also true for words in English (homonyms). Also, DALLE-2 text might mean resemble clusters of things. For example, we found that the word "comafuruder" has something to do with hospitals/ doctors/illness.


Here is the link to our thread, talking about this work in a little more detail:


@awjuliani Good question; we don't know, but it would be interesting to explore! As many have mentioned, we discovered that there is a gibberish vocabulary, not a gibberish language. We currently have no evidence proving (or disproving) that there is some sort of syntax/grammar.


We open-source our code and models:

GitHub repo:

Colab:

Read more about our paper here:

Joint work with @AlexGDimakis


@ArthurB I see some consistency in the generated outputs. It seems entirely possible to me that Midjourney has its own vocabulary: a set of words that seem random to humans but are consistently mapped to visual concepts. Let us know if you find any!


@benjamin_hilton 3) The attack method doesn't always work. This is true: we did a couple of runs to get this working. However, it works *sometimes*, and it is interesting that the model reveals its own adversarial examples. Another example: "Two men talking about soccer, with subtitles".


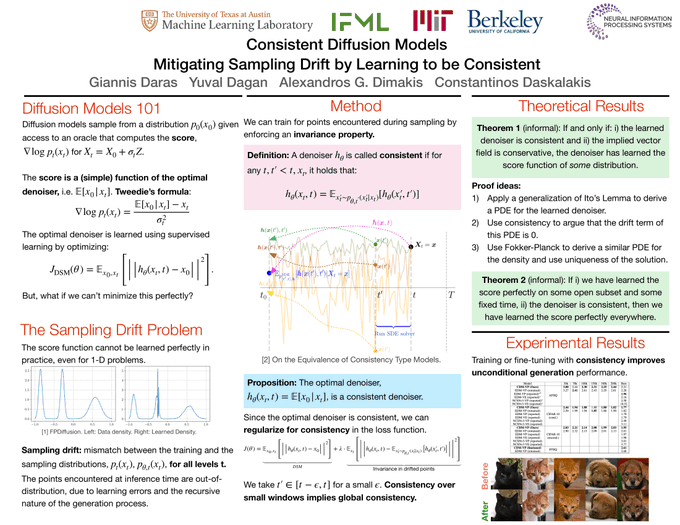

Research paper:

This is work I have been doing for a year under the supervision of two wonderful mentors: Alex Dimakis (@AlexGDimakis) and Constantinos Daskalakis (@KonstDaskalakis).


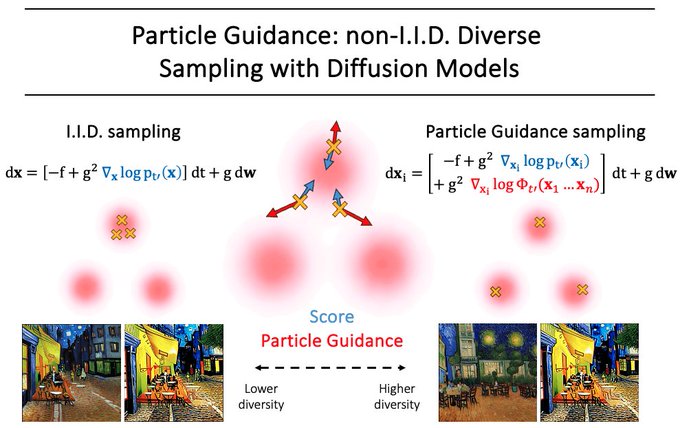

Interesting work on increasing the diversity of diffusion sampling


@benjamin_hilton 2) Yes, gibberish text changes meaning based on the context (but not always). I do not yet understand when/why this happens, but I think it is worth exploring.


Video generation coming together. The pace of progress is insane.

Cool use of video inits with #DeforumDiffusion / #stablediffusion, from u/EsdricoXD on Reddit.

prompt: A film still of lalaland, artwork by studio ghibli, makoto shinkai, pixv

sampler: euler ancestral

Steps: 45

scale: 14

strength: 0.55

Coherent and really effective 🔥
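Among the settings above, `scale: 14` is, assuming the usual Stable Diffusion front-end convention, the classifier-free guidance scale. A minimal sketch of what that number does at each denoising step: the sampler mixes an unconditional and a prompt-conditioned noise prediction and extrapolates toward the prompt. The function name and the toy 2-element predictions are hypothetical; the sampler (`euler ancestral`) and `strength` are not modelled here.

```python
from typing import List


def classifier_free_guidance(eps_uncond: List[float], eps_cond: List[float],
                             scale: float) -> List[float]:
    """Combine unconditional and prompt-conditioned noise predictions.

    scale = 1 returns the conditional prediction unchanged; larger values push
    further along (eps_cond - eps_uncond), i.e. follow the prompt more strongly.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# "scale: 14" from the settings above, applied to toy predictions.
guided = classifier_free_guidance([0.0, 0.5], [0.1, 0.5], 14.0)
```

Coordinates where the two predictions agree are left untouched, while any disagreement is amplified 14-fold, which is why high scales tend to follow the prompt closely at some cost in diversity.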


You can read the full paper here:

Joint work with Vincent Chu, @studentofml, @DmitryLagun, @AlexGDimakis (8/8)


Excited to share that our paper, ILO, got accepted to ICML! If you haven't had the chance already, read our work and/or play with the demo; it's fun!

Camera-ready version and follow-up work coming soon! Congrats to everyone who submitted to ICML, and good luck with NeurIPS!


Exciting first day at the IFML (@MLFoundations) GenAI workshop today!

Some interesting discussions about open science and about how far the capabilities of GPT-N might go.


To learn more, refer to our paper:

We also open-source our models and code:

Joint work with amazing collaborators: @AlexGDimakis, Adam Klivans, @YuvalDagan3, Kulin Shah, and Aravind Gollakota.


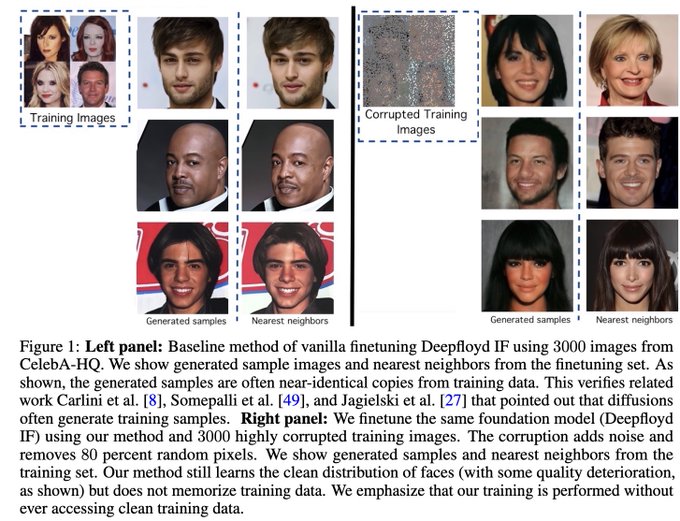

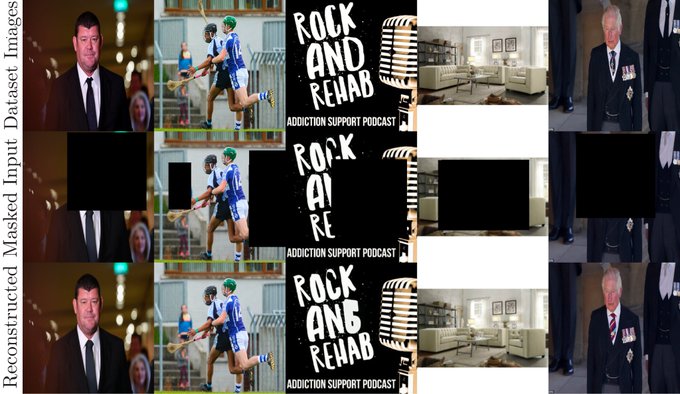

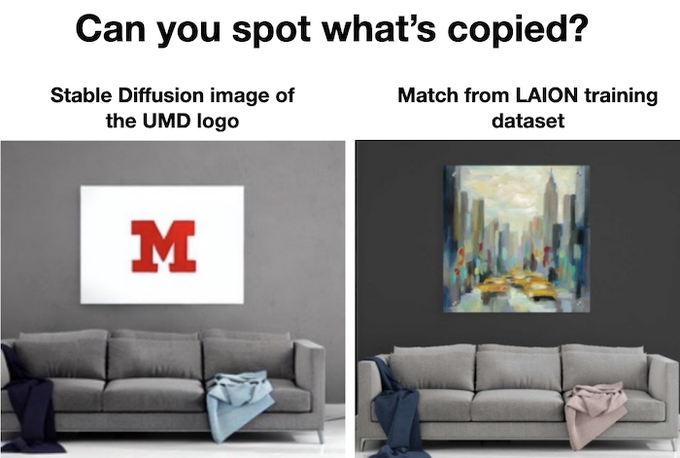

Recent work from @tomgoldsteincs's lab shows that diffusion models can generate exact copies or collages of training images.

We study the problem of training diffusion models with corrupted data, e.g., images with 90% of their pixels missing.
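The tweet states the setting but not the training objective, so the sketch below only illustrates the data side under stated assumptions: a random inpainting mask that hides 90% of the pixels of a flattened image, and a generic squared-error loss computed over observed pixels only, so missing pixels never act as supervision. This is not the paper's training objective, and all function names are hypothetical.

```python
import random
from typing import List, Sequence


def random_inpainting_mask(n_pixels: int, missing_fraction: float,
                           rng: random.Random) -> List[int]:
    """Return a mask where 1 marks an observed pixel and 0 a missing one."""
    n_missing = round(missing_fraction * n_pixels)
    missing = set(rng.sample(range(n_pixels), n_missing))
    return [0 if i in missing else 1 for i in range(n_pixels)]


def corrupt(image: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Zero out the missing pixels of a flattened image."""
    return [p * m for p, m in zip(image, mask)]


def masked_mse(prediction: Sequence[float], target: Sequence[float],
               mask: Sequence[int]) -> float:
    """Mean squared error over observed pixels only (mask == 1)."""
    observed = sum(mask)
    return sum(m * (p - t) ** 2 for p, t, m in zip(prediction, target, mask)) / observed


rng = random.Random(0)
mask = random_inpainting_mask(100, 0.9, rng)  # 90% of 100 pixels missing
```

Normalizing by the number of observed pixels, rather than by the full image size, keeps the loss on the same scale regardless of how aggressive the corruption is.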

#StableDiffusion is being sued for copyright infringement. Our recent #CVPR2023 paper revealed that diffusion models can indeed copy from training images in unexpected situations.

Let’s see what the lawsuit claims are, and if they're true or false 🧵


Drop by our poster, #645, to discuss an algorithmic interpretation of the Probability Flow ODE that extends DDIM to non-linear diffusions.

Bonus: a non-asymptotic analysis of deterministic diffusion samplers.

Will be presenting our work on analyzing *deterministic* diffusion samplers.

DDIM and other deterministic samplers are usually faster than their stochastic counterparts.

We make a first attempt to understand their theoretical properties.

Wed, 4-5:30pm CDT, Hall 1, #645


Great work!


Join me at #CVPR2020 to discuss 2-D local attention for GANs :) The Zoom session for our paper starts in 1 hour (5 p.m. Seattle time) and will last two hours.

CVPR link:

Joint work with: @AlexGDimakis, @gstsdn, @Han_Zhang_.
