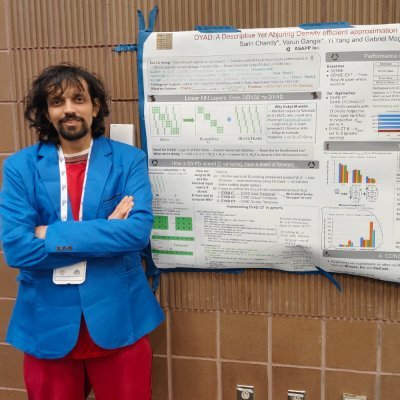

Darshan Deshpande

@getdarshan

Followers: 196 · Following: 395 · Media: 19 · Statuses: 154

Research Scientist working on RL environments and evals @PatronusAI | ex-Research @USC_ISI

San Francisco, CA

Joined July 2020

👋 Folks at #NEURIPS2025, come check out & stop by the poster of our Memtrack env at the SEA workshop happening at Upper Level 23ABC, 3:50pm onwards. Our env studies how well an agent dropped into a workplace can context engineer by composing tool calls to access intertwined

arxiv.org

Recent works on context and memory benchmarking have primarily focused on conversational instances but the need for evaluating memory in dynamic enterprise environments is crucial for its...

🚨 We will be presenting Memtrack today at the SEA workshop from 3:50pm onwards at #NeurIPS2025. Memtrack is a SoTA eval env to study an agent's ability to memorize and retrieve facts using exploration over interleaved enterprise Slack, Linear, and Git threads in a multi-QA setting

0

3

7

I will also be presenting Memtrack at #SEAWorkshop on 7th of Dec! https://t.co/XixzQ111eD

0

0

0

I will be at #NeurIPS2025 from 2nd-7th Dec. Happy to meet old and new friends and chat about non-deterministic evals, long-horizon RL and world building 🌍

1

0

1

Creating a bounty program out of benchmark datasets that restrict training, in order to build RL environments that can then be trained on using Prime's "open source" training services, is a scammy practice under the name of open science!

if you or a loved one is looking to learn about building environments and get a bag in the process, inquire within. our bounty list is bigger and better than ever

3

0

10

Excited to have contributed to OpenEnv before its release today! Thanks to @Meta and @huggingface for working towards standardizing RL environment creation!

We’re excited to support @Meta and @huggingface's OpenEnv launch today! OpenEnv provides an open-source framework for building and interacting with agentic execution environments. This allows researchers and developers to create isolated, secure, deployable, and usable

0

0

2

Thank you, @BerkeleyRDI, for hosting the Agentic AI Summit and having us! @getdarshan, one of our research scientists, who leads agent evaluation here at Patronus, presented at the summit! Here are a few takeaways: * Given context explosion and increasing domain depth and

0

1

2

Check out the very cool work from our friends @PatronusAI 🔥 here! https://t.co/gINwBGZAtn

huggingface.co

1

7

17

Non-deterministic trajectories need autonomous supervision. Introducing Percival, a SoTA system to detect issues with long context agentic problems and suggest fixes to systems. The time to make a move towards autonomous evaluations is now! 🔥

1/ 🔥🔥 Big news: We’re launching Percival, the first AI agent that can evaluate and fix other AI agents! 🤖 Percival is an evaluation agent that doesn’t just detect failures in agent traces — it can fix them. Percival outperformed SOTA LLMs by 2.9x on the TRAIL dataset,

1

3

10

Building good benchmarks is hard, and @PatronusAI has released what may be the coolest agent eval yet: ✅ Realistic and objectively useful task ✅ Multilingual, multimodal, and multi-domain ✅ Easy for humans, still challenging for agents

1/ Ever tried to remember the name of a movie you’ve seen – you can picture the scenes clearly, but the movie name won’t come to you? Introducing BLUR: the first agent benchmark for tip-of-the-tongue search and reasoning 🔥 We benchmarked SOTA agents and found that the

1

4

6

My colleague Chris McConnell and I greatly enjoyed seeing @skychwang @getdarshan @rebeccatqian @anandnk24 bring this project to life. We’re excited to finally see it out in the world, and look forward to collaborating on the next one!

1

2

4

We're excited to introduce the BLUR Leaderboard on @huggingface 🔥 Earlier today, we open sourced BLUR: the first agent benchmark for tip-of-the-tongue search and reasoning. It measures how effectively agents can help you identify something you vaguely remember, but can’t

huggingface.co

2

11

43

While experimenting with alignment methods, we observed that APO was more robust to noise in synthetic training data than DPO or KTO. Thanks for the excellent contribution to the community @KarelDoostrlnck and team 🚀

Happy to see @PatronusAI use our Anchored Preference Optimization (APO) objective in their study!

1

1

6

I am excited to announce the release of our Glider model - small size, multi metric evals, explainable highlight spans, multilingual generalization, amazing subjective metric performance - Check it out!! Paper:

1/ Introducing Glider - the smallest model to beat GPT-4o-mini on eval tasks ⚡🚀 - Open source, open weights, open code - Explainable evaluations by nature - Trained on 183 criteria and 685 domains Try it out for free at https://t.co/ZZai84VulJ 🔥

0

0

1

1/ Introducing Lynx v2.0: an 8B State-of-the-Art RAG hallucination detection model 🚀 - Beats Claude-3.5-Sonnet on HaluBench by 2.2% - 3.4% higher accuracy than Lynx v1.1 on HaluBench - Optimized for long context use cases - Detects 8 types of common hallucinations, including

3

10

22

Hey everyone, I am at #EMNLP2024 this week, co-presenting our work on Prototype-based Networks with @ZSourati. Please reach out if you are interested in AI evaluations, interpretability or model alignment!

0

0

1

Prototype-based networks can greatly enhance the robustness of #languagemodels in text classification, addressing real-world needs by combining robustness & interpretability for #trustworthyAI. Learn how in our Findings of #EMNLP24 accepted paper. https://t.co/fszqgkgXQy

#NECLabs

0

4

7

Llama Guard is Off Duty 😲 It’s weak at toxicity detection! We benchmarked popular toxicity datasets spanning languages like Portuguese, Ukrainian, and Turkish, and found that Llama Guard has a very high false negative rate for toxic content! We found that base models like

1

2

18